Most of linear algebra involves mathematical objects called matrices. A matrix is a finite rectangular array of numbers:

![$$\left[\matrix{ 1 & -3 & 0 & 5 \cr 3 & 0 & -17 & 9 \cr -1 & -2 & 4 & -3 \cr}\right] \quad \left[\matrix{0 \cr 5 \cr -5 \cr 3 \cr -3.14 \cr}\right] \quad \left[\matrix{\dfrac{17}{5} & -4 & 0 & \dfrac{1}{2} \cr}\right] \quad \left[\matrix{0 & 0 \cr 0 & 0 \cr}\right]$$](matrix-arithmetic1.png)

In this case, the entries are real numbers. In general, the entries will be elements of some commutative ring or field.

In this section, I'll explain operations with matrices by example. I'll discuss and prove some of the properties of these operations later on.

Dimensions of matrices. An $m \times n$ matrix is a matrix with m rows and n columns. Sometimes this is expressed by saying that the dimensions of the matrix are $m \times n$.

![$$\matrix{\left[\matrix{1 & 2 & 3 \cr 4 & 5 & 6 \cr}\right] & \left[\matrix{0 & 0 \cr 0 & 0 \cr 0 & 0 \cr 0 & 0 \cr}\right] & \left[\matrix{\pi \cr}\right] \cr \quad\hbox{a}\quad 2 \times 3 \quad\hbox{matrix}\quad & \quad\hbox{a}\quad 4 \times 2 \quad\hbox{matrix}\quad & \quad\hbox{a}\quad 1 \times 1 \quad\hbox{matrix}\quad \cr}$$](matrix-arithmetic6.png)

A $1 \times n$ matrix is called an n-dimensional row vector. For example, here's a 3-dimensional row vector:

![]()

Likewise, an $n \times 1$ matrix is called an n-dimensional column vector. Here's a 3-dimensional column vector:

![$$\left[\matrix{0 \cr 17 - e^2 \cr {\root 3 \of {617}} \cr}\right]$$](matrix-arithmetic10.png)

An $n \times n$ matrix is called a square matrix. For example, here is a square matrix:

![]()

Notice that by convention the number of rows is listed first and the number of columns second. This convention also applies when referring to specific elements of matrices. Consider the following matrix:

![$$\left[\matrix{ 11 & -0.4 & 33 & \sqrt{2} \cr a + b & x^2 & \sin y & j - k \cr -8 & 0 & 13 & 114.71 \cr}\right]$$](matrix-arithmetic14.png)

The $(2, 4)$ element is the element in row 2 and column 4, which is $j - k$. (Note that the first row is row 1, not row 0, and similarly for columns.) The $(3, 3)$ element is the element in row 3 and column 3, which is 13.
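To make the row-first convention concrete, here is a minimal Python sketch, assuming a matrix is stored as a list of rows (a list of lists). The helper names `dimensions` and `entry` are hypothetical. Python lists are indexed from 0, so `entry` shifts the 1-based (row, column) numbering used in these notes.

```python
def dimensions(A):
    """Return (m, n) for an m x n matrix stored as a list of rows."""
    m = len(A)
    n = len(A[0]) if m > 0 else 0
    # Every row must have the same length for A to be a rectangular array.
    if any(len(row) != n for row in A):
        raise ValueError("rows have different lengths")
    return (m, n)


def entry(A, i, j):
    """Return the (i, j) entry of A, using 1-based row and column numbers."""
    m, n = dimensions(A)
    if not (1 <= i <= m and 1 <= j <= n):
        raise IndexError(f"({i}, {j}) is outside a {m} x {n} matrix")
    # Shift to Python's 0-based list indices.
    return A[i - 1][j - 1]


# The matrix above, with its symbolic entries written as strings.
M = [
    [11, -0.4, 33, "sqrt(2)"],
    ["a + b", "x^2", "sin y", "j - k"],
    [-8, 0, 13, 114.71],
]
print(dimensions(M))   # (3, 4)
print(entry(M, 2, 4))  # j - k
print(entry(M, 3, 3))  # 13
```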

Equality of matrices. Two matrices are equal if they have the same dimensions and the corresponding entries are equal. For example, suppose

![]()

Then if I match corresponding elements, I see that ![](), ![](), and ![]().
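The definition of equality translates directly into a check. This is a sketch assuming the list-of-rows representation; `matrices_equal` is a hypothetical name. It first compares dimensions, then corresponding entries.

```python
def matrices_equal(A, B):
    """Return True when A and B have the same dimensions and equal corresponding entries."""
    # Same number of rows?
    if len(A) != len(B):
        return False
    for row_a, row_b in zip(A, B):
        # Same number of columns in this row?
        if len(row_a) != len(row_b):
            return False
        # Compare corresponding entries.
        if any(x != y for x, y in zip(row_a, row_b)):
            return False
    return True


print(matrices_equal([[1, 2], [3, 4]], [[1, 2], [3, 4]]))  # True
print(matrices_equal([[1, 2, 0]], [[1, 2]]))               # False: different dimensions
print(matrices_equal([[1, 2]], [[1, 3]]))                  # False: the (1, 2) entries differ
```

For lists of lists, Python's built-in `A == B` already performs this same comparison; the explicit loop just spells out the definition.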

Definition. If R is a commutative ring, then ![]() is the set of $m \times n$ matrices with entries in R.

Note: Some people use the notation ![]().

For example, ![]() is the set of ![]() matrices with real entries. ![]() is the set of ![]() matrices with entries in ![]().

Adding and subtracting matrices. For these examples, I'll assume that the matrices have entries in $\mathbb{R}$.

You can add (or subtract) matrices by adding (or subtracting) corresponding entries.

![]()

Matrix addition is associative. Symbolically, if A, B, and C are matrices with the same dimensions, then

$$(A + B) + C = A + (B + C).$$

This means that if you are adding several matrices, you can group them any way you wish:

![]()

![]()

Here's an example of subtraction:

![$$\left[\matrix{1 & -3 \cr 2 & 0 \cr 4 & \sqrt{2} \cr}\right] - \left[\matrix{6 & 3 \cr 2 & \pi \cr 0 & 0 \cr}\right] = \left[\matrix{-5 & -6 \cr 0 & -\pi \cr 4 & \sqrt{2} \cr}\right].$$](matrix-arithmetic35.png)

Note that matrix addition is commutative:

![]()

Symbolically, if A and B are matrices with the same dimensions, then

$$A + B = B + A.$$

Of course, matrix subtraction is not commutative.

You can only add or subtract matrices with the same dimensions. These two matrices can't be added or subtracted:

![]()
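Here is a minimal Python sketch of entrywise addition and subtraction, assuming matrices are rectangular lists of rows. The function names are hypothetical. Both functions refuse matrices with different dimensions, matching the rule just stated.

```python
import math


def dimensions(A):
    """Return (rows, columns), assuming A is a rectangular list of rows."""
    return (len(A), len(A[0]) if A else 0)


def add(A, B):
    """Entrywise sum; only defined when A and B have the same dimensions."""
    if dimensions(A) != dimensions(B):
        raise ValueError("matrices must have the same dimensions")
    return [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


def subtract(A, B):
    """Entrywise difference; only defined when A and B have the same dimensions."""
    if dimensions(A) != dimensions(B):
        raise ValueError("matrices must have the same dimensions")
    return [[a - b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


# The subtraction example from the text.
P = [[1, -3], [2, 0], [4, math.sqrt(2)]]
Q = [[6, 3], [2, math.pi], [0, 0]]
print(subtract(P, Q))  # [[-5, -6], [0, -3.141592653589793], [4, 1.4142135623730951]]

# Addition is commutative; a 2 x 3 and a 3 x 2 matrix cannot be added.
A = [[1, 2], [3, 4]]
B = [[5, -1], [0, 7]]
print(add(A, B) == add(B, A))  # True
try:
    add([[1, 2, 3], [4, 5, 6]], [[1, 2], [3, 4], [5, 6]])
except ValueError as err:
    print(err)  # matrices must have the same dimensions
```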

Adding or subtracting matrices over $\mathbb{Z}_n$. You add or subtract matrices over $\mathbb{Z}_n$ by adding or subtracting corresponding entries, but all the arithmetic is done in $\mathbb{Z}_n$.

For example, here is an addition and a subtraction over ![]():

![]()

![]()

Note that in the second example, there were some negative numbers in the middle of the computation, but the final answer was expressed entirely in terms of elements of ![]().
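The same entrywise rule with arithmetic in $\mathbb{Z}_n$ can be sketched in Python, assuming integer entries and the list-of-rows representation; the helper names and the sample matrices over $\mathbb{Z}_5$ are made up for illustration, not taken from the lost example above. Python's `%` operator returns a value in $\{0, 1, \ldots, n - 1\}$ for positive n even when the intermediate difference is negative, which mirrors the final simplification step.

```python
def _check_same_dimensions(A, B):
    """Raise ValueError unless A and B have the same dimensions."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same dimensions")


def add_mod(A, B, n):
    """Add corresponding entries, reducing each result into {0, 1, ..., n - 1}."""
    _check_same_dimensions(A, B)
    return [[(a + b) % n for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def subtract_mod(A, B, n):
    """Subtract corresponding entries; % maps negative differences into {0, ..., n - 1}."""
    _check_same_dimensions(A, B)
    return [[(a - b) % n for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


# A made-up example over Z_5.
A = [[1, 4], [2, 3]]
B = [[4, 4], [3, 0]]
print(add_mod(A, B, 5))       # [[0, 3], [0, 3]]
print(subtract_mod(A, B, 5))  # [[2, 0], [4, 3]]
```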

Definition. A zero matrix $\mathbf{0}$ is a matrix all of whose entries are 0.

![$$\left[\matrix{0 & 0 \cr 0 & 0 \cr}\right] \quad \left[\matrix{0 & 0 & 0 \cr 0 & 0 & 0 \cr 0 & 0 & 0 \cr 0 & 0 & 0 \cr}\right]$$](matrix-arithmetic47.png)

There is an $m \times n$ zero matrix for every pair of positive dimensions m and n.

If you add the $m \times n$ zero matrix to another $m \times n$ matrix A, you get A:

![]()

In symbols, if $\mathbf{0}$ is a zero matrix and A is a matrix of the same size, then

$$A + \mathbf{0} = A \quad\hbox{and}\quad \mathbf{0} + A = A.$$

A zero matrix is said to be an identity element for matrix addition.

Note: At some point, I may just write "0" instead of "$\mathbf{0}$" (with the boldface) for a zero matrix, and rely on context to tell it apart from the number 0.

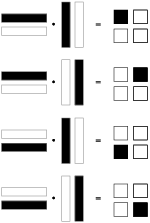

Multiplying matrices by numbers. You can multiply a matrix by a number by multiplying each entry by the number. Here is an example with real numbers:

![$$7 \cdot \left[\matrix{3 & -3 \cr 4 & -1 \cr 0 & 2 \cr}\right] = \left[\matrix{7 \cdot 3 & 7 \cdot (-3) \cr 7 \cdot 4 & 7 \cdot (-1) \cr 7 \cdot 0 & 7 \cdot 2 \cr}\right] = \left[\matrix{21 & -21 \cr 28 & -7 \cr 0 & 14 \cr}\right].$$](matrix-arithmetic55.png)
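Multiplying by a number is a one-line comprehension in Python, assuming the list-of-rows representation; `scalar_multiply` is a hypothetical name. The call reproduces the example above.

```python
def scalar_multiply(k, A):
    """Multiply every entry of the matrix A by the number k."""
    return [[k * a for a in row] for row in A]


print(scalar_multiply(7, [[3, -3], [4, -1], [0, 2]]))  # [[21, -21], [28, -7], [0, 14]]
```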

Things work in the same way over $\mathbb{Z}_n$, but all the arithmetic is done in $\mathbb{Z}_n$. Here is an example over ![]():

![]()

Notice that, as usual with $\mathbb{Z}_n$, I simplified my final answer so that all the entries of the matrix were in the set $\{0, 1, \ldots, n - 1\}$.
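A sketch of the same operation over $\mathbb{Z}_n$, assuming integer entries; the function name and the example over $\mathbb{Z}_7$ are made up for illustration. Each product is reduced with `%`, so every entry of the answer lands in $\{0, 1, \ldots, n - 1\}$.

```python
def scalar_multiply_mod(k, A, n):
    """Multiply each entry of A by k, doing the arithmetic in Z_n."""
    return [[(k * a) % n for a in row] for row in A]


# Made-up example over Z_7: 3 * 5 = 15 = 1 and 3 * 6 = 18 = 4 in Z_7.
print(scalar_multiply_mod(3, [[2, 5], [6, 1]], 7))  # [[6, 1], [4, 3]]
```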

Unlike the operations I've discussed so far, matrix multiplication (multiplying two matrices) does not work the way you might expect: You don't just multiply corresponding elements of the matrices, the way you add corresponding elements to add matrices.

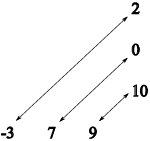

To explain matrix multiplication, I'll remind you first of how you take the dot product of two vectors; you probably saw this in a multivariable calculus course. (If you're seeing this for the first time, don't worry --- it's easy!) Here's an example of a dot product of two 3-dimensional vectors of real numbers:

![$$\left[\matrix{-3 & 7 & 9 \cr}\right] \left[\matrix{2 \cr 0 \cr 10 \cr}\right] = (-3) \cdot 2 + 7 \cdot 0 + 9 \cdot 10 = -6 + 0+ 90 = 84.$$](matrix-arithmetic62.png)

Note that the vectors must be the same size, and that the product is a number. This is actually an example of matrix multiplication, and we'll see that the result should technically be a $1 \times 1$ matrix. But for dot products, we will write

$$\left[\matrix{-3 & 7 & 9 \cr}\right] \left[\matrix{2 \cr 0 \cr 10 \cr}\right] = 84.$$

If you've seen dot products in multivariable calculus, you might have seen it written this way:

$$(-3, 7, 9) \cdot (2, 0, 10) = 84.$$

But for what I'll do next, I want to distinguish between the row (first) vector and the column (second) vector.
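The dot product is a sum of products of corresponding entries. Here is a minimal Python sketch, assuming vectors are plain lists of numbers; `dot` is a hypothetical helper name. It rejects vectors of different sizes and reproduces the computation above.

```python
def dot(u, v):
    """Dot product of two vectors of the same size."""
    if len(u) != len(v):
        raise ValueError("vectors must be the same size")
    # Multiply corresponding entries and add up the results.
    return sum(a * b for a, b in zip(u, v))


print(dot([-3, 7, 9], [2, 0, 10]))  # 84
```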

Multiplying matrices. To compute the product $AB$ of two matrices, take the dot products of the rows of A with the columns of B. In this example, assume all the matrices have real entries.

![$$\left[\matrix{{\bf 2} & {\bf 1} & {\bf 4} \cr -1 & 0 & 3 \cr}\right] \left[\matrix{{\bf 1} & 6 \cr {\bf 5} & 1 \cr {\bf 2} & -2 \cr}\right] = \left[\matrix{(2 + 5 + 8 = 15) & \cdot \cr \cdot & \cdot \cr}\right]$$](matrix-arithmetic68.png)

![$$\left[\matrix{{\bf 2} & {\bf 1} & {\bf 4} \cr -1 & 0 & 3 \cr}\right] \left[\matrix{1 & {\bf 6} \cr 5 & {\bf 1} \cr 2 & {\bf -2} \cr}\right] = \left[\matrix{15 & (12 + 1 - 8 = 5) \cr \cdot & \cdot \cr}\right]$$](matrix-arithmetic69.png)

![$$\left[\matrix{2 & 1 & 4 \cr {\bf -1} & {\bf 0} & {\bf 3} \cr}\right] \left[\matrix{{\bf 1} & 6 \cr {\bf 5} & 1 \cr {\bf 2} & -2 \cr}\right] = \left[\matrix{15 & 5 \cr (-1 + 0 + 6 = 5) & \cdot \cr}\right]$$](matrix-arithmetic70.png)

![$$\left[\matrix{2 & 1 & 4 \cr {\bf -1} & {\bf 0} & {\bf 3} \cr}\right] \left[\matrix{1 & {\bf 6} \cr 5 & {\bf 1} \cr 2 & {\bf -2} \cr}\right] = \left[\matrix{15 & 5 \cr 5 & (-6 + 0 - 6 = -12) \cr}\right]$$](matrix-arithmetic71.png)

![$$\left[\matrix{2 & 1 & 4 \cr -1 & 0 & 3 \cr}\right] \left[\matrix{1 & 6 \cr 5 & 1 \cr 2 & -2 \cr}\right] = \left[\matrix{15 & 5 \cr 5 & -12 \cr}\right].$$](matrix-arithmetic72.png)

This schematic picture shows how the rows of the first matrix are "dot-producted" with the columns of the second matrix to produce the 4 elements of the product:

You can see that you take the dot products of the rows of the first matrix with the columns of the second matrix.

In order for the multiplication to work, the matrices must have compatible dimensions: The number of columns in A should equal the number of rows of B. Thus, if A is an $m \times n$ matrix and B is an $n \times p$ matrix, $AB$ will be an $m \times p$ matrix. For example, this won't work:

![$$\left[\matrix{ -2 & 1 & 0 & 8 \cr 1 & 11 & 13 & 9 \cr}\right] \left[\matrix{-5 & -5 \cr 2 & 13 \cr 1 & 7 \cr}\right] \quad\hbox{(Won't work!)}\quad$$](matrix-arithmetic78.png)

Do you see why? The rows of the first matrix have 4 entries, but the columns of the second matrix have 3 entries. You can't take the dot products, because the entries won't match up.
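The rows-times-columns recipe and the compatibility check can be sketched in Python, assuming matrices are nonempty rectangular lists of rows; `mat_mul` is a hypothetical name. `zip(*B)` produces the columns of B, and entry (i, j) of the result is the dot product of row i of A with column j of B. The first call reproduces the worked $2 \times 3$ by $3 \times 2$ product above, and the last call is the incompatible pair.

```python
def mat_mul(A, B):
    """Multiply an m x n matrix A by an n x p matrix B (lists of rows)."""
    m, n = len(A), len(A[0])
    rows_b, p = len(B), len(B[0])
    if n != rows_b:
        raise ValueError(f"cannot multiply a {m} x {n} matrix by a {rows_b} x {p} matrix")
    columns = list(zip(*B))  # the columns of B
    # Entry (i, j) of AB is (row i of A) . (column j of B).
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in A]


print(mat_mul([[2, 1, 4], [-1, 0, 3]], [[1, 6], [5, 1], [2, -2]]))  # [[15, 5], [5, -12]]
try:
    mat_mul([[-2, 1, 0, 8], [1, 11, 13, 9]], [[-5, -5], [2, 13], [1, 7]])
except ValueError as err:
    print(err)  # cannot multiply a 2 x 4 matrix by a 3 x 2 matrix
```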

Here are two more examples, again using matrices with real entries:

![$$\left[\matrix{-2 & 3 & 0 \cr}\right] \left[\matrix{2 & 0 \cr 9 & -4 \cr 1 & 5 \cr}\right] = \left[\matrix{23 & -12 \cr}\right].$$](matrix-arithmetic79.png)

![]()

Here is an example with matrices in ![](). All the arithmetic is done in ![]().

![]()

Notice that I simplify the final result so that all the entries are in ![]().
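Here is the same rows-times-columns computation with arithmetic in $\mathbb{Z}_n$, as a sketch assuming integer entries; the function name and the example over $\mathbb{Z}_5$ are made up for illustration. Reducing once at the end gives the same answer as reducing after every step, because reduction mod n respects both addition and multiplication.

```python
def mat_mul_mod(A, B, n):
    """Multiply A by B with all arithmetic done in Z_n."""
    if len(A[0]) != len(B):
        raise ValueError("number of columns of A must equal number of rows of B")
    columns = list(zip(*B))
    # Take each dot product over the integers, then reduce it into {0, ..., n - 1}.
    return [[sum(a * b for a, b in zip(row, col)) % n for col in columns] for row in A]


# Made-up example over Z_5: for instance, the (2, 1) entry is 3*2 + 4*1 = 10 = 0.
print(mat_mul_mod([[1, 2], [3, 4]], [[2, 0], [1, 3]], 5))  # [[4, 1], [0, 2]]
```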

If you multiply a matrix by a zero matrix, you get a zero matrix:

![]()

In symbols, if $\mathbf{0}$ is a zero matrix and A is a matrix compatible with it for multiplication, then

$$\mathbf{0} \cdot A = \mathbf{0} \quad\hbox{and}\quad A \cdot \mathbf{0} = \mathbf{0}.$$

(The zero matrices on the two sides of each equation may have different dimensions.)

Matrix multiplication takes a little practice, but it isn't hard. The big principle to take away is that you take the dot products of the rows of the first matrix with the columns of the second matrix. This picture of matrix multiplication will be very important for our work with matrices.

I should say something about a point I mentioned earlier: Why not multiply matrices like this?

![]()

You could define a matrix multiplication this way, but unfortunately, it would not be useful for applications (such as solving systems of linear equations). Also, when we study the relationship between matrices and linear transformations, we'll see that the matrix multiplication we defined using dot products corresponds to the composite of transformations. So even though the "corresponding elements" definition seems simpler, it doesn't match up well with the way matrices are used.
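As a quick numerical hint of the composite claim (an illustration, not a proof), the following sketch multiplies a column vector by B and then by A, and compares that with multiplying by the single matrix $AB$. The matrices are made up, and `entrywise_product` is a hypothetical name for the "corresponding elements" product (often called the Hadamard product).

```python
def mat_mul(A, B):
    """Rows-times-columns product (dimensions assumed compatible)."""
    columns = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in A]


def entrywise_product(A, B):
    """The 'corresponding elements' product (often called the Hadamard product)."""
    return [[a * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
v = [[1], [1]]  # a column vector

print(mat_mul(A, B))            # [[19, 22], [43, 50]]
print(entrywise_product(A, B))  # [[5, 12], [21, 32]]

# Applying B and then A to v agrees with applying the single matrix AB ...
print(mat_mul(A, mat_mul(B, v)))  # [[41], [93]]
print(mat_mul(mat_mul(A, B), v))  # [[41], [93]]
# ... but not with the entrywise product.
print(mat_mul(entrywise_product(A, B), v))  # [[17], [53]]
```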

Here's a preview of how matrices are related to systems of linear equations.

Example. Write the system of equations which corresponds to the matrix equation

![$$\left[\matrix{2 & 3 & 0 \cr -1 & 5 & 17 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{13 \cr 0 \cr}\right].$$](matrix-arithmetic89.png)

Multiply out the left side:

$$\left[\matrix{2 a + 3 b \cr -a + 5 b + 17 c \cr}\right] = \left[\matrix{13 \cr 0 \cr}\right].$$

Two matrices are equal if their corresponding entries are equal, so equate corresponding entries:

$$\begin{aligned} 2 a + 3 b & = 13 \\ -a + 5 b + 17 c & = 0 \end{aligned}$$
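Reading equations off a coefficient matrix is mechanical, so it can be sketched in Python. This assumes integer coefficients and a list of variable names; `format_equation` and `system_from_matrix` are hypothetical helpers. Each row of the matrix becomes one equation, and zero coefficients drop out.

```python
def format_equation(coeffs, names, rhs):
    """Turn one row of integer coefficients into a readable linear equation."""
    terms = []
    for c, name in zip(coeffs, names):
        if c == 0:
            continue  # zero coefficients drop out of the equation
        magnitude = abs(c)
        term = name if magnitude == 1 else f"{magnitude}{name}"
        if not terms:
            terms.append(term if c > 0 else f"-{term}")
        else:
            terms.append(f"+ {term}" if c > 0 else f"- {term}")
    left = " ".join(terms) if terms else "0"
    return f"{left} = {rhs}"


def system_from_matrix(A, names, b):
    """Read off the equations encoded by the matrix equation A x = b."""
    return [format_equation(row, names, rhs) for row, rhs in zip(A, b)]


for eq in system_from_matrix([[2, 3, 0], [-1, 5, 17]], ["a", "b", "c"], [13, 0]):
    print(eq)
# 2a + 3b = 13
# -a + 5b + 17c = 0
```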

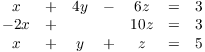

Example. Write the following system of linear equations as a matrix multiplication equation:

$$\begin{aligned} x + 4 y - 6 z & = 3 \\ -2 x + 10 z & = 3 \\ x + y + z & = 5 \end{aligned}$$

Take the 9 coefficients of the variables x, y, and z on the left side and make them into a $3 \times 3$ matrix. Put the variables into a 3-dimensional column vector. Make the 3 numbers on the right side into a 3-dimensional column vector. Here's what we get:

![$$\left[\matrix{ 1 & 4 & -6 \cr -2 & 0 & 10 \cr 1 & 1 & 1 \cr}\right] \left[\matrix{x \cr y \cr z \cr}\right] = \left[\matrix{3 \cr 3 \cr 5 \cr}\right].$$](matrix-arithmetic94.png)

Work out the multiplication on the left side of this equation and you'll see how this represents the original system.

This is really important! It allows us to represent a system of equations as a matrix equation. Eventually, we'll figure out how to solve the original system by working with the matrices.

Identity matrices. There are special matrices which serve as identities for multiplication:

The $n \times n$ identity matrix is the square matrix with 1's down the main diagonal (the diagonal running from northwest to southeast) and 0's everywhere else. For example, the $3 \times 3$ identity matrix is

![$$I = \left[\matrix{1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right].$$](matrix-arithmetic97.png)

If I is the $n \times n$ identity and A is a matrix which is compatible for multiplication with I, then

$$A I = A \quad\hbox{and}\quad I A = A,$$

for whichever of these products is defined.

For example,

![$$\left[\matrix{ 3 & -7 & 10 \cr \noalign{\vskip2pt} \pi & \dfrac{1}{2} & -1.723 \cr \noalign{\vskip2pt} 0 & 19 & 712 \cr}\right] \left[\matrix{1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right] = \left[\matrix{ 3 & -7 & 10 \cr \noalign{\vskip2pt} \pi & \dfrac{1}{2} & -1.723 \cr \noalign{\vskip2pt} 0 & 19 & 712 \cr}\right].$$](matrix-arithmetic100.png)
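A Python sketch of the identity matrix and a check of the example above, assuming the list-of-rows representation; `identity` and `mat_mul` are hypothetical helper names. For a non-square matrix, the identity on the left and the identity on the right have different sizes.

```python
import math


def identity(n):
    """The n x n identity matrix: 1's on the main diagonal, 0's elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def mat_mul(A, B):
    """Rows-times-columns product (dimensions assumed compatible)."""
    columns = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in A]


A = [[3, -7, 10], [math.pi, 0.5, -1.723], [0, 19, 712]]
print(mat_mul(A, identity(3)) == A)  # True
print(mat_mul(identity(3), A) == A)  # True

# For a 2 x 3 matrix, I_3 works on the right and I_2 works on the left.
B = [[1, 2, 3], [4, 5, 6]]
print(mat_mul(B, identity(3)) == B, mat_mul(identity(2), B) == B)  # True True
```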

Matrix multiplication obeys some of the algebraic laws you're familiar with. For example, matrix multiplication is associative: If A, B, and C are matrices and their dimensions are compatible for multiplication, then

$$(A B) C = A (B C).$$

However, matrix multiplication is not commutative in general. That is, it is not true that $AB = BA$ for all matrices A and B.

One trivial way to get a counterexample is to let A be ![]() and let B be ![](). Then $AB$ is ![]() while $BA$ is ![](). Since $AB$ and $BA$ have different dimensions, they can't be equal.

However, it's easy to come up with counterexamples even when $AB$ and $BA$ have the same dimensions. For example, consider the following matrices in ![]():

![]()

Then you can check by doing the multiplications that

![]()
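Both kinds of counterexample can be checked in Python. The matrices below are made up for illustration (they are not the ones in the example above), and `mat_mul` is a hypothetical helper using the rows-times-columns rule.

```python
def mat_mul(A, B):
    """Rows-times-columns product (dimensions assumed compatible)."""
    columns = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in A]


# Same dimensions, different products.
A = [[1, 1], [0, 1]]
B = [[1, 0], [1, 1]]
print(mat_mul(A, B))  # [[2, 1], [1, 1]]
print(mat_mul(B, A))  # [[1, 1], [1, 2]]

# Different sizes: a 2 x 3 times a 3 x 2 is 2 x 2, but the reverse product is 3 x 3.
C = [[1, 0, 2], [0, 1, 1]]
D = [[1, 1], [0, 1], [1, 0]]
print(len(mat_mul(C, D)), len(mat_mul(D, C)))  # 2 3
```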

Many of the properties of matrix arithmetic are things you'd expect --- for example, that matrix addition is commutative, or that matrix multiplication is associative. You should pay particular attention when things don't work the way you'd expect, and this is such a case. It is very significant that matrix multiplication is not always commutative.

Transposes. If A is a matrix, the transpose $A^T$ of A is obtained by swapping the rows and columns of A. For example,

![$$\left[\matrix{1 & 2 & 3 \cr 4 & 5 & 6 \cr}\right]^T = \left[\matrix{1 & 4 \cr 2 & 5 \cr 3 & 6 \cr}\right].$$](matrix-arithmetic117.png)

Notice that the transpose of an $m \times n$ matrix is an $n \times m$ matrix.
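In Python, with matrices stored as lists of rows, `zip(*A)` yields the columns of A, so a transpose is a one-liner. This is a sketch; `transpose` is a hypothetical name. It reproduces the example above and shows that transposing twice gives back the original matrix.

```python
def transpose(A):
    """Swap rows and columns: row i of the result is column i of A."""
    return [list(col) for col in zip(*A)]


print(transpose([[1, 2, 3], [4, 5, 6]]))  # [[1, 4], [2, 5], [3, 6]]
print(transpose(transpose([[1, 2, 3], [4, 5, 6]])))  # [[1, 2, 3], [4, 5, 6]]
```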

Example. Consider the following matrices with real entries:

![$$A = \left[\matrix{1 & 0 & 1 & 2 \cr 2 & 1 & -1 & 0 \cr}\right], \quad B = \left[\matrix{c & c \cr 1 & 1 \cr}\right], \quad C = \left[\matrix{0 & 1 \cr 2 & -1 \cr 0 & 0 \cr 1 & 1 \cr}\right].$$](matrix-arithmetic120.png)

(a) Compute $C B + A^T$.

(b) Compute $A C - 2 B$.

(a)

![$$C B + A^T = \left[\matrix{0 & 1 \cr 2 & -1 \cr 0 & 0 \cr 1 & 1 \cr}\right] \left[\matrix{c & c \cr 1 & 1 \cr}\right] + \left[\matrix{1 & 0 & 1 & 2 \cr 2 & 1 & -1 & 0 \cr}\right]^T = \left[\matrix{1 & 1 \cr 2 c - 1 & 2 c - 1 \cr 0 & 0 \cr c + 1 & c + 1 \cr}\right] + \left[\matrix{1 & 2 \cr 0 & 1 \cr 1 & -1 \cr 2 & 0 \cr}\right] = \left[\matrix{2 & 3 \cr 2 c - 1 & 2 c \cr 1 & -1 \cr c + 3 & c + 1 \cr}\right].\quad\halmos$$](matrix-arithmetic123.png)

(b)

![$$A C - 2 B = \left[\matrix{1 & 0 & 1 & 2 \cr 2 & 1 & -1 & 0 \cr}\right] \left[\matrix{0 & 1 \cr 2 & -1 \cr 0 & 0 \cr 1 & 1 \cr}\right] - 2 \left[\matrix{c & c \cr 1 & 1 \cr}\right] = \left[\matrix{2 & 3 \cr 2 & 1 \cr}\right] - \left[\matrix{2 c & 2 c \cr 2 & 2 \cr}\right] = \left[\matrix{2 - 2 c & 3 - 2 c \cr 0 & -1 \cr}\right].\quad\halmos$$](matrix-arithmetic124.png)
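Answers that contain a parameter can be spot-checked numerically by substituting values for c. This sketch recomputes $CB + A^T$ and $AC - 2B$ for several integer values of c and compares them with the formulas above. It can catch an arithmetic slip, but it does not prove the symbolic answer. The helper names, including `check_example`, are hypothetical.

```python
def mat_mul(A, B):
    """Rows-times-columns product (dimensions assumed compatible)."""
    columns = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in columns] for row in A]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def scale(k, A):
    return [[k * a for a in row] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def check_example(c):
    """Compare both computed answers with the formulas from the worked solution."""
    A = [[1, 0, 1, 2], [2, 1, -1, 0]]
    B = [[c, c], [1, 1]]
    C = [[0, 1], [2, -1], [0, 0], [1, 1]]
    part_a = add(mat_mul(C, B), transpose(A))
    part_b = add(mat_mul(A, C), scale(-2, B))  # AC - 2B
    expected_a = [[2, 3], [2 * c - 1, 2 * c], [1, -1], [c + 3, c + 1]]
    expected_b = [[2 - 2 * c, 3 - 2 * c], [0, -1]]
    return part_a == expected_a and part_b == expected_b


print(all(check_example(c) for c in range(-5, 6)))  # True
```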

The inverse of a matrix. The inverse of an $n \times n$ matrix A is a matrix $A^{-1}$ which satisfies

$$A A^{-1} = I \quad\hbox{and}\quad A^{-1} A = I,$$

where I is the $n \times n$ identity matrix.

There is no such thing as matrix division in general, because some matrices do not have inverses. But if A has an inverse, you can simulate division by multiplying by $A^{-1}$. This is often useful in solving matrix equations.

An $n \times n$ matrix which does not have an inverse is called singular.

We'll discuss matrix inverses and how you find them in detail later. For now, here's a formula that we'll use frequently.

Proposition. Consider the following matrix with entries in a commutative ring with identity R.

$$A = \left[\matrix{a & b \cr c & d \cr}\right].$$

Suppose that $ad - bc$ is invertible in R. Then the inverse of A is

$$A^{-1} = (ad - bc)^{-1} \left[\matrix{d & -b \cr -c & a \cr}\right].$$

Note: The number $ad - bc$ is called the determinant of the matrix. We'll discuss determinants later.

Remember that not every element of a commutative ring with identity has an inverse. For example, ![]() is undefined in ![](). If we're dealing with real numbers, then $ad - bc$ has a multiplicative inverse if and only if it's nonzero.

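Proof. To show that the formula gives the inverse of A, I have to check that $A^{-1} A = I$ and $A A^{-1} = I$. Since R is commutative, products like $da$ and $ad$ are equal, so the off-diagonal entries cancel:

$$A^{-1} A = (ad - bc)^{-1} \left[\matrix{d & -b \cr -c & a \cr}\right] \left[\matrix{a & b \cr c & d \cr}\right] = (ad - bc)^{-1} \left[\matrix{da - bc & db - bd \cr -ca + ac & -cb + ad \cr}\right] = (ad - bc)^{-1} \left[\matrix{ad - bc & 0 \cr 0 & ad - bc \cr}\right] = I.$$

$$A A^{-1} = (ad - bc)^{-1} \left[\matrix{a & b \cr c & d \cr}\right] \left[\matrix{d & -b \cr -c & a \cr}\right] = (ad - bc)^{-1} \left[\matrix{ad - bc & -ab + ba \cr cd - dc & -cb + da \cr}\right] = (ad - bc)^{-1} \left[\matrix{ad - bc & 0 \cr 0 & ad - bc \cr}\right] = I.$$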

This proves that the formula gives the inverse of a $2 \times 2$ matrix.
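Here is a minimal Python sketch of the $2 \times 2$ formula, assuming integer or rational entries so that Python's `fractions.Fraction` can keep the arithmetic exact; `inverse_2x2` is a hypothetical name, and the sample matrix is made up. It raises an error when $ad - bc = 0$, which is exactly when this formula gives no inverse over the reals. The product checks mirror the two equations in the proof.

```python
from fractions import Fraction


def inverse_2x2(A):
    """Inverse of [[a, b], [c, d]] via (ad - bc)^(-1) [[d, -b], [-c, a]].

    Uses exact rational arithmetic; raises ValueError when ad - bc = 0.
    """
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("ad - bc = 0, so this matrix has no inverse")
    k = Fraction(1) / det  # (ad - bc)^(-1)
    return [[k * d, -k * b], [-k * c, k * a]]


def mat_mul(A, B):
    columns = list(zip(*B))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in A]


A = [[1, 2], [3, 4]]  # ad - bc = 4 - 6 = -2
A_inv = inverse_2x2(A)
print(A_inv == [[-2, 1], [Fraction(3, 2), Fraction(-1, 2)]])  # True
print(mat_mul(A, A_inv) == [[1, 0], [0, 1]])                  # True
print(mat_mul(A_inv, A) == [[1, 0], [0, 1]])                  # True
```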

Here's an example of this formula for a matrix with real entries.

Notice that ![](). So

![]()

(If I have a fraction outside a matrix, I may choose not to multiply it into the matrix to make the result look nicer. Generally, if there is an integer outside a matrix, I will multiply it in.)

Recall that a matrix which does not have an inverse is called singular. Suppose we have a real matrix

$$A = \left[\matrix{a & b \cr c & d \cr}\right].$$

The formula above will produce an inverse if $ad - bc$ is invertible, which for real numbers means $ad - bc \ne 0$. So the matrix is singular if $ad - bc = 0$.

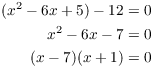

Example. For what values of x is the following real matrix singular?

![]()

The matrix is singular --- not invertible --- if

![]()

Solve for x:

This gives ![]() or ![](). The matrix is singular for ![]() and for ![]().

Be careful! The following matrix is singular over ![]():

![]()

However, its determinant is 2, which is nonzero. The point is that 2 is not invertible in ![](), even though it's nonzero.
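Whether a nonzero determinant is invertible in $\mathbb{Z}_n$ can be tested with a gcd. This sketch relies on two standard facts not proved in these notes: an integer k has a multiplicative inverse mod n exactly when $\gcd(k, n) = 1$, and a square matrix over a commutative ring is invertible exactly when its determinant is invertible. The function names and the sample matrix are hypothetical.

```python
from math import gcd


def det_2x2_mod(A, n):
    """ad - bc, reduced into {0, 1, ..., n - 1}."""
    (a, b), (c, d) = A
    return (a * d - b * c) % n


def is_invertible_mod(A, n):
    """True when the determinant of the 2 x 2 matrix A is a unit in Z_n."""
    # k has an inverse mod n exactly when gcd(k, n) == 1.
    return gcd(det_2x2_mod(A, n), n) == 1


# Made-up example: the same integer matrix, viewed over Z_4 and over Z_5.
A = [[2, 1], [0, 1]]
print(det_2x2_mod(A, 4), is_invertible_mod(A, 4))  # 2 False
print(det_2x2_mod(A, 5), is_invertible_mod(A, 5))  # 2 True
```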

Example. (a) Find ![]() in ![]().

(b) Find the inverse of the following matrix over ![]().

![]()

(You should multiply any numbers outside the matrix into the matrix, and simplify all the numbers in the final answer in ![]().)

(a) Since ![]() in ![](), I have ![]().

(b) First, the determinant is ![](). I saw in (a) that ![](). So

![]()

![]()

I reduced all the numbers in ![]() at the last step; for example, ![]() in ![](). As a check,

![]()

That is, when I multiply the inverse and the original matrix, I get the identity matrix. Check for yourself that it also works if I multiply them in the opposite order.
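The same formula works over $\mathbb{Z}_n$ once $(ad - bc)^{-1}$ is computed mod n. This sketch assumes Python 3.8 or later, where the built-in `pow(x, -1, n)` returns the inverse of x mod n and raises `ValueError` when no inverse exists. The function names and the matrix over $\mathbb{Z}_7$ are made up for illustration; the last two lines are the same kind of check as the one above, in both orders.

```python
def inverse_2x2_mod(A, n):
    """Apply (ad - bc)^(-1) [[d, -b], [-c, a]] with all arithmetic in Z_n."""
    (a, b), (c, d) = A
    det = (a * d - b * c) % n
    # pow(x, -1, n) gives x^(-1) mod n, or raises ValueError if x is not invertible mod n.
    k = pow(det, -1, n)
    return [[(k * d) % n, (-k * b) % n], [(-k * c) % n, (k * a) % n]]


def mat_mul_mod(A, B, n):
    columns = list(zip(*B))
    return [[sum(x * y for x, y in zip(row, col)) % n for col in columns] for row in A]


# Made-up example over Z_7: ad - bc = 8 - 5 = 3, and 3^(-1) = 5 in Z_7.
A = [[2, 5], [1, 4]]
A_inv = inverse_2x2_mod(A, 7)
print(A_inv)                     # [[6, 3], [2, 3]]
print(mat_mul_mod(A, A_inv, 7))  # [[1, 0], [0, 1]]
print(mat_mul_mod(A_inv, A, 7))  # [[1, 0], [0, 1]]
```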

We'll discuss solving systems of linear equations later, but here's an example of this which puts a lot of the ideas we've discussed together.

Example. (Solving a system of equations) Solve the following system over ![]() for x and y using the inverse of a matrix.

![]()

Write the equation in matrix form:

![]()

Using the formula for the inverse of a $2 \times 2$ matrix, I find that

![]()

Multiply both sides by the inverse matrix:

![]()

On the left, the square matrix and its inverse cancel, since they multiply to I. (Do you see how that is like "dividing by the matrix"?) On the right,

![]()

Therefore,

![]()

The solution is ![](), ![]().
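The "multiply both sides by the inverse" method can be sketched in Python. The system below is hypothetical (it is not the system in the example above): $2x + y = 5$, $x + 3y = 5$, with rational arithmetic via `fractions.Fraction`. The helper names are also hypothetical.

```python
from fractions import Fraction


def inverse_2x2(A):
    """(ad - bc)^(-1) [[d, -b], [-c, a]] with exact rational arithmetic."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("ad - bc = 0, so this matrix has no inverse")
    k = Fraction(1) / det
    return [[k * d, -k * b], [-k * c, k * a]]


def mat_vec(M, v):
    """Multiply a matrix by a column vector given as a flat list."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


# Hypothetical system:
#   2x +  y = 5
#    x + 3y = 5
A = [[2, 1], [1, 3]]
rhs = [5, 5]
x, y = mat_vec(inverse_2x2(A), rhs)  # [x, y] = A^(-1) [5, 5]
print(x, y)  # 2 1
```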

Copyright 2020 by Bruce Ikenaga