Definition. If m and n are integers, not both 0, the greatest common divisor $(m, n)$ of m and n is the largest integer which divides both m and n. $(0, 0)$ is undefined. I'll often get lazy and abbreviate "greatest common divisor" to "gcd".

Example. (Greatest common divisors for small integers) Find by direct computation $(4, 6)$, $(-6, 15)$, $(42, 0)$, and $(24, 25)$.

The largest integer which divides 4 and 6 is 2:

$$(4, 6) = 2.$$

The largest integer which divides -6 and 15 is 3:

$$(-6, 15) = 3.$$

The largest integer which divides 42 and 0 is 42:

$$(42, 0) = 42.$$

Finally, the largest integer which divides 24 and 25 is 1:

$$(24, 25) = 1.$$
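The direct computations above can be mirrored by a brute-force search. The following is a minimal Python sketch that applies the definition literally; the function name `gcd_by_definition` is an illustrative choice and does not come from these notes.

```python
def gcd_by_definition(m: int, n: int) -> int:
    """Return the largest integer dividing both m and n, by exhaustive search."""
    if m == 0 and n == 0:
        raise ValueError("(0, 0) is undefined")
    # A common divisor divides whichever of m, n is nonzero, so it cannot
    # exceed the larger absolute value. Search downward from that bound.
    bound = max(abs(m), abs(n))
    for d in range(bound, 0, -1):
        if m % d == 0 and n % d == 0:
            return d
    # Not reached: d = 1 divides every integer.
    raise AssertionError("unreachable")


for pair in [(4, 6), (-6, 15), (42, 0), (24, 25)]:
    print(pair, gcd_by_definition(*pair))
```

This is only practical for small inputs, since the search takes time proportional to the size of the numbers.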

Here are some easy properties of the greatest common divisor.

Proposition. Let $a, b \in \mathbb{Z}$, and suppose a and b aren't both 0.

(a) $(a, b) \mid a$ and $(a, b) \mid b$.

(b) $(a, b)$ exists, and $(a, b) \ge 1$.

(c) $(a, b) = (b, a)$.

(d) If $a \ne 0$, then $(a, 0) = |a|$.

(e) $(a, b) = (|a|, |b|)$.

(f) $(a, 1) = 1$.

Proof. (a) That $(a, b) \mid a$ and $(a, b) \mid b$ follows directly from the definition of $(a, b)$. (I'm singling this out even though it's easy, because it's a property that is often used.)

(b) The set of common divisors is finite: at least one of a and b is nonzero, and a divisor of a nonzero integer can't be larger than the absolute value of that integer. So if the set is nonempty, it must have a largest element.

Now $1 \mid a$ and $1 \mid b$, so 1 is a common divisor of a and b. Hence, the greatest common divisor $(a, b)$ exists and must be at least as big as 1; that is, $(a, b) \ge 1$.

(c) The largest integer which divides both a and b is the same as the largest integer which divides both b and a.

(d) $|a| \mid a$, since $a = (\pm 1) \cdot |a|$, and $|a| \mid 0$, since $0 = 0 \cdot |a|$. Thus, $|a|$ is a common divisor of a and 0, so $|a| \le (a, 0)$.

But $(a, 0) \mid a$, so $(a, 0) \le |a|$. Hence, $(a, 0) = |a|$.

(e) $(a, b)$ divides a, so it divides $|a|$. Likewise, $(a, b)$ divides $|b|$. Since $(a, b)$ is a common divisor of $|a|$ and $|b|$, I have $(a, b) \le (|a|, |b|)$.

In similar fashion, $(|a|, |b|)$ is a common divisor of a and b, so $(|a|, |b|) \le (a, b)$.

Therefore, $(a, b) = (|a|, |b|)$.

(f) $(a, 1) \mid 1$, but $(a, 1) \ge 1$. The only positive integer that divides 1 is 1. Hence, $(a, 1) = 1$. $\square$

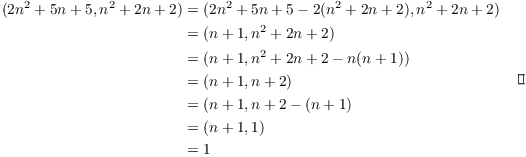

I'll use the Division Algorithm to derive a method for computing the greatest common divisor of two numbers. The idea is to perform the Division Algorithm repeatedly until you get a remainder of 0. First, I need a lemma which is useful in its own right.

Lemma. If a and b are integers, not both 0, and k is an integer, then

$$(a + kb, b) = (a, b).$$

Proof. If d divides a and b, then d divides $kb$, so d divides $a + kb$. Thus, d is a common divisor of $a + kb$ and b.

If d divides $a + kb$ and b, then d divides $kb$, so d divides $(a + kb) - kb = a$. Thus, d is a common divisor of a and b.

I've proved that the set of common divisors of a and b is the same as the set of common divisors of $a + kb$ and b. Since the two sets are the same, they must have the same largest element; that is, $(a + kb, b) = (a, b)$. $\square$

The lemma says that the greatest common divisor of two numbers is not changed if I change one of the numbers by adding or subtracting an integer multiple of the other. This can be useful by itself in determining greatest common divisors.
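A quick numerical spot check can make the lemma concrete. The sketch below uses Python's standard `math.gcd` and tests the identity $(a + kb, b) = (a, b)$ over a small grid of values; the helper name `lemma_holds` and the ranges are arbitrary choices for illustration. A check like this is not a proof, only a sanity test.

```python
from math import gcd


def lemma_holds(a: int, b: int, k: int) -> bool:
    """Check (a + k*b, b) == (a, b) for one triple (a, b, k)."""
    return gcd(a + k * b, b) == gcd(a, b)


# Try every pair (a, b) in a small window, skipping the undefined case (0, 0).
checked = all(
    lemma_holds(a, b, k)
    for a in range(-20, 21)
    for b in range(-20, 21)
    for k in range(-5, 6)
    if (a, b) != (0, 0)
)
print(checked)
```

Note that `math.gcd` always returns a nonnegative value, which agrees with the convention in these notes that the greatest common divisor is at least 1.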

Example. Prove that if n is an integer, then

![]()

The idea is to subtract multiples of one number from the other to reduce the powers until I get an expression which is clearly equal to 1.

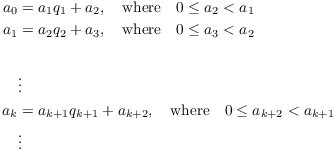

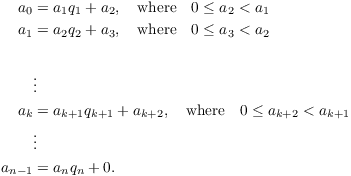

Theorem. (The Euclidean Algorithm) Let $a_0, a_1 \in \mathbb{Z}$, and suppose $a_0 \ge a_1 > 0$. Define $a_2$, $a_3$, ... and $q_1$, $q_2$, ... by recursively applying the Division Algorithm:

$$\begin{aligned} a_0 &= q_1 a_1 + a_2, & 0 &\le a_2 < a_1 \\ a_1 &= q_2 a_2 + a_3, & 0 &\le a_3 < a_2 \\ &\ \ \vdots \\ a_k &= q_{k+1} a_{k+1} + a_{k+2}, & 0 &\le a_{k+2} < a_{k+1} \\ &\ \ \vdots \end{aligned}$$

Then:

(a) The process will terminate with $a_{n+1} = 0$ for some n.

(b) At the point when the process terminates, $a_n = (a_0, a_1)$.

Proof. There is no question that I can apply the Division Algorithm as described above, as long as $a_{k+1} > 0$.

First, I'll show that the process terminates with $a_{n+1} = 0$ for some n.

Note that $a_1 > a_2 > a_3 > \cdots$ is a decreasing sequence of nonnegative integers. The well-ordering principle implies that this sequence cannot be infinite. Since the only way the process can stop is if a remainder is 0, I must have $a_{n+1} = 0$ for some n.

Suppose $a_{n+1}$ is the first remainder that is 0. I want to show $a_n = (a_0, a_1)$.

At any stage, I'm starting with $a_k$ and $a_{k+1}$ and producing $q_{k+1}$ and $a_{k+2}$ using the Division Algorithm:

$$a_k = q_{k+1} a_{k+1} + a_{k+2}.$$

Since $a_{k+2} = a_k - q_{k+1} a_{k+1}$, the previous lemma implies that

$$(a_{k+1}, a_{k+2}) = (a_k, a_{k+1}).$$

This means that

$$(a_0, a_1) = (a_1, a_2) = \cdots = (a_n, a_{n+1}) = (a_n, 0) = a_n.$$

In other words, each step leaves the greatest common divisor of the pair of a's unchanged. Thus, $(a_0, a_1) = a_n$. $\square$
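The repeated division in the theorem translates directly into a loop. Here is a minimal Python sketch, assuming inputs with $a_0 \ge a_1 > 0$ as in the theorem; the function name `euclid` and the choice to return the full lists of a's and q's are illustrative, not part of the notes.

```python
def euclid(a0: int, a1: int):
    """Run the Euclidean algorithm on a0 >= a1 > 0.

    Returns (g, a, q), where g is the last nonzero remainder,
    a is the list [a_0, a_1, ..., a_n, 0], and q[k] is the quotient q_k.
    """
    if not (a0 >= a1 > 0):
        raise ValueError("expected a0 >= a1 > 0")
    a = [a0, a1]
    q = [None]  # q[0] is a placeholder so that q[k] lines up with q_k
    while a[-1] != 0:
        # Division Algorithm: a_k = q_{k+1} * a_{k+1} + a_{k+2}
        quotient, remainder = divmod(a[-2], a[-1])
        q.append(quotient)
        a.append(remainder)
    return a[-2], a, q


g, a, q = euclid(187, 102)
print(g, a, q)
```

The loop stops exactly when a remainder of 0 appears, and the entry just before it in the list is the greatest common divisor.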

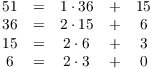

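Example. (Using the Euclidean algorithm to find a greatest common divisor) Use the Euclidean algorithm to compute $(51, 36)$.

Write

$$\begin{aligned} 51 &= 1 \cdot 36 + 15 \\ 36 &= 2 \cdot 15 + 6 \\ 15 &= 2 \cdot 6 + 3 \\ 6 &= 2 \cdot 3 + 0 \end{aligned}$$

To save writing, and to anticipate the setup I'll use for the Extended Euclidean Algorithm later, I'll arrange the computation in a table:

| a | q |
|---|---|
| 51 | - |
| 36 | 1 |
| 15 | 2 |
| 6 | 2 |
| 3 | 2 |

The greatest common divisor is the last nonzero remainder (3). Hence, $(51, 36) = 3$. $\square$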

Definition. If a and b are things, a linear combination of a and b is something of the form $sa + tb$, where s and t are numbers. (The kind of "number" depends on the context.)

The next result is a key fact about greatest common divisors.

Theorem. (Extended Euclidean Algorithm) $(a, b)$ is a linear combination of a and b: $(a, b) = sa + tb$ for some integers s and t.

Note: s and t are not unique.

Proof. The proof will actually give an algorithm which constructs a linear combination. It is called a backward recurrence, and it appears in a paper by S. P. Glasby [2]. It will look a little complicated, but you'll see that it's really easy to use in practice.

$(a, b)$ is only defined if at least one of a, b is nonzero. If $b = 0$ (so $a \ne 0$), then $(a, b) = |a|$ and $|a| = (\pm 1) \cdot a + 0 \cdot b$. This proves the result if one of the numbers is 0, so I may as well assume both are nonzero. Moreover, since $(a, b) = (|a|, |b|)$, I can assume both numbers are positive: a sign change in a or b can be absorbed into the coefficient s or t.

Suppose $a \ge b$. Apply the Euclidean Algorithm to $a_0 = a$ and $a_1 = b$, and suppose that $a_n$ is the last nonzero remainder:

$$\begin{aligned} a_0 &= q_1 a_1 + a_2 \\ a_1 &= q_2 a_2 + a_3 \\ &\ \ \vdots \\ a_{k-1} &= q_k a_k + a_{k+1} \\ &\ \ \vdots \\ a_{n-1} &= q_n a_n + 0 \end{aligned}$$

I'm going to define a sequence of numbers $y_n$, $y_{n-1}$, ... $y_1$, $y_0$. They will be constructed recursively, starting with $y_n$, $y_{n-1}$ and working downward to $y_0$. (This is why this is called a backward recurrence.)

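Define $y_n = 0$ and $y_{n-1} = 1$. Then define

$$y_{k-1} = q_k y_k + y_{k+1} \quad\text{for}\quad k = n - 1, \ldots, 2, 1.$$

Now I claim that

$$(-1)^{n+k+1} a_n = a_{k-1} y_k - a_k y_{k-1} \quad\text{for}\quad 1 \le k \le n.$$

I will prove this by downward induction, starting with $k = n$ and working downward to $k = 1$.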

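For $k = n$, I have

$$(-1)^{2n+1} a_n = -a_n = a_{n-1} \cdot 0 - a_n \cdot 1 = a_{n-1} y_n - a_n y_{n-1}.$$

The result holds for $k = n$.

Next, suppose $1 \le k < n$. Suppose the result holds for $k + 1$, i.e.

$$(-1)^{n+k+2} a_n = a_k y_{k+1} - a_{k+1} y_k.$$

I want to prove the result for k. Substitute $a_{k+1} = a_{k-1} - q_k a_k$ in the preceding equation and simplify:

$$(-1)^{n+k+2} a_n = a_k y_{k+1} - (a_{k-1} - q_k a_k) y_k$$

$$(-1)^{n+k+2} a_n = a_k (q_k y_k + y_{k+1}) - a_{k-1} y_k = a_k y_{k-1} - a_{k-1} y_k$$

$$(-1)^{n+k+1} a_n = a_{k-1} y_k - a_k y_{k-1}$$

This proves the result for k, so the result holds for $1 \le k \le n$, by downward induction.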

In particular, for $k = 1$, the result says

$$(-1)^{n+2} a_n = (-1)^n a_n = a_0 y_1 - a_1 y_0.$$

Since $a_n = (a_0, a_1)$, I've expressed $(a_0, a_1)$ as a linear combination of $a_0$ and $a_1$. $\square$

Remark. There are many algorithms (like the one in the proof) which produce a linear combination. This one is pretty good for small computations which you're doing by hand.

One drawback of this algorithm is that you need to know all of the quotients (the q's) in order to work backwards to get the linear combination. This isn't bad for small numbers, but if you're using large numbers on a computer, you'll need to store all the intermediate results. There are algorithms which are better if you're doing large computations on a computer (see [1], page 300).
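To illustrate the storage point, here is a sketch of a common forward variant of the extended Euclidean algorithm, which updates the coefficients as it goes and so keeps only a constant number of values. This is a standard textbook-style iteration and is not claimed to be the specific algorithm on the cited page of [1]; the function name `extended_gcd` is an illustrative choice, and the inputs are assumed to be nonnegative and not both 0.

```python
def extended_gcd(a: int, b: int):
    """Return (g, s, t) with g = (a, b) = s*a + t*b, for a, b >= 0, not both 0."""
    if a < 0 or b < 0 or (a == 0 and b == 0):
        raise ValueError("expected nonnegative inputs, not both 0")
    # Invariants maintained throughout the loop:
    #   old_r = old_s * a + old_t * b   and   r = s * a + t * b
    old_r, r = a, b
    old_s, s = 1, 0
    old_t, t = 0, 1
    while r != 0:
        quotient = old_r // r
        old_r, r = r, old_r - quotient * r
        old_s, s = s, old_s - quotient * s
        old_t, t = t, old_t - quotient * t
    return old_r, old_s, old_t


g, s, t = extended_gcd(187, 102)
print(g, s, t, s * 187 + t * 102)
```

Each pass through the loop uses one quotient and then discards it, so nothing has to be stored for a later backward pass.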

It's difficult to overemphasize the importance of this result! It has many applications --- from proving results about greatest common divisors, to solving Diophantine equations. I'll give some examples which illustrate the result, then discuss how you use the algorithm in the theorem.

Before I give examples of the algorithm, I'll look at some other ways of finding a linear combination.

Definition. Let $a, b \in \mathbb{Z}$. a and b are relatively prime if $(a, b) = 1$.

Example. (A linear combination for a greatest common divisor) Show that 12 and 25 are relatively prime. Write their greatest common divisor as a linear combination with integer coefficients of 12 and 25.

In some cases, the numbers are nice enough that you can figure out a linear combination by trial and error. In this case, it's clear that $12 = 2^2 \cdot 3$ and $25 = 5^2$ are relatively prime. So $(12, 25) = 1$; to get a linear combination, I need multiples of 12 and 25 which differ by 1. Here's an easy one:

$$(-2) \cdot 12 + 1 \cdot 25 = 1.$$

Note that $23 \cdot 12 + (-11) \cdot 25 = 1$ as well, so the linear combination is not unique. $\square$

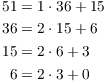

Example. (Finding a linear combination by algebra) Use the Division Algorithm computations in the Euclidean algorithm to find an integer linear combination of 51 and 36 that is equal to $(51, 36)$.

It's possible, but tedious, to use the computations in the Euclidean algorithm to find linear combinations. For $(51, 36)$, I have

$$\begin{aligned} 51 &= 1 \cdot 36 + 15 \\ 36 &= 2 \cdot 15 + 6 \\ 15 &= 2 \cdot 6 + 3 \\ 6 &= 2 \cdot 3 + 0 \end{aligned}$$

The third equation says $3 = 15 - 2 \cdot 6$.

By the second equation, $6 = 36 - 2 \cdot 15$, so

$$3 = 15 - 2 \cdot (36 - 2 \cdot 15) = 5 \cdot 15 - 2 \cdot 36.$$

The first equation says $15 = 51 - 36$, so

$$3 = 5 \cdot (51 - 36) - 2 \cdot 36 = 5 \cdot 51 - 7 \cdot 36.$$

I've expressed the greatest common divisor 3 as a linear combination of the original numbers 51 and 36.

I don't recommend this approach, since the proof of the Extended Euclidean Algorithm gives a method which is much easier and less error-prone. $\square$

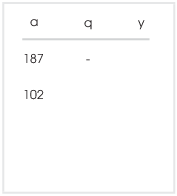

Example. (Finding a linear combination using the backward recursion) Find $(187, 102)$ and express it as a linear combination with integer coefficients of 187 and 102.

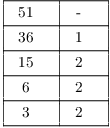

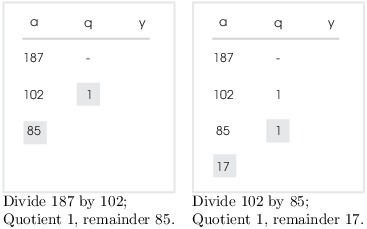

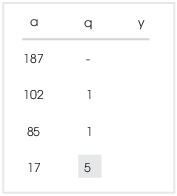

In this example, I'll show how you can use the backward recursion to obtain a linear combination. I'll arrange the computations in the form of a table; the table is simply an extension of the table I used for the Euclidean algorithm.

In this example only, I'm labelling the columns with the variable names a, q, and y from the proof so you can see the correspondence. Normally, I'll omit them.

Here's how you start:

| a | q | y |
|---|---|---|
| 187 | - | |
| 102 | | |

(You can save a step by putting the larger number first.)

The a and q columns are filled in using the Euclidean algorithm, i.e. by successive division: Divide the next-to-the-last a by the last a. The quotient goes into the q-column, and the remainder goes into the a-column.

When the division comes out evenly, you stop adding rows to the table. In this case, 85 divided by 17 is 5, and the remainder is 0.

| a | q | y |
|---|---|---|
| 187 | - | |
| 102 | 1 | |
| 85 | 1 | |
| 17 | 5 | |

The last entry in the a-column is the greatest common divisor. Thus, $(187, 102) = 17$.

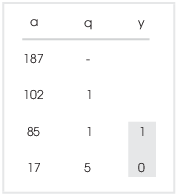

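Having filled in the a and q columns, you now fill in the y-column from bottom to top. You always start in the same way: The last y is always 0 and the next-to-the-last y is always 1:

| a | q | y |
|---|---|---|
| 187 | - | |
| 102 | 1 | |
| 85 | 1 | 1 |
| 17 | 5 | 0 |

Then, working from bottom to top, fill in the y's using the rule

$$(\text{next } y) = (\text{next } q) \cdot (\text{last } y) + (\text{next-to-last } y).$$

This comes from the recursion formula in the Extended Euclidean Algorithm Theorem:

$$y_{k-1} = q_k y_k + y_{k+1}.$$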

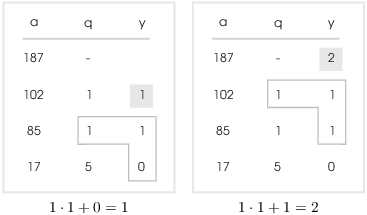

It's probably easier to show than it is to explain:

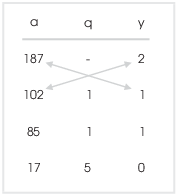

To get the linear combination, form the products of the top two a's and y's diagonally and subtract one from the other:

| a | q | y |
|---|---|---|
| 187 | - | 2 |
| 102 | 1 | 1 |
| 85 | 1 | 1 |
| 17 | 5 | 0 |

Thus,

$$17 = (2)(102) - (1)(187).$$

How do you know the order for the subtraction? The proof gives a formula, but the easiest thing is to pick one of the two ways, then fix it if it isn't right. If you subtract "the wrong way", you'll get a negative number. For example,

$$(1)(187) - (2)(102) = -17.$$

Since I know the greatest common divisor should be 17 (it's the last number in the a-column), I just multiply this equation by -1:

$$(-1)(187) + (2)(102) = 17.$$

This way, you don't need to memorize the exact formula. $\square$
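The table procedure can also be written out as a short program. The following Python sketch builds the a, q, and y columns for inputs $a_0 \ge a_1 > 0$ and then reads off the coefficients using the identity $(-1)^n a_n = a_0 y_1 - a_1 y_0$ from the proof of the theorem. The function names `backward_recurrence` and `gcd_combination` are hypothetical names chosen for this illustration.

```python
def backward_recurrence(a0: int, a1: int):
    """Return the columns (a, q, y) of the table for a0 >= a1 > 0."""
    if not (a0 >= a1 > 0):
        raise ValueError("expected a0 >= a1 > 0")
    a = [a0, a1]
    q = [None]  # no quotient in the first row; q[k] sits in the row of a[k]
    while True:
        quotient, remainder = divmod(a[-2], a[-1])
        q.append(quotient)
        if remainder == 0:  # division came out evenly: stop adding rows
            break
        a.append(remainder)
    n = len(a) - 1  # a[n] is the last nonzero remainder
    y = [0] * (n + 1)  # this already sets the last y to 0
    y[n - 1] = 1  # the next-to-the-last y is 1
    # Work from the bottom up: y_{k-1} = q_k * y_k + y_{k+1}
    for k in range(n - 1, 0, -1):
        y[k - 1] = q[k] * y[k] + y[k + 1]
    return a, q, y


def gcd_combination(a0: int, a1: int):
    """Return (g, s, t) with g = (a0, a1) = s*a0 + t*a1."""
    a, q, y = backward_recurrence(a0, a1)
    n = len(a) - 1
    sign = (-1) ** n  # from (-1)^n * a_n = a_0 * y_1 - a_1 * y_0
    return a[n], sign * y[1], -sign * y[0]


print(backward_recurrence(187, 102))
print(gcd_combination(187, 102))
```

Using the sign $(-1)^n$ replaces the "pick an order and fix it if it's negative" step, which is convenient in code even though the trial approach is easier by hand.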

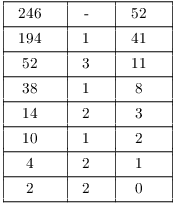

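Example. (Finding a linear combination using the backward recursion) Compute $(246, 194)$ and express it as an integer linear combination of 246 and 194.

| a | q | y |
|---|---|---|
| 246 | - | 52 |
| 194 | 1 | 41 |
| 52 | 3 | 11 |
| 38 | 1 | 8 |
| 14 | 2 | 3 |
| 10 | 1 | 2 |
| 4 | 2 | 1 |
| 2 | 2 | 0 |

Thus,

$$(246, 194) = 2 = (52)(194) + (-41)(246).$$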

Example. (The converse of the linear combination result) Give specific numbers a, b, m, n and d such that

$$am + bn = d \quad\text{but}\quad (a, b) \ne d.$$

The converse of the linear combination result is not always true. That is, if $sa + tb = d$ for some numbers s and t, it's not necessarily true that $d = (a, b)$.

For example, ![]() . But ![]() .

There's an important situation in which the linear combination result does work backwards: namely, when the greatest common divisor is 1. The next result makes this precise, and also shows how you can use the linear combination rule to prove results about greatest common divisors.

Proposition. Let $a, b \in \mathbb{Z}$. Then $(a, b) = 1$ if and only if

$$sa + tb = 1 \quad\text{for some}\quad s, t \in \mathbb{Z}.$$

Proof. The greatest common divisor of a and b can be written as a linear combination of a and b. Therefore, if $(a, b) = 1$, then

$$sa + tb = (a, b) = 1 \quad\text{for some}\quad s, t \in \mathbb{Z}.$$

Conversely, suppose that $sa + tb = 1$ for some $s, t \in \mathbb{Z}$. $(a, b)$ divides a and $(a, b)$ divides b, so $(a, b)$ divides $sa + tb = 1$. But $(a, b)$ is a positive integer, and the only positive integer that divides 1 is 1. Therefore, $(a, b) = 1$. $\square$

Example. (Using a linear combination to prove relative primality) Prove that if k is any integer, then the fraction ![]() is in lowest terms.

For example, if ![]() , the fraction is ![]() , which is in lowest terms.

A fraction is in lowest terms if the numerator and denominator are relatively prime. So I want to show that ![]() and ![]() are relatively prime.

I'll use the previous result, noting that

![]()

I found the coefficients by playing with numbers, trying to make the k-terms cancel.

Since a linear combination of ![]() and ![]() equals 1, the last proposition shows that ![]() and ![]() are relatively prime.

The linear combination rule is often useful in proofs involving greatest common divisors. If you're proving a result about a greatest common divisor, consider expressing the greatest common divisor as a linear combination of the two numbers.

Proposition. Let a and b be integers, not both 0. If $d \mid a$ and $d \mid b$, then $d \mid (a, b)$.

Proof. $(a, b)$ is a linear combination of a and b, so

$$(a, b) = sa + tb \quad\text{for some}\quad s, t \in \mathbb{Z}.$$

Now $d \mid a$ and $d \mid b$, so $d \mid sa + tb = (a, b)$. $\square$

$(a, b)$ was defined to be the greatest common divisor of a and b, in the sense that it was the largest common divisor of a and b. The last proposition shows that you can take greatest in a different sense: namely, that $(a, b)$ must be divisible by any other common divisor of a and b.

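Example. (Using the linear combination result to prove a greatest common divisor property) Prove that if $k > 0$ and $(a, b) = 1$, then $(ka, kb) = k$.

Since $(a, b) = 1$,

$$sa + tb = 1 \quad\text{for some}\quad s, t \in \mathbb{Z}.$$

Multiplying by k, I get

$$s(ka) + t(kb) = k.$$

$(ka, kb) \mid ka$ and $(ka, kb) \mid kb$, so $(ka, kb) \mid s(ka) + t(kb) = k$.

On the other hand, $k \mid ka$ and $k \mid kb$, so $k \mid (ka, kb)$, because a common divisor of two numbers divides their greatest common divisor.

Since k and $(ka, kb)$ are positive integers and each divides the other, $k = (ka, kb)$. $\square$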

[1] Alfred Aho, John Hopcroft, and Jeffrey Ullman, The Design and Analysis of Computer Algorithms. Reading, Massachusetts: Addison-Wesley Publishing Company, 1974.

[2] S. P. Glasby, Extended Euclid's algorithm via backward recurrence relations, Mathematics Magazine, 72(3)(1999), 228--230.

Copyright 2018 by Bruce Ikenaga