Calculus is over three hundred years old, but the modern approach via limits only dates to the early 1800s with Cauchy. Historically, the use of differentials antedates the "rigorous approach" we now take to the derivative.

For example, Carl Boyer [1] notes:

"Increments and decrements, rather than rates of change, were the fundamental elements in the work leading to that of Leibniz, and played a larger part in the calculus of Newton than is usually recognized. The differential became the primary notion, and it was not effectively displaced as such until Cauchy, in the nineteenth century, made the derivative the basic concept."

A differential was regarded loosely as an infinitely small nonzero quantity. For example, here is a computation of the derivative of $y = x^2$ via differentials. Increment $x$ by an infinitely small amount $dx$, which produces an infinitely small change $dy$ in $y$. This change is

$$dy = (x + dx)^2 - x^2 = 2x\,dx + (dx)^2.$$

Divide through by $dx$:

$$\frac{dy}{dx} = 2x + dx.$$

Since $dx$ is "infinitely small", I may neglect it:

$$\frac{dy}{dx} = 2x.$$

This approach came to be regarded as imprecise. What does it mean for something to be "infinitely small"? How can something be "infinitely small", but not 0? What can you "neglect"?

Eventually, infinitely small quantities (infinitesimals), as well as infinitely large quantities, were rehabilitated in the work of the American logician Abraham Robinson. Though calculus can be done using infinitesimals, it is standard practice to use limits and difference quotients instead.

The approach developed by Cauchy, which is more or less the approach that I'll use, makes no reference to "infinitely small" quantities. The definition of the derivative is:

$$\frac{dy}{dx} = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}, \quad \text{where } y = f(x).$$
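The limit in this definition can be explored numerically. The sketch below is illustrative only: `difference_quotient` and `square` are hypothetical helper names, and $f(x) = x^2$ at $x = 3$ is an assumed example. It uses Python's `fractions.Fraction` so the arithmetic is exact; for this $f$ the quotient works out to exactly $2x + h$, which approaches $f'(3) = 6$ as $h$ shrinks, without ever treating $h$ as "infinitely small".

```python
from fractions import Fraction


def difference_quotient(f, x, h):
    """Slope of the secant line through (x, f(x)) and (x + h, f(x + h))."""
    return (f(x + h) - f(x)) / h


def square(x):
    """Assumed example function f(x) = x^2."""
    return x * x


# Exact rational arithmetic: for f(x) = x^2 the quotient equals 2x + h,
# so at x = 3 it approaches f'(3) = 6 as h -> 0 (h itself is never 0).
x = Fraction(3)
for h in [Fraction(1, 10), Fraction(1, 100), Fraction(1, 1000)]:
    q = difference_quotient(square, x, h)
    print(f"h = {h}: quotient = {q}, equals 2x + h? {q == 2 * x + h}")
```

Exact fractions are used instead of floats so that the pattern $2x + h$ is visible without rounding noise.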

It does not give separate meanings to $dy$ and $dx$, so $\dfrac{dy}{dx}$ isn't a quotient. Nevertheless, people have tried to define $dy$ and $dx$ in sensible ways in order to make the quotient $\dfrac{dy}{dx}$ equal the derivative.

Here is one way to do this. Regard $dx$ as an independent variable, and define $dy = f'(x)\,dx$. Then, formally, $\dfrac{dy}{dx} = f'(x)$. This has the following interpretation.

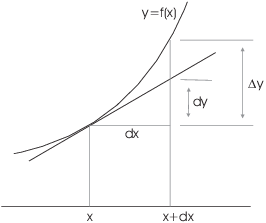

The tangent line at a point on $y = f(x)$ has slope $f'(x)$. If you move from $x$ to $x + dx$, the tangent line rises by $dy = f'(x)\,dx$. On the other hand, the actual change in $y$ is

$$\Delta y = f(x + dx) - f(x).$$

If $dx$ is small, $\Delta y \approx dy$. Hence,

$$f(x + dx) \approx f(x) + f'(x)\,dx.$$

This formula can be used to approximate $f(x + dx)$ from $f(x)$. I'll refer to this procedure as approximation by differentials, or the tangent line approximation.

Note that in general $\Delta y \ne dy$; they're only approximately equal. Somewhat confusingly, $\Delta x$ and $dx$ are often used interchangeably, so $\Delta x = dx$. You might think of it this way: $\Delta x$ or $dx$ is the change in the input variable, which presumably you know exactly. But the change in $f$ is given exactly by the corresponding change in the height of the graph of $f$ (this is $\Delta y$), and approximately by the corresponding change in the height of the tangent line to the graph of $f$ (this is $dy$).
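As a rough illustration of the tangent line approximation, here is a small Python sketch. The helper names `tangent_line_approx` and `compare_changes` are hypothetical, and the choice $f(x) = e^x$ (so that $f'(x) = e^x$) at $x = 0$ with $dx = 0.1$ is just an assumed test case. The second helper returns both the exact change $\Delta y$ and the differential $dy$ so you can see how close they are.

```python
import math


def tangent_line_approx(f, fprime, x, dx):
    """Approximate f(x + dx) by f(x) + f'(x) dx, i.e. follow the tangent line at x."""
    return f(x) + fprime(x) * dx


def compare_changes(f, fprime, x, dx):
    """Return (exact change Δy, differential dy) for a step dx starting at x."""
    delta_y = f(x + dx) - f(x)  # change in the height of the graph
    dy = fprime(x) * dx         # change in the height of the tangent line
    return delta_y, dy


# Assumed illustration: f(x) = e^x, whose derivative is also e^x.
delta_y, dy = compare_changes(math.exp, math.exp, 0.0, 0.1)
print(f"exact change = {delta_y:.6f}, differential = {dy:.6f}")
print(f"f(0.1) is approximately {tangent_line_approx(math.exp, math.exp, 0.0, 0.1):.6f}")
```

The derivative is passed in explicitly so the sketch computes exactly $dy = f'(x)\,dx$; substituting a numerical derivative would work too, but would add an error of its own.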

Example. For ![](), find:

(a) The exact change in f if x goes from 1 to 1.01.

(b) The approximate change in f if x goes from 1 to 1.01.

(a) The exact change in f is $\Delta f = f(1.01) - f(1)$:

![]()

(b) The approximate change in f is $df$. ![](), so ![](). Since $dx = 1.01 - 1 = 0.01$,

![]()

Notice that the approximate change differs from the exact change by only around 0.0003.

Example. Suppose ![]() and ![](). Find:

(a) The approximate change in f as x changes from 5 to 4.9.

(b) The approximate value of $f(4.9)$.

(a) The approximate change in f is $df$. Now $dx = 4.9 - 5 = -0.1$, while ![](). Thus,

![]()

(b) The approximate value of $f(4.9)$ is given by

![]()

Example. A differentiable function ![]() has derivative ![](). Approximate the change in f that results when x changes from 1 to 1.02.

![]()

Hence, the approximate change in f is

![]()

Example. A function is defined implicitly by the equation

![]()

Approximate the change in y at the point ![]() , as x changes from 1 to 1.01.

, as x changes from 1 to 1.01.

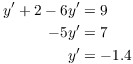

First, compute ![]() by implicit differentiation:

by implicit differentiation:

![]()

Set ![]() ,

, ![]() :

:

The change in x is ![]() . Therefore, the change

in y is approximately

. Therefore, the change

in y is approximately

![]()

Example. Use differentials to approximate $\sqrt{1.01}$.

Since I'm trying to approximate $\sqrt{1.01}$, I'll let

$$f(x) = \sqrt{x}.$$

I know that $f(1) = \sqrt{1} = 1$; I'll use differentials to approximate the change in f in going from 1 to 1.01. First,

$$f'(x) = \frac{1}{2\sqrt{x}}, \quad \text{so} \quad f'(1) = \frac{1}{2}.$$

The change in x is

$$dx = 1.01 - 1 = 0.01.$$

Then

$$df = f'(1)\,dx = \frac{1}{2}(0.01) = 0.005.$$

Hence,

$$\sqrt{1.01} = f(1.01) \approx f(1) + df = 1 + 0.005 = 1.005.$$

The actual value is $\sqrt{1.01} \approx 1.004988$.
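The arithmetic in this example can be checked with a few lines of Python. This is only a sketch: the variable names are illustrative, and `math.sqrt` is used solely to compare the approximation with the actual value.

```python
import math

x0 = 1.0   # a point where sqrt is easy to evaluate exactly
dx = 0.01  # change in x, from 1 to 1.01

f_x0 = math.sqrt(x0)                 # f(1) = 1
fprime_x0 = 1 / (2 * math.sqrt(x0))  # f'(x) = 1 / (2 sqrt(x)), so f'(1) = 1/2
df = fprime_x0 * dx                  # df = f'(1) dx = 0.005
approx = f_x0 + df                   # sqrt(1.01) is approximately f(1) + df

print(f"approximation: {approx:.6f}")
print(f"actual:        {math.sqrt(1.01):.6f}")
print(f"error:         {abs(approx - math.sqrt(1.01)):.2e}")
```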

In some applications, you can interpret $\Delta w$ as the error in $w$. In this case, $dw$ is the approximate error in $w$.

In some cases, you need to know how large the error is relative to the thing you're measuring. For instance, an error of 1 cm in measuring something of length 10 cm is fairly large: it is an error of $\dfrac{1}{10} = 10\%$. But an error of 1 cm in measuring something of length 100 cm is an error of only $\dfrac{1}{100} = 1\%$.

The relative error in $w$ is $\dfrac{\Delta w}{w}$, and the approximate relative error is $\dfrac{dw}{w}$. A relative error is often expressed as a percentage.
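A small sketch of the relative error calculation for the 1 cm examples above. The helper `relative_error(error, value)` is a hypothetical name; Python's `%` format specifier multiplies by 100 and appends a percent sign, which gives the percentage form.

```python
def relative_error(error, value):
    """Relative error: the size of the error compared with the measured quantity."""
    return error / value


# An error of 1 cm on a 10 cm length versus on a 100 cm length.
for length_cm in (10, 100):
    r = relative_error(1, length_cm)
    print(f"1 cm error on {length_cm} cm: {r} = {r:.0%}")
```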

Example. The side of a square is measured to be 10 light-years, with an error of 0.2 light-years.

(a) Use differentials to approximate the error in the area.

(b) Approximate the relative error and the percentage error.

(a) If s is the length of the side, the area is $A = s^2$. Then $\dfrac{dA}{ds} = 2s$, so $dA = 2s\,ds$. The error $\Delta A$ is approximated by $dA$ with $s = 10$ and $ds = 0.2$:

$$dA = 2 \cdot 10 \cdot 0.2 = 4 \text{ square light-years}.$$

(b) For $s = 10$, the area is $A = 10^2 = 100$ square light-years. The approximate relative error is

$$\frac{dA}{A} = \frac{4}{100} = 0.04 = 4\%.$$
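The computation in this example, sketched in Python with illustrative variable names. As an added assumption for comparison only, it also computes the exact change $\Delta A$ if the true side were $10.2$ light-years, to show how close $dA$ comes.

```python
s = 10.0   # measured side, in light-years
ds = 0.2   # error in the side, in light-years

area = s ** 2    # A = s^2 = 100 square light-years
dA = 2 * s * ds  # dA = 2s ds, the approximate error in A
rel = dA / area  # approximate relative error dA / A

# Assumption for comparison: the true side is s + ds = 10.2 light-years.
exact_change = (s + ds) ** 2 - s ** 2

print(f"dA = {dA:.2f}, relative error = {rel:.2f} = {rel:.0%}")
print(f"exact change in area = {exact_change:.2f}")
```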

[1] Carl B. Boyer, The History of the Calculus and Its Conceptual Development. New York: Dover Publications, 1949. [ISBN 0-486-60509-4]

Copyright 2018 by Bruce Ikenaga