A sequence is a list of numbers, finite or infinite. Most of what we do will be concerned with infinite sequences.

Here are some sequences:

![]()

![]()

The first sequence is an arithmetic sequence: You get the next term by adding a constant (in this case, 4) to the previous term.

The second sequence is a geometric sequence: You get the next term by multiplying the previous term by a constant (in this case, 2). The constant that you multiply by is called the ratio of the geometric sequence.
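As a minimal sketch in Python, the two rules can be written as generators. The common difference 4 and the ratio 2 come from the text; the starting values 1 and 3 are assumptions chosen for illustration, and the function names are hypothetical.

```python
from itertools import islice

def arithmetic(first, difference):
    """Yield first, first + difference, first + 2*difference, ..."""
    term = first
    while True:
        yield term
        term += difference

def geometric(first, ratio):
    """Yield first, first*ratio, first*ratio**2, ..."""
    term = first
    while True:
        yield term
        term *= ratio

# Starting values 1 and 3 are illustrative assumptions, not the text's sequences.
arithmetic_terms = list(islice(arithmetic(1, 4), 5))
geometric_terms = list(islice(geometric(3, 2), 5))
print(arithmetic_terms)  # [1, 5, 9, 13, 17]
print(geometric_terms)   # [3, 6, 12, 24, 48]
```

Because the generators never terminate, `islice` is used to take only the first few terms of each infinite sequence.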

The expressions ![]() and ![]() give the general terms of the sequences, and the ranges for n are given by ![]() and ![](). You could write these sequences by just giving their general terms:

![]()

![]()

The a and b are just dummy variables. The subscript n is the important thing, since it keeps track of the number of the term and also occurs in the formulas ![]() and ![]().

You can also write ![]() and ![]().

There is no reason why you have to start indexing at 0. Here is the second sequence, indexed from 1:

![]()

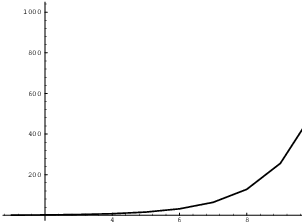

The picture below shows a plot of the first few terms of the sequence ![]() using n on the horizontal axis and the value of the sequence on the vertical axis. That is, I plotted the points

![]()

To make it look like an ordinary graph, I connected the dots with segments, but you can also plot the points by themselves.

Note that the order of the numbers in a sequence is important. These are different sequences:

![]()

Example. (a) Write the first 5 terms of the arithmetic sequence ![]() where ![]().

(b) Write the first 5 terms of the geometric sequence ![]() where ![]().

(a) The sequence starts with the terms

![]()

(b) The sequence starts with the terms

![]()

We're often concerned with the limit of a sequence: that is, a number that the terms approach (if there is one). The definition is like the definition of a limit of a function of a real variable.

Definition. Let $\{a_n\}$ be a sequence. Then $\lim_{n \to \infty} a_n = L$ means: For every $\epsilon > 0$, there is a number M such that for $n > M$,

$$|a_n - L| < \epsilon.$$

L is called the limit of the sequence. If a sequence has a number as a limit, the sequence converges; otherwise, it diverges.
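To make the roles of $\epsilon$ and M concrete, here is a small Python sketch. The sequence $a_n = 1/n$ (with limit 0) is an assumed example, not one from the text, and the helper names are hypothetical. For this sequence $|a_n - 0| = 1/n < \epsilon$ exactly when $n > 1/\epsilon$, so $M = 1/\epsilon$ works. The loop only spot-checks finitely many indices; it illustrates the definition rather than proving anything.

```python
from fractions import Fraction

def a(n):
    """Illustrative sequence a_n = 1/n for n >= 1, which has limit 0 (an assumed example)."""
    return Fraction(1, n)

def cutoff(epsilon):
    """Return an M that works for this sequence: |a_n - 0| = 1/n < epsilon once n > 1/epsilon."""
    return 1 / Fraction(epsilon)

L = 0
for epsilon in (Fraction(1, 10), Fraction(1, 100), Fraction(1, 1000)):
    M = cutoff(epsilon)
    # Spot-check the definition on a range of indices beyond M.
    start = int(M) + 1
    assert all(abs(a(n) - L) < epsilon for n in range(start, start + 1000))
    print(f"epsilon = {epsilon}: M = {M} works on the sampled indices")
```

Smaller values of $\epsilon$ force larger values of M, which is the usual pattern: the closer you want the terms to be to the limit, the further out in the sequence you have to go.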

There are two cases in which we can be more specific about the way that a sequence diverges.

We write $\lim_{n \to \infty} a_n = \infty$ to mean that for every number L, there is a number M such that for $n > M$,

$$a_n > L.$$

Likewise, we write $\lim_{n \to \infty} a_n = -\infty$ to mean that for every number L, there is a number M such that for $n > M$,

$$a_n < L.$$

Many of the familiar rules for limits of functions hold for limits of sequences.

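Theorem. Suppose $\{a_n\}$, $\{b_n\}$, and $\{c_n\}$ are sequences. Then:

(a) $\lim_{n \to \infty} k = k$, where k is a constant.

(b) $\lim_{n \to \infty} k \cdot a_n = k \cdot \lim_{n \to \infty} a_n$, where k is a constant.

(c) $\lim_{n \to \infty} (a_n + b_n) = \lim_{n \to \infty} a_n + \lim_{n \to \infty} b_n$.

(d) $\lim_{n \to \infty} (a_n \cdot b_n) = \left(\lim_{n \to \infty} a_n\right) \cdot \left(\lim_{n \to \infty} b_n\right)$.

(e) $\lim_{n \to \infty} \dfrac{a_n}{b_n} = \dfrac{\lim_{n \to \infty} a_n}{\lim_{n \to \infty} b_n}$, provided that $\lim_{n \to \infty} b_n \ne 0$.

(f) (Squeezing Theorem) If $a_n \le b_n \le c_n$ for all n, and $\lim_{n \to \infty} a_n = L$ and $\lim_{n \to \infty} c_n = L$, then $\lim_{n \to \infty} b_n = L$.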

As usual, in parts (b), (c), (d), and (e) the interpretation is that the two sides of an equation are equal when all the limits involved are defined.

Besides the rules above, you may also use L'Hôpital's Rule to compute limits of sequences.

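Proof. I'll prove (c) as an example. Suppose

$$\lim_{n \to \infty} a_n = A \quad\text{and}\quad \lim_{n \to \infty} b_n = B.$$

I'll prove that

$$\lim_{n \to \infty} (a_n + b_n) = A + B.$$

Let $\epsilon > 0$. Choose a number L so that if $n > L$, then

$$|a_n - A| < \frac{\epsilon}{2}.$$

Choose a number M so that if $n > M$, then

$$|b_n - B| < \frac{\epsilon}{2}.$$

Then let $N = \max(L, M)$, so N is the larger of L and M. Then if $n > N$, I have both

$$|a_n - A| < \frac{\epsilon}{2} \quad\text{and}\quad |b_n - B| < \frac{\epsilon}{2}.$$

I add the inequalities and use the Triangle Inequality:

$$|(a_n + b_n) - (A + B)| \le |a_n - A| + |b_n - B| < \frac{\epsilon}{2} + \frac{\epsilon}{2} = \epsilon.$$

This proves that $\lim_{n \to \infty} (a_n + b_n) = A + B$.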

The limit of a geometric sequence is determined entirely by its ratio r. The following result describes the cases.

Proposition. Let ![]() be a geometric sequence.

(a) If ![](), then ![]() if ![](), ![]() if ![](), and ![]() if ![]().

(b) If ![](), then ![]() diverges to ![]() by oscillation.

(c) If ![](), then ![]() for all n, and ![]().

(d) If ![](), then ![]() diverges to ![]() by oscillation.

(e) If ![](), then ![]().

Proof. I'll sketch a proof that ![]() if ![]() to illustrate the ideas.

Note that since ![](),

![]()

That is, the sequence ![]() decreases. The terms are all positive, so they're bounded below by 0. As we'll see below, a decreasing sequence that is bounded below must have a limit. So ![]() is defined.

Now

![]()

But

![]()

Call this common limit L. Then the last equation says

![]()

Since ![](), this implies that ![]().
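To get a feel for how the ratio controls the behavior of a geometric sequence, here is a short Python sketch that prints the first few terms $a r^n$ for several ratios. The first term $a = 1$ and the particular ratios are assumptions chosen for illustration, and the function name is hypothetical.

```python
def geometric_terms(a, r, count=8):
    """Return the first `count` terms a*r**n for n = 0, 1, ..., count - 1."""
    return [a * r**n for n in range(count)]

# The values of a and r below are illustrative assumptions.
for r in (2, 1, 0.5, -0.5, -1, -2):
    print(f"r = {r:>4}: {geometric_terms(1, r)}")
```

With a positive first term, the terms grow without bound when r is greater than 1, stay constant when r is 1, shrink toward 0 when r is strictly between -1 and 1, alternate between two values when r is -1, and alternate in sign with growing size when r is less than -1.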

Here are some examples to illustrate the cases:

![]()

![]()

![]()

![]()

![]()

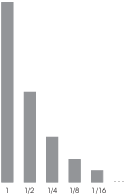

Here is a geometric sequence in which each term is ![]() times the previous term:

![]()

The terms appear to approach 0, so ![]().

Here is a picture of the terms in this sequence.

Notice that the rectangles' heights approach 0.

Here is a geometric sequence in which each term is ![]() times the previous term:

![]()

The terms appear to increase indefinitely, so I'll write ![]().

Here is an interesting way to picture the terms in this sequence. Take a segment and divide it into thirds. Replace the middle third with a "bump" shaped like an equilateral triangle.

If the original segment had length 1, the new path with the triangular bump has length $\frac{4}{3}$, since it consists of four segments of length $\frac{1}{3}$.

Now repeat the process with each of the four segments:

Since each segment's length is multiplied by $\frac{4}{3}$, this path has total length $\left(\frac{4}{3}\right)^2 = \frac{16}{9}$.

Here's the result of repeating the process two more times:

If you continue this process indefinitely, the limiting path must have infinite length, since $\lim_{n \to \infty} \left(\frac{4}{3}\right)^n = \infty$. The limiting path is an example of a self-similar fractal.
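The growth of the path length can be checked with a few lines of Python. This is only a sketch: it tracks the total length and does not draw the path, and the function name is hypothetical. Each round replaces every segment with four segments one third as long, so the total length is multiplied by 4/3.

```python
from fractions import Fraction

def koch_length(steps, initial_length=Fraction(1)):
    """Length of the path after `steps` rounds of replacing each middle third with a bump."""
    length = initial_length
    for _ in range(steps):
        # Each segment becomes 4 segments, each 1/3 as long.
        length *= Fraction(4, 3)
    return length

for steps in range(5):
    print(steps, koch_length(steps))
```

Using `Fraction` keeps the lengths exact, so the output shows the powers of 4/3 directly rather than rounded decimals.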

Example. Determine whether the sequence ![]() for ![]() converges or diverges. If it converges, find the limit.

![]()

Hence, the sequence converges.

Example. Determine whether the sequence ![]() for ![]() converges or diverges. If it converges, find the limit.

Divide the top and bottom by ![]():

Now ![](), and this goes to 0 because ![](). Clearly ![]() and ![]() go to 0. The limit reduces to

The sequence converges to 0.

Example. (a) Determine whether the sequence ![]() for ![]() converges or diverges. If it converges, find the limit.

(b) Determine whether the sequence ![]() for ![]() converges or diverges. If it converges, find the limit.

(a) Since ![](), I have

![]()

Now

![]()

By the Squeezing Theorem,

![]()

(b) Note that

![]()

Hence, when n is large and even, ![]() is close to 1, and when n is large and odd, ![]() is close to -1. Therefore, the sequence diverges by oscillation.

Example. Determine whether the sequence ![]() converges or diverges. If it converges, find the limit.

Note that

![]()

(The "![]()" comes from the fact that squares can't be negative.) Divide by n:

![]()

Now ![]() and ![](), so by the Squeezing Theorem,

![]()

The sequence converges to 0.

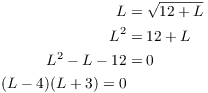

Example. A sequence defined by recursion:

![]()

Here are the first few terms:

![]()

Assume that ![]() exists. What is it?

Let ![](). Then

![]()

They're equal because both represent the limit of sequences of terms with the "same infinite part".

So

![]()

Substitute L and solve the resulting equation:

This gives the solutions ![]() and ![](). Since it's clear from the definition of the sequence that the sequence has positive terms, the limit can't be negative. Hence, ![]().

Example. Start with a positive integer. If it is even, divide it by 2. If it is odd, multiply by 3 and add 1. Continue forever. You obtain a sequence of numbers --- a different sequence for each number you start with.

Show that the sequence of numbers produced by this procedure starting at 23 eventually leads to the terms 4, 2, 1, which repeat after this.

![]()

For example, 23 is odd, so the next number is $3 \cdot 23 + 1 = 70$. Then 70 is even, so the next number is $\frac{70}{2} = 35$.

If you try other starting numbers, you'll find that you always seem to get stuck in the $4 \to 2 \to 1$ loop. The Collatz conjecture says that this always happens. It is known to be true for starting numbers (at least) up to ![]().
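The procedure is easy to run by machine. Here is a minimal Python sketch; the function name is hypothetical, and the loop stops at the first 1, which assumes the sequence actually reaches 1 (true for 23, but unproven in general).

```python
def collatz(start):
    """Return the terms from `start` until the first 1 (assumes the sequence reaches 1)."""
    if start < 1:
        raise ValueError("start must be a positive integer")
    terms = [start]
    while terms[-1] != 1:
        n = terms[-1]
        # Even: divide by 2. Odd: multiply by 3 and add 1.
        terms.append(n // 2 if n % 2 == 0 else 3 * n + 1)
    return terms

print(collatz(23))
# [23, 70, 35, 106, 53, 160, 80, 40, 20, 10, 5, 16, 8, 4, 2, 1]
```

After the final 1, the rule gives 4, then 2, then 1 again, so the terms 4, 2, 1 repeat from that point on.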

Definition. A sequence ![]():

(a) Increases if ![]() whenever ![]().

(b) Decreases if ![]() whenever ![]().

You can treat the terms of a sequence as values of a continuous function and use the first derivative to determine whether a sequence increases or decreases.

Example. Determine whether the sequence given by ![]() increases, decreases, or does neither.

Set ![](). Then

![]()

Since ![]() for all x, the sequence increases.

In some cases, it's not possible to use the derivative to determine whether a sequence increases or decreases. Here's another approach that is often useful:

Proposition. Let $\{a_n\}$ be a sequence with positive terms, and suppose that $\lim_{n \to \infty} \dfrac{a_{n+1}}{a_n} = L$. Then:

(a) If $L < 1$, the terms eventually decrease.

(b) If $L > 1$, the terms eventually increase.

Proof. Here's a sketch of the proof of (a).

Suppose $L < 1$. Choose a number r such that $L < r < 1$. Then there is a number M such that if $n > M$,

$$\frac{a_{n+1}}{a_n} < r < 1, \quad\text{so}\quad a_{n+1} < a_n.$$

The last inequality says that the next term ($a_{n+1}$) is less than the current term ($a_n$), which means that the terms decrease. Similar reasoning applies if $L > 1$.

The reason I have to say the terms eventually decrease or increase is that the limit tells what the sequence does for large values of n. For small values of n, the sequence may increase or decrease, and this behavior won't be detected by taking the limit.
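Here is a small Python sketch of why the word "eventually" matters. The sequence $a_n = 10^n / n!$ is an assumed example, not one from the text, and the function names are hypothetical. Its ratio of consecutive terms simplifies to $10/(n + 1)$, which approaches 0, yet the first several terms increase before the decrease sets in.

```python
from fractions import Fraction
from math import factorial

def a(n):
    """Illustrative sequence a_n = 10**n / n! (an assumed example, not from the text)."""
    return Fraction(10**n, factorial(n))

def ratio(n):
    """The ratio a_(n+1) / a_n, which simplifies to 10 / (n + 1) for this sequence."""
    return a(n + 1) / a(n)

for n in (0, 5, 9, 10, 20, 100):
    verdict = "next term smaller" if ratio(n) < 1 else "next term not smaller"
    print(n, ratio(n), verdict)
```

For this sequence the ratio is at least 1 up through n = 9 and less than 1 from n = 10 on, so the terms only start to decrease after the tenth step, even though the limit of the ratio is far below 1.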

Example. Determine whether the sequence given by ![]() increases, decreases, or does neither.

I compute ![]():

![]()

Since this limit is less than 1, the terms of the sequence eventually decrease.

Definition. A sequence $\{a_n\}$ is bounded if there is a number M such that $|a_n| \le M$ for all n.

Pictorially, this means that all of the terms of the sequence lie between the lines $y = -M$ and $y = M$:

I can also say a sequence is bounded if there are numbers C and D such that $C \le a_n \le D$ for all n. This definition is equivalent to the first definition. For if a sequence satisfies $|a_n| \le M$ for all n, then $-M \le a_n \le M$ for all n (so I can take $C = -M$ and $D = M$ in the second definition). On the other hand, if $C \le a_n \le D$ for all n, then $|a_n| \le E$, where $E$ is the larger of the numbers $|C|$ and $|D|$ (so I can take $M = E$ in the first definition).
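The passage from two-sided bounds to a single bound on absolute values can be sketched in Python. The sequence $a_n = -5 + 2/(n + 1)$ and the bounds $-5$ and $-3$ are assumptions chosen for illustration, the function names are hypothetical, and the check covers only a finite sample of terms.

```python
from fractions import Fraction

def symmetric_bound(C, D):
    """Given C <= a_n <= D for all n, return max(|C|, |D|), which bounds |a_n|."""
    return max(abs(C), abs(D))

def a(n):
    """Illustrative sequence a_n = -5 + 2/(n + 1) for n >= 0 (an assumed example)."""
    return -5 + Fraction(2, n + 1)

C, D = -5, -3  # two-sided bounds: -5 <= a_n <= -3
M = symmetric_bound(C, D)
sample = [a(n) for n in range(200)]
assert all(C <= term <= D for term in sample)
assert all(abs(term) <= M for term in sample)
print(M)  # 5
```

The example is chosen so that the upper bound is negative: taking the upper bound itself as the bound on absolute values would fail here, which is why the larger of the two absolute values is needed.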

Example. Prove that the sequence ![]() is bounded.

Since ![](),

![]()

Thus, the sequence is bounded according to the second definition.

Also, ![](), and hence ![](). Therefore, the sequence is bounded according to the first definition.

Here's another way of telling that a sequence is bounded:

Proposition. If the terms of a sequence approach a (finite) limit, then the sequence is bounded.

Proof. Suppose that $\lim_{n \to \infty} a_n = L$. By definition, this means that I can make $a_n$ as close to L as I want by making n large enough. Suppose, for instance, I know that $a_n$ is within 0.1 of L once n is greater than some number p. (I picked the number 0.1 at random.) Thus, all the terms after $a_p$ are within 0.1 of L:

$$L - 0.1 < a_n < L + 0.1 \quad\text{for}\quad n > p.$$

What about the first p terms $a_1$, $a_2$, ..., $a_p$? Since there are a finite number of these terms, there must be a largest value and a smallest value among them. Suppose that the smallest value is A and the largest value is B. Thus,

$$A \le a_n \le B \quad\text{for}\quad n = 1, 2, \ldots, p.$$

Then if $C$ is the smaller of A and $L - 0.1$ and $D$ is the larger of B and $L + 0.1$, I must have

$$C \le a_n \le D \quad\text{for all}\quad n.$$

Therefore, the sequence is bounded.

Example. Prove that the sequence ![]() is bounded.

![]()

Therefore, the sequence is bounded.

There is an important theorem which combines the ideas of increasing or decreasing and boundedness. It says that an increasing sequence that is bounded above has a limit, and a decreasing sequence that is bounded below has a limit.

Some of the material which leads up to this result is a bit technical, so you might want to skip the proofs if you find them heavy-going. The important thing is the last theorem in this section, which we'll often use in our discussion of infinite series.

Definition. Let S be a set of real numbers.

(a) An upper bound for S is a number M such that $x \le M$ for every number x in S. A set which has an upper bound is bounded above.

(b) A lower bound for S is a number L such that $L \le x$ for every number x in S. A set which has a lower bound is bounded below.

A sequence is bounded in the sense we discussed earlier if it's bounded above and bounded below.

For instance, consider the set

![]()

Then 1 is an upper bound for S. So is 2. So is ![](). There are infinitely many upper bounds for S.

0 is a lower bound for S. So is -17. There are infinitely many lower bounds for S.

Thus, a set can have many upper bounds or lower bounds.

Consider the set

![]()

S does not have an upper bound: There's no number which is greater than or equal to all the numbers in S. But 0 is a lower bound for S. So is -151.

Thus, a set does not have to have an upper bound or a lower bound.

Definition. Let S be a set of real numbers.

(a) A number M is the least upper bound for S if M is an upper bound for S, and $M \le M'$ for every upper bound $M'$ of S.

(b) A number L is the greatest lower bound for S if L is a lower bound for S, and $L \ge L'$ for every lower bound $L'$ of S.

We've seen that a set can have many upper bounds. The least upper bound is the smallest upper bound.

Let's consider again the set

![]()

We saw that 1, 2, and ![]() are upper bounds for S. The least upper bound is ![](). In this case, the least upper bound is an element of the set.

Consider the set

![]()

Notice that ![](). The least upper bound of the set T is 1. It is not an element of T.

We've already seen an example of a set with no upper bound:

![]()

Since this set has no upper bound, it can't have a least upper bound. There is an important circumstance when a set is guaranteed to have a least upper bound.

Axiom. ( Least Upper Bound Axiom) Let S be a nonempty set of real numbers that is bounded above. Then S has a least upper bound.

This is an axiom for the real numbers: that is, one of the assumptions which characterize the real numbers. Since it is an assumption, there's no question of proving it. You'd see the axioms for the real numbers in a course in analysis.

For a course in calculus, the following result is one of the most important consequences of this axiom. It will be used in our discussion of infinite series.

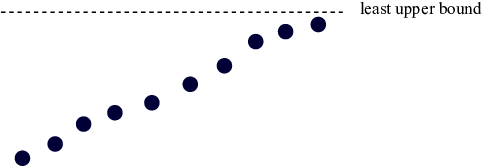

Theorem. (a) A sequence of numbers that increases and is bounded above has a limit.

(b) A sequence of numbers that decreases and is bounded below has a limit.

The following picture makes the theorem plausible:

Since the sequence is bounded above, it has a least upper bound. It appears that, since the terms increase, they should "pile up" at the least upper bound, and therefore have a limit.

Proof. I'll sketch the proof of (a) by way of example.

Suppose $\{a_n\}$ is an increasing sequence, and suppose that it's bounded above. Since it's bounded above, the Least Upper Bound Axiom implies that it has a least upper bound M. I will show that

$$\lim_{n \to \infty} a_n = M.$$

Let $\epsilon > 0$, and consider the number $M - \epsilon$. Suppose

$$a_n \le M - \epsilon \quad\text{for all}\quad n.$$

Then $M - \epsilon$ is an upper bound for $\{a_n\}$. But $M - \epsilon < M$, and M is supposed to be the smallest upper bound for $\{a_n\}$. This is impossible. Hence, I can't have $a_n \le M - \epsilon$ for all n.

This means that for some index k I have $a_k > M - \epsilon$. The sequence increases, so

$$M - \epsilon < a_k \le a_{k+1} \le a_{k+2} \le \cdots \le M.$$

In other words, $M - \epsilon < a_n \le M$, and hence $|a_n - M| < \epsilon$, for all $n \ge k$.

By the definition of the limit of a sequence, I have $\lim_{n \to \infty} a_n = M$.
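The argument can be traced numerically with a short Python sketch. The increasing sequence $a_n = n/(n + 1)$, whose least upper bound is 1, is an assumed example, and the helper names are hypothetical. For each $\epsilon$ the code finds the first index k at which a term exceeds $M - \epsilon$, then spot-checks that later terms stay within $\epsilon$ of M.

```python
from fractions import Fraction

def a(n):
    """Illustrative increasing sequence a_n = n / (n + 1), with least upper bound 1."""
    return Fraction(n, n + 1)

def first_index_above(threshold):
    """Return the smallest k >= 1 with a_k > threshold (assumes threshold < 1)."""
    k = 1
    while a(k) <= threshold:
        k += 1
    return k

M = 1  # least upper bound of the illustrative sequence
for epsilon in (Fraction(1, 10), Fraction(1, 100)):
    k = first_index_above(M - epsilon)
    # The terms increase and never exceed M, so later terms stay within epsilon of M.
    assert all(M - epsilon < a(n) <= M for n in range(k, k + 500))
    print(f"epsilon = {epsilon}: a_k > M - epsilon first at k = {k}")
```

The search for k always terminates here because $M - \epsilon$ is smaller than the least upper bound, so it cannot itself be an upper bound; that is the same step the proof relies on.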

Copyright 2019 by Bruce Ikenaga