Let ![]() be a linear transformation of

finite dimensional vector spaces. Choose ordered bases

be a linear transformation of

finite dimensional vector spaces. Choose ordered bases ![]() for V and

for V and ![]() for W.

for W.

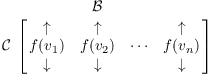

For each j, ![]() . Therefore,

. Therefore, ![]() may be written uniquely as a linear combination of

elements of

may be written uniquely as a linear combination of

elements of ![]() :

:

![]()

The numbers ![]() are uniquely determined by f. The

are uniquely determined by f. The

![]() matrix

matrix ![]() is the matrix of f relative to the ordered bases

is the matrix of f relative to the ordered bases ![]() and

and ![]() . I'll use

. I'll use ![]() to

denote this matrix. Here's how to find it.

to

denote this matrix. Here's how to find it.

I'll use std to denote the standard basis for $\mathbb{R}^n$.

Example. Here are two bases for ![]():

![]()

![]()

Suppose ![]() is a linear transformation such that

![]()

Then

![]()

Read the description of ![]() preceding this example and verify that ![]() was constructed by following the steps in the description.![]()

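Example. (a) Define $f: \mathbb{R}^2 \to \mathbb{R}^3$ by

$$f\left(\left[\matrix{x \cr y \cr}\right]\right) = \left[\matrix{x + 2 y \cr 3 x \cr -x + 5 y \cr}\right].$$

Find $[f]_{{\rm std},{\rm std}}$.

Apply f to the elements of the standard basis for $\mathbb{R}^2$, and write the results in terms of the standard basis for $\mathbb{R}^3$:

$$f\left(\left[\matrix{1 \cr 0 \cr}\right]\right) = \left[\matrix{1 \cr 3 \cr -1 \cr}\right] = 1 \cdot \left[\matrix{1 \cr 0 \cr 0 \cr}\right] + 3 \cdot \left[\matrix{0 \cr 1 \cr 0 \cr}\right] + (-1) \cdot \left[\matrix{0 \cr 0 \cr 1 \cr}\right],$$

$$f\left(\left[\matrix{0 \cr 1 \cr}\right]\right) = \left[\matrix{2 \cr 0 \cr 5 \cr}\right] = 2 \cdot \left[\matrix{1 \cr 0 \cr 0 \cr}\right] + 0 \cdot \left[\matrix{0 \cr 1 \cr 0 \cr}\right] + 5 \cdot \left[\matrix{0 \cr 0 \cr 1 \cr}\right].$$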

Take the coefficients in the linear combinations and use them to make the columns of the matrix:

![$$[f]_{{\rm std}, {\rm std}} = \left[\matrix{1 & 2 \cr 3 & 0 \cr -1 & 5 \cr}\right].$$](matrix-linear-trans38.png)

Note that in matrix form,

![$$f\left(\left[\matrix{x \cr y \cr}\right]\right) = \left[\matrix{1 & 2 \cr 3 & 0 \cr -1 & 5 \cr}\right] \left[\matrix{x \cr y \cr}\right].$$](matrix-linear-trans39.png)

In other words, $[f]_{{\rm std},{\rm std}}$ is the same matrix as the one you'd usually use to represent f by matrix multiplication.![]()
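The column-by-column construction in part (a) can be sketched in a few lines of Python. This is only an illustration: the formula for `f` is read off from the matrix form shown above, and the helper names (`standard_basis`, `matrix_from_map`, `mat_vec`) are made up for this sketch.

```python
def f(v):
    """The map from part (a), read off from its matrix form."""
    x, y = v
    return [x + 2 * y, 3 * x, -x + 5 * y]


def standard_basis(n):
    """Return the standard basis vectors e_1, ..., e_n as lists."""
    return [[1 if i == j else 0 for i in range(n)] for j in range(n)]


def matrix_from_map(func, n):
    """Build the standard matrix of func: column j is func(e_j).

    The result is returned as a list of rows.
    """
    columns = [func(e) for e in standard_basis(n)]
    return [list(row) for row in zip(*columns)]


def mat_vec(A, v):
    """Multiply the matrix A (list of rows) by the vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


A = matrix_from_map(f, 2)
print(A)  # [[1, 2], [3, 0], [-1, 5]]

# Multiplying by A agrees with applying f directly.
print(mat_vec(A, [4, -1]) == f([4, -1]))  # True
```

The test vector `[4, -1]` is arbitrary; any input should give the same agreement, since f is linear.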

(b) Let ![](). Define ![]() by

![]()

Find ![]().

Apply f to the elements of the standard basis for ![](), and write the results in terms of ![]():

![]()

![]()

Take the coefficients in the linear combinations and use them to make the columns of the matrix:

![]()

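Here is a description of $[f]_{{\cal B},{\cal C}}$ in words: multiplying by it takes an input vector written in terms of ${\cal B}$, applies f, and gives the output written in terms of ${\cal C}$.

If you keep this in mind, change of coordinates will make much more sense.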

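I'll verify the claim above for one of the basis elements $v_j$ of ${\cal B}$. In terms of ${\cal B}$, with the 1 in the j-th position,

$$v_j = \left[\matrix{0 \cr \vdots \cr 1 \cr \vdots \cr 0 \cr}\right].$$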

Then

![$$\left[\matrix{ a_{11} & \cdots & a_{1j} & \cdots & a_{1n} \cr a_{21} & \cdots & a_{2j} & \cdots & a_{2n} \cr \vdots & & \vdots & & \vdots \cr a_{m1} & \cdots & a_{mj} & \cdots & a_{mn} \cr}\right] \left[\matrix{ 0 \cr \vdots \cr 1 \cr \vdots \cr 0 \cr}\right] = \left[\matrix{ a_{1j} \cr a_{2j} \cr \vdots \cr a_{mj} \cr}\right].$$](matrix-linear-trans57.png)

This is correct, since $f(v_j) = a_{1j} w_1 + a_{2j} w_2 + \cdots + a_{mj} w_m$, and the representation of $f(v_j)$ in terms of the basis ${\cal C} = \{w_1, \ldots, w_m\}$ is

$$\left[\matrix{a_{1j} \cr a_{2j} \cr \vdots \cr a_{mj} \cr}\right].$$
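As a quick numerical check of this column-picking property, here is a minimal Python sketch. It uses the matrix from part (a) of the earlier example as sample data; the helper names `mat_vec`, `column`, and `unit_vector` are invented for the illustration.

```python
def mat_vec(A, v):
    """Multiply the matrix A (list of rows) by the vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def column(A, j):
    """Return column j of A (0-indexed) as a list."""
    return [row[j] for row in A]


def unit_vector(n, j):
    """Vector of length n with a 1 in position j (0-indexed), 0 elsewhere."""
    return [1 if i == j else 0 for i in range(n)]


A = [[1, 2], [3, 0], [-1, 5]]

# Multiplying A by the j-th unit vector picks out the j-th column of A.
for j in range(2):
    assert mat_vec(A, unit_vector(2, j)) == column(A, j)

print(mat_vec(A, unit_vector(2, 1)))  # [2, 0, 5]
```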

The matrix of a linear transformation is like a snapshot of a person --- there are many pictures of a person, but only one person. Likewise, a given linear transformation can be represented by matrices with respect to many choices of bases for the domain and range.

In the last example, finding $[f]_{{\rm std},{\rm std}}$ turned out to be easy, whereas finding the matrix of f relative to other bases is more difficult. Here's how to use change of basis matrices to make things simpler.

Suppose you have bases ${\cal B}$ and ${\cal C}$ and you want $[f]_{{\cal B},{\cal C}}$.

1. Find $[f]_{{\rm std},{\rm std}}$. Usually, you can find this from the definition.

2. Find the change of basis matrices $[{\cal B} \to {\rm std}]$ and $[{\cal C} \to {\rm std}]$. (Take the basis elements written in terms of the standard bases and use them as the columns of the matrices.)

3. Find $[{\rm std} \to {\cal C}] = [{\cal C} \to {\rm std}]^{-1}$.

4. Then

$$[f]_{{\cal B},{\cal C}} = [{\rm std} \to {\cal C}] \, [f]_{{\rm std},{\rm std}} \, [{\cal B} \to {\rm std}].$$

Do you see why this works? Reading from right to left, an input vector written in terms of ${\cal B}$ is translated to the standard basis by $[{\cal B} \to {\rm std}]$. Next, $[f]_{{\rm std},{\rm std}}$ takes the standard vector, applies f, and writes the output as a standard vector. Finally, $[{\rm std} \to {\cal C}]$ takes the standard vector output and translates it to a ${\cal C}$ vector.
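The same right-to-left reading can be written as a chain of equalities. This is a sketch under one assumption: the coordinate-vector notation $[v]_{\cal B}$ (the column of coefficients of v in terms of ${\cal B}$) is introduced here only for illustration. For any v in the domain,

$$[f(v)]_{\cal C} = [{\rm std} \to {\cal C}] \, [f(v)]_{\rm std} = [{\rm std} \to {\cal C}] \, [f]_{{\rm std},{\rm std}} \, [v]_{\rm std} = [{\rm std} \to {\cal C}] \, [f]_{{\rm std},{\rm std}} \, [{\cal B} \to {\rm std}] \, [v]_{\cal B}.$$

Since this holds for every v, the product of the three matrices sends ${\cal B}$-coordinates of v to ${\cal C}$-coordinates of $f(v)$, which is what the matrix of f relative to ${\cal B}$ and ${\cal C}$ does.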

I'll illustrate this in the next example.

Example. Define $f: \mathbb{R}^2 \to \mathbb{R}^3$ by

![$$f\left[\matrix{x \cr y \cr}\right] = \left[\matrix{x + y \cr 2x - y \cr x - y \cr}\right] = \left[\matrix{1 & 1 \cr 2 & -1 \cr 1 & -1 \cr}\right] \left[\matrix{x \cr y \cr}\right].$$](matrix-linear-trans77.png)

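The matrix above is the matrix of f relative to the standard bases of $\mathbb{R}^2$ and $\mathbb{R}^3$.

Next, consider the following bases for $\mathbb{R}^2$ and $\mathbb{R}^3$, respectively:

$${\cal B} = \left\{\left[\matrix{2 \cr 1 \cr}\right], \left[\matrix{1 \cr 1 \cr}\right]\right\},$$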

![$${\cal C} = \left\{\left[\matrix{1 \cr 1 \cr 1\cr}\right], \left[\matrix{1 \cr 2 \cr 1 \cr}\right], \left[\matrix{-1 \cr 0 \cr -2 \cr}\right]\right\}.$$](matrix-linear-trans83.png)

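I'll find the matrix $[f]_{{\cal B},{\cal C}}$ of f relative to ${\cal B}$ and ${\cal C}$. Here's how:

$$[f]_{{\cal B},{\cal C}} = [{\rm std} \to {\cal C}] \, [f]_{{\rm std},{\rm std}} \, [{\cal B} \to {\rm std}].$$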

This matrix translates vectors in $\mathbb{R}^2$ from ${\cal B}$ to the standard basis:

$$[{\cal B} \to {\rm std}] = \left[\matrix{2 & 1 \cr 1 & 1 \cr}\right].$$

This matrix translates vectors in $\mathbb{R}^3$ from ${\cal C}$ to the standard basis:

![$$[{\cal C} \to {\rm std}] = \left[\matrix{ 1 & 1 & -1 \cr 1 & 2 & 0 \cr 1 & 1 & -2 }\right].$$](matrix-linear-trans93.png)

Hence, the inverse matrix translates vectors from the standard basis to ${\cal C}$:

![$$[{\rm std} \to {\cal C}] = \left[\matrix{ 1 & 1 & -1 \cr 1 & 2 & 0 \cr 1 & 1 & -2 }\right]^{-1} = \left[\matrix{ 4 & -1 & -2 \cr -2 & 1 & 1 \cr 1 & 0 & -1 \cr}\right].$$](matrix-linear-trans95.png)

Therefore,

![$$[f]_{{\cal B},{\cal C}} = \left[\matrix{ 4 & -1 & -2 \cr -2 & 1 & 1 \cr 1 & 0 & -1 \cr}\right] \left[\matrix{ 1 & 1 \cr 2 & -1 \cr 1 & -1 \cr}\right] \left[\matrix{ 2 & 1 \cr 1 & 1 \cr}\right] = \left[\matrix{ 7 & 7 \cr -2 & -3 \cr 2 & 2 \cr}\right].\quad\halmos$$](matrix-linear-trans96.png)
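The computation in this example can be reproduced with a short Python sketch using exact rational arithmetic. The function names (`mat_mul`, `inverse`, `columns_to_matrix`, `matrix_relative_to`) are invented for this illustration. The basis ${\cal B}$ is read off from the columns of the rightmost factor in the product above, and the inverse is computed by plain Gauss-Jordan elimination, which is just one convenient way to get it.

```python
from fractions import Fraction


def mat_mul(A, B):
    """Multiply matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


def inverse(M):
    """Invert a square matrix by Gauss-Jordan elimination over the rationals.

    Assumes M is invertible; next() raises StopIteration if no pivot exists.
    """
    n = len(M)
    # Augment M with the identity matrix.
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def columns_to_matrix(vectors):
    """Use the given vectors as the columns of a matrix (list of rows)."""
    return [list(row) for row in zip(*vectors)]


def matrix_relative_to(f_std, basis_B, basis_C):
    """Compute [std -> C] [f]_{std,std} [B -> std]."""
    B_to_std = columns_to_matrix(basis_B)
    std_to_C = inverse(columns_to_matrix(basis_C))
    return mat_mul(std_to_C, mat_mul(f_std, B_to_std))


f_std = [[1, 1], [2, -1], [1, -1]]
basis_B = [[2, 1], [1, 1]]
basis_C = [[1, 1, 1], [1, 2, 1], [-1, 0, -2]]

result = matrix_relative_to(f_std, basis_B, basis_C)
print([[int(x) for x in row] for row in result])  # [[7, 7], [-2, -3], [2, 2]]
```

Using `Fraction` avoids floating-point rounding, so the output can be compared entry by entry with the hand computation.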

Example. (a) Suppose ![]() satisfies

![]()

What is ![]()?

Write ![]() as a linear combination of ![]() and ![]():

![]()

The numbers are simple enough that I could figure out the linear combination by inspection.

Apply T:

![]()

![]()

(b) ![]() is a basis for ![](). Suppose ![]() satisfies

![]()

What is ![]()?

Consider the equations for T above. T is applied to the elements of ![](), and the results are written in terms of the standard basis. Thus,

![]()

Since I'm applying T to the standard vector ![](), I have to translate this to a ![]() vector to use the T-matrix I found.

![]()

Therefore,

![]()

Hence,

![]()

Example. Suppose ![]() is given by

![]()

Let

![]()

(a) Find ![]().

![]()

(b) Find ![]().

First,

![]()

Hence,

![]()

Then

![]()

(c) Compute ![]().

This means: Apply T to the vector ![]() and write the result in terms of ![]().

![]()

Example. Here are two bases for ![]():

![]()

Suppose ![]() is defined by

![]()

(a) Find ![]().

![]()

Make a matrix using these coordinate vectors as the columns:

![]()

(b) Find ![]().

![]()

Find the translation matrices:

![]()

![]()

Therefore,

![]()

Send comments about this page to: Bruce.Ikenaga@millersville.edu.

Copyright 2012 by Bruce Ikenaga