Definition. Let V be a vector space over a field F, and let $S \subset V$. The set S is linearly independent if $v_1, \ldots, v_n \in S$, $a_1, \ldots, a_n \in F$, and

$$a_1 v_1 + \cdots + a_n v_n = 0 \quad\hbox{implies}\quad a_1 = \cdots = a_n = 0.$$

A set of vectors which is not linearly independent is linearly dependent. (I'll usually say "independent" and "dependent" for short.) Thus, a set of vectors S is dependent if there are vectors $v_1, \ldots, v_n \in S$ and numbers $a_1, \ldots, a_n \in F$, not all of which are 0, such that

$$a_1 v_1 + \cdots + a_n v_n = 0.$$

Note that S could be an infinite set of vectors.

In words, the definition says that if a linear combination of any finite set of vectors in S equals the zero vector, then all the coefficients in the linear combination must be 0. I'll refer to such a linear combination as a trivial linear combination.

On the other hand, a linear combination of vectors is nontrivial if at least one of the coefficients is nonzero. ("At least one" doesn't mean "all" --- a nontrivial linear combination can have some zero coefficients, as long as at least one is nonzero.)

Thus, we can also say that a set of vectors is independent if there is no nontrivial linear combination among finitely many of the vectors which is equal to 0. And a set of vectors is dependent if there is some nontrivial linear combination among finitely many of the vectors which is equal to 0.

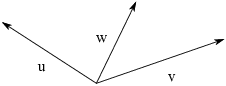

Let's see a pictorial example of a dependent set. Consider the following vectors u, v, and w in $\mathbb{R}^2$.

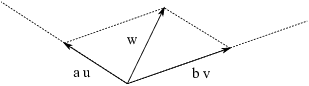

I'll show how to get a nontrivial linear combination of the vectors that is equal to the zero vector. Project w onto the lines of u and v.

The projections are multiples $a u$ of u and $b v$ of v. Since w is the diagonal of the parallelogram whose sides are $a u$ and $b v$, we have

$$w = a u + b v, \quad\hbox{so}\quad a u + b v - w = 0.$$

This is a nontrivial linear combination of u, v and w which is equal to the zero vector (the coefficient of w is $-1$), so $\{u, v, w\}$ is dependent.

In fact, it's true that any 3 vectors in $\mathbb{R}^2$ are dependent, and this pictorial example should make this reasonable. More generally, if F is a field then any n vectors in $F^m$ are dependent if $n > m$. We'll prove this below.

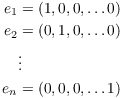

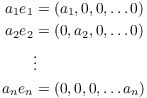

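Example. If F is a field, the standard basis vectors are

$$e_1 = (1, 0, 0, \ldots, 0), \quad e_2 = (0, 1, 0, \ldots, 0), \quad \ldots, \quad e_n = (0, 0, 0, \ldots, 1).$$

Show that they form an independent set in $F^n$.

Write

$$a_1 e_1 + a_2 e_2 + \cdots + a_n e_n = 0.$$

I have to show all the a's are 0. Now

$$a_1 e_1 = (a_1, 0, \ldots, 0), \quad a_2 e_2 = (0, a_2, \ldots, 0), \quad \ldots, \quad a_n e_n = (0, 0, \ldots, a_n).$$

So

$$a_1 e_1 + a_2 e_2 + \cdots + a_n e_n = (a_1, a_2, \ldots, a_n).$$

Since by assumption $a_1 e_1 + a_2 e_2 + \cdots + a_n e_n = 0$, I get

$$(a_1, a_2, \ldots, a_n) = (0, 0, \ldots, 0).$$

Hence, $a_1 = a_2 = \cdots = a_n = 0$, and the set is independent. $\square$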

Example. Show that any set containing the zero vector is dependent.

If $0 \in S$, then $1 \cdot 0 = 0$. The left side is a nontrivial (since $1 \ne 0$) linear combination of vectors in S (actually, of a single vector in S). The linear combination is equal to 0. Hence, S is dependent.

Notice that it doesn't matter what else is in S (if anything). $\square$

Example. Show that the vectors ![]() and ![]() are dependent in ![]().

I have to find numbers a and b, not both 0, such that

![]()

In this case, you can probably juggle numbers in your head to see that

![]()

This shows that the vectors are dependent. There are infinitely many pairs of numbers a and b that work. In examples to follow, I'll show how to find numbers systematically in cases where the arithmetic isn't so easy. $\square$

Example. Suppose u, v, w, and x are vectors in a vector space. Prove that the set $\{u - v, v - w, w - x, x - u\}$ is dependent.

Notice that in the four vectors in $\{u - v, v - w, w - x, x - u\}$, each of u, v, w, and x occurs once with a plus sign and once with a minus sign. So

$$(u - v) + (v - w) + (w - x) + (x - u) = 0.$$

This is a dependence relation, so the set is dependent. $\square$

If you can't see an "easy" linear combination of a set of vectors that equals 0, you may have to determine independence or dependence by solving a system of equations.

Example. Consider the following sets of vectors in $\mathbb{R}^3$. If the set is independent, prove it. If the set is dependent, find a nontrivial linear combination of the vectors which is equal to 0.

(a) $\{(2, 0, -3), (1, 1, 1), (1, 7, 2)\}$.

(b) $\{(1, 2, -1), (4, 1, 3), (-10, 1, -11)\}$.

(a) Write a linear combination of the vectors and set it equal to 0:

![$$a \cdot \left[\matrix{2 \cr 0 \cr -3 \cr}\right] + b \cdot \left[\matrix{1 \cr 1 \cr 1 \cr}\right] + c \cdot \left[\matrix{1 \cr 7 \cr 2 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](linear-independence42.png)

I have to determine whether this implies that $a = b = c = 0$.

Note: When I convert vectors given in "parenthesis form" to "matrix form", I'll turn the vectors into column vectors as above. This is consistent with the way I've set up systems of linear equations. Thus,

![$$(2, 0, -3) \quad\hbox{became}\quad \left[\matrix{2 \cr 0 \cr -3 \cr}\right].$$](linear-independence44.png)

The vector equation above is equivalent to the matrix equation

![$$\left[\matrix{ 2 & 1 & 1 \cr 0 & 1 & 7 \cr -3 & 1 & 2 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](linear-independence45.png)

Row reduce to solve:

![$$\left[\matrix{ 2 & 1 & 1 & 0 \cr 0 & 1 & 7 & 0 \cr -3 & 1 & 2 & 0 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 0 & 0 \cr 0 & 1 & 0 & 0 \cr 0 & 0 & 1 & 0 \cr}\right]$$](linear-independence46.png)

Note: Row operations won't change the last column of zeros, so you don't actually need to write it when you do the row reduction. I'll put it in to avoid confusion.

The last matrix gives the equations

$$a = 0, \quad b = 0, \quad c = 0.$$

Therefore, the vectors are independent. $\square$

(b) Write

![$$a \cdot \left[\matrix{1 \cr 2 \cr -1 \cr}\right] + b \cdot \left[\matrix{4 \cr 1 \cr 3 \cr}\right] + c \cdot \left[\matrix{-10 \cr 1 \cr -11 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](linear-independence48.png)

This gives the matrix equation

![$$\left[\matrix{ 1 & 4 & -10 \cr 2 & 1 & 1 \cr -1 & 3 & -11 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](linear-independence49.png)

Row reduce to solve:

![$$\left[\matrix{ 1 & 4 & -10 & 0 \cr 2 & 1 & 1 & 0 \cr -1 & 3 & -11 & 0 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 2 & 0 \cr 0 & 1 & -3 & 0 \cr 0 & 0 & 0 & 0 \cr}\right]$$](linear-independence50.png)

This gives the equations

$$a + 2c = 0, \quad b - 3c = 0.$$

Thus, $a = -2c$ and $b = 3c$. I can get a nontrivial solution by setting c to any nonzero number. I'll use $c = 1$. This gives $a = -2$ and $b = 3$. So

![$$(-2) \cdot \left[\matrix{1 \cr 2 \cr -1 \cr}\right] + 3 \cdot \left[\matrix{4 \cr 1 \cr 3 \cr}\right] + 1 \cdot \left[\matrix{-10 \cr 1 \cr -11 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](linear-independence57.png)

This is a linear dependence relation, and the vectors are dependent. $\square$
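The row reduction in parts (a) and (b) can be automated. The following is a minimal sketch, not a definitive implementation: it row reduces the matrix whose columns are the given vectors using exact rational arithmetic, and reads a dependence relation off the first parameter column if there is one. The function names `rref` and `dependence_relation` are hypothetical names chosen for this illustration.

```python
from fractions import Fraction

def rref(rows):
    """Return (row-reduced echelon form, pivot columns), using exact fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0  # index of the row where the next leading 1 will go
    for c in range(len(m[0])):
        # Look for a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot here: column c is a parameter column
        m[r], m[p] = m[p], m[r]
        lead = m[r][c]
        m[r] = [x / lead for x in m[r]]
        # Clear the rest of column c.
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def dependence_relation(vectors):
    """Return None if the vectors are independent; otherwise return
    coefficients (not all 0) of a linear combination equal to 0."""
    n = len(vectors)
    columns_as_matrix = [list(row) for row in zip(*vectors)]  # vectors become columns
    reduced, pivots = rref(columns_as_matrix)
    free = [c for c in range(n) if c not in pivots]
    if not free:
        return None
    coeffs = [Fraction(0)] * n
    coeffs[free[0]] = Fraction(1)  # set the first parameter variable to 1
    for row_index, pivot_col in enumerate(pivots):
        coeffs[pivot_col] = -reduced[row_index][free[0]]
    return coeffs

part_a = dependence_relation([(2, 0, -3), (1, 1, 1), (1, 7, 2)])
part_b = dependence_relation([(1, 2, -1), (4, 1, 3), (-10, 1, -11)])
```

With these inputs, `part_a` is `None` (the set in (a) is independent) and `part_b` holds the coefficients $-2$, $3$, $1$ found in (b). Exact fractions are used instead of floating point so that "equal to 0" is tested without roundoff.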

The same approach works for vectors in $F^n$ where F is a field other than the real numbers.

Example. Consider the set of vectors

$$\{(4, 1, 2), (3, 3, 0), (0, 1, 1)\} \quad\hbox{in}\quad \mathbb{Z}_5^3.$$

If the set is independent, prove it. If the set is dependent, find a nontrivial linear combination of the vectors which is equal to 0.

Write

![$$a \cdot \left[\matrix{4 \cr 1 \cr 2 \cr}\right] + b \cdot \left[\matrix{3 \cr 3 \cr 0 \cr}\right] + c \cdot \left[\matrix{0 \cr 1 \cr 1 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](linear-independence60.png)

This gives the matrix equation

![$$\left[\matrix{ 4 & 3 & 0 \cr 1 & 3 & 1 \cr 2 & 0 & 1 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](linear-independence61.png)

Row reduce to solve the system:

![$$\left[\matrix{ 4 & 3 & 0 & 0 \cr 1 & 3 & 1 & 0 \cr 2 & 0 & 1 & 0 \cr}\right] \matrix{\to \cr r_{1} \to 4 r_{1} \cr} \left[\matrix{ 1 & 2 & 0 & 0 \cr 1 & 3 & 1 & 0 \cr 2 & 0 & 1 & 0 \cr}\right] \matrix{\to \cr r_{3} \to r_{3} + 3 r_{1} \cr}$$](linear-independence62.png)

![$$\left[\matrix{ 1 & 2 & 0 & 0 \cr 1 & 3 & 1 & 0 \cr 0 & 1 & 1 & 0 \cr}\right] \matrix{\to \cr r_{2} \to r_{2} + 4 r_{1} \cr} \left[\matrix{ 1 & 2 & 0 & 0 \cr 0 & 1 & 1 & 0 \cr 0 & 1 & 1 & 0 \cr}\right] \matrix{\to \cr r_{1} \to r_{1} + 3 r_{2} \cr}$$](linear-independence63.png)

![$$\left[\matrix{ 1 & 0 & 3 & 0 \cr 0 & 1 & 1 & 0 \cr 0 & 1 & 1 & 0 \cr}\right] \matrix{\to \cr r_{3} \to r_{3} + 4 r_{2} \cr} \left[\matrix{ 1 & 0 & 3 & 0 \cr 0 & 1 & 1 & 0 \cr 0 & 0 & 0 & 0 \cr}\right]$$](linear-independence64.png)

This gives the equations

$$a + 3c = 0, \quad b + c = 0.$$

Thus, $a = -3c = 2c$ and $b = -c = 4c$. Set $c = 1$. This gives $a = 2$ and $b = 4$. Hence, the set is dependent, and

![$$2 \cdot \left[\matrix{4 \cr 1 \cr 2 \cr}\right] + 4 \cdot \left[\matrix{3 \cr 3 \cr 0 \cr}\right] + 1 \cdot \left[\matrix{0 \cr 1 \cr 1 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].\quad\halmos$$](linear-independence71.png)

Example. Consider the following set of vectors in $\mathbb{Z}_3^4$:

$$\{(1, 0, 1, 2), (1, 2, 2, 1), (0, 1, 2, 1)\}$$

If the set is independent, prove it. If the set is dependent, find a nontrivial linear combination of the vectors equal to 0.

Write

![$$a \cdot \left[\matrix{1 \cr 0 \cr 1 \cr 2 \cr}\right] + b \cdot \left[\matrix{1 \cr 2 \cr 2 \cr 1 \cr}\right] + c \cdot \left[\matrix{0 \cr 1 \cr 2 \cr 1 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr 0 \cr}\right].$$](linear-independence74.png)

This gives the matrix equation

![$$\left[\matrix{ 1 & 1 & 0 \cr 0 & 2 & 1 \cr 1 & 2 & 2 \cr 2 & 1 & 1 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr 0 \cr}\right].$$](linear-independence75.png)

Row reduce to solve the system:

![$$\left[\matrix{ 1 & 1 & 0 & 0 \cr 0 & 2 & 1 & 0 \cr 1 & 2 & 2 & 0 \cr 2 & 1 & 1 & 0 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 1 & 0 \cr 0 & 1 & 2 & 0 \cr 0 & 0 & 0 & 0 \cr 0 & 0 & 0 & 0 \cr}\right]$$](linear-independence76.png)

This gives the equations

$$a + c = 0, \quad b + 2c = 0.$$

Hence, $a = -c = 2c$ and $b = -2c = c$. Set $c = 1$. Then $a = 2$ and $b = 1$. Therefore, the set is dependent, and

![$$2 \cdot \left[\matrix{1 \cr 0 \cr 1 \cr 2 \cr}\right] + 1 \cdot \left[\matrix{1 \cr 2 \cr 2 \cr 1 \cr}\right] + 1 \cdot \left[\matrix{0 \cr 1 \cr 2 \cr 1 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr 0 \cr}\right].\quad\halmos$$](linear-independence83.png)
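The two finite-field row reductions above can be checked by machine. Here is a rough sketch, assuming the fields are $\mathbb{Z}_5$ and $\mathbb{Z}_3$ (which is what the row operations and the final relations are consistent with). The names `rref_mod` and `combination_mod` are hypothetical, and the three-argument `pow` with exponent $-1$ requires Python 3.8 or later.

```python
def rref_mod(rows, p):
    """Row reduce an integer matrix over Z_p, where p is prime.
    Returns (row-reduced echelon form, pivot columns)."""
    m = [[x % p for x in row] for row in rows]
    pivots = []
    r = 0
    for c in range(len(m[0])):
        pr = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pr is None:
            continue
        m[r], m[pr] = m[pr], m[r]
        inv = pow(m[r][c], -1, p)  # multiplicative inverse mod p
        m[r] = [(x * inv) % p for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c]
                m[i] = [(a - factor * b) % p for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def combination_mod(coeffs, vectors, p):
    """Compute the linear combination sum(coeffs[i] * vectors[i]) over Z_p."""
    size = len(vectors[0])
    return [sum(a * v[k] for a, v in zip(coeffs, vectors)) % p for k in range(size)]

# Matrices whose columns are the vectors from the two examples.
reduced_5, pivots_5 = rref_mod([[4, 3, 0], [1, 3, 1], [2, 0, 1]], 5)
reduced_3, pivots_3 = rref_mod([[1, 1, 0], [0, 2, 1], [1, 2, 2], [2, 1, 1]], 3)

# The dependence relations found in the two examples.
check_5 = combination_mod([2, 4, 1], [(4, 1, 2), (3, 3, 0), (0, 1, 1)], 5)
check_3 = combination_mod([2, 1, 1], [(1, 0, 1, 2), (1, 2, 2, 1), (0, 1, 2, 1)], 3)
```

Both `check_5` and `check_3` come out as zero vectors, and the reduced matrices match the ones shown in the examples. The only change from row reduction over the reals is that division is replaced by multiplication by an inverse mod p.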

To summarize, to determine whether vectors $v_1$, $v_2$, ..., $v_n$ in a vector space V are independent, I try to solve

$$a_1 v_1 + a_2 v_2 + \cdots + a_n v_n = 0.$$

If the only solution is $a_1 = a_2 = \cdots = a_n = 0$, then the vectors are independent; otherwise, they are dependent.

It's important to understand this general setup, and not just memorize the special case of vectors in $F^n$, as shown in the last few examples. Remember that vectors don't have to look like things like "![]()" ("numbers in slots"). Consider the next example, for instance.

Example. ![]() is a vector space over the reals. Show that the set ![]() is independent.

Suppose

![]()

That is,

![]()

Two polynomials are equal if and only if their corresponding coefficients are equal. Hence, ![](). Therefore, ![]() is independent. $\square$

In some cases, you can tell by inspection that a set is dependent. I noted earlier that a set containing the zero vector must be dependent. Here's another easy case.

Proposition. If $n > m$, a set of n vectors in $F^m$ is dependent.

Proof. Suppose $v_1, v_2, \ldots, v_n$ are n vectors in $F^m$, and $n > m$. Write

$$a_1 v_1 + a_2 v_2 + \cdots + a_n v_n = 0.$$

This gives the matrix equation

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](linear-independence103.png)

To solve, I'd row reduce the matrix

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow & 0 \cr v_1 & v_2 & & v_n & \vdots \cr \downarrow & \downarrow & & \downarrow & 0 \cr}\right]$$](linear-independence104.png)

Note that this matrix has m rows and $n + 1$ columns, and $n > m$.

The row-reduced echelon form can have at most one leading coefficient in each row, so there are at most m leading coefficients. These correspond to the main variables in the solution. Since there are n variables and $n > m$, there must be some parameter variables. By setting any parameter variables equal to nonzero numbers, I get a nontrivial solution for $a_1$, $a_2$, ..., $a_n$. This implies that $\{v_1, v_2, \ldots, v_n\}$ is dependent. $\square$

Example. Is the following set of vectors in $\mathbb{R}^2$ independent or dependent?

![]()

Any set of three (or more) vectors in $\mathbb{R}^2$ is dependent. Notice that we know this by just counting the number of vectors. To answer the given question, we don't actually have to give a nontrivial linear combination of the vectors that's equal to 0. $\square$

Proposition. Let $v_1, v_2, \ldots, v_n$ be vectors in $F^n$, where F is a field. $\{v_1, v_2, \ldots, v_n\}$ is independent if and only if the matrix constructed using the vectors as columns is invertible:

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right].$$](linear-independence118.png)

Proof. Suppose the set is independent. Consider the system

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](linear-independence119.png)

Multiplying out the left side, this gives

$$a_1 v_1 + a_2 v_2 + \cdots + a_n v_n = 0.$$

By independence, $a_1 = a_2 = \cdots = a_n = 0$. Thus, the system above has only the zero vector 0 as a solution. An earlier theorem on invertibility shows that this means the matrix of v's is invertible.

Conversely, suppose the following matrix is invertible:

![$$A = \left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right].$$](linear-independence122.png)

Let

$$a_1 v_1 + a_2 v_2 + \cdots + a_n v_n = 0.$$

Write this as a matrix equation and solve it:

![$$\eqalign{ \left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] & = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right] \cr A \cdot \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] & = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right] \cr A^{-1} A \cdot \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] & = A^{-1} \cdot \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right] \cr \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] & = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right] \cr}$$](linear-independence124.png)

This gives $a_1 = a_2 = \cdots = a_n = 0$. Hence, the v's are independent. $\square$

Note that this proposition requires that you have n vectors in $F^n$: the number of vectors must match the dimension of the space.

The result can also be stated in contrapositive form: The set of vectors is dependent if and only if the matrix having the vectors as columns is not invertible. I'll use this form in the next example.

Example. Consider the following set of vectors in $\mathbb{R}^3$:

![$$\left\{ \left[\matrix{x - 8 \cr 0 \cr 0 \cr}\right], \left[\matrix{-7 \cr x - 1 \cr 0 \cr}\right], \left[\matrix{13 \cr 5 \cr 30 \cr}\right]\right\}.$$](linear-independence128.png)

For what values of x is the set dependent?

I have 3 vectors in $\mathbb{R}^3$, so the previous result applies.

Construct the matrix having the vectors as columns:

![$$A = \left[\matrix{ x - 8 & -7 & 13 \cr 0 & x - 1 & 5 \cr 0 & 0 & 30 \cr}\right].$$](linear-independence130.png)

The set is dependent when A is not invertible, and A is not invertible when its determinant is equal to 0. Now

$$\det A = (x - 8)(x - 1)(30) = 30 (x - 8)(x - 1),$$

since A is upper triangular and its determinant is the product of the diagonal entries.

Thus, $\det A = 0$ for $x = 8$ and $x = 1$. For those values of x, the original set is dependent. $\square$
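As a quick sanity check on this example, the determinant can be evaluated directly for a range of x values. This is only an illustrative sketch: the helper names `det` and `matrix_for` are hypothetical, and scanning integers from $-20$ to $20$ is an arbitrary choice that would miss non-integer roots in other problems.

```python
def det(m):
    """Determinant by cofactor expansion along the first row.
    Fine for small matrices; too slow for large ones."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

def matrix_for(x):
    """The matrix A from the example, with the three vectors as columns."""
    return [[x - 8, -7, 13],
            [0, x - 1, 5],
            [0, 0, 30]]

# Integer values of x in a small window for which A is not invertible.
dependent_values = [x for x in range(-20, 21) if det(matrix_for(x)) == 0]
```

Here `dependent_values` comes out as `[1, 8]`, which agrees with the two values of x for which the set is dependent.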

The next proposition says that an independent set can be thought of as a set without "redundancy", in the sense that you can't build any one of the vectors out of the others.

Proposition. Let V be a vector space over a field F, and let $S \subset V$. S is dependent if and only if some $v \in S$ can be expressed as a linear combination of vectors in S other than v.

Proof. Suppose $v \in S$ can be written as a linear combination of vectors in S other than v:

$$v = a_1 v_1 + \cdots + a_n v_n.$$

Here $v_1, \ldots, v_n \in S$ (where $v_i \ne v$ for all i) and $a_1, \ldots, a_n \in F$.

Then

$$a_1 v_1 + \cdots + a_n v_n - 1 \cdot v = 0.$$

This is a nontrivial linear relation among elements of S, since the coefficient of v is $-1 \ne 0$. Hence, S is dependent.

Conversely, suppose S is dependent. Then there are elements $a_1, \ldots, a_n \in F$ (not all 0) and $v_1, \ldots, v_n \in S$ such that

$$a_1 v_1 + \cdots + a_n v_n = 0.$$

Since not all the a's are 0, at least one is nonzero. There's no harm in assuming that $a_1 \ne 0$. (If another a was nonzero instead, just relabel the a's and v's so $a_1 \ne 0$ and start again.)

Since $a_1 \ne 0$, its inverse $a_1^{-1}$ is defined. So

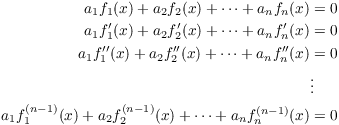

Thus, I've expressed $v_1$ as a linear combination of other vectors in S. $\square$
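The second half of the proof is really a recipe: find a nonzero coefficient, divide by it, and move everything else to the other side. Here is a small sketch of that recipe, applied to the dependence relation $(-2) \cdot (1, 2, -1) + 3 \cdot (4, 1, 3) + 1 \cdot (-10, 1, -11) = 0$ from the earlier example in $\mathbb{R}^3$. The function name `solve_for_one_vector` is hypothetical.

```python
from fractions import Fraction

def solve_for_one_vector(coeffs):
    """Given coefficients (not all 0) with sum(coeffs[j] * v_j) = 0,
    return (i, weights) such that v_i = sum(weights[j] * v_j),
    where weights[i] is 0 so v_i itself is not used."""
    i = next(k for k, a in enumerate(coeffs) if a != 0)  # first nonzero coefficient
    inverse = 1 / Fraction(coeffs[i])
    weights = [Fraction(0) if j == i else -inverse * a
               for j, a in enumerate(coeffs)]
    return i, weights

vectors = [(1, 2, -1), (4, 1, 3), (-10, 1, -11)]
relation = [-2, 3, 1]

i, weights = solve_for_one_vector(relation)

# Rebuild vectors[i] from the other vectors using the weights.
rebuilt = [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(3)]
```

In this case `i` is 0 and `rebuilt` equals $(1, 2, -1)$, so the first vector is $\frac{3}{2}$ times the second plus $\frac{1}{2}$ times the third. Picking the first nonzero coefficient plays the role of "relabeling so that $a_1 \ne 0$" in the proof.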

Independence of sets of functions

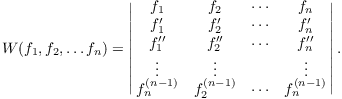

In some cases, we can use a determinant to tell that a finite set of functions is independent. The determinant is called the Wronskian, and its rows are the successive derivatives of the original functions.

Definition. Let $\{f_1, f_2, \ldots, f_n\}$ be a set of functions $\mathbb{R} \to \mathbb{R}$ which are differentiable $n - 1$ times. The Wronskian is

$$W(f_1, f_2, \ldots, f_n)(x) = \det \left[\matrix{ f_1(x) & f_2(x) & \cdots & f_n(x) \cr f_1'(x) & f_2'(x) & \cdots & f_n'(x) \cr f_1''(x) & f_2''(x) & \cdots & f_n''(x) \cr \vdots & \vdots & & \vdots \cr f_1^{(n - 1)}(x) & f_2^{(n - 1)}(x) & \cdots & f_n^{(n - 1)}(x) \cr}\right].$$

Naturally, this requires that the functions be sufficiently differentiable.

Theorem. Let $C^{n - 1}(\mathbb{R})$ be the real vector space of functions $\mathbb{R} \to \mathbb{R}$ which are differentiable $n - 1$ times. Let $S = \{f_1, f_2, \ldots, f_n\}$ be a subset of $C^{n - 1}(\mathbb{R})$. If $W(f_1, f_2, \ldots, f_n)(c) \ne 0$ at some point $c \in \mathbb{R}$, then S is independent.

Thus, if you can find some value of x for which the Wronskian is nonzero, the functions are independent.

The converse is false, and we'll give a counterexample to the converse below. The converse does hold with additional conditions: For example, if the functions are solutions to a linear differential equation.

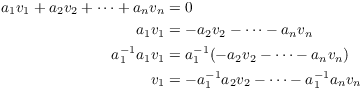

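Proof. Let

$$a_1 f_1(x) + a_2 f_2(x) + \cdots + a_n f_n(x) = 0.$$

I have to show all the a's are 0.

This equation is an identity in x, so I may differentiate it repeatedly to get n equations:

$$\eqalign{ a_1 f_1(x) + a_2 f_2(x) + \cdots + a_n f_n(x) & = 0 \cr a_1 f_1'(x) + a_2 f_2'(x) + \cdots + a_n f_n'(x) & = 0 \cr & \vdots \cr a_1 f_1^{(n - 1)}(x) + a_2 f_2^{(n - 1)}(x) + \cdots + a_n f_n^{(n - 1)}(x) & = 0 \cr}$$

I can write this in matrix form: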

![$$\left[\matrix{ f_1(x) & f_2(x) & \cdots & f_n(x) \cr f_1'(x) & f_2'(x) & \cdots & f_n'(x) \cr f_1''(x) & f_2''(x) & \cdots & f_n''(x) \cr \vdots & \vdots & & \vdots \cr f_1^{(n - 1)}(x) & f_2^{(n - 1)}(x) & \cdots & f_n^{(n - 1)}(x) \cr}\right] \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](linear-independence165.png)

Plug in $x = c$:

![$$\left[\matrix{ f_1(c) & f_2(c) & \cdots & f_n(c) \cr f_1'(c) & f_2'(c) & \cdots & f_n'(c) \cr f_1''(c) & f_2''(c) & \cdots & f_n''(c) \cr \vdots & \vdots & & \vdots \cr f_1^{(n - 1)}(c) & f_2^{(n - 1)}(c) & \cdots & f_n^{(n - 1)}(c) \cr}\right] \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](linear-independence167.png)

Let

![$$A = \left[\matrix{ f_1(c) & f_2(c) & \cdots & f_n(c) \cr f_1'(c) & f_2'(c) & \cdots & f_n'(c) \cr f_1''(c) & f_2''(c) & \cdots & f_n''(c) \cr \vdots & \vdots & & \vdots \cr f_1^{(n - 1)}(c) & f_2^{(n - 1)}(c) & \cdots & f_n^{(n - 1)}(c) \cr}\right].$$](linear-independence168.png)

The determinant of this matrix is the Wronskian $W(f_1, f_2, \ldots, f_n)(c)$, which by assumption is nonzero. Since the determinant is nonzero, the matrix is invertible. So

![$$\eqalign{ A \cdot \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] & = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right] \cr A^{-1} A \cdot \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] & = A^{-1} \cdot \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right] \cr \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] & = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right] \cr}$$](linear-independence170.png)

Since $a_1 = a_2 = \cdots = a_n = 0$, the functions are independent. $\square$
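To see the theorem in action on a case where everything can be computed exactly, here is a small sketch that evaluates the Wronskian of polynomials at a point. The functions $1$, $x$, $x^2$ are my own choice for illustration (they are not taken from the examples in these notes), and the helper names `derivative`, `evaluate`, `det`, and `wronskian_at` are hypothetical. A polynomial is stored as its list of coefficients, constant term first.

```python
def derivative(p):
    """Derivative of the polynomial with coefficients [c0, c1, c2, ...]."""
    return [k * c for k, c in enumerate(p)][1:] or [0]

def evaluate(p, x):
    """Evaluate the polynomial at x using Horner's rule."""
    result = 0
    for c in reversed(p):
        result = result * x + c
    return result

def det(m):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

def wronskian_at(polys, c):
    """Wronskian of the given polynomials at x = c.
    Row k of the matrix holds the k-th derivatives evaluated at c."""
    rows = []
    current = list(polys)
    for _ in range(len(polys)):
        rows.append([evaluate(p, c) for p in current])
        current = [derivative(p) for p in current]
    return det(rows)

w_independent = wronskian_at([[1], [0, 1], [0, 0, 1]], 1)  # 1, x, x^2 at x = 1
w_dependent = wronskian_at([[0, 1], [0, 2]], 3)            # x, 2x at x = 3
```

For $1$, $x$, $x^2$ the matrix at any point c is upper triangular with diagonal $1, 1, 2$, so `w_independent` is 2 and the theorem says the three functions are independent. For the dependent pair $x$, $2x$ the value is 0, as it must be, since a nonzero value would contradict the theorem.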

Example. $C^2(\mathbb{R})$ denotes the vector space over $\mathbb{R}$ consisting of twice-differentiable functions $\mathbb{R} \to \mathbb{R}$. Demonstrate that the set of functions ![]() is independent in $C^2(\mathbb{R})$.

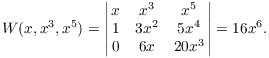

Compute the Wronskian:

I can find values of x for which the Wronskian is nonzero: for example, if ![](), then ![](). Hence, ![]() is independent. $\square$

The next example shows that the converse of the last theorem is false: You can have a set of independent functions whose Wronskian is always 0 (so there's no point where the Wronskian is nonzero).

Example. $C^1(\mathbb{R})$ denotes the vector space over $\mathbb{R}$ consisting of differentiable functions $\mathbb{R} \to \mathbb{R}$. Let

![]()

Show that $\{f, g\}$ is independent in $C^1(\mathbb{R})$, but $W(f, g)(x) = 0$ for all $x \in \mathbb{R}$.

Note: You can check that g is differentiable at 0, and ![]().

For independence, suppose that $a, b \in \mathbb{R}$ and $a f(x) + b g(x) = 0$ for all $x \in \mathbb{R}$. Plugging in ![](), I get

![]()

Plugging in ![]() (and noting that ![]()), I get

![]()

Adding ![]() and ![]() gives ![](), so ![](). Plugging ![]() into ![]() gives ![](). This proves that $\{f, g\}$ is independent.

The Wronskian is

![]()

I'll take cases.

Since ![]() and ![](), I have ![]().

If ![](), I have ![]() and ![](), so

![]()

If ![](), I have ![]() and ![](), so

![]()

This shows that $W(f, g)(x) = 0$ for all $x \in \mathbb{R}$. $\square$
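The phenomenon in this example can be checked numerically. The sketch below assumes, purely for illustration, the pair $f(x) = x^2$ and $g(x) = x |x|$ (so $g(x) = x^2$ for $x \ge 0$ and $g(x) = -x^2$ for $x < 0$). This is a standard pair with the properties described, but it is an assumption and may differ from the functions used above. The derivatives are entered by hand, with $g'(x) = 2 |x|$.

```python
def f(x):
    return x * x

def g(x):
    return x * abs(x)  # x^2 for x >= 0, -x^2 for x < 0

def f_prime(x):
    return 2 * x

def g_prime(x):
    return 2 * abs(x)  # also correct at x = 0, where g'(0) = 0

def wronskian(x):
    """W(f, g)(x) = f(x) g'(x) - f'(x) g(x)."""
    return f(x) * g_prime(x) - f_prime(x) * g(x)

# The Wronskian at integer sample points on both sides of 0.
wronskian_values = [wronskian(x) for x in range(-5, 6)]

# Independence: a f + b g = 0 at x = 1 and x = -1 gives the system
#   a f(1)  + b g(1)  = 0
#   a f(-1) + b g(-1) = 0
# which has only the solution a = b = 0 when this determinant is nonzero.
system_det = f(1) * g(-1) - g(1) * f(-1)
```

Every entry of `wronskian_values` is 0, while `system_det` is $-2$, so the two sample points already force $a = b = 0$. Sampling only suggests that the Wronskian vanishes everywhere; the case analysis is what proves it.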

Copyright 2022 by Bruce Ikenaga