Suppose we have a function of several variables $f: \mathbb{R}^n \to \mathbb{R}^m$, that is, a function with possibly multiple inputs and multiple outputs. What would it mean to be the derivative of such a function?

We've seen that the partial derivatives of a function $f(x, y)$ give the rates of change of f in the x- and y-directions. This is a partial answer to our question. However, what do we do if we have a function like

$$f(x, y, z) = (2 x y - 3 z, y z - 3 x z)?$$

A naive approach might be to write the function in component form:

$$u = 2 x y - 3 z, \qquad v = y z - 3 x z.$$

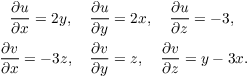

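Now we can take partial derivatives:

$$\frac{\partial u}{\partial x} = 2 y, \quad \frac{\partial u}{\partial y} = 2 x, \quad \frac{\partial u}{\partial z} = -3,$$

$$\frac{\partial v}{\partial x} = -3 z, \quad \frac{\partial v}{\partial y} = z, \quad \frac{\partial v}{\partial z} = y - 3 x.$$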

Well, we have 6 partial derivatives --- do we want to call all of these (collectively) the "derivative" of f? This seems somewhat ad hoc and unsatisfying.

You might think of arranging these partial derivatives in a matrix:

![$$\left[\matrix{ \pder u x = 2 y & \pder u y = 2 x & \pder u z = -3 \cr \pder v x = -3 z & \pder v y = z & \pder v z = y - 3 x \cr}\right].$$](derivatives6.png)

Now we have a single entity, and it is reasonable to say that it contains information about the rate(s) of change of f. However, this still seems a bit arbitrary. For example, why put the u derivatives in the first row (as opposed to, say, the first column)?

Actually, the matrix we've written down above will turn out to be the derivative of f. In order to give a rationale for this, we have to think about derivatives in a conceptual way.

You probably encountered the derivative of a function $y = f(x)$ as the slope of the tangent line to a curve. What is the tangent line, in a conceptual sense? It's the best "flat" approximation to the curve near the point of tangency. That is, near the point of tangency, the tangent and the curve are very close.

We could generalize this to a function $z = f(x, y)$ by considering the tangent plane to the graph, which is a surface in three dimensions. And though it's difficult to picture, we can imagine continuing this procedure to higher dimensions. A line in the plane looks like this:

$$y = a x + b.$$

A plane in space looks like this:

$$z = a x + b y + c.$$

With more input variables for f, we just keep adding more variables to the equation.

These equations are linear, which means roughly that the variables occur only to the first power, multiplied by constants. Equations of this kind describe things that are "flat".

With multiple inputs and multiple outputs, an equation for a "flat" thing involves a matrix. For example, here's a linear function with 3 inputs and 2 outputs:

![$$\left[\matrix{u \cr v \cr}\right] = \left[\matrix{1 & 3 & -8 \cr 4 & 0 & 3 \cr}\right] \left[\matrix{x \cr y \cr z \cr}\right].$$](derivatives11.png)

Written out, this would be

$$u = x + 3 y - 8 z, \qquad v = 4 x + 3 z.$$

Here's the general definition.

Definition. A function $f: \mathbb{R}^n \to \mathbb{R}^m$ is a linear transformation if:

(a) $f(x + y) = f(x) + f(y)$ for all $x, y \in \mathbb{R}^n$.

(b) $f(k x) = k f(x)$ for all $k \in \mathbb{R}$ and $x \in \mathbb{R}^n$.
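As a quick sanity check, here is a minimal Python sketch that tests properties (a) and (b) for the matrix example above (3 inputs, 2 outputs). The test vectors `x`, `y` and the scalar `k` are arbitrary choices made for illustration; checking a few values does not prove linearity, it only illustrates the definition.

```python
# Matrix of the linear function from the example: (u, v) = A (x, y, z).
A = [[1, 3, -8],
     [4, 0, 3]]

def f(x):
    """Apply the matrix A to a vector x given as a list of 3 numbers."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def add(x, y):
    """Componentwise sum of two vectors."""
    return [xi + yi for xi, yi in zip(x, y)]

def scale(k, x):
    """Multiply every component of x by the scalar k."""
    return [k * xi for xi in x]

# Arbitrary test data (assumptions, not from the text).
x = [1, 2, 3]
y = [-4, 0, 5]
k = 7

additive = f(add(x, y)) == add(f(x), f(y))        # property (a)
homogeneous = f(scale(k, x)) == scale(k, f(x))    # property (b)
print(additive, homogeneous)
```

Integer inputs are used so that the comparisons are exact rather than subject to floating-point rounding.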

You've seen things which have these properties --- derivatives and antiderivatives, for example.

A linear transformation is a function that behaves like the linear functions you know: $f(x) = a x$ or $f(x, y) = a x + b y$ (with a and b constants), for instance. The derivative of a function will be the linear transformation that best approximates the function. Here's the definition.

Definition. Let $f: U \to \mathbb{R}^m$ be a function, where U is an open set in $\mathbb{R}^n$, and let $x \in U$. The derivative of f at x is a linear transformation $Df: \mathbb{R}^n \to \mathbb{R}^m$ which satisfies

$$\lim_{h \to 0} \frac{\|f(x + h) - f(x) - Df(h)\|}{\|h\|} = 0.$$

People sometimes write "$Df(x)$" or "$Df_x$" to indicate the dependence of $Df$ on x. It is also common to see "$f'(x)$" instead of "$Df(x)$".

Notice that this resembles our old difference quotient definition for derivatives:

$$f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h}.$$
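To make the resemblance explicit, here is a short derivation for the one-variable case only. It rearranges the familiar difference quotient into the same shape as the definition above, assuming $f'(x)$ exists:

$$f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h} \quad\Longleftrightarrow\quad \lim_{h \to 0} \frac{f(x + h) - f(x) - f'(x) h}{h} = 0 \quad\Longleftrightarrow\quad \lim_{h \to 0} \frac{|f(x + h) - f(x) - f'(x) h|}{|h|} = 0.$$

Read this way, the linear transformation for a one-variable function is $Df(h) = f'(x) \cdot h$: multiplication by the number $f'(x)$.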

Why do we have $\|\cdot\|$'s in the equation? The point x and the increment h are elements of $\mathbb{R}^n$, so h is really $(h_1, h_2, \ldots, h_n)$. And f is a function which produces m numbers as its output, so $f(x + h)$, $f(x)$, and $Df(h)$ are elements of $\mathbb{R}^m$, that is, m-dimensional vectors. We can't take the quotient of two vectors, but we can take the quotient of their lengths.
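The quotient of lengths can be watched numerically. The sketch below assumes the introductory example is $f(x, y, z) = (2xy - 3z, yz - 3xz)$, which is consistent with the matrix of partial derivatives shown earlier. The base point `p` and the direction are arbitrary choices for illustration.

```python
import math

def f(p):
    """Assumed introductory example: f(x, y, z) = (2xy - 3z, yz - 3xz)."""
    x, y, z = p
    return [2 * x * y - 3 * z, y * z - 3 * x * z]

def Df(p, h):
    """Apply the matrix of partial derivatives at p to the vector h."""
    x, y, z = p
    J = [[2 * y, 2 * x, -3],
         [-3 * z, z, y - 3 * x]]
    return [sum(a * b for a, b in zip(row, h)) for row in J]

def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(c * c for c in v))

def quotient(p, h):
    """Compute |f(p + h) - f(p) - Df(h)| / |h| from the definition."""
    shifted = f([a + b for a, b in zip(p, h)])
    remainder = [a - b - c for a, b, c in zip(shifted, f(p), Df(p, h))]
    return norm(remainder) / norm(h)

p = [1.0, 2.0, -1.0]          # arbitrary base point (assumption)
direction = [1.0, -2.0, 0.5]  # arbitrary direction (assumption)

# Shrink h along the chosen direction and record the quotient each time.
ratios = [quotient(p, [t * d for d in direction]) for t in (1e-1, 1e-2, 1e-3)]
print(ratios)
```

The printed values shrink roughly in proportion to the size of h, which is what the limit in the definition requires. Checking one direction is only an illustration; the definition demands this for every way h can approach 0.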

As with the difference quotient definition for the derivative $f'(x)$ of a function $f: \mathbb{R} \to \mathbb{R}$, the definition we've given is not so great for actually computing derivatives. The following result makes it a lot easier.

Theorem. Suppose $f: U \to \mathbb{R}^m$ is differentiable at $x \in U$, where U is an open set in $\mathbb{R}^n$. Write $x = (x_1, \ldots, x_n)$ and $f = (f_1, \ldots, f_m)$, and let $h = (h_1, \ldots, h_n)$. Then

![$$Df(h) = \left[\matrix{ \pder {f_1} {x_1} & \pder {f_1} {x_2} & \cdots & \pder {f_1} {x_n} \cr \pder {f_2} {x_1} & \pder {f_2} {x_2} & \cdots & \pder {f_2} {x_n} \cr & & \vdots & \cr \pder {f_m} {x_1} & \pder {f_m} {x_2} & \cdots & \pder {f_m} {x_n} \cr}\right] \cdot h.$$](derivatives47.png)

In other words, $Df$ is represented by the matrix of partial derivatives of the components of f with respect to the input variables.

The converse isn't true. If the partial derivatives of a function exist, the function might fail to be differentiable (in the sense that the limit above might be undefined). On the other hand, we have the following result.
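A standard counterexample (not taken from the text above) is $f(x, y) = \dfrac{xy}{x^2 + y^2}$ with $f(0, 0) = 0$. The sketch below is a numerical illustration, not a proof: both partial derivatives at the origin come out as 0, yet the quotient from the definition blows up along the diagonal.

```python
import math

def f(x, y):
    """f(x, y) = xy / (x^2 + y^2), extended by f(0, 0) = 0."""
    if x == 0 and y == 0:
        return 0.0
    return x * y / (x * x + y * y)

steps = (1e-1, 1e-3, 1e-5)

# f vanishes on both axes, so the difference quotients for the partial
# derivatives at (0, 0) are all 0. The only candidate for Df is the zero map.
fx = [(f(t, 0.0) - f(0.0, 0.0)) / t for t in steps]
fy = [(f(0.0, t) - f(0.0, 0.0)) / t for t in steps]

# Quotient from the definition with Df = 0, approaching along h = (t, t).
# On the diagonal f(t, t) = 1/2, so the quotient is 0.5 / (t * sqrt(2)).
quot = [abs(f(t, t) - f(0.0, 0.0)) / math.hypot(t, t) for t in steps]
print(fx, fy, quot)
```

Since the quotient grows without bound instead of tending to 0, no linear transformation can satisfy the definition at the origin, even though both partial derivatives exist there.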

Theorem. Suppose $f: U \to \mathbb{R}^m$, where U is an open set in $\mathbb{R}^n$. Write $x = (x_1, \ldots, x_n)$ and $f = (f_1, \ldots, f_m)$. If the partial derivatives $\dfrac{\partial f_i}{\partial x_j}$ exist near $c \in U$ and are continuous at c for $i = 1, \ldots, m$ and $j = 1, \ldots, n$, then f is differentiable at c.

In this case, by the preceding theorem, $Df$ is represented by the matrix of partial derivatives.

Remark. A function with continuous partial derivatives is called continuously differentiable.

Example. Compute $Df$ for

![]()

Since f has 3 inputs and 2 outputs, the matrix for $Df$ will be $2 \times 3$:

![]()

Example. Compute $Df$ for

$$f(s, t) = (s^2 + t^2, 3 s t, s^3 t^3).$$

We have 2 inputs and 3 outputs, so $Df$ will be a $3 \times 2$ matrix:

![$$Df =\left[\matrix{ 2 s & 2 t \cr 3 t & 3 s \cr 3 s^2 t^3 & 3 s^3 t^2 \cr}\right].\quad\halmos$$](derivatives66.png)
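A matrix like this can be checked numerically. The sketch below assumes $f(s, t) = (s^2 + t^2, 3st, s^3 t^3)$, a function chosen because its partial derivatives reproduce the matrix above. The helper `jacobian_fd` is a hypothetical name for a plain central-difference approximation, and the evaluation point is arbitrary.

```python
def f(p):
    """Assumed example function: f(s, t) = (s^2 + t^2, 3st, s^3 t^3)."""
    s, t = p
    return [s**2 + t**2, 3 * s * t, s**3 * t**3]

def jacobian_exact(p):
    """The matrix of partial derivatives from the example, evaluated at p."""
    s, t = p
    return [[2 * s, 2 * t],
            [3 * t, 3 * s],
            [3 * s**2 * t**3, 3 * s**3 * t**2]]

def jacobian_fd(func, p, eps=1e-6):
    """Approximate the matrix of partial derivatives by central differences."""
    m, n = len(func(p)), len(p)
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        plus = list(p)
        minus = list(p)
        plus[j] += eps
        minus[j] -= eps
        f_plus, f_minus = func(plus), func(minus)
        for i in range(m):
            # Row i is the output component, column j is the input variable.
            J[i][j] = (f_plus[i] - f_minus[i]) / (2 * eps)
    return J

p = [1.5, -0.5]  # arbitrary evaluation point (assumption)
approx = jacobian_fd(f, p)
exact = jacobian_exact(p)
max_err = max(abs(a - e)
              for row_a, row_e in zip(approx, exact)
              for a, e in zip(row_a, row_e))
print(max_err)
```

Note how the layout mirrors the theorem: each row belongs to one output component and each column to one input variable, which is why 2 inputs and 3 outputs give a $3 \times 2$ matrix.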

Example. Compute $Df$ for

![]()

![]()

You can see this is the ordinary derivative of a function of 1 variable. We drop the matrix brackets and just write $f'(x)$, or $\dfrac{df}{dx}$, like usual.

Definition. Let $f: U \to \mathbb{R}$, where $U \subset \mathbb{R}^n$. The derivative $Df$ is called the gradient of f, and is denoted $\nabla f$.

Example. Find the gradient (the derivative) of

![]()

![]()

Notice that the gradient is a row vector, that is, a $1 \times n$ matrix, where n is the number of input variables.

Example. Find the gradient (the derivative) of

![]()

![]()

Since you'd expect that the best linear approximation to a linear function is the linear function itself, and since the derivative of f is the best linear approximation to f, you'd expect the derivative of a linear function to be the linear function.

Proposition. Let $f: \mathbb{R}^n \to \mathbb{R}^m$ be a linear function. Then f is differentiable, and $Df = f$.

Proof. Since f is linear, $f(x + h) = f(x) + f(h)$. Therefore,

$$\lim_{h \to 0} \frac{\|f(x + h) - f(x) - f(h)\|}{\|h\|} = \lim_{h \to 0} \frac{\|0\|}{\|h\|} = 0.$$

This shows that $Df = f$.

Corollary. (a) The function $s: \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}^n$ given by $s(x, y) = x + y$ is differentiable.

Note: $\mathbb{R}^n \times \mathbb{R}^n$ consists of pairs $(x, y)$ where $x, y \in \mathbb{R}^n$.

(b) For $k \in \mathbb{R}$, the function $p: \mathbb{R}^n \to \mathbb{R}^n$ given by $p(x) = k x$ is differentiable.

Proof. Both s and p are linear functions, so (a) and (b) follow from the proposition.

Example. The following function $f: \mathbb{R}^2 \to \mathbb{R}^3$ is linear:

$$f(x, y) = (2 x + 3 y, 5 x - y, 7 x + 10 y).$$

Verify that it's equal to its derivative.

Computing the partial derivatives directly,

![$$Df = \left[\matrix{ 2 & 3 \cr 5 & -1 \cr 7 & 10 \cr}\right].$$](derivatives96.png)

Writing f as a matrix multiplication, I have

![$$f\left(\left[\matrix{x \cr y \cr}\right]\right) = \left[\matrix{ 2 & 3 \cr 5 & -1 \cr 7 & 10 \cr}\right] \left[\matrix{x \cr y \cr}\right].$$](derivatives97.png)

You can see that the matrices are the same.
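The same verification can be sketched in Python, assuming the example's function is $f(x, y) = (2x + 3y, 5x - y, 7x + 10y)$, which is what the matrix above encodes. The vectors `x` and `h` are arbitrary integer test values. The sketch also checks the key step of the proposition's proof: for a linear function, $f(x + h) - f(x) - f(h)$ is exactly zero.

```python
# Matrix of partial derivatives from the example.
M = [[2, 3],
     [5, -1],
     [7, 10]]

def f(p):
    """Assumed example function f(x, y) = (2x + 3y, 5x - y, 7x + 10y)."""
    x, y = p
    return [2 * x + 3 * y, 5 * x - y, 7 * x + 10 * y]

def apply(matrix, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in matrix]

x = [4, -3]  # arbitrary test point (assumption)
h = [2, 5]   # arbitrary increment (assumption)
x_plus_h = [a + b for a, b in zip(x, h)]

# f agrees with multiplication by its derivative matrix.
same_as_matrix = f(x) == apply(M, x)

# Numerator of the limit in the proof: it vanishes identically for linear f.
remainder = [a - b - c for a, b, c in zip(f(x_plus_h), f(x), f(h))]
print(same_as_matrix, remainder)
```

Because the remainder is zero for every h, the quotient in the definition is identically 0, so no limiting argument is needed in the linear case.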

Here are some other standard and unsurprising results about derivatives. You can prove them using the preceding corollary and the Chain Rule, which I'll discuss later.

Theorem. Let $f: U \to \mathbb{R}^m$, let $g: U \to \mathbb{R}^m$, and let $k \in \mathbb{R}$, where U is an open set in $\mathbb{R}^n$ containing c. Suppose f and g are differentiable at c. Then:

(a) $f + g$ is differentiable at c, and

$$D(f + g)(c) = Df(c) + Dg(c).$$

(b) $k f$ is differentiable at c, and

$$D(k f)(c) = k \cdot Df(c).$$
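The sum and scalar multiple rules, $D(f + g) = Df + Dg$ and $D(kf) = k \cdot Df$, can be illustrated numerically. In the sketch below, the functions `f` and `g`, the point `c`, and the scalar `k` are all hypothetical choices made for illustration, and `jacobian_fd` is a hypothetical helper that uses central differences. The derivative matrices of `f` and `g` are written out by hand and compared with finite-difference matrices of the combined functions.

```python
import math

def f(p):
    """Hypothetical example: f(x, y) = (xy, sin x)."""
    x, y = p
    return [x * y, math.sin(x)]

def g(p):
    """Hypothetical example: g(x, y) = (x + y^2, e^y)."""
    x, y = p
    return [x + y * y, math.exp(y)]

def Df(p):
    """Matrix of partial derivatives of f, computed by hand."""
    x, y = p
    return [[y, x], [math.cos(x), 0.0]]

def Dg(p):
    """Matrix of partial derivatives of g, computed by hand."""
    x, y = p
    return [[1.0, 2 * y], [0.0, math.exp(y)]]

def jacobian_fd(func, p, eps=1e-6):
    """Central-difference approximation to the matrix of partial derivatives."""
    cols = []
    for j in range(len(p)):
        plus = list(p)
        minus = list(p)
        plus[j] += eps
        minus[j] -= eps
        cols.append([(a - b) / (2 * eps) for a, b in zip(func(plus), func(minus))])
    # cols[j][i] approximates d f_i / d x_j; transpose so rows index outputs.
    return [list(row) for row in zip(*cols)]

def max_diff(A, B):
    """Largest absolute entrywise difference between two matrices."""
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))

c = [0.7, -1.2]  # arbitrary point (assumption)
k = 3.0          # arbitrary scalar (assumption)

def f_plus_g(p):
    return [a + b for a, b in zip(f(p), g(p))]

def k_times_f(p):
    return [k * a for a in f(p)]

sum_expected = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(Df(c), Dg(c))]
scale_expected = [[k * a for a in row] for row in Df(c)]

err_sum = max_diff(jacobian_fd(f_plus_g, c), sum_expected)
err_scale = max_diff(jacobian_fd(k_times_f, c), scale_expected)
print(err_sum, err_scale)
```

Both errors should be tiny, on the order of the finite-difference rounding error, which is consistent with the two rules at this one point.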

Copyright 2018 by Bruce Ikenaga