A matrix is a rectangular array of numbers:

![$$\left[\matrix{1 & 2 & \pi \cr -41 & \sqrt{2} & 3.7 \cr}\right] \quad \left[\matrix{42 \cr 0 \cr -13 \cr}\right] \quad \left[\matrix{1 & a & a^2 \cr 1 & b & b^2 \cr 1 & c & c^2 \cr}\right]$$](matrices1.png)

Actually, the entries can be more general than numbers, but you can think of the entries as numbers to start. I'll give a rapid account of basic matrix arithmetic; you can find out more in a course in linear algebra.

Definition. (a) If A is a matrix, $A_{ij}$ is the element in the $i^{\rm th}$ row and $j^{\rm th}$ column.

(b) If a matrix has m rows and n columns, it is said to be an $m \times n$ matrix, and m and n are the dimensions. A matrix with the same number of rows and columns is a square matrix.

(c) If A and B are $m \times n$ matrices, then $A = B$ if their corresponding entries are equal; that is, if $A_{ij} = B_{ij}$ for all i and j.

Note that matrices of different dimensions can't be equal.

(d) If A and B are $m \times n$ matrices, their sum $A + B$ is the matrix obtained by adding corresponding entries of A and B:

$$(A + B)_{ij} = A_{ij} + B_{ij}.$$

(e) If A is a matrix and k is a number, the product of A by k is the matrix obtained by multiplying the entries of A by k:

$$(kA)_{ij} = k \cdot A_{ij}.$$

(f) The $m \times n$ zero matrix is the matrix all of whose entries are 0.

(g) If A is a matrix, then $-A$ is the matrix obtained by negating the entries of A.

(h) If A and B are $m \times n$ matrices, their difference $A - B$ is

$$A - B = A + (-B).$$
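
As a rough illustration, the entrywise definitions above can be sketched in Python, representing a matrix as a list of rows. The function names (`dimensions`, `add`, `scale`, `negate`, `subtract`) and the sample matrices are assumptions made for this sketch; they are not part of the notes. Python indexes from 0, so the entry in row 2 and column 1 is `A[1][0]`.

```python
def dimensions(A):
    """Return (rows, columns) of a matrix stored as a list of rows."""
    return len(A), len(A[0])

def add(A, B):
    """Entrywise sum; A and B must have the same dimensions."""
    if dimensions(A) != dimensions(B):
        raise ValueError("matrices must have the same dimensions")
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(k, A):
    """Multiply every entry of A by the number k."""
    return [[k * a for a in row] for row in A]

def negate(A):
    """Negate every entry of A."""
    return scale(-1, A)

def subtract(A, B):
    """A - B, defined as A + (-B)."""
    return add(A, negate(B))

# Hypothetical 2 x 3 matrices, chosen only for illustration.
A = [[1, 2, 3], [4, 5, 6]]
B = [[0, -1, 2], [7, 0, -3]]

print(dimensions(A))    # (2, 3)
print(add(A, B))        # [[1, 1, 5], [11, 5, 3]]
print(scale(2, A))      # [[2, 4, 6], [8, 10, 12]]
print(subtract(A, B))   # [[1, 3, 1], [-3, 5, 9]]
print(A[1][0])          # 4, the entry in row 2 and column 1
```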

Example. Suppose

![]()

(a) What are the dimensions of A?

(b) What is $A_{21}$?

(a) A is a $2 \times 3$ matrix: It has 2 rows and 3 columns.

(b) $A_{21}$ is the element in row 2 and column 1, so ![]() .

The following results are unsurprising, in the sense that things work the way you'd expect them to given your experience with numbers. (This is not always the case with matrix multiplication, which I'll discuss later.)

Proposition. Suppose p and q are numbers and A, B, and C are $m \times n$ matrices.

(a) (Associativity) $(A + B) + C = A + (B + C)$.

(b) (Commutativity) $A + B = B + A$.

(c) (Zero Matrix) If 0 denotes the $m \times n$ zero matrix, then

$$0 + A = A \quad\hbox{and}\quad A + 0 = A.$$

(d) (Additive Inverses) If 0 denotes the $m \times n$ zero matrix, then

$$A + (-A) = 0 \quad\hbox{and}\quad (-A) + A = 0.$$

(e) (Distributivity)

![]()

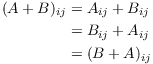

Proof. Matrix equality says that two matrices are equal if their corresponding entries are equal. So the proofs of these results amount to considering the entries of the matrices on the left and right sides of the equations.

By way of example, I'll prove (b). I must show that $A + B$ and $B + A$ have the same entries:

$$(A + B)_{ij} = A_{ij} + B_{ij} = B_{ij} + A_{ij} = (B + A)_{ij}.$$

The first and third equalities used the definition of matrix addition. The second equality used commutativity of addition for numbers.
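
A numerical spot check is not a proof, but it can make the addition properties concrete. The sketch below tests them on hypothetical $2 \times 2$ integer matrices; the helper names and the values of `A`, `B`, `C`, `p`, and `q` are assumptions chosen for illustration, and the distributive laws checked are the usual ones for scalars.

```python
def add(A, B):
    """Entrywise sum of two matrices with the same dimensions."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(k, A):
    """Multiply every entry of A by the number k."""
    return [[k * a for a in row] for row in A]

# Hypothetical sample data.
A = [[1, -2], [3, 0]]
B = [[4, 5], [-1, 2]]
C = [[0, 7], [2, -3]]
p, q = 3, -2
zero = [[0, 0], [0, 0]]

checks = {
    "associativity": add(add(A, B), C) == add(A, add(B, C)),
    "commutativity": add(A, B) == add(B, A),
    "zero matrix": add(A, zero) == A and add(zero, A) == A,
    "additive inverse": add(A, scale(-1, A)) == zero,
    "distributivity": (
        scale(p, add(A, B)) == add(scale(p, A), scale(p, B))
        and scale(p + q, A) == add(scale(p, A), scale(q, A))
    ),
}

for name, holds in checks.items():
    print(name, holds)
```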

Matrix multiplication is more complicated. Your first thought might be to multiply two matrices the way you add matrices --- that is, by multiplying corresponding entries. That is not how matrices are multiplied, and the reason is that it isn't that useful. What is useful is a more complicated definition which uses dot products.

Suppose A is an $m \times n$ matrix and B is an $n \times p$ matrix. Note that the number of columns of A must equal the number of rows of B. To form the matrix product $AB$, I have to tell you what the $(i, j)^{\rm th}$ entry of $AB$ is. Here is the description in words: $(AB)_{ij}$ is the dot product of the $i^{\rm th}$ row of A and the $j^{\rm th}$ column of B.

The resulting matrix $AB$ will be an $m \times p$ matrix.

This is best illustrated by examples.

Example. Compute the matrix product

![]()

This is the product of a ![]() matrix and a ![]() matrix. The product should be a ![]() matrix. I'll show the computation of the entries in the product one-by-one. For each entry in the product, I take the dot product of a row of the first matrix and a column of the second matrix.

![]() :

![]()

![]() :

![]()

![]() :

![]()

![]() :

![]()

![]() :

![]()

![]() :

![]()

Thus,

![]()

Example. Multiply the following matrices:

![$$\left[\matrix{ 4 & -6 & -3 & 4 \cr}\right] \left[\matrix{-2 \cr -5 \cr 0 \cr -2 \cr}\right]$$](matrices63.png)

![$$\left[\matrix{ 4 & -6 & -3 & 4 \cr}\right] \left[\matrix{-2 \cr -5 \cr 0 \cr -2 \cr}\right] = \left[\matrix{14 \cr}\right].\quad\halmos$$](matrices64.png)

Note that this is essentially the dot product of two 4-dimensional vectors.

Example. Multiply the following matrices:

![$$\left[\matrix{ -4 & 1 \cr 2 & 5 \cr 1 & 0 \cr}\right] \left[\matrix{ -1 & 0 & -3 \cr -1 & 2 & 4 \cr}\right]$$](matrices65.png)

![$$\left[\matrix{ -4 & 1 \cr 2 & 5 \cr 1 & 0 \cr}\right] \left[\matrix{ -1 & 0 & -3 \cr -1 & 2 & 4 \cr}\right] = \left[\matrix{ 3 & 2 & 16 \cr -7 & 10 & 14 \cr -1 & 0 & -3 \cr}\right].\quad\halmos$$](matrices66.png)
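
The row-times-column rule can be sketched in Python as follows. This is a minimal illustration that stores a matrix as a list of rows; the helper names `dot`, `column`, and `matmul` are assumptions made for the sketch. It reproduces the two products just computed.

```python
def dot(u, v):
    """Dot product of two equal-length lists of numbers."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(u, v))

def column(B, j):
    """The column of B with 0-based index j, as a list."""
    return [row[j] for row in B]

def matmul(A, B):
    """Product AB: entry (i, j) is (row i of A) dotted with (column j of B)."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    return [[dot(row, column(B, j)) for j in range(len(B[0]))] for row in A]

# A 1 x 4 matrix times a 4 x 1 matrix gives a 1 x 1 matrix.
row_times_col = matmul([[4, -6, -3, 4]], [[-2], [-5], [0], [-2]])

# A 3 x 2 matrix times a 2 x 3 matrix gives a 3 x 3 matrix.
three_by_three = matmul([[-4, 1], [2, 5], [1, 0]],
                        [[-1, 0, -3], [-1, 2, 4]])

print(row_times_col)    # [[14]]
print(three_by_three)   # [[3, 2, 16], [-7, 10, 14], [-1, 0, -3]]
```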

The formal definition of matrix multiplication involves summation notation. Suppose A is an $m \times n$ matrix and B is an $n \times p$ matrix, so the product $AB$ makes sense. To tell what $AB$ is, I have to say what a typical entry of the matrix is. Here's the definition:

$$(AB)_{ij} = \sum_{k=1}^n A_{ik} B_{kj}.$$

Let's relate this to the concrete description I gave above. The summation variable is k. In $A_{ik}$, this is the column index. So with the row index i fixed, I'm running through the columns from $k = 1$ to $k = n$. This means that I'm running across the $i^{\rm th}$ row of A.

Likewise, in $B_{kj}$ the variable k is the row index. So with the column index j fixed, I'm running through the rows from $k = 1$ to $k = n$. This means that I'm running down the $j^{\rm th}$ column of B.

Since I'm forming products $A_{ik} B_{kj}$ and then adding them up, this means that I'm taking the dot product of the $i^{\rm th}$ row of A and the $j^{\rm th}$ column of B, as I described earlier.
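
The summation definition translates almost directly into three nested loops, with the innermost loop running over the summation variable k. The sketch below is one way to write it; the name `matmul_sum` is an assumption, and the indices are 0-based, so k runs from 0 to n - 1 rather than from 1 to n.

```python
def matmul_sum(A, B):
    """Compute AB entry by entry as the sum over k of A[i][k] * B[k][j]."""
    m, n, p = len(A), len(B), len(B[0])
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must equal rows of B")
    AB = [[0] * p for _ in range(m)]
    for i in range(m):          # row index of A and of AB
        for j in range(p):      # column index of B and of AB
            for k in range(n):  # summation variable
                AB[i][j] += A[i][k] * B[k][j]
    return AB

# The 3 x 2 times 2 x 3 product from the earlier example.
product = matmul_sum([[-4, 1], [2, 5], [1, 0]],
                     [[-1, 0, -3], [-1, 2, 4]])
print(product)   # [[3, 2, 16], [-7, 10, 14], [-1, 0, -3]]
```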

Proofs of matrix multiplication properties involve this summation definition, and as a consequence they are often a bit messy with lots of subscripts flying around. I'll let you see them in a linear algebra course.

Definition. The $n \times n$ identity matrix is the matrix with 1's down the main diagonal (the diagonal going from upper left to lower right) and 0's elsewhere.

For instance, the $4 \times 4$ identity matrix is

![$$\left[\matrix{ 1 & 0 & 0 & 0 \cr 0 & 1 & 0 & 0 \cr 0 & 0 & 1 & 0 \cr 0 & 0 & 0 & 1 \cr}\right].$$](matrices85.png)
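
In code, the identity matrix can be built by placing a 1 wherever the row and column indices agree. This is a small sketch; the function name `identity` is an assumption made for illustration.

```python
def identity(n):
    """The n x n identity matrix as a list of rows."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

for row in identity(4):
    print(row)
# [1, 0, 0, 0]
# [0, 1, 0, 0]
# [0, 0, 1, 0]
# [0, 0, 0, 1]
```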

Proposition. Suppose A, B, and C are matrices (with dimensions compatible for multiplication in all the products below), and let k be a number.

(a) (Associativity) $(AB)C = A(BC)$.

(b) (Identity) $AI = A$ and $IA = A$ (where I denotes an $n \times n$ identity matrix compatible for multiplication in the respective products).

(c) (Zero) $A0 = 0$ and $0A = 0$ (where 0 denotes a zero matrix compatible for multiplication in the respective products).

(d) (Scalars) $k(AB) = (kA)B = A(kB)$.

(e) (Distributivity)

$$A(B + C) = AB + AC, \quad (A + B)C = AC + BC.$$

I'll omit the proofs, which are routine but a bit messy (as they involve the summation definition of matrix multiplication).
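
In place of proofs, here is a numerical spot check of the standard forms of these properties on hypothetical matrices. It is a sketch only: the helper names and the sample matrices are assumptions, and the matrices are deliberately not all square, to show what "compatible for multiplication" means in practice (for a $2 \times 3$ matrix, the identity on the left is $2 \times 2$ and the identity on the right is $3 \times 3$).

```python
def matmul(A, B):
    """Product AB; entry (i, j) is row i of A dotted with column j of B."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(k, A):
    return [[k * a for a in row] for row in A]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def zeros(m, n):
    return [[0] * n for _ in range(m)]

# Hypothetical sample data.
A = [[1, 2, 0], [-1, 3, 4]]       # 2 x 3
B = [[2, 1], [0, -1], [5, 3]]     # 3 x 2
B2 = [[1, 0], [2, 2], [-3, 1]]    # 3 x 2
C = [[1, -2], [4, 0]]             # 2 x 2
k = 5

checks = {
    "associativity": matmul(matmul(A, B), C) == matmul(A, matmul(B, C)),
    "identity": matmul(identity(2), A) == A and matmul(A, identity(3)) == A,
    "zero": (matmul(A, zeros(3, 2)) == zeros(2, 2)
             and matmul(zeros(2, 2), A) == zeros(2, 3)),
    "scalars": (scale(k, matmul(A, B)) == matmul(scale(k, A), B)
                == matmul(A, scale(k, B))),
    "distributivity": (
        matmul(A, add(B, B2)) == add(matmul(A, B), matmul(A, B2))
        and matmul(add(B, B2), C) == add(matmul(B, C), matmul(B2, C))
    ),
}

for name, holds in checks.items():
    print(name, holds)
```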

Note that commutativity of multiplication is not listed as a property. In fact, it's false, and it is one of a number of ways in which matrix multiplication does not behave the way you might expect. It's important to make a note of things which behave in unexpected ways.

Example. Give specific ![]() matrices A and B such that $AB \ne BA$.

There are many examples. For instance,

![]()

![]()

This shows that matrix multiplication is not commutative.
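
One hypothetical pair of $2 \times 2$ matrices that fails to commute (not necessarily the pair used in the example above) can be checked in a few lines of Python. The `matmul` helper is an assumption made for this sketch.

```python
def matmul(A, B):
    """Product AB; entry (i, j) is row i of A dotted with column j of B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

# Hypothetical matrices chosen for illustration.
A = [[1, 1], [0, 1]]
B = [[1, 0], [1, 1]]

print(matmul(A, B))   # [[2, 1], [1, 1]]
print(matmul(B, A))   # [[1, 1], [1, 2]]
```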

Example. Give specific ![]() matrices A and B such that $A \ne 0$, $B \ne 0$, but $AB = 0$.

There are many possibilities. For instance,

![]()
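
As a sketch, here is one hypothetical pair of nonzero $2 \times 2$ matrices whose product is the zero matrix (again, not necessarily the pair from the example). The `matmul` helper is an assumption made for illustration.

```python
def matmul(A, B):
    """Product AB; entry (i, j) is row i of A dotted with column j of B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

# Hypothetical nonzero matrices chosen for illustration.
A = [[1, 0], [0, 0]]
B = [[0, 0], [0, 1]]

print(matmul(A, B))   # [[0, 0], [0, 0]]
```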

Example. Give specific ![]() nonzero matrices A, B, and C such that $AB = AC$ but $B \ne C$.

There are lots of examples. For instance,

![]()

But

![]()

This example shows that you can't "divide" or "cancel" A from both sides of the equation. It works in some cases, but not in all cases.
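
The failure of cancellation can also be seen numerically. The matrices below are a hypothetical choice (not necessarily those in the example): A wipes out the second row of whatever it multiplies, so B and C only need to agree in their first rows. The `matmul` helper is an assumption made for this sketch.

```python
def matmul(A, B):
    """Product AB; entry (i, j) is row i of A dotted with column j of B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

# Hypothetical nonzero matrices chosen for illustration.
A = [[1, 0], [0, 0]]
B = [[1, 2], [3, 4]]
C = [[1, 2], [5, 6]]

print(matmul(A, B))   # [[1, 2], [0, 0]]
print(matmul(A, C))   # [[1, 2], [0, 0]]
print(B == C)         # False
```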

Definition. Let A be an $n \times n$ matrix. The inverse of A is a matrix $A^{-1}$ which satisfies

$$A A^{-1} = I \quad\hbox{and}\quad A^{-1} A = I.$$

(I is the $n \times n$ identity matrix.) A matrix which has an inverse is invertible.

Remark. (a) If a matrix has an inverse, it is unique.

(b) Not every matrix has an inverse.

Determinants provide a criterion for telling whether a matrix is invertible: An $n \times n$ real matrix is invertible if and only if its determinant is nonzero.

Proposition. Suppose A and B are invertible $n \times n$ matrices. Then:

(a) ![]() .

(b) $(AB)^{-1} = B^{-1} A^{-1}$.

Proof. I'll prove (b).

$$(AB)(B^{-1} A^{-1}) = A (B B^{-1}) A^{-1} = A I A^{-1} = A A^{-1} = I,$$

$$(B^{-1} A^{-1})(AB) = B^{-1} (A^{-1} A) B = B^{-1} I B = B^{-1} B = I.$$

This shows that $B^{-1} A^{-1}$ is the inverse of $AB$, because it multiplies $AB$ to the identity matrix in either order.

In general, the most efficient way to find the inverse of a matrix is to use row reduction (Gaussian elimination), which you will learn about in a linear algebra course. But we can give an easy formula for $2 \times 2$ matrices.

Proposition. Consider the real matrix

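$$A = \left[\matrix{a & b \cr c & d \cr}\right].$$

Suppose $ad - bc \ne 0$. Then

$$A^{-1} = \dfrac{1}{ad - bc} \left[\matrix{d & -b \cr -c & a \cr}\right].$$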

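Proof. Just compute:

$$\dfrac{1}{ad - bc} \left[\matrix{d & -b \cr -c & a \cr}\right] \left[\matrix{a & b \cr c & d \cr}\right] = \dfrac{1}{ad - bc} \left[\matrix{ad - bc & 0 \cr 0 & ad - bc \cr}\right] = \left[\matrix{1 & 0 \cr 0 & 1 \cr}\right].$$

You can check that you also get the identity if you multiply in the opposite order.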
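
The formula is short enough to sketch in Python. The version below uses the standard library's `fractions.Fraction` so that the arithmetic stays exact, and it assumes integer (or `Fraction`) entries; the names `inverse_2x2` and `matmul` and the sample matrix are assumptions made for illustration.

```python
from fractions import Fraction

def inverse_2x2(M):
    """Inverse of [[a, b], [c, d]] via the ad - bc formula, in exact arithmetic."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("ad - bc = 0, so the formula does not apply")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

def matmul(A, B):
    """Product AB; entry (i, j) is row i of A dotted with column j of B."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)]
            for row in A]

# Sample matrix with ad - bc = 2 * 10 - 7 * 1 = 13.
M = [[2, 7], [1, 10]]
M_inv = inverse_2x2(M)

# Both products should be the 2 x 2 identity matrix.
print(matmul(M, M_inv) == [[1, 0], [0, 1]])   # True
print(matmul(M_inv, M) == [[1, 0], [0, 1]])   # True
```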

Example. Find the inverse of ![]() .

![]()

Example. Use matrix inversion to solve the system of equations:

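$$\eqalign{ 2 x + 7 y & = 3 \cr x + 10 y & = -1 \cr}$$

You can write the system in matrix form:

$$\left[\matrix{2 & 7 \cr 1 & 10 \cr}\right] \left[\matrix{x \cr y \cr}\right] = \left[\matrix{3 \cr -1 \cr}\right].$$

(Multiply out the left side for yourself and see that you get the original two equations.) Now the inverse of the $2 \times 2$ matrix is

$$\left[\matrix{2 & 7 \cr 1 & 10 \cr}\right]^{-1} = \dfrac{1}{13} \left[\matrix{10 & -7 \cr -1 & 2 \cr}\right].$$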

Multiply the matrix equation above on the left of both sides by the inverse:

![$$\eqalign{ \dfrac{1}{13} \left[\matrix{10 & -7 \cr -1 & 2 \cr}\right]\left[\matrix{2 & 7 \cr 1 & 10 \cr}\right] \left[\matrix{x \cr y \cr}\right] & = \dfrac{1}{13} \left[\matrix{10 & -7 \cr -1 & 2 \cr}\right] \left[\matrix{3 \cr -1 \cr}\right] \cr \noalign{\vskip2pt} \left[\matrix{1 & 0 \cr 0 & 1 \cr}\right] \left[\matrix{x \cr y \cr}\right] & = \dfrac{1}{13} \left[\matrix{37 \cr -5 \cr}\right] \cr \noalign{\vskip2pt} \left[\matrix{x \cr y \cr}\right] & = \dfrac{1}{13} \left[\matrix{37 \cr -5 \cr}\right] \cr}$$](matrices132.png)

That is, $x = \dfrac{37}{13}$ and $y = -\dfrac{5}{13}$.
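
The same computation can be sketched in Python with exact fractions. The function name `solve_2x2` is an assumption made for illustration; it multiplies the right-hand side by the inverse given by the $2 \times 2$ formula and applies only when $ad - bc \ne 0$.

```python
from fractions import Fraction

def solve_2x2(M, rhs):
    """Solve M [x, y]^T = rhs by multiplying rhs by the inverse of M."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("ad - bc = 0, so M has no inverse")
    r1, r2 = rhs
    # Rows of (1/det) [[d, -b], [-c, a]] applied to the right-hand side.
    x = Fraction(d * r1 - b * r2, det)
    y = Fraction(-c * r1 + a * r2, det)
    return x, y

x, y = solve_2x2([[2, 7], [1, 10]], [3, -1])
print(x, y)                     # 37/13 -5/13

# Substituting back recovers the original right-hand sides.
print(2 * x + 7 * y == 3)       # True
print(x + 10 * y == -1)         # True
```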

Copyright 2017 by Bruce Ikenaga