A set is independent if, roughly speaking, there is no redundancy in the set: You can't "build" any vector in the set as a linear combination of the others. A set spans if you can "build everything" in the vector space as linear combinations of vectors in the set. Putting these two ideas together, a basis is an independent spanning set: A set with no redundancy out of which you can "build everything".

Definition. Let V be an F-vector space. A subset $\mathcal{B}$ of V is a basis if it is linearly independent and spans V.

Definition. Let F be a field. The standard basis for $F^n$ consists of the n vectors

$$(1, 0, 0, \ldots, 0), \quad (0, 1, 0, \ldots, 0), \quad \ldots, \quad (0, 0, 0, \ldots, 1).$$

Thus, the $i^{\rm th}$ standard basis vector has a "1" in the $i^{\rm th}$ slot and zeros elsewhere.

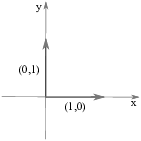

The standard basis vectors in $\mathbb{R}^2$ are $(1, 0)$ and $(0, 1)$. They can be pictured as arrows of length 1 pointing along the positive x-axis and positive y-axis.

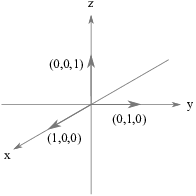

The standard basis vectors in $\mathbb{R}^3$ are $(1, 0, 0)$, $(0, 1, 0)$, and $(0, 0, 1)$. They can be pictured as arrows of length 1 pointing along the positive x-axis, the positive y-axis, and the positive z-axis.

Since we're calling this a "basis", we'd better check that the name is justified!

Proposition. The standard basis is a basis for $F^n$.

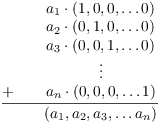

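Proof. First, we show that the standard basis spans $F^n$. Let $(a_1, a_2, \ldots, a_n) \in F^n$. I must write this vector as a linear combination of the standard basis vectors. It's easy:

$$(a_1, a_2, \ldots, a_n) = a_1 \cdot (1, 0, 0, \ldots, 0) + a_2 \cdot (0, 1, 0, \ldots, 0) + \cdots + a_n \cdot (0, 0, 0, \ldots, 1).$$

For independence, suppose

$$a_1 \cdot (1, 0, 0, \ldots, 0) + a_2 \cdot (0, 1, 0, \ldots, 0) + \cdots + a_n \cdot (0, 0, 0, \ldots, 1) = (0, 0, 0, \ldots, 0).$$

The computation we did to prove that the set spans shows that the left side is just $(a_1, a_2, \ldots, a_n)$, so

$$(a_1, a_2, \ldots, a_n) = (0, 0, \ldots, 0).$$

This implies that $a_1 = a_2 = \cdots = a_n = 0$, so the set is independent. $\square$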

Aside from giving us an example of a basis for $F^n$, this result shows that at least one basis for $F^n$ has n elements. We will show later that every basis for $F^n$ has n elements.
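The spanning computation in the proof is easy to check by machine. Here is a minimal sketch, assuming vectors are stored as plain Python lists of integers; the helper names `standard_basis` and `linear_combination` are hypothetical and do not come from the notes.

```python
def standard_basis(n):
    """Return the n standard basis vectors as lists (hypothetical helper)."""
    return [[1 if j == i else 0 for j in range(n)] for i in range(n)]


def linear_combination(coeffs, vectors):
    """Return coeffs[0]*vectors[0] + coeffs[1]*vectors[1] + ... as a list."""
    length = len(vectors[0])
    return [sum(c * v[k] for c, v in zip(coeffs, vectors)) for k in range(length)]


a = [3, -1, 4, 7]              # an arbitrary vector, chosen for illustration
e = standard_basis(len(a))     # the standard basis vectors

# Using the entries of a as coefficients rebuilds a itself.
rebuilt = linear_combination(a, e)
print(rebuilt == a)
```

The coefficients are just the entries of the vector, which is exactly what the proof uses for both spanning and independence.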

Example. Prove that the following set is a basis for $\mathbb{R}^3$ over $\mathbb{R}$:

![$$\left\{\left[\matrix{1 \cr 1 \cr 0 \cr}\right], \left[\matrix{0 \cr 1 \cr 1 \cr}\right], \left[\matrix{1 \cr 0 \cr 1 \cr}\right]\right\}$$](bases28.png)

Make a matrix with the vectors as columns and row reduce:

![$$A = \left[\matrix{1 & 0 & 1 \cr 1 & 1 & 0 \cr 0 & 1 & 1 \cr}\right] \quad \to \quad \left[\matrix{1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right]$$](bases29.png)

Since A row reduces to the identity, it is invertible, and there are a number of conditions which are equivalent to A being invertible.

First, since A is invertible, the following system has a unique solution for every $(a, b, c) \in \mathbb{R}^3$:

![$$\left[\matrix{1 & 0 & 1 \cr 1 & 1 & 0 \cr 0 & 1 & 1 \cr}\right] \left[\matrix{x \cr y \cr z \cr}\right] = \left[\matrix{a \cr b \cr c \cr}\right].$$](bases31.png)

But this matrix equation can be written as

![$$x \cdot \left[\matrix{1 \cr 1 \cr 0 \cr}\right] + y \cdot \left[\matrix{0 \cr 1 \cr 1 \cr}\right] + z \cdot \left[\matrix{1 \cr 0 \cr 1 \cr}\right] = \left[\matrix{a \cr b \cr c \cr}\right].$$](bases32.png)

In other words, any vector $(a, b, c) \in \mathbb{R}^3$ can be written as a linear combination of the given vectors. This proves that the given vectors span $\mathbb{R}^3$.

Second, since A is invertible, the following system has only $x = 0$, $y = 0$, $z = 0$ as a solution:

![$$\left[\matrix{1 & 0 & 1 \cr 1 & 1 & 0 \cr 0 & 1 & 1 \cr}\right] \left[\matrix{x \cr y \cr z \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](bases38.png)

This matrix equation can be written as

![$$x \cdot \left[\matrix{1 \cr 1 \cr 0 \cr}\right] + y \cdot \left[\matrix{0 \cr 1 \cr 1 \cr}\right] + z \cdot \left[\matrix{1 \cr 0 \cr 1 \cr}\right] = \left[\matrix{0 \cr 0 \cr 0 \cr}\right].$$](bases39.png)

Since the only solution is $x = 0$, $y = 0$, $z = 0$, the vectors are independent.

Hence, the given set of vectors is a basis for $\mathbb{R}^3$. $\square$
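The row reduction in this example can be reproduced with exact arithmetic. The following is a sketch only: `rref` is a hypothetical helper written for this illustration, and it uses Python's `fractions.Fraction` so that no rounding occurs.

```python
from fractions import Fraction


def rref(rows):
    """Return (R, pivots): the row reduced echelon form and its pivot columns."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivots, r = [], 0
    for c in range(n_cols):
        if r == n_rows:
            break
        # Look for a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]          # make the leading entry 1
        for i in range(n_rows):
            if i != r and m[i][c] != 0:         # clear the rest of column c
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots


# The three vectors of the example are the columns of A.
A = [[1, 0, 1],
     [1, 1, 0],
     [0, 1, 1]]
R, pivots = rref(A)
identity = [[Fraction(int(i == j)) for j in range(3)] for i in range(3)]
is_basis = (R == identity)
print(is_basis)
```

If `R` is the identity, the matrix is invertible, and the argument in the example shows that the columns are a basis.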

We'll generalize the computations we did in the last example.

Proposition. Let F be a field. $\{v_1, v_2, \ldots, v_n\}$ is a basis for $F^n$ if and only if

![$$A = \left[\matrix{\uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \quad\hbox{is invertible}.\quad\halmos$$](bases46.png)

Remark. By earlier results on invertibility, this is equivalent to the following conditions:

1. A row reduces to the identity.

2. $\det A \ne 0$.

3. The system $A x = 0$ has only $x = 0$ as a solution.

4. For every vector $b \in F^n$, the system $A x = b$ has a unique solution.

Thus, if you have n vectors in $F^n$, this gives you several ways of checking whether or not the set is a basis.
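As one illustration of these checks, here is a sketch of the determinant test. The function `det` is a hypothetical helper that computes the determinant by elimination with exact fractions; the second matrix is an example I made up whose third column is the sum of the first two.

```python
from fractions import Fraction


def det(rows):
    """Determinant of a square matrix by Gaussian elimination (exact)."""
    m = [[Fraction(x) for x in row] for row in rows]
    n = len(m)
    d = Fraction(1)
    for c in range(n):
        # Find a nonzero pivot in column c at or below the diagonal.
        p = next((i for i in range(c, n) if m[i][c] != 0), None)
        if p is None:
            return Fraction(0)
        if p != c:
            m[c], m[p] = m[p], m[c]
            d = -d                      # a row swap flips the sign
        d *= m[c][c]
        for i in range(c + 1, n):
            f = m[i][c] / m[c][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[c])]
    return d


# Columns (1,1,0), (0,1,1), (1,0,1): the set from the earlier example.
basis_matrix = [[1, 0, 1],
                [1, 1, 0],
                [0, 1, 1]]

# Columns (1,1,0), (0,1,1), (1,2,1): the third is the sum of the first two.
dependent_matrix = [[1, 0, 1],
                    [1, 1, 2],
                    [0, 1, 1]]

print(det(basis_matrix) != 0)       # nonzero determinant: columns form a basis
print(det(dependent_matrix) != 0)   # zero determinant: columns do not
```

Any of the four conditions would do; the determinant is convenient for small matrices, while row reduction scales better.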

Proof. Suppose $\{v_1, v_2, \ldots, v_n\}$ is a basis for $F^n$. Let

![$$A = \left[\matrix{\uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right].$$](bases55.png)

Consider the system

![$$\left[\matrix{\uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{x_1 \cr x_2 \cr \vdots \cr x_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases56.png)

Doing the matrix multiplication on the left and writing the result as a vector equation, we have

$$x_1 v_1 + x_2 v_2 + \cdots + x_n v_n = 0.$$

Since $\{v_1, v_2, \ldots, v_n\}$ is independent, I have $x_1 = x_2 = \cdots = x_n = 0$. This shows that the system above has only the zero vector as a solution. An earlier result on invertibility shows that A must be invertible.

Conversely, suppose the following matrix is invertible:

![$$A = \left[\matrix{\uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right].$$](bases60.png)

We will show that $\{v_1, v_2, \ldots, v_n\}$ is a basis.

For independence, suppose

$$x_1 v_1 + x_2 v_2 + \cdots + x_n v_n = 0.$$

Writing the left side as a matrix multiplication gives the system

![$$\left[\matrix{\uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{x_1 \cr x_2 \cr \vdots \cr x_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases63.png)

Since A is invertible, the only solution to this system is $x_1 = x_2 = \cdots = x_n = 0$. This shows that $\{v_1, v_2, \ldots, v_n\}$ is independent.

To show that $\{v_1, v_2, \ldots, v_n\}$ spans, let $(b_1, b_2, \ldots, b_n) \in F^n$. Consider the system

![$$\left[\matrix{\uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{x_1 \cr x_2 \cr \vdots \cr x_n \cr}\right] = \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_n \cr}\right].$$](bases68.png)

Since A is invertible, this system has a (unique) solution $(x_1, x_2, \ldots, x_n)$. That is,

$$x_1 v_1 + x_2 v_2 + \cdots + x_n v_n = (b_1, b_2, \ldots, b_n).$$

This shows that $\{v_1, v_2, \ldots, v_n\}$ spans.

Hence, $\{v_1, v_2, \ldots, v_n\}$ is a basis for $F^n$. $\square$

A basis for V is a spanning set for V, so every vector in V can be written as a linear combination of basis elements. The next result says that such a linear combination is unique.

Proposition. Let $\mathcal{B}$ be a basis for a vector space V. Every $v \in V$ can be written in exactly one way as

$$v = a_1 v_1 + a_2 v_2 + \cdots + a_n v_n, \quad a_i \in F, \quad v_1, \ldots, v_n \in \mathcal{B}.$$

Proof. Let $v \in V$. Since $\mathcal{B}$ spans V, there are scalars $a_1$, $a_2$, ..., $a_m$ and vectors $v_1, \ldots, v_m \in \mathcal{B}$ such that

$$v = a_1 v_1 + a_2 v_2 + \cdots + a_m v_m.$$

Suppose that there is another way to do this: There are scalars $b_1$, $b_2$, ..., $b_n$ and vectors $w_1, \ldots, w_n \in \mathcal{B}$ such that

$$v = b_1 w_1 + b_2 w_2 + \cdots + b_n w_n.$$

First, note that I can assume that the same set of vectors are involved in both linear combinations --- that is, the v's and w's are the same set of vectors. For if not, I can instead use the vectors in the union

$$S = \{v_1, \ldots, v_m\} \cup \{w_1, \ldots, w_n\}.$$

I can rewrite both of the original linear combinations as linear combinations of vectors in S, using 0 as the coefficient of any vector which doesn't occur in a given combination. Then both linear combinations for v use the same vectors.

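I'll assume this has been done and just assume that $\{v_1, \ldots, v_m\}$ is the set of vectors. Thus, I have two linear combinations

$$v = a_1 v_1 + \cdots + a_m v_m \quad\hbox{and}\quad v = b_1 v_1 + \cdots + b_m v_m.$$

Then

$$a_1 v_1 + \cdots + a_m v_m = b_1 v_1 + \cdots + b_m v_m.$$

Hence,

$$(a_1 - b_1) v_1 + \cdots + (a_m - b_m) v_m = 0.$$

Since $\{v_1, \ldots, v_m\}$ is independent,

$$a_1 - b_1 = 0, \quad \ldots, \quad a_m - b_m = 0.$$

Therefore,

$$a_1 = b_1, \quad \ldots, \quad a_m = b_m.$$

That is, the two linear combinations were actually the same. This proves that there's only one way to write v as a linear combination of vectors in $\mathcal{B}$. $\square$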
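For a basis of $F^n$ given as explicit column vectors, the unique coefficients can be computed by solving a linear system. The following is a sketch under the assumption that the entries are rational numbers; `rref` and `coordinates` are hypothetical helpers, and the vector $(2, 3, 1)$ is chosen only for illustration.

```python
from fractions import Fraction


def rref(rows):
    """Return (R, pivots): the row reduced echelon form and its pivot columns."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivots, r = [], 0
    for c in range(n_cols):
        if r == n_rows:
            break
        p = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]
        for i in range(n_rows):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots


def coordinates(basis, b):
    """Coefficients writing b as a combination of the basis vectors."""
    n = len(b)
    # Augmented matrix [v_1 ... v_n | b] with the basis vectors as columns.
    aug = [[basis[j][i] for j in range(n)] + [b[i]] for i in range(n)]
    R, pivots = rref(aug)
    if pivots != list(range(n)):
        raise ValueError("the given vectors are not a basis")
    return [row[-1] for row in R]


basis = [[1, 1, 0], [0, 1, 1], [1, 0, 1]]
coords = coordinates(basis, [2, 3, 1])
print(coords)
```

Because the coefficient matrix is invertible, the system has exactly one solution, which is the uniqueness statement of the proposition in this concrete setting.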

I want to show that two bases for a vector space must have the same number of elements. I need some preliminary results, which are important in their own right.

Lemma. If A is an $m \times n$ matrix with $m < n$, the system $A x = 0$ has nontrivial solutions.

Proof. Write

![$$A = \left[\matrix{ a_{1 1} & a_{1 2} & \cdots & a_{1 n} \cr a_{2 1} & a_{2 2} & \cdots & a_{2 n} \cr \vdots & \vdots & & \vdots \cr a_{m 1} & a_{m 2} & \cdots & a_{m n} \cr}\right].$$](bases101.png)

The condition $m < n$ means that the following system has more variables than equations:

![$$\left[\matrix{ a_{1 1} & a_{1 2} & \cdots & a_{1 n} \cr a_{2 1} & a_{2 2} & \cdots & a_{2 n} \cr \vdots & \vdots & & \vdots \cr a_{m 1} & a_{m 2} & \cdots & a_{m n} \cr}\right] \left[\matrix{x_1 \cr x_2 \cr \vdots \cr x_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases103.png)

If A row reduces to a row reduced echelon matrix R, then R can have at most m leading coefficients. Therefore, some of the variables $x_1$, $x_2$, ..., $x_n$ will be free variables (parameters); if I assign nonzero values to the free variables (e.g. by setting all of them equal to 1), the resulting solution will be nontrivial. $\square$
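The recipe in the proof (row reduce, then set every free variable equal to 1) can be sketched in code. This is an illustration only: `rref` and `nontrivial_solution` are hypothetical helpers, the arithmetic is over the rationals, and the $2 \times 3$ matrix is an example I chose.

```python
from fractions import Fraction


def rref(rows):
    """Return (R, pivots): the row reduced echelon form and its pivot columns."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivots, r = [], 0
    for c in range(n_cols):
        if r == n_rows:
            break
        p = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]
        for i in range(n_rows):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots


def nontrivial_solution(rows):
    """Solve Ax = 0 with every free variable set to 1 (needs a free variable)."""
    R, pivots = rref(rows)
    n = len(rows[0])
    free = [j for j in range(n) if j not in pivots]
    x = [Fraction(0)] * n
    for j in free:
        x[j] = Fraction(1)
    # Row i of R reads: x_pivot + (sum of R[i][j] * x_j over free j) = 0.
    for i, p in enumerate(pivots):
        x[p] = -sum((R[i][j] for j in free), Fraction(0))
    return x


A = [[1, 2, 3],
     [4, 5, 6]]          # 2 equations, 3 unknowns
x = nontrivial_solution(A)
print(x)
```

With more columns than rows there is always at least one free variable, so the returned vector always has an entry equal to 1 and is therefore nonzero.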

Theorem. Let $\{v_1, v_2, \ldots, v_n\}$ be a basis for a vector space V over a field F.

(a) Any subset of V with more than n elements is dependent.

(b) Any subset of V with fewer than n elements cannot span.

Proof. (a) Suppose $\{w_1, w_2, \ldots, w_m\}$ is a subset of V, and that $m > n$. I want to show that $\{w_1, w_2, \ldots, w_m\}$ is dependent.

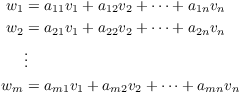

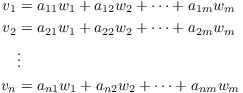

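Write each w as a linear combination of the v's:

$$\eqalign{ w_1 & = a_{1 1} v_1 + a_{1 2} v_2 + \cdots + a_{1 n} v_n \cr w_2 & = a_{2 1} v_1 + a_{2 2} v_2 + \cdots + a_{2 n} v_n \cr & \vdots \cr w_m & = a_{m 1} v_1 + a_{m 2} v_2 + \cdots + a_{m n} v_n \cr}$$

This can be represented as the following matrix equation: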

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr w_1 & w_2 & \cdots & w_m \cr \downarrow & \downarrow & & \downarrow \cr}\right] = \left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{m 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{m 2} \cr \vdots & \vdots & & \vdots \cr a_{1 n} & a_{2 n} & \cdots & a_{m n} \cr}\right].$$](bases112.png)

Since $m > n$, the matrix of a's has more columns than rows. Therefore, the following system has a nontrivial solution $x_1 = b_1$, $x_2 = b_2$, ... $x_m = b_m$:

![$$\left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{m 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{m 2} \cr \vdots & \vdots & & \vdots \cr a_{1 n} & a_{2 n} & \cdots & a_{m n} \cr}\right] \left[\matrix{x_1 \cr x_2 \cr \vdots \cr x_m \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases117.png)

That is, not all the b's are 0, but

![$$\left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{m 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{m 2} \cr \vdots & \vdots & & \vdots \cr a_{1 n} & a_{2 n} & \cdots & a_{m n} \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_m \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases118.png)

But then

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr w_1 & w_2 & \cdots & w_m \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_m \cr}\right] = \left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{m 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{m 2} \cr \vdots & \vdots & & \vdots \cr a_{1 n} & a_{2 n} & \cdots & a_{m n} \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_m \cr}\right].$$](bases119.png)

Therefore,

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr w_1 & w_2 & \cdots & w_m \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_m \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases120.png)

In equation form,

$$b_1 w_1 + b_2 w_2 + \cdots + b_m w_m = 0.$$

This is a nontrivial linear combination of the w's which adds up to 0, so the w's are dependent.

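(b) Suppose that $\{w_1, w_2, \ldots, w_m\}$ is a set of vectors in V and $m < n$. I want to show that $\{w_1, w_2, \ldots, w_m\}$ does not span V.

Suppose on the contrary that the w's span V. Then each v can be written as a linear combination of the w's:

$$\eqalign{ v_1 & = a_{1 1} w_1 + a_{1 2} w_2 + \cdots + a_{1 m} w_m \cr v_2 & = a_{2 1} w_1 + a_{2 2} w_2 + \cdots + a_{2 m} w_m \cr & \vdots \cr v_n & = a_{n 1} w_1 + a_{n 2} w_2 + \cdots + a_{n m} w_m \cr}$$

In matrix form, this is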

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] = \left[\matrix{ \uparrow & \uparrow & & \uparrow \cr w_1 & w_2 & \cdots & w_m \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{n 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{n 2} \cr \vdots & \vdots & & \vdots \cr a_{1 m} & a_{2 m} & \cdots & a_{n m} \cr}\right].$$](bases126.png)

Since $m < n$, the coefficient matrix has more columns than rows. Hence, the following system has a nontrivial solution $x_1 = b_1$, $x_2 = b_2$, ... $x_n = b_n$:

![$$\left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{n 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{n 2} \cr \vdots & \vdots & & \vdots \cr a_{1 m} & a_{2 m} & \cdots & a_{n m} \cr}\right] \left[\matrix{x_1 \cr x_2 \cr \vdots \cr x_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases131.png)

Thus,

![$$\left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{n 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{n 2} \cr \vdots & \vdots & & \vdots \cr a_{1 m} & a_{2 m} & \cdots & a_{n m} \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases132.png)

Multiplying the v and w equation on the right by the b-vector gives

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_n \cr}\right] = \left[\matrix{ \uparrow & \uparrow & & \uparrow \cr w_1 & w_2 & \cdots & w_m \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{ a_{1 1} & a_{2 1} & \cdots & a_{n 1} \cr a_{1 2} & a_{2 2} & \cdots & a_{n 2} \cr \vdots & \vdots & & \vdots \cr a_{1 m} & a_{2 m} & \cdots & a_{n m} \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_n \cr}\right].$$](bases133.png)

Hence,

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr v_1 & v_2 & \cdots & v_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{b_1 \cr b_2 \cr \vdots \cr b_n \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 0 \cr}\right].$$](bases134.png)

In equation form, this is

$$b_1 v_1 + b_2 v_2 + \cdots + b_n v_n = 0.$$

Since not all the b's are 0, this is a nontrivial linear combination of the v's which adds up to 0 --- contradicting the independence of the v's.

This contradiction means that the w's can't span after all. $\square$

Corollary. Let F be a field.

(a) Any subset of $F^n$ with more than n elements is dependent.

(b) Any subset of $F^n$ with fewer than n elements cannot span.

Proof. This follows from the Theorem, and the fact that the standard basis for $F^n$ has n elements. $\square$

As an example, the following set of four vectors in $\mathbb{R}^3$ can't be independent:

![$$\left\{\left[\matrix{1 \cr 0 \cr -1 \cr}\right], \left[\matrix{2 \cr -3 \cr 10 \cr}\right], \left[\matrix{1 \cr 1 \cr 1 \cr}\right], \left[\matrix{0 \cr 11 \cr -7 \cr}\right]\right\}$$](bases140.png)

Likewise, the following set of two vectors can't span $\mathbb{R}^3$:

![$$\left\{\left[\matrix{-2 \cr 1 \cr 2 \cr}\right], \left[\matrix{3 \cr 1 \cr 5 \cr}\right]\right\}\quad\halmos$$](bases142.png)
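For the pair of vectors above, it is possible to exhibit a concrete vector outside their span. The sketch below uses the cross product, which is special to $\mathbb{R}^3$ and is not the argument used in the Corollary; the helper names `cross` and `dot` are hypothetical.

```python
def cross(u, v):
    """Cross product of two vectors in R^3."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def dot(u, v):
    """Dot product of two vectors of the same length."""
    return sum(a * b for a, b in zip(u, v))


u = [-2, 1, 2]
v = [3, 1, 5]
n = cross(u, v)

# n is perpendicular to both u and v.
print(n, dot(n, u), dot(n, v))
```

If n were a combination $a u + b v$, then $n \cdot n = a (u \cdot n) + b (v \cdot n) = 0$, which would force $n = 0$. Since n is nonzero, it is not in the span of the two vectors, so they do not span $\mathbb{R}^3$.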

Corollary. If $\{v_1, v_2, \ldots, v_n\}$ is a basis for a vector space V, then every basis for V has n elements.

Proof. If $\{w_1, w_2, \ldots, w_m\}$ is another basis for V, then m can't be less than n or $\{w_1, w_2, \ldots, w_m\}$ couldn't span. Likewise, m can't be greater than n or $\{w_1, w_2, \ldots, w_m\}$ couldn't be independent. Therefore, $m = n$. $\square$

The Corollary allows us to define the dimension of a vector space.

Definition. The number of elements in a basis for V is called the dimension of V, and is denoted $\dim V$.

The definition makes sense, since in a finite-dimensional vector space, any two bases have the same number of elements. This is true in general; I'll state the relevant results without proof.

(a) Every vector space has a basis. The proof requires a set-theoretic result called Zorn's Lemma.

(b) Two bases for any vector space have the same cardinality. Specifically, if $\mathcal{B}$ and $\mathcal{C}$ are bases for a vector space V, there is a bijective function $f: \mathcal{B} \to \mathcal{C}$.

Here's an example of a basis with an infinite number of elements.

Example. Let $\mathbb{R}[x]$ denote the $\mathbb{R}$-vector space of polynomials with coefficients in $\mathbb{R}$.

Show that $\{1, x, x^2, x^3, \ldots\}$ is a basis for $\mathbb{R}[x]$.

First, a polynomial has the form $a_n x^n + a_{n-1} x^{n-1} + \cdots + a_1 x + a_0$. This is a linear combination of elements of $\{1, x, x^2, x^3, \ldots\}$. Hence, the set spans $\mathbb{R}[x]$.

To show that the set is independent, suppose there are numbers $a_0, a_1, \ldots, a_n \in \mathbb{R}$ such that

$$a_0 + a_1 x + a_2 x^2 + \cdots + a_n x^n = 0.$$

This equation is an identity: it's true for all $x \in \mathbb{R}$. Setting $x = 0$, I get $a_0 = 0$. Then plugging $a_0 = 0$ back in gives

$$a_1 x + a_2 x^2 + \cdots + a_n x^n = 0.$$

Since this is an identity, I can differentiate both sides to obtain

$$a_1 + 2 a_2 x + \cdots + n a_n x^{n-1} = 0.$$

Setting $x = 0$ gives $a_1 = 0$. Plugging $a_1 = 0$ back in gives

$$2 a_2 x + \cdots + n a_n x^{n-1} = 0.$$

Continuing in this way, I get $a_0 = a_1 = \cdots = a_n = 0$. Hence, the set is independent.

Therefore, $\{1, x, x^2, x^3, \ldots\}$ is a basis for $\mathbb{R}[x]$. $\square$
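The phrase "continuing in this way" can be made explicit. As a sketch of the general step, write the polynomial identity with coefficients $a_0, a_1, \ldots, a_n$; differentiating it k times and then setting $x = 0$ removes every term except the one that came from $a_k x^k$:

```latex
\frac{d^k}{dx^k}\left(a_0 + a_1 x + a_2 x^2 + \cdots + a_n x^n\right)\Big|_{x = 0}
  = k!\, a_k = 0
  \quad\Longrightarrow\quad
  a_k = 0 \qquad\hbox{for } k = 0, 1, \ldots, n.
```

The last implication uses the fact that $k! \ne 0$ in $\mathbb{R}$.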

The next result shows that, in principle, you can construct a basis by:

(a) Starting with an independent set and adding vectors.

(b) Starting with a spanning set and removing vectors.

Theorem. Let V be a finite-dimensional vector space.

(a) Any set of independent vectors is a subset of a basis for V.

(b) Any spanning set for V contains a subset which is a basis.

Part (a) means that if S is an independent set, then there is a basis T such that $S \subset T$. (If S was a basis to begin with, then $S = T$.) Part (b) means that if S is a spanning set, then there is a basis T such that $T \subset S$.

I'm only proving the result in the case where V has finite dimension n, but it is true for vector spaces of any dimension.

Proof. (a) Let $\{v_1, \ldots, v_m\}$ be independent. If this set spans V, it's a basis, and I'm done. Otherwise, there is a vector $v \in V$ which is not in the span of $\{v_1, \ldots, v_m\}$.

I claim that $\{v, v_1, \ldots, v_m\}$ is independent. Suppose

$$a v + a_1 v_1 + \cdots + a_m v_m = 0.$$

Suppose $a \ne 0$. Then I can write

$$v = -\frac{1}{a} \left(a_1 v_1 + \cdots + a_m v_m\right).$$

Since v has been expressed as a linear combination of the $v_k$'s, it's in the span of the $v_k$'s, contrary to assumption. Therefore, this case is ruled out.

The only other possibility is $a = 0$. Then $a_1 v_1 + \cdots + a_m v_m = 0$, so independence of the $v_k$'s implies $a_1 = \cdots = a_m = 0$. Therefore, $\{v, v_1, \ldots, v_m\}$ is independent.

I can continue adding vectors in this way until I get a set which is independent and spans --- a basis. The process must terminate, since no independent set in V can have more than n elements.

(b) Suppose $\{v_1, \ldots, v_m\}$ spans V. I want to show that some subset of $\{v_1, \ldots, v_m\}$ is a basis.

If $\{v_1, \ldots, v_m\}$ is independent, it's a basis, and I'm done. Otherwise, there is a nontrivial linear combination

$$a_1 v_1 + a_2 v_2 + \cdots + a_m v_m = 0.$$

Assume without loss of generality that $a_1 \ne 0$. Then

$$v_1 = -\frac{1}{a_1} \left(a_2 v_2 + \cdots + a_m v_m\right).$$

Since $v_1$ is a linear combination of the other v's, I can remove it and still have a set which spans V; that is, $\{v_2, \ldots, v_m\}$ spans V.

I continue throwing out vectors in this way until I reach a set which spans and is independent, that is, a basis. The process must terminate, because no set containing fewer than n vectors can span V. $\square$

It's possible to carry out the "adding vectors" and "removing vectors" procedures in some specific cases. The algorithms are related to those for finding bases for the row space and column space of a matrix, which I'll discuss later.
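Here is one way the two procedures might look for vectors in $\mathbb{Q}^n$. This is a sketch under my own assumptions, not the algorithm developed later in the notes: the helpers `pivot_columns`, `shrink_to_basis`, and `extend_to_basis` are hypothetical, and they rely on the fact that a column is a pivot column exactly when it is not a linear combination of the columns to its left.

```python
from fractions import Fraction


def pivot_columns(vectors):
    """Indices of the vectors that are pivot columns after row reduction."""
    # Place the vectors as the columns of a matrix.
    m = [[Fraction(x) for x in row] for row in zip(*vectors)]
    pivots, r = [], 0
    for c in range(len(vectors)):
        if r == len(m):
            break
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return pivots


def shrink_to_basis(spanning):
    """Remove vectors that are combinations of earlier ones."""
    return [spanning[j] for j in pivot_columns(spanning)]


def extend_to_basis(independent, n):
    """Add standard basis vectors of Q^n until the set is a basis."""
    e = [[int(i == j) for i in range(n)] for j in range(n)]
    candidates = list(independent) + e
    return [candidates[j] for j in pivot_columns(candidates)]


smaller = shrink_to_basis([[1, 2], [2, 4], [0, 1], [1, 1]])
larger = extend_to_basis([[1, 1, 0]], 3)
print(smaller)
print(larger)
```

In `extend_to_basis`, the independent vectors are listed first, so they are always kept; the standard basis vectors are then added only when they contribute something new.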

Suppose you know a basis should have n elements, and you have a set S with n elements ("the right number"). To show S is a basis, you only need to check either that it is independent or that it spans --- not both. I'll justify this statement, then show by example how you can use it. I need a preliminary result.

Proposition. Let V be a finite dimensional vector space over a field F, and let W be a subspace of V. If $\dim W = \dim V$, then $W = V$.

Proof. Suppose $\dim W = \dim V = n$, but $W \ne V$. I'll show that this leads to a contradiction.

Let $\{x_1, x_2, \ldots, x_n\}$ be a basis for W. Suppose this is not a basis for V. Since it's an independent set, the previous result shows that I can add vectors $y_1$, $y_2$, ... $y_m$ to make a basis for V:

$$\{x_1, x_2, \ldots, x_n, y_1, y_2, \ldots, y_m\}.$$

But this is a basis for V with more than n elements, which is impossible.

Therefore, $\{x_1, x_2, \ldots, x_n\}$ is also a basis for V. Let $x \in V$. Since $\{x_1, x_2, \ldots, x_n\}$ spans V, I can write x as a linear combination of the elements of $\{x_1, x_2, \ldots, x_n\}$:

$$x = a_1 x_1 + a_2 x_2 + \cdots + a_n x_n, \quad a_i \in F.$$

But since $x_1$, $x_2$, ... $x_n$ are in W and W is a subspace, any linear combination of $x_1$, $x_2$, ... $x_n$ must be in W. Thus, $x \in W$.

Since x was an arbitrary element of V, I get $V \subset W$, so $W = V$. $\square$

You might be thinking that this result is obvious: W and V have the same dimension, and you can't have one thing inside another, with both things having the "same size", unless the things are equal. This is intuitively what is going on here, but this kind of intuitive reasoning doesn't always work. For example, the even integers are a subset of the integers and, as infinite sets, both are of the same "order of infinity" (cardinality). But the even integers aren't all of the integers: There are odd integers as well.

Corollary. Let S be a set of n vectors in an n-dimensional vector space V.

(a) If S is independent, then S is a basis for V.

(b) If S spans V, then S is a basis for V.

Proof. (a) Suppose S is independent. Consider W, the span of S. Then S is independent and spans W, so S is a basis for W. Since S has n elements, $\dim W = n$. But $W \subset V$ and $\dim V = n$. By the preceding result, $W = V$.

Hence, S spans V, and S is a basis for V.

(b) Suppose S spans V. Suppose S is not independent. By an earlier result, I can remove some elements of S to get a set T which is a basis for V. But now I have a basis T for V with fewer than n elements (since I removed elements from S, which had n elements).

This is a contradiction, and hence S must be independent. $\square$

Example. (a) Determine whether $\{(1, 2, 1), (2, 1, 1), (2, 0, 1)\}$ is a basis for the ![]() vector space ![]().

(b) Determine whether $\{(1, 1, 0), (1, 2, 2), (2, 2, 0)\}$ is a basis for the ![]() vector space ![]().

(a) Form the matrix with the vectors as columns and row reduce:

![$$\left[\matrix{ 1 & 2 & 2 \cr 2 & 1 & 0 \cr 1 & 1 & 1 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right]$$](bases233.png)

Since the matrix row reduces to the identity, it is invertible. Since the matrix is invertible, the vectors are independent. Since we have 3 vectors in a 3-dimensional vector space, the Corollary says that the set is a basis. $\square$

(b) Form the matrix with the vectors as columns and row reduce:

![$$\left[\matrix{ 1 & 1 & 2 \cr 1 & 2 & 2 \cr 0 & 2 & 0 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 2 \cr 0 & 1 & 0 \cr 0 & 0 & 0 \cr}\right]$$](bases234.png)

The matrix did not row reduce to the identity, so it is not invertible. Since the matrix is not invertible, the vectors aren't independent. Hence, the vectors are not a basis. $\square$
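The reduced matrix in part (b) also shows exactly how the vectors depend on one another. Its third column is 2 times its first column plus 0 times its second, and row operations preserve linear relations among the columns, so the same relation should hold for the original columns. As a worked check, using only the entries of the matrix above:

```latex
\left[\matrix{2 \cr 2 \cr 0 \cr}\right]
  = 2 \cdot \left[\matrix{1 \cr 1 \cr 0 \cr}\right]
  + 0 \cdot \left[\matrix{1 \cr 2 \cr 2 \cr}\right].
```

So the third vector is a multiple of the first, which confirms directly that the set is dependent.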

Copyright 2022 by Bruce Ikenaga