Let V be a vector space and let ${\cal B}$ be a basis for V. Every vector $v \in V$ can be uniquely expressed as a linear combination of elements of ${\cal B}$:

$$v = a_1 v_1 + \cdots + a_n v_n, \quad v_i \in {\cal B}.$$

(Let me remind you of why this is true. Since a basis spans, every $v \in V$ can be written in this way. On the other hand, if $v = a_1 v_1 + \cdots + a_n v_n = b_1 v_1 + \cdots + b_n v_n$ are two ways of writing a given vector, then $(a_1 - b_1) v_1 + \cdots + (a_n - b_n) v_n = 0$, and by independence $a_1 - b_1 = 0$, ..., $a_n - b_n = 0$; that is, $a_1 = b_1$, ..., $a_n = b_n$. So the representation of a vector in this way is unique.)

Consider the situation where ${\cal B}$ is a finite ordered basis; that is, fix a numbering $v_1, \ldots, v_n$ of the elements of ${\cal B}$. If $v = a_1 v_1 + \cdots + a_n v_n$, the ordered list of coefficients $(a_1, \ldots, a_n)$ is uniquely associated with v. The $a_i$ are the components of v with respect to the (ordered) basis ${\cal B}$; I will use the notation

$$v_{\cal B} = (a_1, \ldots, a_n).$$

It is easy to confuse a vector with the representation of

the vector in terms of its components relative to a basis. This

confusion arises because representation of vectors which is most

familiar is that of a vector as an ordinary n-tuple in ![]() :

:

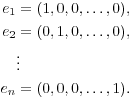

![]()

This amounts to identifying the elements of ![]() with their representation relative to the standard basis

with their representation relative to the standard basis

Example. (a) Show that

![$${\cal B} = \left\{\left[\matrix{1 \cr 0 \cr 1 \cr}\right], \left[\matrix{2 \cr -1 \cr 1 \cr}\right], \left[\matrix{3 \cr 1 \cr 0 \cr}\right]\right\}$$](changeofbasis24.png)

is a basis for $\mathbb{R}^3$.

These are three vectors in $\mathbb{R}^3$, which has dimension 3. Hence, it suffices to check that they're independent. Form the matrix with the elements of ${\cal B}$ as its rows and row reduce:

![$$\left[\matrix{1 & 0 & 1 \cr 2 & -1 & 1 \cr 3 & 1 & 0 \cr}\right] \quad \rightarrow \quad \left[\matrix{1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right]$$](changeofbasis28.png)

The vectors are independent. Three independent vectors in $\mathbb{R}^3$ must form a basis.$\quad\square$
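The row reduction in (a) can also be checked mechanically. Here is a minimal sketch in Python using exact fractions; the function name `rank` is my own choice for illustration, and the code simply counts the pivots left after Gauss-Jordan elimination. Three vectors in $\mathbb{R}^3$ are independent exactly when this count is 3.

```python
from fractions import Fraction

def rank(rows):
    """Count pivots after Gauss-Jordan elimination (exact arithmetic)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = 0
    for col in range(len(m[0])):
        # Look for a nonzero entry in this column at or below the next pivot row.
        pivot = next((r for r in range(pivots, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[pivots], m[pivot] = m[pivot], m[pivots]
        lead = m[pivots][col]
        m[pivots] = [x / lead for x in m[pivots]]
        # Clear the rest of the column.
        for r in range(len(m)):
            if r != pivots and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivots])]
        pivots += 1
    return pivots

# The elements of the basis from (a), written as rows.
basis_rows = [[1, 0, 1], [2, -1, 1], [3, 1, 0]]
print(rank(basis_rows))  # 3, so the vectors are independent
```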

(b) Find the components of $(15, -1, 2)$ relative to ${\cal B}$.

I must find numbers a, b, and c such that

![$$\left[\matrix{15 \cr -1 \cr 2 \cr}\right] = a \cdot \left[\matrix{1 \cr 0 \cr 1 \cr}\right] + b\cdot \left[\matrix{2 \cr -1 \cr 1 \cr}\right] + c\cdot \left[\matrix{3 \cr 1 \cr 0 \cr}\right].$$](changeofbasis32.png)

This is equivalent to the matrix equation

![$$\left[\matrix{1 & 2 & 3 \cr 0 & -1 & 1 \cr 1 & 1 & 0 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{15 \cr -1 \cr 2 \cr}\right].$$](changeofbasis33.png)

Set up the matrix for the system and row reduce to solve:

![$$\left[\matrix{1 & 2 & 3 & 15 \cr 0 & -1 & 1 & -1 \cr 1 & 1 & 0 & 2 \cr}\right] \quad \to \quad \left[\matrix{1 & 0 & 0 & -2 \cr 0 & 1 & 0 & 4 \cr 0 & 0 & 1 & 3 \cr}\right]$$](changeofbasis34.png)

This says $a = -2$, $b = 4$, and $c = 3$. Therefore, the components of $(15, -1, 2)$ relative to ${\cal B}$ are $(-2, 4, 3)$.$\quad\square$
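As a check on (b), the system can be solved by the same row reduction in code. This is only a sketch: `solve` is a hypothetical helper name, and it assumes the coefficient matrix is square and invertible (which holds here, since its columns form a basis).

```python
from fractions import Fraction

def solve(matrix, rhs):
    """Solve matrix * x = rhs by Gauss-Jordan elimination.

    Assumes matrix is square and invertible; a singular matrix
    makes the pivot search raise StopIteration.
    """
    n = len(matrix)
    # Build the augmented matrix [matrix | rhs] with exact fractions.
    aug = [[Fraction(x) for x in row] + [Fraction(b)]
           for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [x / lead for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n] for row in aug]

# Columns of M are the elements of the basis from (a).
M = [[1, 2, 3], [0, -1, 1], [1, 1, 0]]
components = solve(M, [15, -1, 2])
print([str(c) for c in components])  # ['-2', '4', '3']
```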

(c) Write $(7, -2, 2)_{\cal B}$ in terms of the standard basis.

I'll write $v_{\cal B}$ for v relative to ${\cal B}$ and $v_{\rm std}$ for v relative to the standard basis. The matrix equation in (b)

![$$\left[\matrix{1 & 2 & 3 \cr 0 & -1 & 1 \cr 1 & 1 & 0 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{15 \cr -1 \cr 2 \cr}\right]$$](changeofbasis43.png)

says

![$$\left[\matrix{1 & 2 & 3 \cr 0 & -1 & 1 \cr 1 & 1 & 0 \cr}\right] v_{\cal B} = v_{\rm std}.$$](changeofbasis44.png)

In (b), I knew $v_{\rm std}$ and I wanted $v_{\cal B}$; this time it's the other way around. So I simply put $(7, -2, 2)$ into the $v_{\cal B}$ spot and multiply:

![$$\left[\matrix{1 & 2 & 3 \cr 0 & -1 & 1 \cr 1 & 1 & 0 \cr}\right] \left[\matrix{7 \cr -2 \cr 2 \cr}\right] = \left[\matrix{9 \cr 4 \cr 5 \cr}\right].\quad\halmos$$](changeofbasis49.png)
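The multiplication in (c) is easy to reproduce. In the sketch below, `columns_to_matrix` and `mat_vec` are names I made up for illustration: the first places the basis vectors in the columns of a matrix, and the second is an ordinary matrix-vector product.

```python
def columns_to_matrix(vectors):
    """Return the matrix (as a list of rows) whose columns are the given vectors."""
    return [list(row) for row in zip(*vectors)]

def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

basis = [(1, 0, 1), (2, -1, 1), (3, 1, 0)]
B_to_std = columns_to_matrix(basis)

print(B_to_std)                       # [[1, 2, 3], [0, -1, 1], [1, 1, 0]]
print(mat_vec(B_to_std, [7, -2, 2]))  # [9, 4, 5]
```

Feeding in the components found in (b) recovers the original vector: `mat_vec(B_to_std, [-2, 4, 3])` gives `[15, -1, 2]`.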

Let me generalize the observation I made in (c).

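Let ${\cal B} = \{v_1, \ldots, v_n\}$ be a basis for $\mathbb{R}^n$, and let M be the $n \times n$ matrix whose columns are $v_1, \ldots, v_n$. Then for every vector v,

$$M v_{\cal B} = v_{\rm std}.$$

That is, left multiplication by M translates vectors written in terms of ${\cal B}$ to vectors written in terms of the standard basis.

I'll write $[{\cal B} \to {\rm std}]$ for M, and call it a translation matrix. Again, $[{\cal B} \to {\rm std}]$ translates vectors written in terms of ${\cal B}$ to vectors written in terms of the standard basis.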

The inverse of a square matrix M is a matrix $M^{-1}$ such that $M M^{-1} = M^{-1} M = I$, where I is the identity matrix. If I multiply the equation $M v_{\cal B} = v_{\rm std}$ on the left by $M^{-1}$, I get

$$v_{\cal B} = M^{-1} v_{\rm std}.$$

In words, left multiplication by $M^{-1}$ translates vectors written in terms of the standard basis to vectors written in terms of ${\cal B}$. This means that $[{\rm std} \to {\cal B}] = M^{-1}$. Dispensing with M, I can say that

$$[{\rm std} \to {\cal B}] = [{\cal B} \to {\rm std}]^{-1}.$$

In the example above, left multiplication by the following matrix translates vectors from ${\cal B}$ to the standard basis:

![$$[{\cal B} \to {\rm std}] = \left[\matrix{ 1 & 2 & 3 \cr 0 & -1 & 1 \cr 1 & 1 & 0 \cr}\right].$$](changeofbasis67.png)

The inverse of $[{\cal B} \to {\rm std}]$ is

![$$[{\rm std} \to {\cal B}] = [{\cal B} \to {\rm std}]^{-1} = \left[\matrix{-\dfrac{1}{4} & \dfrac{3}{4} & \dfrac{5}{4} \cr \noalign{\vskip2pt} \dfrac{1}{4} & -\dfrac{3}{4} & -\dfrac{1}{4} \cr \noalign{\vskip2pt} \dfrac{1}{4} & \dfrac{1}{4} & -\dfrac{1}{4} \cr}\right].$$](changeofbasis68.png)

Left multiplication by this matrix translates vectors from the standard basis to ${\cal B}$.
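The inverse above can be recomputed by row reducing the block matrix $[M \mid I]$. The following is a sketch under the assumption that the input is square and invertible; `inverse` and `mat_vec` are hypothetical helper names, and exact fractions are used so the entries come out as quarters rather than decimals.

```python
from fractions import Fraction

def inverse(matrix):
    """Invert a square matrix by Gauss-Jordan elimination on [matrix | I].

    Assumes the matrix is invertible; a singular matrix makes the
    pivot search raise StopIteration.
    """
    n = len(matrix)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [x / lead for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The right-hand block now holds the inverse.
    return [row[n:] for row in aug]

def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

B_to_std = [[1, 2, 3], [0, -1, 1], [1, 1, 0]]
std_to_B = inverse(B_to_std)

for row in std_to_B:
    print([str(x) for x in row])
# ['-1/4', '3/4', '5/4']
# ['1/4', '-3/4', '-1/4']
# ['1/4', '1/4', '-1/4']

# Translating (15, -1, 2) from the standard basis gives its components from (b).
print([str(x) for x in mat_vec(std_to_B, [15, -1, 2])])  # ['-2', '4', '3']
```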

Example. (Translating vectors from one basis to another) The translation analogy is a useful one, since it makes it easy to see how to set up arbitrary changes of basis.

For example, suppose

![$${\cal B}' = \left\{\left[\matrix{1 \cr -1 \cr 2 \cr}\right], \left[\matrix{2 \cr 0 \cr 1 \cr}\right], \left[\matrix{1 \cr 1 \cr 1 \cr}\right]\right\}$$](changeofbasis70.png)

is another basis for $\mathbb{R}^3$.

Here's how to translate vectors from ${\cal B}'$ to ${\cal B}$:

$$[{\cal B}' \to {\cal B}] = [{\rm std} \to {\cal B}] [{\cal B}' \to {\rm std}].$$

Remember that the product $[{\rm std} \to {\cal B}] [{\cal B}' \to {\rm std}]$ is read from right to left! Thus, the composite operation $[{\rm std} \to {\cal B}] [{\cal B}' \to {\rm std}]$ translates a ${\cal B}'$ vector to a standard vector, and then translates the resulting standard vector to a ${\cal B}$ vector. Moreover, I have matrices which perform each of the right-hand operations.

This matrix translates vectors from ${\cal B}'$ to the standard basis:

![$$\left[\matrix{1 & 2 & 1 \cr -1 & 0 & 1 \cr 2 & 1 & 1 \cr}\right].$$](changeofbasis80.png)

This matrix translates vectors from the standard basis to ${\cal B}$:

![$$\left[\matrix{-\dfrac{1}{4} & \dfrac{3}{4} & \dfrac{5}{4} \cr \noalign{\vskip2pt} \dfrac{1}{4} & -\dfrac{3}{4} & -\dfrac{1}{4} \cr \noalign{\vskip2pt} \dfrac{1}{4} & \dfrac{1}{4} & -\dfrac{1}{4} \cr}\right].$$](changeofbasis82.png)

Therefore, multiplication by the following matrix will translate vectors from ${\cal B}'$ to ${\cal B}$:

![$$\left[\matrix{-\dfrac{1}{4} & \dfrac{3}{4} & \dfrac{5}{4} \cr \noalign{\vskip2pt} \dfrac{1}{4} & -\dfrac{3}{4} & -\dfrac{1}{4} \cr \noalign{\vskip2pt} \dfrac{1}{4} & \dfrac{1}{4} & -\dfrac{1}{4} \cr}\right] \left[\matrix{1 & 2 & 1 \cr -1 & 0 & 1 \cr 2 & 1 & 1 \cr}\right] = \left[\matrix{\dfrac{3}{2} & \dfrac{3}{4} & \dfrac{7}{4} \cr \noalign{\vskip2pt} \dfrac{1}{2} & \dfrac{1}{4} & -\dfrac{3}{4} \cr \noalign{\vskip2pt} -\dfrac{1}{2} & \dfrac{1}{4} & \dfrac{1}{4} \cr}\right]. \quad\halmos$$](changeofbasis85.png)
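The composite translation can be checked the same way. This sketch assumes both bases are given as lists of vectors; `inverse` and `mat_mul` are illustrative helper names, and the inverse is again computed by Gauss-Jordan elimination with exact fractions.

```python
from fractions import Fraction

def inverse(matrix):
    """Invert a square matrix by Gauss-Jordan elimination (assumes invertibility)."""
    n = len(matrix)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [x / lead for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

# Basis vectors go in as columns, so transpose the lists of vectors.
B = [list(r) for r in zip((1, 0, 1), (2, -1, 1), (3, 1, 0))]         # [B -> std]
B_prime = [list(r) for r in zip((1, -1, 2), (2, 0, 1), (1, 1, 1))]   # [B' -> std]

# Read right to left: first B' -> std, then std -> B.
Bp_to_B = mat_mul(inverse(B), B_prime)

for row in Bp_to_B:
    print([str(x) for x in row])
# ['3/2', '3/4', '7/4']
# ['1/2', '1/4', '-3/4']
# ['-1/2', '1/4', '1/4']
```

As a sanity check, the first column says that the first element of ${\cal B}'$ has components $(3/2, 1/2, -1/2)$ relative to ${\cal B}$, and indeed $\frac{3}{2}(1, 0, 1) + \frac{1}{2}(2, -1, 1) - \frac{1}{2}(3, 1, 0) = (1, -1, 2)$.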

Copyright 2008 by Bruce Ikenaga