Definition. Let A be an $m \times n$ matrix over a field $F$. The column vectors of A are the vectors in $F^m$ corresponding to the columns of A.

The column space of A is the subspace of $F^m$ spanned by the column vectors of A.

For example, consider the real matrix

![$$A = \left[\matrix{1 & 0 \cr 0 & 1 \cr 0 & 0 \cr}\right].$$](column-space4.png)

The column vectors are $\left[\matrix{1 \cr 0 \cr 0 \cr}\right]$ and $\left[\matrix{0 \cr 1 \cr 0 \cr}\right]$. The column space is the subspace of $\mathbb{R}^3$ spanned by these vectors. Thus, the column space consists of all vectors of the form

$$a \cdot \left[\matrix{1 \cr 0 \cr 0 \cr}\right] + b \cdot \left[\matrix{0 \cr 1 \cr 0 \cr}\right] = \left[\matrix{a \cr b \cr 0 \cr}\right], \quad a, b \in \mathbb{R}.$$

We've seen how to find a basis for the row space of a matrix. We'll now give an algorithm for finding a basis for the column space.

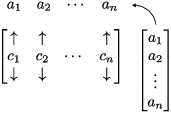

First, here's a reminder about matrix multiplication. If A is an $m \times n$ matrix and $v \in F^n$, then you can think of the multiplication $A v$ as multiplying the columns of A by the components of v. This means that if $c_i$ is the i-th column of A and $v = (a_1, a_2, \ldots, a_n)$, the product $A v$ is a linear combination of the columns of A:

![$$\left[\matrix{ \uparrow & \uparrow & & \uparrow \cr c_1 & c_2 & \cdots & c_n \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{a_1 \cr a_2 \cr \vdots \cr a_n \cr}\right] = a_1 c_1 + a_2 c_2 + \cdots + a_n c_n.$$](column-space16.png)
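Here is a small sketch in plain Python that checks this identity on the $3 \times 2$ matrix from the example above. The function names `mat_vec` and `column_combination` and the test vector are made up for illustration.

```python
def mat_vec(A, v):
    """Usual row-by-row product A v, with A given as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def column_combination(A, v):
    """Compute v[0]*c_1 + v[1]*c_2 + ... where c_j is the j-th column of A."""
    m = len(A)
    result = [0] * m
    for j, coeff in enumerate(v):
        for i in range(m):
            result[i] += coeff * A[i][j]
    return result

A = [[1, 0],
     [0, 1],
     [0, 0]]
v = [5, -2]  # arbitrary test vector (an assumption, not from the notes)

print(mat_vec(A, v))             # [5, -2, 0]
print(column_combination(A, v))  # [5, -2, 0]
```

Both computations give the same vector, and its last component is 0, as expected for an element of this column space.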

Proposition. Let A be a matrix, and let R be the row reduced echelon matrix which is row equivalent to A. Suppose the leading entries of R occur in columns $j_1, \ldots, j_p$, where $j_1 < \cdots < j_p$, and let $c_i$ denote the i-th column of A. Then $\{c_{j_1}, \ldots, c_{j_p}\}$ is independent.

Proof. Suppose that

$$a_{j_1} c_{j_1} + \cdots + a_{j_p} c_{j_p} = 0.$$

Form the vector $v = (v_i)$, where

$$v_i = \left\{\matrix{a_i & \hbox{if}\ i \in \{j_1, \ldots, j_p\} \cr 0 & \hbox{otherwise} \cr}\right.$$

The equation above implies that $A v = 0$.

It follows that v is in the solution space of the system $A x = 0$. Since $R x = 0$ has the same solution space, $R v = 0$. Let $c'_i$ denote the i-th column of R. Then

$$a_{j_1} c'_{j_1} + \cdots + a_{j_p} c'_{j_p} = 0.$$

However, since R is in row reduced echelon form, $c'_{j_k}$ is a vector with 1 in the k-th row and 0's elsewhere. Hence, $\{c'_{j_1}, \ldots, c'_{j_p}\}$ is independent, and $a_{j_1} = \cdots = a_{j_p} = 0$. $\quad\halmos$

The proof provides an algorithm for finding a basis for the column space of a matrix. Specifically, row reduce the matrix A to a row reduced echelon matrix R. If the leading entries of R occur in columns $j_1, \ldots, j_p$, then consider the columns $c_{j_1}, \ldots, c_{j_p}$ of A. These columns form a basis for the column space of A. (The proposition shows that they are independent. They also span: the column space has dimension p, because the column rank equals the row rank, as proved below, and the row rank is the number of leading entries.)

Example. Find a basis for the column space of the real matrix

![$$\left[\matrix{ 1 & -2 & 3 & 1 & 1 \cr 2 & 1 & 0 & 3 & 1 \cr 0 & -5 & 6 & -1 & 1 \cr 7 & 1 & 3 & 10 & 4 \cr}\right].$$](column-space35.png)

Row reduce the matrix:

![$$\left[\matrix{ 1 & -2 & 3 & 1 & 1 \cr 2 & 1 & 0 & 3 & 1 \cr 0 & -5 & 6 & -1 & 1 \cr 7 & 1 & 3 & 10 & 4 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 0.6 & 1.4 & 0.6 \cr 0 & 1 & -1.2 & 0.2 & -0.2 \cr 0 & 0 & 0 & 0 & 0 \cr 0 & 0 & 0 & 0 & 0 \cr}\right]$$](column-space36.png)

The leading entries occur in columns 1 and 2. Therefore, $(1, 2, 0, 7)$ and $(-2, 1, -5, 1)$ form a basis for the column space of the matrix. $\quad\halmos$
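The algorithm can be sketched in a few lines of Python. This is only a minimal illustration, assuming exact rational arithmetic via the standard `fractions` module; the function names `rref_pivots` and `column_space_basis` are made up for this sketch, and column indices are 0-based in the code.

```python
from fractions import Fraction

def rref_pivots(A):
    """Return (R, pivots): the row reduced echelon form of A and the
    0-based indices of the columns that contain leading entries."""
    R = [[Fraction(x) for x in row] for row in A]
    m, n = len(R), len(R[0])
    pivots = []
    r = 0  # row where the next leading entry will be placed
    for c in range(n):
        # Look for a nonzero entry in column c at or below row r.
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]      # make the leading entry 1
        for i in range(m):                   # clear the rest of column c
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [x - f * y for x, y in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def column_space_basis(A):
    """Columns of the ORIGINAL matrix A sitting in the leading-entry columns."""
    _, pivots = rref_pivots(A)
    return [[row[j] for row in A] for j in pivots]

A = [[1, -2, 3, 1, 1],
     [2, 1, 0, 3, 1],
     [0, -5, 6, -1, 1],
     [7, 1, 3, 10, 4]]

print(column_space_basis(A))  # [[1, 2, 0, 7], [-2, 1, -5, 1]]
```

Note that the basis vectors are taken from the original matrix, not from the row reduced echelon matrix.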

Note that if A and B are row equivalent, they don't necessarily have the same column space. For example,

$$\left[\matrix{1 & 2 & 1 \cr 1 & 2 & 1 \cr}\right] \matrix{\to \cr r_{2} \to r_{2} - r_{1} \cr} \left[\matrix{1 & 2 & 1 \cr 0 & 0 & 0 \cr}\right]$$

However, all the elements of the column space of the second matrix have their second component equal to 0; this is not true of the column space of the first matrix, which contains $(1, 1)$.

Example. Find a basis for the column space of the following matrix over $\mathbb{Z}_3$:

![$$A = \left[\matrix{ 0 & 1 & 1 & 0 \cr 1 & 2 & 1 & 0 \cr 2 & 1 & 2 & 1 \cr}\right].$$](column-space41.png)

Row reduce the matrix:

![$$\left[\matrix{ 0 & 1 & 1 & 0 \cr 1 & 2 & 1 & 0 \cr 2 & 1 & 2 & 1 \cr}\right] \matrix{\to \cr r_{1} \leftrightarrow r_{2} \cr} \left[\matrix{ 1 & 2 & 1 & 0 \cr 0 & 1 & 1 & 0 \cr 2 & 1 & 2 & 1 \cr}\right] \matrix{\to \cr r_{3} \to r_{3} + r_{1} \cr}$$](column-space42.png)

![$$\left[\matrix{ 1 & 2 & 1 & 0 \cr 0 & 1 & 1 & 0 \cr 0 & 0 & 0 & 1 \cr}\right] \matrix{\to \cr r_{1} \to r_{1} + r_{2} \cr} \left[\matrix{ 1 & 0 & 2 & 0 \cr 0 & 1 & 1 & 0 \cr 0 & 0 & 0 & 1 \cr}\right]$$](column-space43.png)

The leading entries occur in columns 1, 2, and 4. Hence, columns 1, 2, and 4 of A are independent and form a basis for the column space of A:

![$$\left\{\left[\matrix{0 \cr 1 \cr 2 \cr}\right], \left[\matrix{1 \cr 2 \cr 1 \cr}\right], \left[\matrix{0 \cr 0 \cr 1 \cr}\right]\right\} \quad\halmos$$](column-space44.png)
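The same computation can be sketched in Python with arithmetic mod a prime p. This is a rough illustration only: the function name `rref_mod_p` is made up, column indices are 0-based, and the modular inverse uses the built-in `pow(a, -1, p)`, which assumes Python 3.8 or later.

```python
def rref_mod_p(A, p):
    """Row reduce A over Z_p (p prime). Returns (R, pivots) with
    0-based indices of the columns that contain leading entries."""
    R = [[x % p for x in row] for row in A]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        # Look for a nonzero entry in column c at or below row r.
        k = next((i for i in range(r, m) if R[i][c] != 0), None)
        if k is None:
            continue
        R[r], R[k] = R[k], R[r]
        inv = pow(R[r][c], -1, p)            # inverse of the leading entry mod p
        R[r] = [(x * inv) % p for x in R[r]]
        for i in range(m):                   # clear the rest of column c
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [(x - f * y) % p for x, y in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

A = [[0, 1, 1, 0],
     [1, 2, 1, 0],
     [2, 1, 2, 1]]

R, pivots = rref_mod_p(A, 3)
print(R)       # [[1, 0, 2, 0], [0, 1, 1, 0], [0, 0, 0, 1]]
print(pivots)  # [0, 1, 3], i.e. columns 1, 2, and 4

basis = [[row[j] for row in A] for j in pivots]
print(basis)   # [[0, 1, 2], [1, 2, 1], [0, 0, 1]]
```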

I showed earlier that you can add vectors to an independent set to get a basis. The column space basis algorithm shows how to remove vectors from a spanning set to get a basis.

Example. Find a subset of the following set of vectors which forms a basis for $\mathbb{R}^3$.

![$$\left\{ \left[\matrix{1 \cr 2 \cr 1 \cr}\right], \left[\matrix{-1 \cr 1 \cr -1 \cr}\right], \left[\matrix{1 \cr 1 \cr 1 \cr}\right], \left[\matrix{4 \cr -1 \cr 2 \cr}\right]\right\}$$](column-space47.png)

Make a matrix with the vectors as columns and row reduce:

![$$\left[\matrix{ 1 & -1 & 1 & 4 \cr 2 & 1 & 1 & -1 \cr 1 & -1 & 1 & 2 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & \dfrac{2}{3} & 0 \cr \noalign{\vskip2pt} 0 & 1 & -\dfrac{1}{3} & 0 \cr \noalign{\vskip2pt} 0 & 0 & 0 & 1 \cr}\right]$$](column-space48.png)

The leading entries occur in columns 1, 2, and 4. Therefore, the corresponding columns of the original matrix are independent, and form a basis for $\mathbb{R}^3$:

![$$\left\{ \left[\matrix{1 \cr 2 \cr 1 \cr}\right], \left[\matrix{-1 \cr 1 \cr -1 \cr}\right], \left[\matrix{4 \cr -1 \cr 2 \cr}\right]\right\}.\quad\halmos$$](column-space50.png)
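To see why the third vector is the one that gets discarded, you can read a dependence relation off the row reduced echelon matrix. Its third column is $\dfrac{2}{3}$ times the first column minus $\dfrac{1}{3}$ times the second. Since row operations don't change the solutions of $A x = 0$, the same relation should hold among the original vectors. A quick check:

$$\dfrac{2}{3} \left[\matrix{1 \cr 2 \cr 1 \cr}\right] - \dfrac{1}{3} \left[\matrix{-1 \cr 1 \cr -1 \cr}\right] = \left[\matrix{\dfrac{2}{3} + \dfrac{1}{3} \cr \noalign{\vskip2pt} \dfrac{4}{3} - \dfrac{1}{3} \cr \noalign{\vskip2pt} \dfrac{2}{3} + \dfrac{1}{3} \cr}\right] = \left[\matrix{1 \cr 1 \cr 1 \cr}\right]$$

So the third vector is already in the span of the first two, and removing it doesn't change the span.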

Definition. Let A be a matrix. The column rank of A is the dimension of the column space of A.

This is really just a temporary definition, since we'll show that the column rank is the same as the rank we defined earlier (the dimension of the row space).

Proposition. Let A be a matrix. Then

$$\hbox{row rank}(A) = \hbox{column rank}(A).$$

Proof. Let R be the row reduced echelon matrix which is row equivalent to A. Suppose the leading entries of R occur in columns $j_1, \ldots, j_p$, where $j_1 < \cdots < j_p$, and let $c_i$ denote the i-th column of A. By the proposition proved above, $\{c_{j_1}, \ldots, c_{j_p}\}$ is independent. There is one vector in this set for each leading entry, and the number of leading entries equals the row rank. Therefore,

$$\hbox{column rank}(A) \ge \hbox{row rank}(A).$$

Now consider $A^T$. This is A with the rows and columns swapped, so

$$\hbox{row rank}(A^T) = \hbox{column rank}(A),$$

$$\hbox{column rank}(A^T) = \hbox{row rank}(A).$$

Applying the first part of the proof to $A^T$,

$$\hbox{row rank}(A) = \hbox{column rank}(A^T) \ge \hbox{row rank}(A^T) = \hbox{column rank}(A).$$

Therefore,

$$\hbox{column rank}(A) = \hbox{row rank}(A). \quad\halmos$$
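As a sanity check (not a proof), here is a short Python sketch that computes the row rank of a matrix and of its transpose by elimination. The row rank of the transpose is the column rank of the original matrix. The helper names `rank` and `transpose` are made up for illustration, and the matrix is the $4 \times 5$ real matrix from the example above.

```python
from fractions import Fraction

def rank(A):
    """Row rank of A: the number of leading entries after forward elimination."""
    M = [[Fraction(x) for x in row] for row in A]
    m, n = len(M), len(M[0])
    r = 0
    for c in range(n):
        # Look for a nonzero entry in column c at or below row r.
        p = next((i for i in range(r, m) if M[i][c] != 0), None)
        if p is None:
            continue
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, m):            # clear the entries below the pivot
            f = M[i][c] / M[r][c]
            M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        r += 1
        if r == m:
            break
    return r

def transpose(A):
    """Swap the rows and columns of A."""
    return [list(col) for col in zip(*A)]

A = [[1, -2, 3, 1, 1],
     [2, 1, 0, 3, 1],
     [0, -5, 6, -1, 1],
     [7, 1, 3, 10, 4]]

print(rank(A), rank(transpose(A)))  # 2 2
```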

Proposition. Let A, B, P and Q be matrices, where P and Q are invertible. Suppose $A = P B Q$. Then

$$\hbox{rank}\, A = \hbox{rank}\, B.$$

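Proof. I showed earlier that $\hbox{rank}\, M N \le \hbox{rank}\, N$. This was row rank; a similar proof shows that

$$\hbox{column rank}(M N) \le \hbox{column rank}(M).$$

Since row rank and column rank are the same, $\hbox{rank}\, M N \le \hbox{rank}\, M$.

Now

$$\hbox{rank}\, A = \hbox{rank}\, P B Q \le \hbox{rank}\, B Q \le \hbox{rank}\, B.$$

But $B = P^{-1} A Q^{-1}$, so repeating the computation gives $\hbox{rank}\, B \le \hbox{rank}\, A$. Therefore, $\hbox{rank}\, A = \hbox{rank}\, B$. $\quad\halmos$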
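Here is a small numerical illustration of this result in Python. The matrices `B`, `P`, and `Q` below are hypothetical examples chosen for this sketch (P and Q have determinant 1, so they are invertible), and the helper names `rank` and `matmul` are made up.

```python
from fractions import Fraction

def rank(A):
    """Number of leading entries after forward elimination."""
    M = [[Fraction(x) for x in row] for row in A]
    m, n = len(M), len(M[0])
    r = 0
    for c in range(n):
        p = next((i for i in range(r, m) if M[i][c] != 0), None)
        if p is None:
            continue
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, m):            # clear the entries below the pivot
            f = M[i][c] / M[r][c]
            M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        r += 1
        if r == m:
            break
    return r

def matmul(X, Y):
    """Matrix product of X and Y, both given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)]
            for row in X]

B = [[1, 2, 1],
     [2, 4, 2],
     [0, 1, 1]]   # second row is twice the first, so the rank is 2
P = [[1, 1, 0],
     [0, 1, 0],
     [2, 0, 1]]   # determinant 1
Q = [[1, 0, 2],
     [0, 1, 1],
     [0, 0, 1]]   # upper triangular, determinant 1

A = matmul(matmul(P, B), Q)
print(A)                 # [[3, 6, 15], [2, 4, 10], [2, 5, 12]]
print(rank(A), rank(B))  # 2 2
```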

Copyright 2023 by Bruce Ikenaga