Determinants are functions which take matrices as inputs and produce numbers. They are of enormous importance in linear algebra, but perhaps you've also seen them in other courses. They're used to define the cross product of two 3-dimensional vectors. They appear in Jacobians which occur in the change-of-variables formula for multiple integrals.

Determinants take as inputs $n \times n$ (square) matrices with entries in R, where R is a commutative ring with identity. The set of such matrices is denoted $M(n, R)$. (I will be careful to prove everything for a commutative ring with identity, but for many things, you can pretend that R is just the real numbers $\mathbb{R}$ if it helps you to understand.)

In this section, I'll define determinants as functions satisfying three axioms. Mathematicians often proceed in this way: Define an object by identifying properties which characterize it, rather than simply writing down a formula.

Definition. A determinant function is a function $D: M(n, R) \to R$ which satisfies the following axioms:

1. D is a linear function in each row. That is, if $a \in R$ and $x, y \in R^n$,

![$$D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & a x + y & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = a \cdot D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] + D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right].$$](determinants-axioms7.png)

2. A matrix with two equal rows has determinant 0:

![$$D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = 0.$$](determinants-axioms8.png)

3. $D(I) = 1$, where I is the $n \times n$ identity matrix.
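Since the axioms are properties rather than a formula, you can test a candidate function against them. Here is a minimal Python sketch (the names `check_axioms`, `det2`, and `diag_product` are hypothetical, chosen for illustration) that spot-checks Axioms 1 to 3 on random integer matrices. As candidates it uses the familiar $2 \times 2$ formula $ad - bc$, which the text has not derived yet and is simply assumed here, and the product of the diagonal entries, which is linear in each row and sends I to 1 but is not a determinant function. Random checks can refute a candidate, but they cannot prove that it satisfies the axioms.

```python
import random

def det2(A):
    # Assumed candidate: the familiar 2x2 formula ad - bc (not derived yet in the text).
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]

def diag_product(A):
    # Hypothetical non-example: the product of the diagonal entries.
    result = 1
    for i in range(len(A)):
        result *= A[i][i]
    return result

def replace_row(A, i, row):
    # Copy of A with row i replaced by `row`; the other rows are unchanged.
    return [list(row) if k == i else list(r) for k, r in enumerate(A)]

def check_axioms(D, n, trials=200, seed=0):
    """Spot-check Axioms 1-3 for a candidate D on random n x n integer matrices.
    A False entry is a genuine counterexample; True entries are only evidence."""
    rng = random.Random(seed)

    def rand_row():
        return [rng.randint(-9, 9) for _ in range(n)]

    identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    report = {"linear": True, "equal_rows_zero": True, "identity_one": D(identity) == 1}
    for _ in range(trials):
        A = [rand_row() for _ in range(n)]
        i = rng.randrange(n)
        x, y, a = rand_row(), rand_row(), rng.randint(-9, 9)
        combo = [a * xs + ys for xs, ys in zip(x, y)]
        # Axiom 1: D(..., a x + y, ...) = a D(..., x, ...) + D(..., y, ...) in row i.
        if D(replace_row(A, i, combo)) != a * D(replace_row(A, i, x)) + D(replace_row(A, i, y)):
            report["linear"] = False
        # Axiom 2: copy row i into some other row j; D should then be 0.
        if n > 1:
            j = (i + 1 + rng.randrange(n - 1)) % n
            if D(replace_row(A, j, A[i])) != 0:
                report["equal_rows_zero"] = False
    return report

print(check_axioms(det2, 2))
print(check_axioms(diag_product, 2))
```

With these defaults, `det2` should pass all three checks, while `diag_product` passes the linearity and identity checks but fails the equal-rows check (for example, it gives 2 on the matrix whose rows are both $(1, 2)$).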

Note: Later on, you'll see the following standard notations instead of "D" for determinants. You can either write "det" in place of D, or put vertical bars around the matrix:

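$$D \left[\matrix{ a & b \cr c & d \cr}\right] = \det \left[\matrix{ a & b \cr c & d \cr}\right] = \left|\matrix{ a & b \cr c & d \cr}\right|.$$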

For now, I'll use "D".

If you've never seen something defined this way, you might be a bit uneasy. Am I going to give you a formula? Not yet.

First, a formula may be fine for computing things, but it doesn't really tell you what the thing is. In that respect, it's superficial, like judging someone by their appearance. Giving axioms for a thing can give a deeper view of what the thing is and what it does: its essence.

Second, defining determinants using axioms makes it a lot easier to prove many of the important properties of determinants --- for example, that the determinant of a product of matrices is the product of the determinants.

You still want a formula or a recipe for computing determinants --- right? Well, in some of the examples below, we'll see how you can compute determinants just using the axioms, or using row reduction. Be patient, and you'll feel better shortly!

Let's see what the axioms look like in particular cases.

The first axiom (linearity) is probably the hardest to understand. It allows you to add or subtract, or move constants in and out, in a single row, assuming that all the other rows stay the same. It is easier to show you what this means than to describe it in words.

For example, here are two determinants being combined into one. The two third rows are added, and the other two rows (which must be the same in both matrices) are unchanged:

![$$D \left[\matrix{ a & b & c \cr d & e & f \cr x_1 & x_2 & x_3 \cr}\right] + D \left[\matrix{ a & b & c \cr d & e & f \cr y_1 & y_2 & y_3 \cr}\right] = D \left[\matrix{ a & b & c \cr d & e & f \cr x_1 + y_1 & x_2 + y_2 & x_3 + y_3 \cr}\right].$$](determinants-axioms12.png)

In row 3, I used the fact that

$$(x_1, x_2, x_3) + (y_1, y_2, y_3) = (x_1 + y_1, x_2 + y_2, x_3 + y_3).$$

You can also take a single determinant apart into two determinants. In this example, we have subtraction instead of addition. All the action takes place in row 2; the first and third rows are the same in all of the matrices.

![$$D \left[\matrix{ a & b & c \cr x_1 - y_1 & x_2 - y_2 & x_3 - y_3 \cr d & e & f \cr}\right] = D \left[\matrix{ a & b & c \cr x_1 & x_2 & x_3 \cr d & e & f \cr}\right] - D \left[\matrix{ a & b & c \cr y_1 & y_2 & y_3 \cr d & e & f \cr}\right].$$](determinants-axioms14.png)

Linearity also allows you to factor a constant out of a single row, in this case row 1:

![$$D \left[\matrix{ k x_1 & k x_2 & k x_3 \cr a & b & c \cr d & e & f \cr}\right] = k \cdot D \left[\matrix{ x_1 & x_2 & x_3 \cr a & b & c \cr d & e & f \cr}\right].$$](determinants-axioms15.png)

You can do the opposite: Multiply a constant outside the determinant into a single row:

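$$k \cdot D \left[\matrix{ a & b & c \cr x_1 & x_2 & x_3 \cr d & e & f \cr}\right] = D \left[\matrix{ a & b & c \cr k x_1 & k x_2 & k x_3 \cr d & e & f \cr}\right].$$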

You could do this as well:

![]()

Here's an example where you "take apart" a determinant using linearity. Notice that the first two rows are the same in all the matrices; all the action takes place in the third row:

![$$D \left[\matrix{ a & b & c \cr d & e & f \cr x_1 + k y_1 & x_2 + k y_2 & x_3 + k y_3\cr}\right] = D \left[\matrix{ a & b & c \cr d & e & f \cr x_1 & x_2 & x_3 \cr}\right] + D \left[\matrix{ a & b & c \cr d & e & f \cr k y_1 & k y_2 & k y_3\cr}\right] =$$](determinants-axioms18.png)

![$$D \left[\matrix{ a & b & c \cr d & e & f \cr x_1 & x_2 & x_3 \cr}\right] + k \cdot D \left[\matrix{ a & b & c \cr d & e & f \cr y_1 & y_2 & y_3\cr}\right].$$](determinants-axioms19.png)

First, I used linearity to break the given determinant up into two determinants. Then I factored k out of the third row of the second determinant.

Perhaps you feel that I haven't really told you what a determinant is, because I haven't given you a formula or recipe for computing a determinant. Those axioms seem pretty abstract. What you'd like is to start with a matrix and produce a number. In fact, the three axioms above are enough to be able to compute determinants (though not very efficiently). Here's an example.

Suppose I have a determinant function D for $2 \times 2$ real matrices, so D satisfies the three axioms above. Using only the axioms, I'll compute

![]()

First, I'll break up the second row into a sum of a multiple of the first row and another vector:

![]()

Then I can use linearity to break the determinant up into two pieces.

![]()

![]()

Notice that in the second equality the first row stays the same, while the second row is split into the two pieces described above: a multiple of the first row, and the other vector.

Notice that linearity also allows me to factor 3 and 5 out of the second rows for the third equality.

The alternating axiom says that a matrix with two equal rows has determinant 0, and that gave me the fifth equality.

Now I do a similar trick with the first row:

![]()

![]()

The second equality used linearity applied to the first row, after writing it as a sum. The third equality used the fact that the determinant of the identity matrix is 1, and used linearity to factor -1 out of the second row. The fourth equality used the fact that the determinant of a matrix with two equal rows is 0.

Thus,

![]()

Notice that we computed a determinant using only the axioms for a determinant. We don't have a formula at the moment (though you may have seen a formula for $2 \times 2$ determinants before). It's true that the computation took a lot of steps, and this is not the best way to do this, but this example gives some evidence that our axioms actually tell what determinants are.

The next two results are often useful in computations.

First, you might suspect that a matrix with an all-zero row has determinant 0, and it's easy to prove using linearity. Rather than give a formal proof, I'll illustrate the idea with a particular example.

![$$D \left[\matrix{ 0 & 0 & 0 \cr 2 & 0 & 17 \cr 7 & -6 & 1 \cr}\right] = D \left[\matrix{ 0 + 0 & 0 + 0 & 0 + 0 \cr 2 & 0 & 17 \cr 7 & -6 & 1 \cr}\right] = D \left[\matrix{ 0 & 0 & 0 \cr 2 & 0 & 17 \cr 7 & -6 & 1 \cr}\right] + D \left[\matrix{ 0 & 0 & 0 \cr 2 & 0 & 17 \cr 7 & -6 & 1 \cr}\right],$$](determinants-axioms32.png)

The last equation says "$x = x + x$", where $x$ is the determinant of the matrix. Subtracting $x$ from both sides gives $x = 0$. So

![$$D \left[\matrix{ 0 & 0 & 0 \cr 2 & 0 & 17 \cr 7 & -6 & 1 \cr}\right] = 0.$$](determinants-axioms35.png)

The same idea can be used to prove the result in general.
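Written out for an $n \times n$ matrix $A$ whose $i$-th row is the zero vector, the argument might read as follows. Since $0 = 0 + 0$ in $R^n$, linearity in row $i$ gives

$$D(A) = D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & 0 + 0 & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = D(A) + D(A),$$

and subtracting $D(A)$ from both sides gives $D(A) = 0$.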

The next result tells us what happens to a determinant when we swap two rows of the matrix.

Lemma. If $D: M(n, R) \to R$ is a function which is linear in the rows (Axiom 1) and is 0 when a matrix has equal rows (Axiom 2), then swapping two rows multiplies the value of D by -1:

![$$D \left[\matrix{ & \vdots & \cr \leftarrow & r_i & \rightarrow \cr & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr}\right] = -D \left[\matrix{ & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr \leftarrow & r_i & \rightarrow \cr & \vdots & \cr}\right].$$](determinants-axioms37.png)

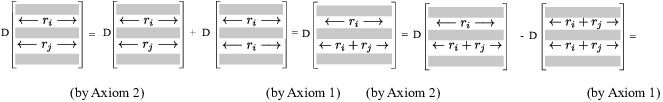

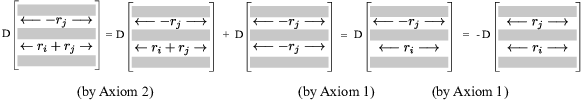

Proof. The proof will use the first and second axioms repeatedly. The idea is to swap rows i and j by adding or subtracting rows.

In the diagrams below, the gray rectangles represent rows which are unchanged and the same in all of the matrices. All the "action" takes place in row i and row j.

Notice that in each addition or subtraction step (the steps that use Axiom 1), only one of row i or row j changes at a time.
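The diagrams prove the lemma by adding and subtracting rows. A shorter route, also using only Axioms 1 and 2, is sketched here; it is a different argument from the one in the diagrams. Only rows i and j are shown, since all other rows are the same in every matrix:

$$0 = D \left[\matrix{ r_i + r_j \cr r_i + r_j \cr}\right] = D \left[\matrix{ r_i \cr r_i \cr}\right] + D \left[\matrix{ r_i \cr r_j \cr}\right] + D \left[\matrix{ r_j \cr r_i \cr}\right] + D \left[\matrix{ r_j \cr r_j \cr}\right] = D \left[\matrix{ r_i \cr r_j \cr}\right] + D \left[\matrix{ r_j \cr r_i \cr}\right].$$

The first equality is Axiom 2 (two equal rows), the second uses linearity in row i and then in row j, and the last drops the two terms with equal rows, again by Axiom 2. Moving one term to the other side gives the lemma.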

Remarks. (a) I'll show later that it's enough to assume (instead of Axiom 2) that $D(A)$ vanishes whenever two adjacent rows of A are equal. (This is a technical point which you can forget about until we need it.)

(b) Suppose that $D: M(n, R) \to R$ is a function satisfying Axioms 1 and 3, and suppose that swapping two rows multiplies the value of D by -1. Must D satisfy Axiom 2? In other words, is "swapping multiplies the value by -1" equivalent to "equal rows means determinant 0"?

Assuming that swapping two rows multiplies the value of D by -1, I have

![$$D(A) = D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = -D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = -D(A).$$](determinants-axioms42.png)

(I swapped the two equal x-rows, which is why the matrix didn't change. But by assumption, this useless swap multiplies D by -1.)

Hence, $2 \cdot D(A) = 0$.

If R is $\mathbb{Q}$, $\mathbb{R}$, $\mathbb{C}$, or $\mathbb{Z}_n$ for n prime and not equal to 2, then $2x = 0$ implies $x = 0$. However, if $R = \mathbb{Z}_2$, then $2x = 0$ for all x. Hence, $2 \cdot D(A) = 0$, no matter what $D(A)$ is. I can't conclude that $D(A) = 0$ in this case. Therefore, Axiom 2 need not hold. You can see, however, that it will hold if R is a field of characteristic other than 2.
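The computation above shows that the argument breaks down in $\mathbb{Z}_2$. For a concrete function where Axiom 2 actually fails, here is a brute-force Python check over $\mathbb{Z}_2$ of a hypothetical function chosen for illustration (it does not come from the text): $D(A)$ = the product of the row sums of $A$, reduced mod 2. It is linear in each row, sends I to 1, and is unchanged by swaps, which in $\mathbb{Z}_2$ is the same as being multiplied by $-1 = 1$. Yet it gives 1 on $\left[\matrix{ 1 & 0 \cr 1 & 0 \cr}\right]$, a matrix with two equal rows.

```python
from itertools import product

P = 2  # arithmetic in Z_2 (integers mod 2)

def row_sum_product(A):
    """Hypothetical function: product of the row sums, reduced mod 2."""
    result = 1
    for row in A:
        result = (result * sum(row)) % P
    return result

def matrices(n):
    """Yield every n x n matrix over Z_2 as a list of row lists."""
    for entries in product(range(P), repeat=n * n):
        yield [list(entries[k * n:(k + 1) * n]) for k in range(n)]

def vectors(n):
    """Yield every vector in (Z_2)^n."""
    return (list(v) for v in product(range(P), repeat=n))

def with_row(A, i, row):
    """Copy of A with row i replaced."""
    return [list(row) if k == i else list(r) for k, r in enumerate(A)]

def check(n=2):
    D = row_sum_product
    identity = [[int(i == j) for j in range(n)] for i in range(n)]
    report = {"axiom1": True, "axiom3": D(identity) == 1, "swap": True, "axiom2": True}
    for A in matrices(n):
        d = D(A)
        for i in range(n):
            # Axiom 1: linearity in row i, for every scalar a and vectors x, y.
            for a in range(P):
                for x in vectors(n):
                    for y in vectors(n):
                        combo = [(a * xs + ys) % P for xs, ys in zip(x, y)]
                        lhs = D(with_row(A, i, combo))
                        rhs = (a * D(with_row(A, i, x)) + D(with_row(A, i, y))) % P
                        if lhs != rhs:
                            report["axiom1"] = False
            for j in range(i + 1, n):
                B = [list(r) for r in A]
                B[i], B[j] = B[j], B[i]
                # Swapping should multiply D by -1; in Z_2, -d equals d.
                if D(B) != (-d) % P:
                    report["swap"] = False
                # Axiom 2: two equal rows should give 0.
                if A[i] == A[j] and d != 0:
                    report["axiom2"] = False
    return report

print(check(2))  # {'axiom1': True, 'axiom3': True, 'swap': True, 'axiom2': False}
```

For $2 \times 2$ matrices the check is exhaustive, so the three `True` entries are verified rather than sampled; for larger n the nested loops grow quickly.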

Fortunately, since I took "equal rows means determinant 0" as an axiom for determinants, and since the lemma shows that this implies that "swapping rows multiplies the determinant by -1", I know that both of these properties will hold for determinant functions.

Example. (Computing determinants using the axioms) Suppose that $D: M(3, R) \to R$ is a determinant function and

![$$D \left[\matrix{ \leftarrow & a_1 & \rightarrow \cr \leftarrow & b & \rightarrow \cr \leftarrow & c & \rightarrow \cr}\right] = 5 \quad\hbox{and}\quad D \left[\matrix{ \leftarrow & a_2 & \rightarrow \cr \leftarrow & b & \rightarrow \cr \leftarrow & c & \rightarrow \cr}\right] = -3.$$](determinants-axioms56.png)

Compute

![$$D \left[\matrix{ \leftarrow & c & \rightarrow \cr \leftarrow & b & \rightarrow \cr \leftarrow & a_1 + 4 a_2 & \rightarrow \cr}\right].$$](determinants-axioms57.png)

![$$D \left[\matrix{ \leftarrow & c & \rightarrow \cr \leftarrow & b & \rightarrow \cr \leftarrow & a_1 + 4 a_2 & \rightarrow \cr}\right] = -D \left[\matrix{ \leftarrow & a_1 + 4 a_2 & \rightarrow \cr \leftarrow & b & \rightarrow \cr \leftarrow & c & \rightarrow \cr}\right] =$$](determinants-axioms58.png)

![$$-\left(D \left[\matrix{ \leftarrow & a_1 & \rightarrow \cr \leftarrow & b & \rightarrow \cr \leftarrow & c & \rightarrow \cr}\right] + 4 D \left[\matrix{ \leftarrow & a_2 & \rightarrow \cr \leftarrow & b & \rightarrow \cr \leftarrow & c & \rightarrow \cr}\right]\right) = -(5 + 4\cdot (-3)) = 7.\quad\halmos$$](determinants-axioms59.png)
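The hand computations above follow one pattern: split rows using linearity, discard matrices with two equal rows, and reorder the remaining rows with swaps until the identity appears. Carried out systematically, with every row split into standard basis vectors $e_1, \ldots, e_n$, that pattern becomes the minimal Python sketch below. The function names are hypothetical, and the method is deliberately naive: it visits $n^n$ terms, so it only makes sense for small matrices.

```python
from itertools import product

def sign_by_swaps(cols):
    """Sort distinct column indices into 0, 1, ..., n-1 by swapping entries.
    Each swap corresponds to swapping two rows of a matrix of basis rows,
    which multiplies D by -1 (the lemma on swapping rows)."""
    cols = list(cols)
    sign = 1
    for i in range(len(cols)):
        while cols[i] != i:
            j = cols[i]
            cols[i], cols[j] = cols[j], cols[i]  # now cols[j] == j
            sign = -sign
    return sign

def det_from_axioms(A):
    """Hypothetical helper: compute D(A) using only the axioms.

    Row k of A equals sum_c A[k][c] * e_c, so linearity (Axiom 1) in every row
    turns D(A) into a sum over all choices (c_0, ..., c_{n-1}) of
    A[0][c_0] * ... * A[n-1][c_{n-1}] * D(e_{c_0}, ..., e_{c_{n-1}}).
    """
    n = len(A)
    total = 0
    for cols in product(range(n), repeat=n):
        if len(set(cols)) < n:
            continue  # two equal basis rows: D = 0 by Axiom 2
        coeff = 1
        for k, c in enumerate(cols):
            coeff *= A[k][c]
        # Sort the basis rows into the identity; D(I) = 1 by Axiom 3.
        total += coeff * sign_by_swaps(cols)
    return total

print(det_from_axioms([[1, 2], [3, 4]]))                   # -2
print(det_from_axioms([[2, 0, 1], [1, 3, 2], [1, 1, 4]]))  # 18
```

Only the terms where the chosen basis rows are all different survive, and those correspond to permutations; this previews the permutation approach mentioned at the end of the section.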

Determinants and elementary row operations.

Elementary row operations are used to reduce a matrix to row reduced echelon form, and as a consequence, to solve systems of linear equations. We can use them to compute determinants with more ease than using the axioms directly --- and, even when we have some better algorithms (like expansion by cofactors), row operations will be useful in simplifying computations. How are determinants affected by elementary row operations?

Adding a multiple of a row to another row does not change the determinant. Suppose, for example, I'm performing the operation $r_i \to r_i + a \cdot r_j$. Let

![$$A = \left[\matrix{ & \vdots & \cr \leftarrow & r_i & \rightarrow \cr & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr}\right].$$](determinants-axioms61.png)

Then

![$$D \left[\matrix{ & \vdots & \cr \leftarrow & r_i + a \cdot r_j & \rightarrow \cr & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr}\right] = D \left[\matrix{ & \vdots & \cr \leftarrow & r_i & \rightarrow \cr & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr}\right] + a \cdot D \left[\matrix{ & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr}\right] = D \left[\matrix{ & \vdots & \cr \leftarrow & r_i & \rightarrow \cr & \vdots & \cr \leftarrow & r_j & \rightarrow \cr & \vdots & \cr}\right] = D(A).$$](determinants-axioms62.png)

Therefore, this kind of row operation leaves the determinant unchanged.

The alternating property implies that swapping two rows multiplies the determinant by -1. For example,

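$$D \left[\matrix{ a & b & c \cr d & e & f \cr x_1 & x_2 & x_3 \cr}\right] = -D \left[\matrix{ d & e & f \cr a & b & c \cr x_1 & x_2 & x_3 \cr}\right].$$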

Our third kind of row operation involves multiplying a row by a number (which must be invertible in the ring from which the entries of the matrix come). So if I wanted to multiply the second row of a real matrix by 19, I could do this:

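$$D \left[\matrix{ a & b & c \cr d & e & f \cr x_1 & x_2 & x_3 \cr}\right] = \frac{1}{19} \cdot D \left[\matrix{ a & b & c \cr 19 d & 19 e & 19 f \cr x_1 & x_2 & x_3 \cr}\right].$$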

Thus, multiplying the second row by 19 leaves a factor of $\frac{1}{19}$ outside.

However, when you're using row operations to compute a determinant, you usually want to factor a number out of a row, which you can do using the linearity axiom. Thus:

![]()
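The effect of each kind of row operation can be spot-checked numerically. The Python sketch below is an illustration, not a proof: as a stand-in for D it uses a brute-force signed sum over permutations (the section only promises later that permutations give a way to compute determinants), and the helpers `add_multiple`, `swap`, and `scale` are hypothetical names. Python's `fractions.Fraction` keeps the factor $\frac{1}{19}$ exact.

```python
from fractions import Fraction
from itertools import permutations

def det(A):
    """Reference D: signed sum over permutations, with the sign taken from the
    parity of the number of inversions. Brute force, for small matrices only."""
    n = len(A)
    total = 0
    for perm in permutations(range(n)):
        inversions = sum(1 for a in range(n) for b in range(a + 1, n) if perm[a] > perm[b])
        term = -1 if inversions % 2 else 1
        for row, col in enumerate(perm):
            term *= A[row][col]
        total += term
    return total

def add_multiple(A, i, j, a):
    """Row operation r_i -> r_i + a * r_j, with i != j."""
    B = [list(r) for r in A]
    B[i] = [x + a * y for x, y in zip(A[i], A[j])]
    return B

def swap(A, i, j):
    """Row operation: swap rows i and j."""
    B = [list(r) for r in A]
    B[i], B[j] = B[j], B[i]
    return B

def scale(A, i, c):
    """Row operation r_i -> c * r_i."""
    B = [list(r) for r in A]
    B[i] = [c * x for x in A[i]]
    return B

A = [[Fraction(v) for v in row] for row in [[2, 1, 3], [0, -1, 4], [5, 2, 1]]]
d = det(A)
assert det(add_multiple(A, 2, 0, Fraction(-5, 2))) == d  # adding a multiple: unchanged
assert det(swap(A, 0, 1)) == -d                          # swap: sign flips
assert d == Fraction(1, 19) * det(scale(A, 1, 19))       # scale row 2 by 19: 1/19 outside
print(d)  # 17
```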

Example. (Computing a determinant using row operations) Suppose D is a determinant function on ![]() real matrices. Use row operations to compute the following determinant:

![]()

![]()

![]()

Example. (Computing a determinant using row operations) Suppose D is a determinant function on ![]() matrices with entries in ![](). Use row operations to compute the following determinant:

![]()

In ![](), I have ![](). So

![]()

![]()

I used the fact that a matrix with an all-zero row has determinant 0.

Your experience with row reducing square matrices (with entries in a field, such as the real numbers) tells you that either the row reduced echelon form will be the identity, or it will have an all-zero row at the bottom. In the second case, we've seen that the determinant is 0. In the first case, there may be constants multiplying the determinant, and the determinant of the identity is 1, so you know the value of the determinant by multiplying everything together.

Row reduction gives you a way of computing determinants that is a little more practical than applying the axioms directly. It should also convince you that, starting with the three determinant axioms, we now have something which takes a square matrix and produces a number.
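The procedure just described can be written as a short Python sketch. The name `det_by_row_reduction` is hypothetical, and the sketch assumes the entries lie in a field (here the rationals, via the standard `fractions.Fraction` type) so that every nonzero pivot can be factored out of its row. It keeps a running factor so that $D(A) = \text{factor} \cdot D(M)$ holds for the working matrix $M$ after every step.

```python
from fractions import Fraction

def det_by_row_reduction(A):
    """Hypothetical helper: D(A) by row reduction, assuming entries lie in a field.

    Invariant: D(A) == factor * D(M) at every step, where M is the working matrix.
    - swapping two rows of M flips the sign of D(M), so factor is negated;
    - dividing a row of M by a nonzero pivot c divides D(M) by c, so factor is multiplied by c;
    - adding a multiple of one row to another leaves D(M) unchanged.
    """
    M = [[Fraction(x) for x in row] for row in A]
    n = len(M)
    factor = Fraction(1)
    for col in range(n):
        # Look for a nonzero entry in this column, at or below the diagonal.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            # Rank < n, so the row reduced echelon form has an all-zero row: D = 0.
            return Fraction(0)
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            factor = -factor
        c = M[col][col]
        M[col] = [x / c for x in M[col]]
        factor *= c
        # Clear the rest of the column by adding multiples of the pivot row.
        for r in range(n):
            if r != col and M[r][col] != 0:
                m = M[r][col]
                M[r] = [x - m * y for x, y in zip(M[r], M[col])]
    # M is now the identity matrix, and D(I) = 1 by Axiom 3.
    return factor

print(det_by_row_reduction([[2, 1, 3], [0, -1, 4], [5, 2, 1]]))  # 17
print(det_by_row_reduction([[1, 2], [2, 4]]))                    # 0
```

`Fraction` keeps the arithmetic exact; with floating-point entries, round-off would make the `!= 0` pivot test unreliable. Over $\mathbb{Z}_p$ for a prime p, the same steps should work if entries are reduced mod p and division by c is replaced by multiplication by its inverse, `pow(c, -1, p)` (Python 3.8 and later).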

There are still some questions we need to address. We need to prove that there really are functions which satisfy the three axioms. (Just being able to compute in particular cases is not a proof.) This is called an existence question. We will answer this question by producing an algorithm which gives a determinant function for square matrices. It is called expansion by cofactors.

Could there be multiple determinant functions? Could more than one function on square matrices satisfy the axioms? This seems unlikely given that we were able to start with numerical matrices and compute specific numbers --- but maybe a different approach might produce a different answer. This is called a uniqueness question.

We will show that, in fact, there is only one determinant function --- a function satisfying the three axioms --- on square matrices. It can be computed in various ways, but you'll get the same answer in all cases.

Along the way, we'll find another approach which uses permutations to compute determinants. We'll also prove some important properties of determinants, such as the rule for products.

Copyright 2021 by Bruce Ikenaga