I gave the axioms for determinant functions, and it seems from our examples that we could compute determinants using only the axioms, or using row operations. But this doesn't prove that there actually are any functions which satisfy the axioms; maybe our computations were just luck.

We can settle this question by actually constructing a function which satisfies the axioms for a determinant. I'll do this using expansion by cofactors, which also gives us another way of computing determinants. The construction is inductive: We'll get started by giving formulas for the determinants of $1 \times 1$ and $2 \times 2$ matrices. Note that it's not enough to give a formula; we also have to check that the formula gives a function which satisfies the determinant axioms.

Next, the cofactor construction will allow us to go from a determinant function on $n \times n$ matrices to a determinant function on $(n + 1) \times (n + 1)$ matrices (matrices "one size bigger"). By induction, this will give a determinant function on $n \times n$ matrices for all $n \ge 1$.

Let's get started with the easy cases.

For a $1 \times 1$ matrix, the determinant is just the single entry:

$$D\,[a] = a.$$

The axioms are easy to check. If $a, b, k \in R$, then

$$D\,[k a + b] = k a + b = k \cdot D\,[a] + D\,[b].$$

Thus, the linearity axiom holds.

Since the matrix only has one row, it can't have two equal rows, and the second axiom holds vacuously. Finally, the $1 \times 1$ identity matrix has determinant 1.

I could start the induction at this point, but it's useful to give an explicit formula for the determinant of a $2 \times 2$ matrix. You may have seen this in (say) a multivariable calculus course.

Proposition. Define $D: M(2, R) \to R$ by

$$D \left[\matrix{ a & b \cr c & d \cr}\right] = a d - b c.$$

Then D is a determinant function on $M(2, R)$.

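Proof. I will check that the function is linear in each row.

$$D \left[\matrix{ k a + a' & k b + b' \cr c & d \cr}\right] = (k a + a') d - (k b + b') c = k (a d - b c) + (a' d - b' c) = k D \left[\matrix{ a & b \cr c & d \cr}\right] + D \left[\matrix{ a' & b' \cr c & d \cr}\right].$$

$$D \left[\matrix{ a & b \cr k c + c' & k d + d' \cr}\right] = a (k d + d') - b (k c + c') = k (a d - b c) + (a d' - b c') = k D \left[\matrix{ a & b \cr c & d \cr}\right] + D \left[\matrix{ a & b \cr c' & d' \cr}\right].$$

This proves linearity.

Suppose the two rows are equal. Then

$$D \left[\matrix{ a & b \cr a & b \cr}\right] = a b - b a = 0.$$

Therefore, Axiom 2 holds.

Finally,

$$D \left[\matrix{ 1 & 0 \cr 0 & 1 \cr}\right] = 1 \cdot 1 - 0 \cdot 0 = 1.$$

All three axioms have been verified, so D is a determinant function. $\square$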
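As a quick sanity check on the proof, here is a minimal Python sketch that tests the three axioms for the formula $a d - b c$ on a few integer values. The function name `det2` and the sample numbers are my own choices for illustration; a spot check like this is not a substitute for the algebra above.

```python
def det2(m):
    """2x2 determinant a*d - b*c for m = [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    return a * d - b * c

# Sample values (arbitrary integers chosen for illustration).
a, b, c, d = 2, 5, -3, 7
c2, d2, k = 4, -1, 6

# Linearity in the second row: row 2 is k*(c, d) + (c2, d2).
lhs = det2([[a, b], [k * c + c2, k * d + d2]])
rhs = k * det2([[a, b], [c, d]]) + det2([[a, b], [c2, d2]])
assert lhs == rhs

# Two equal rows give 0, and the identity gives 1.
assert det2([[a, b], [a, b]]) == 0
assert det2([[1, 0], [0, 1]]) == 1
```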

To save writing, we often write

$$\left|\matrix{ a & b \cr c & d \cr}\right| \quad\hbox{for}\quad D \left[\matrix{ a & b \cr c & d \cr}\right].$$

This is okay, since we'll eventually find out that there is only one determinant function on $n \times n$ matrices. You can also write "det" for the determinant function.

For example, on ![]() ,

![]()

Let's move on to the inductive step for the main result. Earlier I discussed the connection between "swapping two rows multiplies the determinant by -1" and "when two rows are equal the determinant is 0". The next lemma is another piece of this picture. It says for a function which is linear in the rows, if "when two adjacent rows are equal the determinant is 0", then "swapping two rows multiplies the determinant by -1". (Axiom 2 does not require that the two equal rows be adjacent.) It's a technical result which is used in the proof of the main theorem and nowhere else, and the proof is rather technical as well. You could skip it and refer to the result when it's needed in the main theorem proof.

Lemma. Let $f: M(n, R) \to R$ be a function which is linear in each row and satisfies $f(A) = 0$ whenever two adjacent rows are equal. Then swapping any two rows multiplies the value of f by -1.

Proof. First, I'll show that swapping two adjacent rows multiplies the value of f by -1. I'll show the required manipulations in schematic form. The adjacent rows are those with the x's and y's; as usual, the vertical dots represent the other rows, which are the same in all of the matrices.

![$$f\left[\matrix{& \vdots & \cr \leftarrow & x & \rightarrow \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr}\right] = f\left[\matrix{& \vdots & \cr \leftarrow & y + (x - y) & \rightarrow \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr}\right] = f\left[\matrix{& \vdots & \cr \leftarrow & y & \rightarrow \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr}\right] + f\left[\matrix{& \vdots & \cr \leftarrow & x - y & \rightarrow \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr}\right] =$$](expansion-by-cofactors26.png)

![$$f\left[\matrix{& \vdots & \cr \leftarrow & x - y & \rightarrow \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr}\right] = f\left[\matrix{& \vdots & \cr \leftarrow & x - y & \rightarrow \cr \leftarrow & x + (y - x) & \rightarrow \cr & \vdots & \cr}\right] = f\left[\matrix{& \vdots & \cr \leftarrow & x - y & \rightarrow \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr}\right] + f\left[\matrix{& \vdots & \cr \leftarrow & x - y & \rightarrow \cr \leftarrow & y - x & \rightarrow \cr & \vdots & \cr}\right] =$$](expansion-by-cofactors27.png)

![$$f\left[\matrix{& \vdots & \cr \leftarrow & x & \rightarrow \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr}\right] - f\left[\matrix{& \vdots & \cr \leftarrow & y & \rightarrow \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr}\right] - f\left[\matrix{& \vdots & \cr \leftarrow & x - y & \rightarrow \cr \leftarrow & x - y & \rightarrow \cr & \vdots & \cr}\right] = -f\left[\matrix{& \vdots & \cr \leftarrow & y & \rightarrow \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr}\right].$$](expansion-by-cofactors28.png)

Next, I'll show that you can swap any two rows by swapping adjacent rows an odd number of times. Then since each swap multiplies f by -1, and since $(-1)^{\hbox{odd number}} = -1$, it follows that swapping two non-adjacent rows multiplies the value of f by -1.

To illustrate the idea, suppose the rows to be swapped are rows 1 and n. I'll indicate how to do the swaps by just displaying the row numbers. First, I swap row 1 with the adjacent row below it $n - 1$ times to move it from the top of the matrix to the n-th (bottom) position:

![$$\left[\matrix{ \longleftarrow r_1 \longrightarrow \cr \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \vdots \longleftarrow r_{n - 2} \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \vdots \longleftarrow r_{n - 2} \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr \vdots \longleftarrow r_{n - 2} \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr}\right] \to \cdots \to$$](expansion-by-cofactors31.png)

![$$\left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_4 \longrightarrow \cr \vdots \longleftarrow r_1 \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_4 \longrightarrow \cr \vdots \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_4 \longrightarrow \cr \vdots \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr}\right]$$](expansion-by-cofactors32.png)

Next, I swap (the old) row n with the adjacent row above it $n - 2$ times to move it to the top of the matrix:

![$$\left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_4 \longrightarrow \cr \vdots \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_4 \longrightarrow \cr \vdots \longleftarrow r_n \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_4 \longrightarrow \cr \vdots \longleftarrow r_{n - 2} \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr}\right] \to \cdots \to$$](expansion-by-cofactors34.png)

![$$\left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr \vdots \longleftarrow r_{n - 2} \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_n \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \vdots \longleftarrow r_{n - 2} \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr}\right] \to \left[\matrix{ \longleftarrow r_n \longrightarrow \cr \longleftarrow r_2 \longrightarrow \cr \longleftarrow r_3 \longrightarrow \cr \vdots \longleftarrow r_{n - 2} \longrightarrow \cr \longleftarrow r_{n - 1} \longrightarrow \cr \longleftarrow r_1 \longrightarrow \cr}\right]$$](expansion-by-cofactors35.png)

The original rows 1 and n have swapped places, and I needed $(n - 1) + (n - 2) = 2 n - 3$ swaps of adjacent rows to do this. Now $2 n - 3$ is an odd number, and $(-1)^{2 n - 3} = -1$.

In general, if you want to swap row i and row j, where $i < j$, following the procedure above will require $(j - i) + (j - i - 1) = 2 (j - i) - 1$ swaps of adjacent rows, an odd number. Once again, $(-1)^{2 (j - i) - 1} = -1$.

Thus, swapping two non-adjacent rows multiplies the value of f by -1. $\square$
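The counting argument in the proof can be checked mechanically. The following Python sketch (the function name `swap_by_adjacent` and the 0-based indexing are my own conventions, not part of the notes) swaps two rows using only adjacent swaps, in the order described above, and counts how many swaps were used.

```python
def swap_by_adjacent(rows, i, j):
    """Swap rows i < j (0-based) using only adjacent swaps.

    Returns the new list of rows and the number of adjacent swaps used.
    """
    rows = list(rows)
    count = 0
    # Move the row at position i down to position j.
    for p in range(i, j):
        rows[p], rows[p + 1] = rows[p + 1], rows[p]
        count += 1
    # The old row j now sits at position j - 1; move it up to position i.
    for p in range(j - 1, i, -1):
        rows[p], rows[p - 1] = rows[p - 1], rows[p]
        count += 1
    return rows, count

rows = ["r1", "r2", "r3", "r4", "r5"]
swapped, count = swap_by_adjacent(rows, 0, 4)
print(swapped, count)  # ['r5', 'r2', 'r3', 'r4', 'r1'] 7
```

With 5 rows, swapping the first and last rows takes 7 adjacent swaps, which is odd, as the proof predicts.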

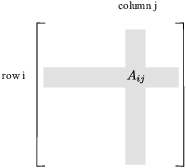

Definition. Let $A \in M(n, R)$. Let $A(i \mid j)$ be the $(n - 1) \times (n - 1)$ matrix obtained by deleting the i-th row and j-th column of A. If D is a determinant function, then $D(A(i \mid j))$ is called the $(i, j)^{\rm th}$ minor of A.

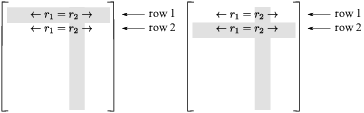

In the picture below, the i-th row and j-th column are shown in gray; they will be deleted, and the remaining $(n - 1) \times (n - 1)$ matrix is $A(i \mid j)$. Its determinant is the $(i, j)^{\rm th}$ minor.

The $(i, j)^{\rm th}$ cofactor of A is $(-1)^{i + j}$ times the $(i, j)^{\rm th}$ minor, i.e. $(-1)^{i + j} \cdot D(A(i \mid j))$.

Example. Consider the real matrix

![$$A = \left[\matrix{1 & 2 & 3 \cr 4 & 5 & 6 \cr 7 & 8 & 9 \cr}\right].$$](expansion-by-cofactors55.png)

Find the $(2, 3)^{\rm th}$ minor and the $(2, 3)^{\rm th}$ cofactor.

To find the $(2, 3)^{\rm th}$ minor, remove the $2^{\rm nd}$ row and the $3^{\rm rd}$ column (i.e. the row and column containing the $(2, 3)^{\rm th}$ element):

![$$\left[\matrix{1 & 2 & * \cr * & * & * \cr 7 & 8 & * \cr}\right].$$](expansion-by-cofactors62.png)

The $(2, 3)^{\rm th}$ minor is the determinant of what's left:

$$\left|\matrix{ 1 & 2 \cr 7 & 8 \cr}\right| = 1 \cdot 8 - 2 \cdot 7 = -6.$$

To get the $(2, 3)^{\rm th}$ cofactor, multiply this by $(-1)^{2 + 3} = -1$. The $(2, 3)^{\rm th}$ cofactor is $(-1) \cdot (-6) = 6$. $\square$
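Here is the same computation as a short Python sketch. The helper names `delete_row_col` and `det2` are hypothetical names I'm introducing for illustration; indices are 1-based to match the text.

```python
def delete_row_col(A, i, j):
    """Return A with row i and column j removed (1-based indices)."""
    return [row[:j - 1] + row[j:]
            for r, row in enumerate(A, start=1) if r != i]

def det2(m):
    """2x2 determinant a*d - b*c."""
    (a, b), (c, d) = m
    return a * d - b * c

A = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]

sub = delete_row_col(A, 2, 3)          # [[1, 2], [7, 8]]
minor = det2(sub)                      # 1*8 - 2*7 = -6
cofactor = (-1) ** (2 + 3) * minor     # (-1) * (-6) = 6
print(sub, minor, cofactor)
```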

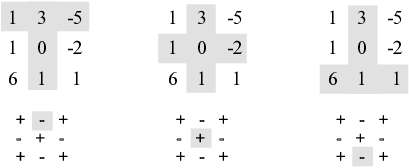

Note: The easy way to remember whether to multiply by $+1$ or -1 is to make a checkerboard pattern of +'s and -'s:

![$$\left[\matrix{+ & - & + \cr - & + & - \cr + & - & + \cr}\right].$$](expansion-by-cofactors70.png)

Use the sign in the $(i, j)^{\rm th}$ position. For example, there's a minus sign in the $(2, 3)^{\rm th}$ position, which agrees with the sign I computed using $(-1)^{i + j}$.

The main result says that we may use cofactors to extend a determinant function on $(n - 1) \times (n - 1)$ matrices to a determinant function on $n \times n$ matrices.

Theorem. (Expansion by cofactors) Let R be a commutative ring with identity, and let C be a determinant function on $M(n - 1, R)$. Let $A \in M(n, R)$. For any $j \in \{1, \ldots, n\}$, define

$$D(A) = \sum_{i = 1}^n (-1)^{i + j} A_{i j} \, C(A(i \mid j)).$$

Then D is a determinant function on $M(n, R)$.

Notice that the summation is on i, which is the row index. The index j is fixed, and it indexes columns. This means you're moving down the $j^{\rm th}$ column as you sum. Consequently, this is a cofactor expansion by columns.
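To make the inductive definition concrete, here is a minimal recursive Python sketch of expansion down a fixed column. The names `minor_matrix` and `det`, the 0-based indices, and the sample matrix are my own assumptions for illustration; the $1 \times 1$ case serves as the base of the recursion, and the smaller determinant (playing the role of C) is always expanded down its first column.

```python
def minor_matrix(A, i, j):
    """Delete row i and column j of A (0-based indices)."""
    return [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]

def det(A, j=0):
    """Cofactor expansion down column j (0-based); 1x1 is the base case."""
    n = len(A)
    if n == 1:
        return A[0][0]
    # (-1)**(i + j) has the same value for 0-based and 1-based indices,
    # since shifting both indices by 1 changes the exponent by 2.
    return sum((-1) ** (i + j) * A[i][j] * det(minor_matrix(A, i, j))
               for i in range(n))

A = [[2, 0, 1],
     [1, 3, -1],
     [0, 5, 4]]

print([det(A, j) for j in range(3)])  # [39, 39, 39]
```

For this sample matrix, every choice of column gives the same value. That is what you'd expect if there is only one determinant function, though the sketch only checks one example.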

Proof. C is the "known" determinant function on $(n - 1) \times (n - 1)$ matrices ($M(n - 1, R)$), whereas D is the determinant function on $n \times n$ matrices ($M(n, R)$) that we're trying to construct. We're using C to build D, and the problem here is to verify that D satisfies the determinant axioms. Thus, I need to show that D is linear in each row, D is alternating, and $D(I) = 1$.

This proof is moderately difficult; I've tried to write out the details and illustrate with pictures, but don't get discouraged if you find it challenging to follow.

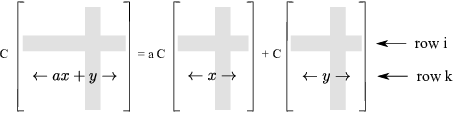

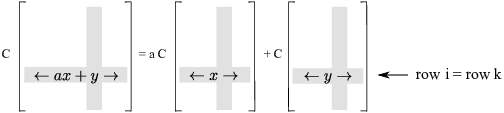

Linearity: I'll prove linearity in row k. Let $a \in R$, $x, y \in R^n$. I want to prove that

![$$D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & a x + y & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = a \cdot D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] + D \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right].$$](expansion-by-cofactors89.png)

All the action is taking place in the k-th row --- the one with the x's and y's --- and the other rows are the same in the three matrices.

Label the three matrices above:

![$$P = \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & a x + y & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right], \quad Q = \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & x & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right], \quad R = \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr & \vdots & \cr \leftarrow & y & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right].$$](expansion-by-cofactors90.png)

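The equation to be proved is

$$D(P) = a \cdot D(Q) + D(R).$$

If I expand the D terms by cofactors in this equation, I get

$$\sum_{i = 1}^n (-1)^{i + j} P_{i j} \, C(P(i \mid j)) = a \cdot \sum_{i = 1}^n (-1)^{i + j} Q_{i j} \, C(Q(i \mid j)) + \sum_{i = 1}^n (-1)^{i + j} R_{i j} \, C(R(i \mid j)).$$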

I'm going to show that the $i^{\rm th}$ term in the sum on the left equals the sum of the $i^{\rm th}$ terms of the sums on the right. To do this, I will consider two cases: $i \ne k$ and $i = k$.

First, consider a term in the cofactor sum where $i \ne k$; that is, where the row that is deleted is not the $k^{\rm th}$ row. The $i^{\rm th}$ row and $j^{\rm th}$ column are deleted from each matrix, as shown in gray in the picture below. Since C is a determinant function, I can apply linearity to the $k^{\rm th}$ row, and I get the following equation:

$$C(P(i \mid j)) = a \cdot C(Q(i \mid j)) + C(R(i \mid j)).$$

The matrices in this picture are, from left to right, $P(i \mid j)$, $Q(i \mid j)$, and $R(i \mid j)$. The matrices are the same except in the $k^{\rm th}$ row (the one with the x's and y's).

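Since P, Q, and R agree outside row k, I also have $P_{i j} = Q_{i j} = R_{i j}$ for $i \ne k$. Thus, the terms of the summation on the two sides agree for $i \ne k$:

$$(-1)^{i + j} P_{i j} \, C(P(i \mid j)) = a \cdot (-1)^{i + j} Q_{i j} \, C(Q(i \mid j)) + (-1)^{i + j} R_{i j} \, C(R(i \mid j)).$$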

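Next, consider the case where $i = k$, so the row that is deleted is the $k^{\rm th}$ row. Now P, Q, and R only differ in row k, which is the row which is deleted.

Once it's deleted, the resulting matrices are the same:

$$P(k \mid j) = Q(k \mid j) = R(k \mid j).$$

Therefore, writing $x_j$ and $y_j$ for the $j^{\rm th}$ entries of x and y (so $P_{k j} = a x_j + y_j$, $Q_{k j} = x_j$, and $R_{k j} = y_j$),

$$(-1)^{k + j} P_{k j} \, C(P(k \mid j)) = (-1)^{k + j} (a x_j + y_j) \, C(P(k \mid j)) = a \cdot (-1)^{k + j} Q_{k j} \, C(Q(k \mid j)) + (-1)^{k + j} R_{k j} \, C(R(k \mid j)).$$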

Thus, the terms on the left and right are the same for all i, and D is linear.

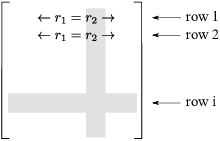

Alternating: I have to show that if two rows of A are the same, then $D(A) = 0$.

First, I'll show that if rows 1 and 2 are equal, then $D(A) = 0$.

Suppose then that rows 1 and 2 are equal. Here's a typical term in the cofactor expansion:

$$(-1)^{i + j} A_{i j} \, C(A(i \mid j)).$$

Suppose row i, the row that is deleted, is a row other than row 1 or row 2. Then the matrix that results after deletion will have two equal rows, since row 1 and row 2 were equal. Therefore, $C(A(i \mid j)) = 0$, and the term in the cofactor expansion is 0.

Thus, all the terms in the cofactor expansion are 0 except the first and second ($i = 1$ and $i = 2$). These terms are

$$(-1)^{1 + j} A_{1 j} \, C(A(1 \mid j)) \quad\hbox{and}\quad (-1)^{2 + j} A_{2 j} \, C(A(2 \mid j)).$$

Now $A_{1 j} = A_{2 j}$, since the first and second rows are equal. And since row 1 and row 2 are equal, I get the same matrix by deleting either row 1 or row 2:

That is, $A(1 \mid j) = A(2 \mid j)$. Therefore, $C(A(1 \mid j)) = C(A(2 \mid j))$.

Thus, the only way in which the two terms above differ is in the signs $(-1)^{1 + j}$ and $(-1)^{2 + j}$. But $1 + j$ and $2 + j$ are consecutive integers, so one must be even and the other must be odd. Hence, $(-1)^{1 + j}$ and $(-1)^{2 + j}$ are either $+1$ and -1 or -1 and $+1$. In either case, the terms cancel, and the sum of the two terms is 0.

Hence, the cofactor expansion is equal to 0, and $D(A) = 0$, as I wished to prove.

You can give a similar argument if A has two adjacent rows equal other than rows 1 and 2 (so, for instance, if rows 4 and 5 are equal). I will skip the details.

Thus, I know that $D(A) = 0$ if two adjacent rows of A are equal. Since I proved that D satisfies the linearity axiom, the hypotheses of the previous technical lemma are satisfied, and I can apply it. It says that swapping two rows multiplies the determinant by -1.

Now take the general case: Two rows of A are equal, but they aren't necessarily adjacent.

I can swap the rows of A until the two equal rows are adjacent, and each swap multiplies the value of the determinant by -1. Let's say that k swaps are needed to get the two equal rows to be adjacent. That is, after k row swaps, I get a matrix B which has adjacent equal rows. Then $D(B) = 0$ by the adjacent row case above, so

$$D(A) = (-1)^k D(B) = (-1)^k \cdot 0 = 0.$$

This completes the proof that $D(A) = 0$ if A has two equal rows, and the Alternating Axiom has been verified.

The identity has determinant 1: Suppose $A = I$. Since the entries of the identity matrix are 0 except on the main diagonal, I have $A_{i j} = 0$ unless $i = j$. When $i = j$, I have $A_{j j} = 1$. Therefore, the cofactor expansion of $D(I)$ has only one nonzero term, which is

$$D(I) = (-1)^{j + j} A_{j j} \, C(A(j \mid j)) = 1 \cdot 1 \cdot C(I) = 1.$$

(Deleting row j and column j from the identity leaves the $(n - 1) \times (n - 1)$ identity, and I know $C(I) = 1$ because C is a determinant function.)

I've verified that D satisfies the 3 axioms. Thus, D is a determinant function. $\square$
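If you'd like to see the three axioms hold on actual numbers, here is a small Python spot check for $3 \times 3$ integer matrices. The function `D` below is my own recursive sketch of the cofactor definition (with 0-based indices), and the sample rows are arbitrary; passing these checks illustrates the theorem on one example but does not prove it.

```python
def D(A, j=0):
    """Cofactor expansion down column j (0-based), built recursively."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** (i + j) * A[i][j]
               * D([r[:j] + r[j + 1:] for t, r in enumerate(A) if t != i])
               for i in range(len(A)))

r1, r3 = [2, -1, 4], [0, 3, 5]        # fixed rows (arbitrary sample values)
x, y, a = [1, 2, 3], [-2, 0, 7], 6    # the middle row will be a*x + y
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

for j in range(3):
    mixed = [a * xi + yi for xi, yi in zip(x, y)]
    # Linearity in the middle row.
    assert D([r1, mixed, r3], j) == a * D([r1, x, r3], j) + D([r1, y, r3], j)
    # Alternating: equal rows (adjacent or not) give 0.
    assert D([r1, r1, r3], j) == 0
    assert D([r1, x, r1], j) == 0
    # The identity has determinant 1.
    assert D(identity, j) == 1
```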

The theorem says that if I have a determinant function on matrices of a given size, I can use it to construct a determinant function on matrices "one size bigger". So a determinant function on $2 \times 2$ matrices can be used to construct a determinant function on $3 \times 3$ matrices, and so on.

Corollary. Let R be a commutative ring with identity. There is a determinant function on $n \times n$ matrices over R for $n \ge 1$.

Proof. I constructed a determinant function on $1 \times 1$ and on $2 \times 2$ matrices. So applying the theorem, from the $2 \times 2$ determinant function I get a $3 \times 3$ determinant function, from the $3 \times 3$ determinant function I get a $4 \times 4$ determinant function, and so on. By induction, I get a determinant function on $n \times n$ matrices for all $n \ge 1$. $\square$

I now know that there is at least one determinant function on $n \times n$ matrices. In fact, there is only one, but that will require a separate discussion. Anticipating that result, I'll refer to the determinant function (rather than a determinant function).

Since we'll prove that there's only one determinant function, if A is an $n \times n$ matrix, the determinant of A will be denoted $\det A$ or $|A|$.

I'll show later on that $\det A = \det A^T$. Since transposing sends rows to columns and columns to rows, this means that you can expand by cofactors of rows as well as columns. We'll use this result now in computations.

While you can expand along any row or column you want, it's usually good to pick one with lots of 0's.

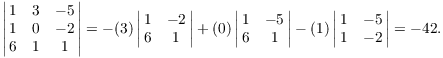

Example. (Computing a determinant by cofactors) Compute the determinant of the following real matrix:

![$$\left[\matrix{1 & 3 & -5 \cr 1 & 0 & -2 \cr 6 & 1 & 1 \cr}\right]$$](expansion-by-cofactors165.png)

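Expanding by cofactors of the second column, I get

$$\left|\matrix{1 & 3 & -5 \cr 1 & 0 & -2 \cr 6 & 1 & 1 \cr}\right| = -(3) \left|\matrix{1 & -2 \cr 6 & 1 \cr}\right| + (0) \left|\matrix{1 & -5 \cr 6 & 1 \cr}\right| - (1) \left|\matrix{1 & -5 \cr 1 & -2 \cr}\right| = (-3)(13) + 0 - 3 = -42.$$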

This diagram shows where the terms in the cofactor expansion come from:

For each element (3, 0, 1) in the second column, compute the cofactor for that element by crossing out the row and column containing the element (cross-outs shown in gray), computing the determinant of the $2 \times 2$ matrix that is left, and multiplying by the sign (+ or -) that comes from the "checkerboard pattern". Then multiply the cofactor by the column element.

So for the first term, the element is 3, the sign is "-", and after crossing out the first row and second column, the $2 \times 2$ determinant that is left is

$$\left|\matrix{1 & -2 \cr 6 & 1 \cr}\right|.$$

As usual, this is harder to describe in words than it is to actually do. Try a few computations yourself.

Finally, I computed the $2 \times 2$ determinants using the $2 \times 2$ determinant formula I derived earlier. $\square$
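The recipe in this example (cross out, take the $2 \times 2$ determinant, apply the checkerboard sign, multiply by the column element) can be written out as a short Python sketch. The helper names `det2` and `submatrix` and the 0-based indices are my own conventions for illustration.

```python
A = [[1, 3, -5],
     [1, 0, -2],
     [6, 1, 1]]

def det2(m):
    """2x2 determinant a*d - b*c."""
    (a, b), (c, d) = m
    return a * d - b * c

def submatrix(A, i, j):
    """Cross out row i and column j (0-based indices)."""
    return [r[:j] + r[j + 1:] for t, r in enumerate(A) if t != i]

j = 1  # the second column, in 0-based indexing
# One term per element of the column: sign * element * 2x2 determinant.
terms = [(-1) ** (i + j) * A[i][j] * det2(submatrix(A, i, j))
         for i in range(3)]
total = sum(terms)
print(terms, total)  # [-39, 0, -3] -42
```

The middle term vanishes because the column element is 0, which is why columns with lots of 0's are convenient.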

You can often simplify a cofactor expansion by doing row operations first. For instance, if you can produce a row or a column with lots of zeros, you can expand by cofactors of that row or column.
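As an illustration of this idea, the Python sketch below reuses the real matrix from the previous example: it clears the first column below the top entry by adding multiples of row 1, and then only one cofactor term survives. The helper `add_multiple` is a hypothetical name, and the sketch relies on the fact that adding a multiple of one row to another does not change the determinant.

```python
def add_multiple(A, src, dst, k):
    """Return a copy of A with k * (row src) added to row dst (0-based)."""
    B = [row[:] for row in A]
    B[dst] = [d + k * s for d, s in zip(B[dst], B[src])]
    return B

A = [[1, 3, -5],
     [1, 0, -2],
     [6, 1, 1]]

B = add_multiple(A, 0, 1, -1)   # row 2 minus row 1
B = add_multiple(B, 0, 2, -6)   # row 3 minus 6 times row 1
print(B)  # [[1, 3, -5], [0, -3, 3], [0, -17, 31]]

# Expanding down the first column, only the top-left term is nonzero.
value = B[0][0] * (B[1][1] * B[2][2] - B[1][2] * B[2][1])
print(value)  # -42
```

The result agrees with the value obtained by expanding the original matrix along its second column.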

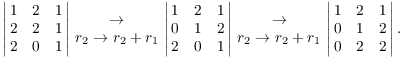

Example. (Computing a determinant using row operations and cofactors) Compute the determinant of the following matrix in ![]() :

![$$\left[\matrix{1 & 2 & 1 \cr 2 & 2 & 1 \cr 2 & 0 & 1 \cr}\right]$$](expansion-by-cofactors174.png)

I'll do a couple of row operations first to make some zeros in the first column. Remember that adding a multiple of a row to another row does not change the determinant.

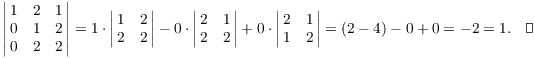

Now I expand by cofactors of column 1. The two zeros make the computation easy; I'll write out those terms just so you can see the cofactors:

Copyright 2021 by Bruce Ikenaga