Definition. Let V and W be vector spaces over a field F. A linear transformation $f: V \to W$ is a function which satisfies

$$f(ku + v) = kf(u) + f(v) \quad\hbox{for all}\quad u, v \in V \quad\hbox{and}\quad k \in F.$$

Note that u and v are vectors, whereas k is a scalar (number).

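You can break the definition down into two pieces:

$$f(u + v) = f(u) + f(v) \quad\hbox{for all}\quad u, v \in V,$$

$$f(ku) = kf(u) \quad\hbox{for all}\quad u \in V \quad\hbox{and}\quad k \in F.$$

The first follows from the definition by taking $k = 1$; the second follows by taking $v = 0$ and using $f(0) = 0$, which is proved in a lemma below. Conversely, if these two equations are satisfied then f is a linear transformation, since then $f(ku + v) = f(ku) + f(v) = kf(u) + f(v)$.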

The notation $f: \mathbb{R}^m \to \mathbb{R}^n$ means that f is a function which takes a vector in $\mathbb{R}^m$ as input and produces a vector in $\mathbb{R}^n$ as output.

Example. The function

![]()

is a function $f: \mathbb{R}^2 \to \mathbb{R}^3$.

Sometimes, the outputs are given variable names, e.g.

![]()

This is the same as saying

![]()

You plug numbers into f in the obvious way:

![]()

Since you can think of f as taking a 2-dimensional vector as its input and producing a 3-dimensional vector as its output, you could write

![]()

But I'll suppress some of the angle brackets when there's no danger of confusion.

Example. Define ![]() by

![]()

I'll show that f is a linear transformation the hard way. First, I need two 2-dimensional vectors:

![]()

I also need a real number k.

Notice that I don't pick specific numerical vectors like ![]() or a specific number for k, like ![](). I have to do the proof with general vectors and numbers.

I must show that

$$f(ku + v) = kf(u) + f(v).$$

I'll compute the left side and the right side, then show that they're equal. Here's the left side:

![]()

![]()

![]()

And here's the right side:

![]()

![]()

Therefore, $f(ku + v) = kf(u) + f(v)$, so f is a linear transformation.

This was a pretty disgusting computation, and it would be a shame to have to go through this every time. I'll come up with a better way of recognizing linear transformations shortly.

Example. The function

![]()

is not a linear transformation from ![]() to ![]().

To prove that a function is not a linear transformation --- unlike proving that it is --- you must come up with specific, numerical vectors u and v and a number k for which the defining equation is false. There is often no systematic way to come up with such a counterexample; you simply try some numbers till you get what you want.

I'll take ![](), ![](), and ![](). This time, I want to show $f(ku + v) \ne kf(u) + f(v)$; again, I compute the two sides.

![]()

![]()

![]()

Since $f(ku + v) \ne kf(u) + f(v)$, f is not a linear transformation.
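The trial-and-error search for a counterexample can be automated. Below is a minimal Python sketch, assuming maps are modeled as Python functions on tuples of integers. The helper names `defining_equation_holds` and `find_counterexample`, and the sample nonlinear map `square_first`, are hypothetical illustrations, not the function from the example above; `matrix_map` is the map $(x, y) \mapsto (x + 2y, x - y, -2x + 3y)$ that appears later in these notes. Keep in mind that a random search can only disprove linearity: if it finds nothing, that proves nothing.

```python
import random


def defining_equation_holds(f, u, v, k):
    """Check f(k*u + v) == k*f(u) + f(v) for one specific choice of u, v, k."""
    left = f(tuple(k * ui + vi for ui, vi in zip(u, v)))
    right = tuple(k * a + b for a, b in zip(f(u), f(v)))
    return left == right


def find_counterexample(f, dim, trials=1000, seed=0):
    """Try random integer vectors u, v and scalars k; return a failing (u, v, k) or None."""
    rng = random.Random(seed)
    for _ in range(trials):
        u = tuple(rng.randint(-5, 5) for _ in range(dim))
        v = tuple(rng.randint(-5, 5) for _ in range(dim))
        k = rng.randint(-5, 5)
        if not defining_equation_holds(f, u, v, k):
            return u, v, k
    return None  # nothing found; this does NOT prove that f is linear


def square_first(p):
    # Hypothetical nonlinear example: (x, y) -> (x^2, y)
    x, y = p
    return (x * x, y)


def matrix_map(p):
    # The linear map (x, y) -> (x + 2y, x - y, -2x + 3y)
    x, y = p
    return (x + 2 * y, x - y, -2 * x + 3 * y)


print(find_counterexample(square_first, 2))  # a specific (u, v, k) that fails
print(find_counterexample(matrix_map, 2))    # None
```

Exact integer arithmetic is used so that the equality test is not spoiled by floating-point rounding.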

Example. Let $f: \mathbb{R}^n \to \mathbb{R}^m$ be a function. f is differentiable at a point $a \in \mathbb{R}^n$ if there is a linear transformation $Df(a): \mathbb{R}^n \to \mathbb{R}^m$ such that

$$\lim_{v \to 0} \frac{\|f(a + v) - f(a) - Df(a)(v)\|}{\|v\|} = 0.$$

In this definition, if $v = (v_1, v_2, \ldots, v_n) \in \mathbb{R}^n$, then $\|v\|$ is the length of v:

$$\|v\| = \sqrt{v_1^2 + v_2^2 + \cdots + v_n^2}.$$

Since f produces outputs in $\mathbb{R}^m$, you can think of f as being built out of m component functions. Suppose that $f = (f_1, f_2, \ldots, f_m)$.

It turns out that the matrix of $Df(a)$ (relative to the standard bases on $\mathbb{R}^n$ and $\mathbb{R}^m$) is the $m \times n$ matrix whose $(i, j)^{\rm th}$ entry is

$$\frac{\partial f_i}{\partial x_j}(a).$$

This matrix is called the Jacobian matrix of f at a.

For example, suppose ![]() is given by

![]()

Then

![]()
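To connect the Jacobian with computation, here is a rough Python sketch that approximates the Jacobian matrix with central differences, $\frac{\partial f_i}{\partial x_j}(a) \approx \bigl(f_i(a + h e_j) - f_i(a - h e_j)\bigr)/(2h)$. The function name `jacobian`, the step size `h = 1e-6`, and the sample map $g(x, y) = (xy, x + y, x^2)$ are my own assumptions for illustration; a finite-difference estimate is only approximate and is not how the Jacobian is defined.

```python
def jacobian(f, a, h=1e-6):
    """Approximate the m x n Jacobian of f: R^n -> R^m at the point a.

    Entry (i, j) approximates the partial derivative of f_i with respect to x_j,
    using the central difference (f(a + h e_j) - f(a - h e_j)) / (2h).
    """
    n = len(a)
    m = len(f(a))
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        plus = list(a)
        minus = list(a)
        plus[j] += h
        minus[j] -= h
        f_plus, f_minus = f(plus), f(minus)
        for i in range(m):
            J[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return J


def g(p):
    # Hypothetical example: g(x, y) = (x*y, x + y, x**2), a map R^2 -> R^3
    x, y = p
    return (x * y, x + y, x ** 2)


print(jacobian(g, (1.0, 2.0)))  # approximately [[2, 1], [1, 1], [2, 0]]
```

The result has one row per component function and one column per input variable, matching the $m \times n$ shape described above.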

The next theorem gives an easy way of constructing (or recognizing) linear transformations.

Theorem. Let F be a field, and let A be an $n \times m$ matrix over F. The function $f: F^m \to F^n$ given by

$$f(u) = A \cdot u$$

is a linear transformation.

Proof. This is pretty easy given the rules for matrix arithmetic. Let u and v be input vectors for f, and let $k \in F$. Then

$$f(ku + v) = A \cdot (ku + v) = k(A \cdot u) + A \cdot v = kf(u) + f(v).$$

Therefore, f is a linear transformation.

This result says that any function which is defined by matrix multiplication is a linear transformation. Later on, I'll show that for finite-dimensional vector spaces, any linear transformation can be thought of as multiplication by a matrix.

Example. Define $f: \mathbb{R}^2 \to \mathbb{R}^3$ by

$$f(x, y) = (x + 2y, x - y, -2x + 3y).$$

I'll show that f is a linear transformation the easy way. Observe that

![$$f((x, y)) = \left[\matrix{1 & 2 \cr 1 & -1 \cr -2 & 3 \cr}\right] \left[\matrix{x \cr y \cr}\right].$$](lintrans63.png)

f is given by multiplication by a matrix of numbers, exactly as in the theorem. ($(x, y)$ is taking the place of u.) So the theorem implies that f is a linear transformation.
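Here is a small Python sketch of the observation above: a hypothetical helper `mat_vec` multiplies a matrix (stored as a list of rows) by a vector, and applying it to the matrix above reproduces the formula for f on a grid of integer inputs.

```python
def mat_vec(A, u):
    """Multiply a matrix A (a list of rows) by a vector u (a sequence of numbers)."""
    if any(len(row) != len(u) for row in A):
        raise ValueError("A must have as many columns as u has entries")
    return tuple(sum(a * x for a, x in zip(row, u)) for row in A)


# The 3 x 2 matrix from the example
A = [[1, 2],
     [1, -1],
     [-2, 3]]


def f(x, y):
    # The formula from the example
    return (x + 2 * y, x - y, -2 * x + 3 * y)


print(mat_vec(A, (3, 4)))  # (11, -1, 6)
print(f(3, 4))             # (11, -1, 6)
```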

Lemma. Let F be a field, and let V and W be vector spaces over F. Suppose that $f: V \to W$ is a linear transformation. Then:

(a) $f(0) = 0$.

(b) $f(-v) = -f(v)$ for all $v \in V$.

Proof. (a) Put $u = 0$, $v = 0$, and $k = 1$ in the defining equation for a linear transformation. Then

$$f(1 \cdot 0 + 0) = 1 \cdot f(0) + f(0), \quad\hbox{so}\quad f(0) = f(0) + f(0).$$

Subtracting $f(0)$ from both sides, I get $f(0) = 0$.

(b) I know that $-v = (-1) \cdot v$, so

$$f(-v) = f((-1) \cdot v) = (-1) \cdot f(v) = -f(v).$$

The lemma gives a quick way of showing a function is not a linear transformation.

Example. Define ![]() by

![]()

Then

![]()

Since g does not take the zero vector to the zero vector, it is not a linear transformation.

Be careful! If $f(0) = 0$, you can't conclude that f is a linear transformation. For example, I showed that the function ![]() is not a linear transformation from ![]() to ![](). But ![](), so it does take the zero vector to the zero vector.
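The zero-vector test makes a quick first filter. The Python sketch below assumes maps are Python functions on tuples; `zero_test_fails`, `g_shift`, and `h_square` are hypothetical names of mine. A map that moves the origin is certainly not linear (by the lemma, a linear map sends 0 to 0), but a map that fixes the origin may still fail the defining equation, as `h_square` shows.

```python
def zero_test_fails(f, dim):
    """Return True if f does not send the zero vector to the zero vector.

    True means f is certainly not linear. False proves nothing either way.
    """
    out = f(tuple([0] * dim))
    return any(c != 0 for c in out)


def g_shift(p):
    # Hypothetical example: (x, y) -> (x + 1, y), which moves the origin
    x, y = p
    return (x + 1, y)


def h_square(p):
    # Hypothetical example: (x, y) -> (x^2, y^2), which fixes the origin but is not linear
    x, y = p
    return (x * x, y * y)


print(zero_test_fails(g_shift, 2))   # True: g_shift is not linear
print(zero_test_fails(h_square, 2))  # False, yet h_square is not linear:
# h_square((1, 0) + (1, 0)) = (4, 0), but h_square((1, 0)) + h_square((1, 0)) = (2, 0)
print(h_square((2, 0)), h_square((1, 0)))  # (4, 0) (1, 0)
```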

Next, I want to prove the result I mentioned earlier: Every linear transformation on a finite-dimensional vector space can be represented by matrix multiplication. I'll begin by reviewing some notation.

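Definition. The standard basis vectors for $\mathbb{R}^m$ are

$$e_1 = (1, 0, 0, \ldots, 0), \quad e_2 = (0, 1, 0, \ldots, 0), \quad \ldots, \quad e_m = (0, 0, \ldots, 0, 1).$$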

Thus, $e_i$ is an m-dimensional vector with a 1 in the $i^{\rm th}$ position and 0's elsewhere. For instance, in ![](),

![]()

I showed earlier that $\{e_1, e_2, \ldots, e_m\}$ is a basis for $\mathbb{R}^m$. This implies the following result.

Lemma. Every vector $u \in \mathbb{R}^m$ can be written uniquely as

$$u = u_1 e_1 + u_2 e_2 + \cdots + u_m e_m,$$

where $u_1, u_2, \ldots, u_m \in \mathbb{R}$.
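A quick Python sketch of the standard basis and the expansion in the lemma, assuming vectors are stored as tuples; `standard_basis` and `combine` are hypothetical helper names. The coefficients needed to rebuild u from $e_1, \ldots, e_m$ are just the components of u.

```python
def standard_basis(m):
    """Return e_1, ..., e_m in R^m as tuples: e_i has a 1 in position i and 0's elsewhere."""
    return [tuple(1 if j == i else 0 for j in range(m)) for i in range(m)]


def combine(coeffs, vectors):
    """Compute the linear combination coeffs[0]*vectors[0] + ... + coeffs[-1]*vectors[-1]."""
    dim = len(vectors[0])
    total = [0] * dim
    for c, vec in zip(coeffs, vectors):
        for j in range(dim):
            total[j] += c * vec[j]
    return tuple(total)


u = (4, -2, 7)
e = standard_basis(3)
print(e)              # [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
print(combine(u, e))  # (4, -2, 7): using u's own components as coefficients rebuilds u
```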

Example. In ![](),

![]()

Now here is the converse to the Theorem I proved earlier.

Theorem. If $f: \mathbb{R}^m \to \mathbb{R}^n$ is a linear transformation, there is an $n \times m$ matrix A such that

$$f(u) = A \cdot u \quad\hbox{for all}\quad u \in \mathbb{R}^m.$$

Proof. Regard the standard basis vectors $e_1, e_2, \ldots, e_m$ as m-dimensional column vectors. Then $f(e_1), f(e_2), \ldots, f(e_m)$ are n-dimensional column vectors, because f produces outputs in $\mathbb{R}^n$. Take these m n-dimensional column vectors $f(e_1), f(e_2), \ldots, f(e_m)$ and build a matrix:

![$$A = \left[\matrix{\uparrow & \uparrow & & \uparrow \cr f(e_1) & f(e_2) & \cdots & f(e_m) \cr \downarrow & \downarrow & & \downarrow \cr}\right].$$](lintrans111.png)

I claim that A is the matrix I want. To see this, take a vector $u \in \mathbb{R}^m$ and write it in component form:

![$$u = (u_1, u_2, \ldots, u_m) = \left[\matrix{u_1 \cr u_2 \cr \vdots \cr u_m \cr}\right].$$](lintrans113.png)

By the standard basis vector lemma above,

$$u = u_1 e_1 + u_2 e_2 + \cdots + u_m e_m.$$

Then I can use the fact that f is a linear transformation (so f of a sum is the sum of the f's, and constants like the $u_i$'s can be pulled out) to write

$$f(u) = f(u_1 e_1 + u_2 e_2 + \cdots + u_m e_m) = u_1 f(e_1) + u_2 f(e_2) + \cdots + u_m f(e_m).$$

On the other hand,

![$$A\cdot u = \left[\matrix{\uparrow & \uparrow & & \uparrow \cr f(e_1) & f(e_2) & \cdots & f(e_m) \cr \downarrow & \downarrow & & \downarrow \cr}\right] \left[\matrix{u_1 \cr u_2 \cr \vdots \cr u_m \cr}\right] = u_1f(e_1) + u_2f(e_2) + \cdots + u_mf(e_m).$$](lintrans117.png)

To get the last equality, think about how matrix multiplication works.

Therefore, $f(u) = A \cdot u$.
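The construction in this proof (use $f(e_1), \ldots, f(e_m)$ as the columns) can be written as a short Python sketch. The names `matrix_of` and `mat_vec` are hypothetical; the sample map is the function $f(x, y) = (x + 2y, x - y, -2x + 3y)$ from the earlier example. The code assumes f is already known to be linear; otherwise the matrix it builds need not reproduce f.

```python
def matrix_of(f, m):
    """Build the matrix of a linear map f: R^m -> R^n, as a list of n rows.

    Column i is f(e_i), where e_i is the i-th standard basis vector.
    """
    columns = [f(tuple(1 if j == i else 0 for j in range(m))) for i in range(m)]
    n = len(columns[0])
    # Transpose the list of columns into a list of rows
    return [[columns[i][r] for i in range(m)] for r in range(n)]


def mat_vec(A, u):
    """Multiply a matrix A (a list of rows) by a vector u."""
    return tuple(sum(a * x for a, x in zip(row, u)) for row in A)


def f(p):
    # The map (x, y) -> (x + 2y, x - y, -2x + 3y) from the examples
    x, y = p
    return (x + 2 * y, x - y, -2 * x + 3 * y)


A = matrix_of(f, 2)
print(A)                                  # [[1, 2], [1, -1], [-2, 3]]
print(mat_vec(A, (5, -1)) == f((5, -1)))  # True
```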

Linear transformations and matrices are not quite identical. If a linear transformation is like a person, then a matrix for the transformation is like a picture of the person --- the point being that there can be many different pictures of the same person. You get different "pictures" of a linear transformation by changing coordinates --- something I'll discuss later.

Example. Define $f: \mathbb{R}^2 \to \mathbb{R}^3$ by

$$f(x, y) = (x + 2y, x - y, -2x + 3y).$$

I already know that f is a linear transformation, and I found its matrix by inspection. Here's how it would work using the theorem. I feed the standard basis vectors into f:

$$f(1, 0) = (1, 1, -2), \quad f(0, 1) = (2, -1, 3).$$

I construct a matrix with these vectors as the columns:

![$$A = \left[\matrix{1 & 2 \cr 1 & -1 \cr -2 & 3 \cr}\right].$$](lintrans122.png)

It's the same matrix as I found by inspection.

You can combine linear transformations to obtain other linear transformations. First, I'll consider sums and scalar multiplication.

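Definition. Let $f, g: V \to W$ be linear transformations of vector spaces over a field F, and let $k \in F$. The sum $f + g$ and the scalar multiple $kf$ are the functions $V \to W$ defined by

$$(f + g)(v) = f(v) + g(v) \quad\hbox{for all}\quad v \in V,$$

$$(kf)(v) = k \cdot f(v) \quad\hbox{for all}\quad v \in V.$$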

Lemma. Let $f, g: V \to W$ be linear transformations of vector spaces over a field F, and let $k \in F$. Then:

(a) $f + g$ is a linear transformation.

(b) $kf$ is a linear transformation.

Proof. I'll prove the first part by way of example and leave the proof of the second part to you.

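Let u and v be vectors in the domain of f and g, and let $c \in F$. Then

$$(f + g)(cu + v) = f(cu + v) + g(cu + v) = cf(u) + f(v) + cg(u) + g(v),$$

$$c(f + g)(u) + (f + g)(v) = c\left(f(u) + g(u)\right) + f(v) + g(v) = cf(u) + f(v) + cg(u) + g(v).$$

The two sides agree, so $f + g$ is a linear transformation.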
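As a numerical illustration (not a proof), the Python sketch below builds $f + g$ and $kf$ pointwise, exactly as in the definition, and computes their matrices column by column. The maps `f` and `g` and the helper names are hypothetical examples; for them, the matrix of $f + g$ comes out as the sum of the two matrices, and the matrix of $5f$ as 5 times the matrix of f.

```python
def matrix_of(f, m):
    """Matrix (list of rows) of a linear map on R^m: column i is f(e_i)."""
    cols = [f(tuple(1 if j == i else 0 for j in range(m))) for i in range(m)]
    return [[cols[i][r] for i in range(m)] for r in range(len(cols[0]))]


def add_maps(f, g):
    """The sum f + g, defined pointwise by (f + g)(v) = f(v) + g(v)."""
    return lambda v: tuple(a + b for a, b in zip(f(v), g(v)))


def scale_map(k, f):
    """The scalar multiple kf, defined by (kf)(v) = k * f(v)."""
    return lambda v: tuple(k * a for a in f(v))


def f(p):
    # Hypothetical linear map R^2 -> R^2 with matrix [[1, 1], [3, 0]]
    x, y = p
    return (x + y, 3 * x)


def g(p):
    # Hypothetical linear map R^2 -> R^2 with matrix [[0, 2], [1, -1]]
    x, y = p
    return (2 * y, x - y)


print(matrix_of(add_maps(f, g), 2))   # [[1, 3], [4, -1]]
print(matrix_of(scale_map(5, f), 2))  # [[5, 5], [15, 0]]
```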

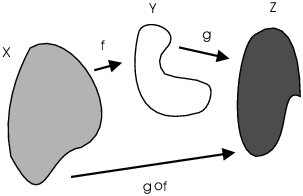

If $f: X \to Y$ and $g: Y \to Z$ are functions, the composite of f and g is the function $g \circ f: X \to Z$ given by

$$(g \circ f)(x) = g(f(x)).$$

The $\circ$ between the g and f does not mean multiplication, but it's so easy to confuse that I'll usually just write "![]()" when I want to compose functions.

Note that things go from left to right in the picture, but that they go right to left in "$g \circ f$". The effect is to do f first, then g.

Lemma. Let $f: U \to V$ and $g: V \to W$ be linear transformations of vector spaces over a field F. Then $g \circ f: U \to W$ is a linear transformation.

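Proof. Let u and v be vectors in the domain of f, and let $k \in F$. Then

$$(g \circ f)(ku + v) = g(f(ku + v)) = g(kf(u) + f(v)) = kg(f(u)) + g(f(v)) = k(g \circ f)(u) + (g \circ f)(v).$$

Hence, $g \circ f$ is a linear transformation.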

Now suppose $f: \mathbb{R}^m \to \mathbb{R}^n$ and $g: \mathbb{R}^n \to \mathbb{R}^p$ are linear transformations.

![]()

f and g can be represented by matrices; I'll use A for the matrix of f and B for the matrix of g, so

$$f(u) = A \cdot u,$$

$$g(v) = B \cdot v.$$

What's the matrix for the composite $g \circ f$?

$$(g \circ f)(u) = g(f(u)) = g(A \cdot u) = B \cdot (A \cdot u) = (BA) \cdot u.$$

The matrix for $g \circ f$ is $BA$, the product of the matrices for g and f, with the matrix for g on the left.

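Example. Suppose

$$f(x, y) = (x + y, 2x + 5y, -3y) \quad\hbox{and}\quad g(u, v, w) = (u + v - w, 2u + 3w, -u - v + 6w)$$

are linear transformations. Thus, $f: \mathbb{R}^2 \to \mathbb{R}^3$ and $g: \mathbb{R}^3 \to \mathbb{R}^3$.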

Write them in matrix form:

![$$f\left(\left[\matrix{x \cr y \cr}\right]\right) = \left[\matrix{1 & 1 \cr 2 & 5 \cr 0 & -3 \cr}\right] \left[\matrix{x \cr y \cr}\right], \quad g\left(\left[\matrix{u \cr v \cr w \cr}\right]\right) = \left[\matrix{1 & 1 & -1 \cr 2 & 0 & 3 \cr -1 & -1 & 6 \cr}\right] \left[\matrix{u \cr v \cr w \cr}\right].$$](lintrans168.png)

The matrix for the composite transformation $g \circ f$ is

![$$\left[\matrix{1 & 1 & -1 \cr 2 & 0 & 3 \cr -1 & -1 & 6 \cr}\right] \left[\matrix{1 & 1 \cr 2 & 5 \cr 0 & -3 \cr}\right] = \left[\matrix{3 & 9 \cr 2 & -7 \cr -3 & -24 \cr}\right].$$](lintrans170.png)

To write it out in equation form, multiply:

![$$(g\circ f)\left(\left[\matrix{x \cr y \cr}\right]\right) = \left[\matrix{3 & 9 \cr 2 & -7 \cr -3 & -24 \cr}\right] \left[\matrix{x \cr y \cr}\right] = \left[\matrix{3x + 9y \cr 2x - 7y \cr -3x - 24y \cr}\right].$$](lintrans171.png)

That is,

$$(g \circ f)(x, y) = (3x + 9y, 2x - 7y, -3x - 24y).$$
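The matrix product and the composite formula can be double-checked with a short Python sketch. `mat_mul` is a hypothetical helper; `A` and `B` are the matrices of f and g from this example, and the test compares $g(f(x, y))$ with the formula just obtained.

```python
def mat_mul(B, A):
    """Product B*A of matrices stored as lists of rows (B is p x n, A is n x m)."""
    if any(len(row) != len(A) for row in B):
        raise ValueError("columns of B must match rows of A")
    return [[sum(B[i][k] * A[k][j] for k in range(len(A))) for j in range(len(A[0]))]
            for i in range(len(B))]


A = [[1, 1], [2, 5], [0, -3]]             # matrix of f
B = [[1, 1, -1], [2, 0, 3], [-1, -1, 6]]  # matrix of g


def f(x, y):
    return (x + y, 2 * x + 5 * y, -3 * y)


def g(u, v, w):
    return (u + v - w, 2 * u + 3 * w, -u - v + 6 * w)


print(mat_mul(B, A))  # [[3, 9], [2, -7], [-3, -24]]
print(g(*f(2, -1)))   # (-3, 11, 18), the same as BA applied to (2, -1)
```

Note the order of the arguments: the matrix of g comes first, matching $BA$ for $g \circ f$.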

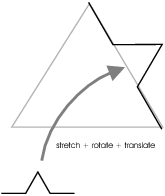

Example. The idea of composing transformations can be extended to affine transformations. For the sake of this example, you can think of an affine transformation as a linear transformation plus a translation (a constant). This provides a powerful way of doing various geometric constructions.

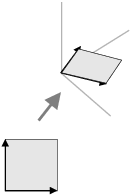

For example, I wanted to write a program to generate self-similar fractals. There are many self-similar fractals, but I was interested in those that can be constructed in the following way. Start with an initiator, which is a collection of segments in the plane. For example, this initiator is a square:

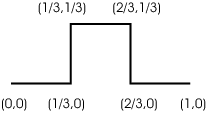

Next, I need a generator. It's a collection of segments which start at the point ![]() and end at the point ![](). Here's a generator shaped like a square hump:

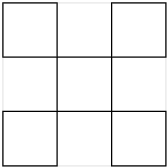

The construction proceeds in stages. Start with the initiator and replace each segment with a scaled copy of the generator. (There is an issue here of which way you "flip" the generator when you copy it, but I'll ignore this for simplicity.) Here's what you get by replacing the segments of the square with copies of the square hump:

Now keep going. Take the current figure and replace all of its segments with copies of the generator. And so on. Here's what you get after around 4 steps:

Roughly, self-similarity means that if you enlarge a piece of the figure, the enlarged piece looks like the original. If you imagine carrying out infinitely many steps of the construction above, you'd get a figure which would look "the same" no matter how much you enlarged it --- which is a very crude definition of a fractal. If you're interested in this stuff, you should look at Benoit Mandelbrot's classic book ([1]) --- it has great pictures!

What does this have to do with transformations? The idea is that to replace a segment of the current figure with a scaled copy of the generator, you need to stretch the generator, rotate it, then translate it. Here's a picture with a different initiator and generator:

Stretching by a factor of k amounts to multiplying by the matrix

$$\left[\matrix{k & 0 \cr 0 & k \cr}\right].$$

This is a linear transformation.

Rotation through an angle $\theta$ is accomplished by multiplying by

$$\left[\matrix{\cos \theta & -\sin \theta \cr \sin \theta & \cos \theta \cr}\right].$$

This is also a linear transformation.

Finally, translation by a vector $(a, b)$ amounts to adding the vector $(a, b)$. This is not a linear transformation (unless the vector is zero), since it sends the zero vector to $(a, b)$.

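Thus, to stretch by k, rotate through $\theta$, and then translate by $(a, b)$, you should do this:

$$\left[\matrix{x \cr y \cr}\right] \mapsto \left[\matrix{\cos \theta & -\sin \theta \cr \sin \theta & \cos \theta \cr}\right] \left[\matrix{k & 0 \cr 0 & k \cr}\right] \left[\matrix{x \cr y \cr}\right] + \left[\matrix{a \cr b \cr}\right].$$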

By thinking of the operations as linear or affine transformations, it is very easy to write down the formula.
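Here is a minimal Python sketch of the stretch, rotate, translate formula, together with one way it could be used in the fractal construction to put a copy of the generator onto a segment. It assumes, for illustration, that the generator is given as a list of points running from $(0, 0)$ to $(1, 0)$ and that angles are in radians, and it ignores the flipping issue mentioned above. The names `affine`, `replace_segment`, and `hump` are hypothetical.

```python
import math


def affine(k, theta, a, b):
    """Return the map (x, y) -> R(theta) S(k) (x, y) + (a, b).

    S(k) stretches by the factor k, R(theta) rotates counterclockwise
    through theta radians, and (a, b) is the translation vector.
    """
    c, s = math.cos(theta), math.sin(theta)

    def apply(point):
        x, y = point
        x, y = k * x, k * y                  # stretch
        x, y = c * x - s * y, s * x + c * y  # rotate
        return (x + a, y + b)                # translate
    return apply


def replace_segment(p, q, generator):
    """Map a generator running from (0, 0) to (1, 0) onto the segment from p to q."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    k = math.hypot(dx, dy)      # stretch factor = length of the segment
    theta = math.atan2(dy, dx)  # rotation angle = direction of the segment
    T = affine(k, theta, p[0], p[1])
    return [T(pt) for pt in generator]


# Hypothetical square-hump generator from (0, 0) to (1, 0)
hump = [(0, 0), (1/3, 0), (1/3, 1/3), (2/3, 1/3), (2/3, 0), (1, 0)]
print(replace_segment((0, 0), (0, 3), hump))
```

Because the stretch factor is the segment's length and the rotation angle is its direction, the generator's endpoint $(1, 0)$ lands exactly on q (up to floating-point rounding). Applying `replace_segment` to every segment of the current figure gives one stage of the construction.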

If $f: V \to W$ is a linear transformation, the inverse of f is a linear transformation $f^{-1}: W \to V$ which satisfies

$$f(f^{-1}(w)) = w \quad\hbox{for all}\quad w \in W,$$

$$f^{-1}(f(v)) = v \quad\hbox{for all}\quad v \in V.$$

That is, $f^{-1}$ is a linear transformation which "undoes" the effect of f. If f can be represented by matrix multiplication, it's natural to suppose that if A is the matrix of f, then $A^{-1}$ is the matrix of $f^{-1}$, and it's true.

Lemma. If A and B are $n \times n$ matrices and

$$AB \cdot u = u \quad\hbox{and}\quad BA \cdot u = u \quad\hbox{for all}\quad u \in \mathbb{R}^n,$$

then A and B are inverses, that is, $B = A^{-1}$.

Proof. Since $AB \cdot u = u$ for any vector u,

![$$AB\left[\matrix{1 \cr 0 \cr \vdots \cr 0 \cr}\right] = \left[\matrix{1 \cr 0 \cr \vdots \cr 0 \cr}\right],$$](lintrans199.png)

![$$AB\left[\matrix{0 \cr 1 \cr \vdots \cr 0 \cr}\right] = \left[\matrix{0 \cr 1 \cr \vdots \cr 0 \cr}\right],$$](lintrans200.png)

$$\vdots$$

![$$AB\left[\matrix{0 \cr 0 \cr \vdots \cr 1 \cr}\right] = \left[\matrix{0 \cr 0 \cr \vdots \cr 1 \cr}\right].$$](lintrans202.png)

Put the vectors in the equations above into the columns of a matrix. They form the identity:

![$$AB\left[\matrix{1 & 0 & \cdots & 0 \cr 0 & 1 & \cdots & 0 \cr \vdots & \vdots & & \vdots \cr 0 & 0 & \cdots & 1 \cr}\right] = \left[\matrix{1 & 0 & \cdots & 0 \cr 0 & 1 & \cdots & 0 \cr \vdots & \vdots & & \vdots \cr 0 & 0 & \cdots & 1 \cr}\right], \quad\hbox{or}\quad ABI = I, \quad\hbox{so}\quad AB = I.$$](lintrans203.png)

The same reasoning shows $BA = I$, so A and B are inverses.

Proposition. If $f: \mathbb{R}^n \to \mathbb{R}^n$ is a linear transformation whose matrix is A and $f^{-1}$ is the inverse of f, then the matrix of $f^{-1}$ is $A^{-1}$.

Remark. If I use $[f]$ to denote the matrix of the linear transformation f, this result can be expressed more concisely as

$$[f^{-1}] = [f]^{-1}.$$

Proof. Let A be the matrix of f and let B be the matrix of $f^{-1}$. Since f and $f^{-1}$ are inverses, for all $u \in \mathbb{R}^n$,

$$AB \cdot u = f(f^{-1}(u)) = u.$$

Similarly, $f^{-1}(f(u)) = u$ gives $BA \cdot u = u$ for all $u \in \mathbb{R}^n$. By the lemma, A and B are inverses, which is what I wanted to prove.

Of course, a linear transformation may not be invertible, and the last result gives an easy test --- a linear transformation is invertible if and only if its matrix is invertible. You should know almost half a dozen conditions which are equivalent to the invertibility of a matrix.
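The invertibility test and the computation of $A^{-1}$ can both be done by Gauss-Jordan elimination. The Python sketch below is one way to do it, using exact `fractions.Fraction` arithmetic; the choice of algorithm, the name `inverse`, and the sample matrix `A` are my assumptions, not part of these notes. It returns `None` when the matrix (and hence the linear transformation) is not invertible.

```python
from fractions import Fraction


def inverse(A):
    """Invert a square matrix (list of rows) by Gauss-Jordan elimination.

    Returns None if A is not invertible (some column has no nonzero pivot).
    """
    n = len(A)
    # Augment A with the identity matrix: [A | I]
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return None  # not invertible
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [x / p for x in M[col]]  # scale the pivot row so the pivot is 1
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [row[n:] for row in M]  # the right half is now A^{-1}


def mat_vec(A, u):
    return [sum(a * x for a, x in zip(row, u)) for row in A]


A = [[2, 1], [5, 3]]                     # hypothetical matrix of an invertible f
B = inverse(A)                           # matrix of f^{-1}
print(B)                                 # [[3, -1], [-5, 2]] as Fractions
print(mat_vec(B, mat_vec(A, [7, -4])))   # f^{-1}(f(u)) gives back u = (7, -4)
print(inverse([[1, 2], [2, 4]]))         # None: not invertible
```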

Example. Suppose ![]() is given by

![]()

The matrix of f is

![]()

Therefore, the matrix of $f^{-1}$ is

![]()

Hence, the inverse transformation is

![]()

So, for example,

![]()

You can check that ![]() as well.

Example. Let $P_2$ denote the set of polynomials with real coefficients of degree 2 or less. Thus,

$$P_2 = \{a + bx + cx^2 \mid a, b, c \in \mathbb{R}\}.$$

Here are some elements of $P_2$:

![]()

You can represent polynomials in $P_2$ by vectors. For example, here is how to represent ![]() as a vector:

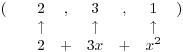

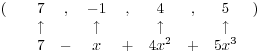

I'm writing the coefficients with the powers increasing to make it easy to extend this to higher powers. For example,

Returning to $P_2$, there is a function $D: P_2 \to P_2$ defined by

$$D(a, b, c) = (b, 2c, 0).$$

Do you see what it is?

If I switch back to polynomial notation, it is

$$D(a + bx + cx^2) = b + 2cx.$$

Of course, D is just differentiation.

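Now you know from calculus that:

(a) The derivative of a sum is the sum of the derivatives: $D(p + q) = D(p) + D(q)$.

(b) Constants can be pulled out of a derivative: $D(kp) = kD(p)$ for any constant k.

This means that D is a linear transformation $P_2 \to P_2$. What is its matrix?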

To find the matrix of a linear transformation (relative to the standard basis), apply the transformation to the standard basis vectors. Use the results as the columns of your matrix.

In vector form, $a + bx + cx^2$ is just $(a, b, c)$, so the standard basis vectors are

$$(1, 0, 0), \quad (0, 1, 0), \quad (0, 0, 1),$$

which correspond to the polynomials 1, x, and $x^2$.

As I apply D, I'll translate to polynomial notation to make it easier for you to follow:

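$$D(1, 0, 0) = (0, 0, 0) \qquad (D(1) = 0),$$

$$D(0, 1, 0) = (1, 0, 0) \qquad (D(x) = 1),$$

$$D(0, 0, 1) = (0, 2, 0) \qquad (D(x^2) = 2x).$$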

Therefore, the matrix of D is

![$$\left[\matrix{0 & 1 & 0 \cr 0 & 0 & 2 \cr 0 & 0 & 0 \cr}\right]. \quad\halmos$$](lintrans245.png)
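The same recipe in Python: a hypothetical helper `D` differentiates a coefficient tuple $(a, b, c)$, stored with powers increasing as above, and `matrix_of` (also a hypothetical name) assembles the columns $D(1, 0, 0)$, $D(0, 1, 0)$, $D(0, 0, 1)$.

```python
def matrix_of(f, m):
    """Matrix (list of rows) of a linear map on R^m: column i is f(e_i)."""
    cols = [f(tuple(1 if j == i else 0 for j in range(m))) for i in range(m)]
    return [[cols[i][r] for i in range(m)] for r in range(len(cols[0]))]


def D(coeffs):
    """Differentiate a polynomial stored as (a0, a1, a2, ...), powers increasing.

    The coefficient of x^(k-1) in the derivative is k * a_k; a 0 is appended
    so the output has the same length as the input.
    """
    return tuple(k * coeffs[k] for k in range(1, len(coeffs))) + (0,)


print(matrix_of(D, 3))  # [[0, 1, 0], [0, 0, 2], [0, 0, 0]]
print(D((5, -4, 3)))    # (-4, 6, 0): the derivative of 5 - 4x + 3x^2 is -4 + 6x
```

Because the coefficients are stored with powers increasing, the same `D` works unchanged for longer tuples, that is, for polynomials of higher degree.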

Example. Construct a linear transformation which maps the unit square $0 \le x \le 1$, $0 \le y \le 1$ to the parallelogram in $\mathbb{R}^3$ determined by the vectors $(2, -3, 6)$ and $(1, -1, 1)$.

The idea is to send vectors for the square's sides, namely $(1, 0)$ and $(0, 1)$, to the target vectors $(2, -3, 6)$ and $(1, -1, 1)$. If I do this with a linear transformation, the rest of the square will go with them: a point $(x, y) = x(1, 0) + y(0, 1)$ of the square goes to $x(2, -3, 6) + y(1, -1, 1)$, which is a point of the parallelogram.

The following matrix does it:

![$$\left[\matrix{2 & 1 \cr -3 & -1 \cr 6 & 1 \cr}\right] \left[\matrix{1 \cr 0 \cr}\right] = \left[\matrix{2 \cr -3 \cr 6 \cr}\right], \quad \left[\matrix{2 & 1 \cr -3 & -1 \cr 6 & 1 \cr}\right] \left[\matrix{0 \cr 1 \cr}\right] = \left[\matrix{1 \cr -1 \cr 1 \cr}\right].$$](lintrans256.png)

Therefore, the transformation is

![$$f\left(\left[\matrix{x \cr y \cr}\right]\right) = \left[\matrix{2 & 1 \cr -3 & -1 \cr 6 & 1 \cr}\right] \left[\matrix{x \cr y \cr}\right].\quad\halmos$$](lintrans257.png)
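A quick check of this answer in Python: in the sketch below, `f` simply applies the matrix above, and the list of corners is my own choice. It sends the four corners of the unit square to the four corners of the parallelogram, and a point $(x, y)$ of the square to $x(2, -3, 6) + y(1, -1, 1)$.

```python
A = [[2, 1],
     [-3, -1],
     [6, 1]]


def f(point):
    """Apply the 3 x 2 matrix A to a point (x, y) of the plane."""
    x, y = point
    return tuple(row[0] * x + row[1] * y for row in A)


corners = [(0, 0), (1, 0), (1, 1), (0, 1)]
print([f(c) for c in corners])
# [(0, 0, 0), (2, -3, 6), (3, -4, 7), (1, -1, 1)]
```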

Copyright 2008 by Bruce Ikenaga