I've given examples which illustrate how you can do arithmetic with matrices. Now I'll give precise definitions of the various matrix operations. This will allow me to prove some useful properties of these operations. If you look at the definitions, you'll see the ideas I illustrated earlier by example.

The proofs will probably look pretty abstract to you, somewhat like our earlier proofs for properties of number systems. How you approach them depends on what you intend to learn. If you're mainly interested in doing computations, you could skim these properties and definitions without worrying about the proofs. At least try to understand, by example if necessary, what they say. Get an idea of what kinds of manipulations "are allowed" with matrices.

If you're trying to learn this material in some depth (particularly if you intend to do more advanced math), try to read at least some of the proofs. You might also challenge yourself by writing some of the proofs on your own, for example the ones I've omitted.

If A is a matrix, the element in the $i^{\text{th}}$ row and $j^{\text{th}}$ column will be denoted $A_{ij}$. (Sometimes I'll switch to lower-case letters and use $a_{ij}$ instead of $A_{ij}$.) Thus,

$(A + B)_{ij}$ is the $(i, j)^{\text{th}}$ entry of $A + B$.

$(A - B)_{ij}$ is the $(i, j)^{\text{th}}$ entry of $A - B$.

$(2A)_{ij}$ is the $(i, j)^{\text{th}}$ entry of $2A$.

$(AB)_{ij}$ is the $(i, j)^{\text{th}}$ entry of $AB$.

Keep in mind that a thing with an "$ij$" subscript is a number; without the subscript, you have a matrix.

Remark. To avoid confusion, use a comma between the indices where appropriate. "$A_{24}$" clearly means the entry in row 2, column 4. However, for the entry in row 13, column 6, write "$A_{13,6}$", not "$A_{136}$", since "$A_{136}$" could also be read as the entry in row 1, column 36.

Here are the formal definitions of the matrix operations. When I write something like "for all i and j", you should take this to mean "for all i such that $1 \le i \le m$, where m is the number of rows, and for all j such that $1 \le j \le n$, where n is the number of columns".

Definition. (Equality) Let A and B be matrices. Then $A = B$ if and only if A and B have the same dimensions and $A_{ij} = B_{ij}$ for all i and j.

This definition says that two matrices are equal if they have the same dimensions and corresponding entries are equal.
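If you'd like to experiment with these definitions on a computer, here is a minimal Python sketch of the equality test. It assumes a matrix is stored as a list of rows, and the helper names `dims` and `matrices_equal` are my own hypothetical choices, not standard functions. Note that Python counts rows and columns from 0, not 1.

```python
# Hypothetical helpers for illustration; a matrix is a list of equal-length rows.

def dims(A):
    """Return (number of rows, number of columns)."""
    return (len(A), len(A[0]) if A else 0)


def matrices_equal(A, B):
    """A = B iff A and B have the same dimensions and A[i][j] == B[i][j] for all i, j."""
    if dims(A) != dims(B):
        return False
    rows, cols = dims(A)
    return all(A[i][j] == B[i][j] for i in range(rows) for j in range(cols))


print(matrices_equal([[1, 2], [3, 4]], [[1, 2], [3, 4]]))  # True
print(matrices_equal([[1, 2, 0]], [[1], [2], [0]]))         # False: 1 x 3 vs. 3 x 1
```

The second call shows why the dimension check matters: both matrices contain the entries 1, 2, 0, but they are not equal.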

Definition. (Sums and Differences) Let A and B be matrices. If A and B have the same dimensions, then the sum $A + B$ and the difference $A - B$ are defined, and their entries are given by

$$(A + B)_{ij} = A_{ij} + B_{ij} \quad\text{and}\quad (A - B)_{ij} = A_{ij} - B_{ij} \quad\text{for all } i \text{ and } j.$$

This definition says that if two matrices have the same dimensions, you can add or subtract them by adding or subtracting corresponding entries.
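Here is a minimal Python sketch of this definition, assuming matrices are stored as lists of rows. The names `same_dims`, `mat_add`, and `mat_sub` are hypothetical helpers; the point is that each entry of the result depends only on the entries in the same position.

```python
# Hypothetical helpers for illustration; a matrix is a list of equal-length rows.

def same_dims(A, B):
    """True if A and B have the same number of rows and of columns."""
    return len(A) == len(B) and all(len(ra) == len(rb) for ra, rb in zip(A, B))


def mat_add(A, B):
    """(A + B)[i][j] = A[i][j] + B[i][j]."""
    if not same_dims(A, B):
        raise ValueError("A + B is defined only when A and B have the same dimensions")
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mat_sub(A, B):
    """(A - B)[i][j] = A[i][j] - B[i][j]."""
    if not same_dims(A, B):
        raise ValueError("A - B is defined only when A and B have the same dimensions")
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


A = [[1, 2], [3, 4]]
B = [[10, 20], [30, 40]]
print(mat_add(A, B))  # [[11, 22], [33, 44]]
print(mat_sub(A, B))  # [[-9, -18], [-27, -36]]
```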

Definition. The $m \times n$ zero matrix 0 is the $m \times n$ matrix whose $(i, j)^{\text{th}}$ entry is given by

$$0_{ij} = 0 \quad\text{for all } i \text{ and } j.$$
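In Python (again storing a matrix as a list of rows), a minimal sketch of the zero matrix might look like this; `zero_matrix` is a hypothetical name. The comment points out a common pitfall when building nested lists.

```python
# Hypothetical helper for illustration; a matrix is a list of rows.

def zero_matrix(m, n):
    """The m x n zero matrix: every entry is 0."""
    # Build a fresh list for each row.  Writing [[0] * n] * m instead would
    # create m references to one shared row, so changing one entry would
    # change that entry in every row.
    return [[0] * n for _ in range(m)]


Z = zero_matrix(2, 3)
print(Z)  # [[0, 0, 0], [0, 0, 0]]
```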

Proposition. Let A, B, and C be $m \times n$ matrices, and let 0 denote the $m \times n$ zero matrix. Then:

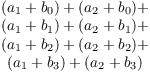

(a) (Associativity of Addition)

$$(A + B) + C = A + (B + C).$$

(b) (Commutativity of Addition)

$$A + B = B + A.$$

(c) (Identity for Addition)

$$0 + A = A \quad\text{and}\quad A + 0 = A.$$

Remark. You'll see that the idea in many of these proofs for matrices is to reduce the proof to a known property of numbers (such as associativity or commutativity) by looking at the entries of the matrices. This is what the definition of equality of matrices allows us to do.

You can also see that the properties in this Proposition look "obvious": they are statements that you'd "expect" to be true, even if no one told you. That is typical of most of the material in this section, which is why you should not be intimidated by all the symbols.

Most of the proofs that follow are fairly simple. Hence, I won't write out all of them, and I won't always give them in the step-by-step detail I use for this Proposition.

Proof. Each of the properties is a matrix equation. The definition of matrix equality says that I can prove that two matrices are equal by proving that their corresponding entries are equal. I'll follow this strategy in each of the proofs that follows.

(a) To prove that ![]() , I have to

show that their corresponding entries are equal:

, I have to

show that their corresponding entries are equal:

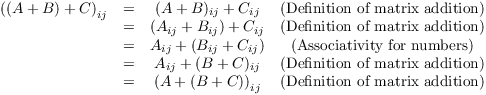

![]()

(Do you understand what this says? $[(A + B) + C]_{ij}$ is the $(i, j)^{\text{th}}$ entry of $(A + B) + C$, while $[A + (B + C)]_{ij}$ is the $(i, j)^{\text{th}}$ entry of $A + (B + C)$.)

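Since this is the first proof of this kind that I've done, I'll show the justification for each step.

$$\begin{aligned}
[(A + B) + C]_{ij} &= (A + B)_{ij} + C_{ij} && \text{(definition of matrix addition)} \\
&= (A_{ij} + B_{ij}) + C_{ij} && \text{(definition of matrix addition)} \\
&= A_{ij} + (B_{ij} + C_{ij}) && \text{(associativity)} \\
&= A_{ij} + (B + C)_{ij} && \text{(definition of matrix addition)} \\
&= [A + (B + C)]_{ij} && \text{(definition of matrix addition)}
\end{aligned}$$

"Associativity" refers to associativity of addition for numbers.

Therefore, $(A + B) + C = A + (B + C)$, because their corresponding entries are equal.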

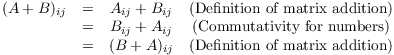

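(b) To prove that $A + B = B + A$, I have to show that their corresponding entries are equal:

$$(A + B)_{ij} = (B + A)_{ij}.$$

By definition of matrix addition,

$$\begin{aligned}
(A + B)_{ij} &= A_{ij} + B_{ij} && \text{(definition of matrix addition)} \\
&= B_{ij} + A_{ij} && \text{(commutativity)} \\
&= (B + A)_{ij} && \text{(definition of matrix addition)}
\end{aligned}$$

"Commutativity" refers to commutativity of addition of numbers.

Therefore,

$$A + B = B + A.$$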

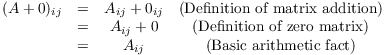

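(c) To prove that $A + 0 = A$, I have to show that their corresponding entries are equal:

$$(A + 0)_{ij} = A_{ij}.$$

By definition of matrix addition and the zero matrix,

$$\begin{aligned}
(A + 0)_{ij} &= A_{ij} + 0_{ij} && \text{(definition of matrix addition)} \\
&= A_{ij} + 0 && \text{(definition of the zero matrix)} \\
&= A_{ij} && \text{(basic arithmetic fact)}
\end{aligned}$$

"Basic arithmetic fact" refers to the fact that if x is a number, then $x + 0 = x$.

Therefore, $A + 0 = A$.

In part (b), I showed that addition is commutative. Therefore,

$$0 + A = A + 0 = A.$$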

Definition. (Multiplication by Numbers) If A is a matrix and k is a number, then $kA$ is the matrix having the same dimensions as A, and whose entries are given by

$$(kA)_{ij} = k \cdot A_{ij} \quad\text{for all } i \text{ and } j.$$

(It's considered ugly to write a number on the right side of a matrix if you want to multiply. For the record, I'll define $Ak$ to be the same as $kA$.)

This definition says that to multiply a matrix by a number, multiply each entry by the number.

Definition. If A is a matrix, then $-A$ is the matrix having the same dimensions as A, and whose entries are given by

$$(-A)_{ij} = -A_{ij} \quad\text{for all } i \text{ and } j.$$
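Both of these definitions act on one entry at a time, so a Python sketch is short. The names `scalar_mul` and `negate` are hypothetical, and matrices are assumed to be lists of rows.

```python
# Hypothetical helpers for illustration; a matrix is a list of rows.

def scalar_mul(k, A):
    """kA: multiply every entry of A by the number k."""
    return [[k * a for a in row] for row in A]


def negate(A):
    """-A: replace every entry of A by its negative."""
    return [[-a for a in row] for row in A]


A = [[1, -2], [0, 3]]
print(scalar_mul(3, A))  # [[3, -6], [0, 9]]
print(negate(A))         # [[-1, 2], [0, -3]]
```

Comparing `negate(A)` with `scalar_mul(-1, A)` gives a numerical check (not a proof) that $(-1) \cdot A = -A$ for this particular A.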

Proposition. Let A and B be matrices with the same dimensions, and let k be a number. Then:

(a) $k(A + B) = kA + kB$ and $k(A - B) = kA - kB$.

(b) $0 \cdot A = 0$.

(c) $1 \cdot A = A$.

(d) $(-1) \cdot A = -A$.

(e) $A - B = A + (-B)$.

Note that in (b), the 0 on the left is the number 0, while the 0 on the right is the zero matrix.

Proof. I'll prove (a) and (c) by way of example and leave the proofs of the other parts to you.

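First, I want to show that $k(A + B) = kA + kB$. I have to show that corresponding entries are equal, which means

$$[k(A + B)]_{ij} = (kA + kB)_{ij}.$$

I apply the definitions of matrix addition and multiplication of a matrix by a number (the last step in the first line uses the distributive law for numbers):

$$[k(A + B)]_{ij} = k \cdot (A + B)_{ij} = k(A_{ij} + B_{ij}) = kA_{ij} + kB_{ij},$$

$$(kA + kB)_{ij} = (kA)_{ij} + (kB)_{ij} = kA_{ij} + kB_{ij}.$$

Therefore, $[k(A + B)]_{ij} = (kA + kB)_{ij}$, so $k(A + B) = kA + kB$.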

Next, I want to show that $k(A - B) = kA - kB$. I just repeat the last proof with "-" in place of "+".

I have to show that corresponding entries are equal, which means

$$[k(A - B)]_{ij} = (kA - kB)_{ij}.$$

I apply the definitions of matrix subtraction and multiplication of a matrix by a number:

$$[k(A - B)]_{ij} = k \cdot (A - B)_{ij} = k(A_{ij} - B_{ij}) = kA_{ij} - kB_{ij},$$

$$(kA - kB)_{ij} = (kA)_{ij} - (kB)_{ij} = kA_{ij} - kB_{ij}.$$

Therefore, $[k(A - B)]_{ij} = (kA - kB)_{ij}$, so $k(A - B) = kA - kB$.

For (c), I want to show that $1 \cdot A = A$. As usual, this means I must show that they have the same $(i, j)^{\text{th}}$ entries:

$$(1 \cdot A)_{ij} = A_{ij}.$$

I use the definition of multiplying a matrix by a number:

$$(1 \cdot A)_{ij} = 1 \cdot A_{ij} = A_{ij}.$$

This proves that $1 \cdot A = A$.

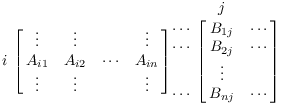

Suppose A and B are matrices with compatible dimensions for multiplication, so the number of columns of A equals the number of rows of B; call this number n. Where does the $(i, j)^{\text{th}}$ entry of $AB$ come from? It comes from multiplying the $i^{\text{th}}$ row of A with the $j^{\text{th}}$ column of B: corresponding elements are multiplied, and then the products are summed:

$$A_{i1}B_{1j} + A_{i2}B_{2j} + \cdots + A_{in}B_{nj}.$$

Look at the pattern of the subscripts in this sum. You can see that the "inner" matching subscripts are going from 1 to n, while the "outer" "i" and "j" don't change. Hence, I can write the $(i, j)^{\text{th}}$ entry of the product in summation form as

$$\sum_{k=1}^{n} A_{ik}B_{kj}.$$

That is,

$$(AB)_{ij} = \sum_{k=1}^{n} A_{ik}B_{kj}.$$

Definition. (Multiplication) Let A be an $m \times n$ matrix and let B be an $n \times p$ matrix. The product $AB$ is the $m \times p$ matrix whose $(i, j)^{\text{th}}$ entry is given by

$$(AB)_{ij} = \sum_{k=1}^{n} A_{ik}B_{kj} \quad\text{for all } i \text{ and } j.$$
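Here is a minimal Python sketch that follows the summation formula literally, one entry at a time. It assumes matrices are lists of rows, and `mat_mul` is a hypothetical name. (For serious numerical work you would use a library such as NumPy rather than writing the loops yourself.)

```python
# Hypothetical helper for illustration; a matrix is a list of rows.

def mat_mul(A, B):
    """(AB)[i][j] = sum over k of A[i][k] * B[k][j], for A m x n and B n x p."""
    m, n = len(A), len(A[0])
    if len(B) != n:
        raise ValueError("AB is defined only when A has as many columns as B has rows")
    p = len(B[0])
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
            for i in range(m)]


A = [[1, 2, 3],
     [4, 5, 6]]    # 2 x 3
B = [[1, 0],
     [0, 1],
     [2, -1]]      # 3 x 2
print(mat_mul(A, B))  # [[7, -1], [16, -1]]  (a 2 x 2 matrix)
```

For instance, the $(1, 1)^{\text{th}}$ entry is $1 \cdot 1 + 2 \cdot 0 + 3 \cdot 2 = 7$. Reversing the order, `mat_mul(B, A)` is also defined, but it is $3 \times 3$, so $AB \ne BA$ here.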

It's often useful to have a symbol which you can use to compare two quantities i and j; specifically, a symbol which equals 1 when $i = j$ and equals 0 when $i \ne j$.

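Definition. The Kronecker delta is defined by

$$\delta_{ij} = \begin{cases} 1 & \text{if } i = j, \\ 0 & \text{if } i \ne j. \end{cases}$$

For example,

$$\delta_{11} = 1, \quad \delta_{22} = 1, \quad \delta_{12} = 0, \quad \delta_{31} = 0.$$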

Lemma. For $1 \le i \le n$, $\displaystyle \sum_{j=1}^{n} \delta_{ij} x_j = x_i$.

Proof. To see what's happening, write out the sum:

$$\sum_{j=1}^{n} \delta_{ij} x_j = \delta_{i1} x_1 + \delta_{i2} x_2 + \cdots + \delta_{in} x_n.$$

By definition, each $\delta$ with unequal subscripts is 0. The only $\delta$ that is not 0 is the one with equal subscripts. Since i is fixed, the $\delta$ that is not 0 is $\delta_{ii}$, which equals 1. Thus,

$$\sum_{j=1}^{n} \delta_{ij} x_j = \delta_{ii} x_i = x_i.$$
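A quick numerical check of the lemma in Python may help make it concrete. This is a sketch, not a proof: `delta` and `delta_sum` are hypothetical helper names, and the list `x` holds $x_1, \ldots, x_n$ at positions $0, \ldots, n - 1$.

```python
# Hypothetical helpers for illustration.

def delta(i, j):
    """Kronecker delta: 1 if i == j, otherwise 0."""
    return 1 if i == j else 0


def delta_sum(i, x):
    """Compute the sum over j of delta(i, j) * x[j]."""
    return sum(delta(i, j) * x[j] for j in range(len(x)))


x = [4, -7, 3, 9]
# Only the j == i term is nonzero, so each sum collapses to x[i].
print([delta_sum(i, x) for i in range(len(x))])  # [4, -7, 3, 9]
```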

Definition. The $n \times n$ identity matrix $I_n$ is the $n \times n$ matrix whose $(i, j)^{\text{th}}$ entry is given by

$$(I_n)_{ij} = \delta_{ij}.$$

I will write I instead of $I_n$ if there's no risk of confusion.
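Since the entries of $I_n$ are Kronecker deltas, a Python sketch can build it directly from the definition (`identity` is a hypothetical name; matrices are lists of rows, indexed from 0).

```python
# Hypothetical helper for illustration; a matrix is a list of rows.

def identity(n):
    """I_n: the n x n matrix whose (i, j) entry is the Kronecker delta of i and j."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


print(identity(3))  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```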

Before I give the next result, I'll discuss a technique which is often used when you have two summations, one inside the other. For example, suppose you have

$$\sum_{i=1}^{2} \sum_{j=0}^{3} a_{ij}.$$

If I write out the terms, they look like this:

$$(a_{10} + a_{11} + a_{12} + a_{13}) + (a_{20} + a_{21} + a_{22} + a_{23}).$$

You can see I "cycled" through the inner ("j") index from 0 to 3 first, while holding $i = 1$. Then I changed i to 2, and cycled through the j index again. To interchange the order of summation means that I will get the same sum if I cycle through i first, then j. That is,

$$\sum_{i=1}^{2} \sum_{j=0}^{3} a_{ij} = \sum_{j=0}^{3} \sum_{i=1}^{2} a_{ij}.$$

Here is how $\displaystyle \sum_{j=0}^{3} \sum_{i=1}^{2} a_{ij}$ looks if I write out the terms:

$$(a_{10} + a_{20}) + (a_{11} + a_{21}) + (a_{12} + a_{22}) + (a_{13} + a_{23}).$$

You can see that I get exactly the same terms, just in a different order. Therefore, the sums are the same.

In general, if $f(i, j)$ is some expression involving i and j, then

$$\sum_{i=1}^{m} \sum_{j=1}^{n} f(i, j) = \sum_{j=1}^{n} \sum_{i=1}^{m} f(i, j).$$

A similar result holds for three or more sums.
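Here is a small Python sketch of interchanging the order of summation, using the same index ranges as the example ($i = 1, 2$ and $j = 0, \ldots, 3$). The summand `f` is a hypothetical expression chosen only for illustration; any expression in i and j would do.

```python
# Hypothetical summand chosen for illustration.
def f(i, j):
    return 10 * i + j * j


I_RANGE = range(1, 3)   # i = 1, 2
J_RANGE = range(0, 4)   # j = 0, 1, 2, 3

# i on the outside, j on the inside
i_first = sum(f(i, j) for i in I_RANGE for j in J_RANGE)
# j on the outside, i on the inside
j_first = sum(f(i, j) for j in J_RANGE for i in I_RANGE)
print(i_first, j_first)  # 148 148

# The same (i, j) pairs, just visited in a different order.
pairs_i_first = [(i, j) for i in I_RANGE for j in J_RANGE]
pairs_j_first = [(i, j) for j in J_RANGE for i in I_RANGE]
print(sorted(pairs_i_first) == sorted(pairs_j_first))  # True
```

I used integer terms on purpose. With floating-point terms, the two orders can differ in the last few digits, because computer addition of floating-point numbers is not exactly associative, even though addition of real numbers is.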

I will use interchanging the order of summation in proving associativity of matrix multiplication.

Proposition.

(a) (Associativity of Matrix Multiplication) If A, B, and C are matrices which are compatible for multiplication, then

$$(AB)C = A(BC).$$

(b) (Distributivity of Multiplication over Addition) If A, B, C, D, E, and F are matrices compatible for addition and multiplication, then

$$A(B + C) = AB + AC \quad\text{and}\quad (D + E)F = DF + EF.$$

(c) If j and k are numbers and A and B are matrices which are compatible for multiplication, then

$$(jA)(kB) = (jk)(AB).$$

(d) (Identity for Multiplication) If A is an $m \times n$ matrix, then

$$A I_n = A \quad\text{and}\quad I_m A = A.$$

The "compatible for addition" and "compatible for multiplication" assumptions mean that the matrices should have dimensions which make the operations in the equations legal; otherwise, there are no restrictions on what the dimensions can be.

Proof. I'll prove (a) and part of (d) by way of example, and leave the proofs of the other parts to you.

Before starting, I should say that this proof is rather technical, but try to follow along as best you can. I'll use i, j, k, and l as subscripts.

Suppose that A is an ![]() matrix, B is an

matrix, B is an ![]() matrix, and C is a

matrix, and C is a ![]() matrix. I want to prove that

matrix. I want to prove that ![]() . I have to show that corresponding entries

are equal, i.e.

. I have to show that corresponding entries

are equal, i.e.

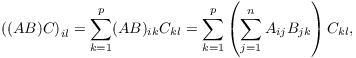

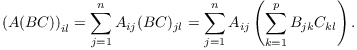

![]()

By definition of matrix multiplication,

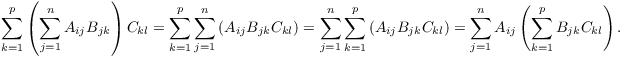

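If you stare at those two terrible double sums for a while, you can see that they involve the same A, B, and C terms, and they involve the same summations, but in different orders. I'm allowed to convert one into the other by interchanging the order of summation, and using the distributive law:

$$\begin{aligned}
[(AB)C]_{il} &= \sum_{k=1}^{p} \left(\sum_{j=1}^{n} A_{ij} B_{jk}\right) C_{kl} = \sum_{k=1}^{p} \sum_{j=1}^{n} A_{ij} B_{jk} C_{kl} \\
&= \sum_{j=1}^{n} \sum_{k=1}^{p} A_{ij} B_{jk} C_{kl} = \sum_{j=1}^{n} A_{ij} \left(\sum_{k=1}^{p} B_{jk} C_{kl}\right) = [A(BC)]_{il}.
\end{aligned}$$

Therefore, $[(AB)C]_{il} = [A(BC)]_{il}$, and so $(AB)C = A(BC)$. Wow!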

Next, I'll prove the second part of (d), namely that $I_m A = A$. As usual, I must show that corresponding entries are equal:

$$(I_m A)_{ij} = A_{ij}.$$

By definition of matrix multiplication and the identity matrix,

$$(I_m A)_{ij} = \sum_{k=1}^{m} (I_m)_{ik} A_{kj} = \sum_{k=1}^{m} \delta_{ik} A_{kj}.$$

Using the lemma I proved on the Kronecker delta (with $x_k = A_{kj}$), I get

$$\sum_{k=1}^{m} \delta_{ik} A_{kj} = A_{ij}.$$

Thus, $(I_m A)_{ij} = A_{ij}$, and so $I_m A = A$.
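A numerical spot check cannot replace the proof, but it can catch mistakes in applying the formulas. This Python sketch, with hypothetical helpers `mat_mul` and `identity` and matrices stored as lists of rows, checks associativity and both identity laws for one choice of A, B, and C.

```python
# Hypothetical helpers for illustration; a matrix is a list of rows.

def mat_mul(A, B):
    """(AB)[i][j] = sum over k of A[i][k] * B[k][j] (assumes compatible dimensions)."""
    n = len(B)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(len(B[0]))]
            for i in range(len(A))]


def identity(n):
    """The n x n identity matrix."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


A = [[1, 2, 0], [-1, 3, 4]]      # 2 x 3
B = [[2, 1], [0, -1], [5, 3]]    # 3 x 2
C = [[1, 4, 0], [1, 1, -2]]      # 2 x 3

print(mat_mul(mat_mul(A, B), C) == mat_mul(A, mat_mul(B, C)))  # True: (AB)C = A(BC)
print(mat_mul(identity(2), A) == A)                             # True: I_2 A = A
print(mat_mul(A, identity(3)) == A)                             # True: A I_3 = A
```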

Definition. Let A be an $m \times n$ matrix. The transpose of A is the $n \times m$ matrix $A^T$ whose $(i, j)^{\text{th}}$ entry is given by

$$(A^T)_{ij} = A_{ji}.$$
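Here is a minimal Python sketch of the definition, with matrices as lists of rows; `transpose` is a hypothetical name. The comment mentions an idiomatic shortcut using the built-in `zip`.

```python
# Hypothetical helper for illustration; a matrix is a list of rows.

def transpose(A):
    """A^T: the (i, j) entry of A^T is the (j, i) entry of A."""
    m, n = len(A), len(A[0])   # A is m x n, so A^T is n x m
    # Idiomatic equivalent: [list(col) for col in zip(*A)]
    return [[A[j][i] for j in range(m)] for i in range(n)]


A = [[1, 2, 3],
     [4, 5, 6]]
print(transpose(A))  # [[1, 4], [2, 5], [3, 6]]
```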

Proposition. Let A and B be matrices of the same dimensions, and let k be a number. Then:

(a) $(A + B)^T = A^T + B^T$.

(b) $(kA)^T = kA^T$.

(c) $(A^T)^T = A$.

Proof. I'll prove (b) by way of example and leave the proofs of the other parts for you.

I want to show that $(kA)^T = kA^T$. I have to show the corresponding entries are equal:

$$[(kA)^T]_{ij} = (kA^T)_{ij}.$$

Now

$$[(kA)^T]_{ij} = (kA)_{ji} = kA_{ji},$$

$$(kA^T)_{ij} = k(A^T)_{ij} = kA_{ji}.$$

Thus, $[(kA)^T]_{ij} = (kA^T)_{ij}$, so $(kA)^T = kA^T$.

Proposition. Suppose A and B are matrices which are compatible for multiplication. Then

$$(AB)^T = B^T A^T.$$

Proof. I'll derive this using the matrix multiplication formula.

$$[(AB)^T]_{ij} = (AB)_{ji} = \sum_k A_{jk} B_{ki}.$$

Let $A^T_{kj}$ denote the $(k, j)^{\text{th}}$ entry of $A^T$, and likewise for B and $B^T$. Then

$$\sum_k A_{jk} B_{ki} = \sum_k A^T_{kj} B^T_{ik} = \sum_k B^T_{ik} A^T_{kj}.$$

The sum on the right is the $(i, j)^{\text{th}}$ entry of $B^T A^T$, while $[(AB)^T]_{ij}$ is the $(i, j)^{\text{th}}$ entry of $(AB)^T$. Therefore, $(AB)^T = B^T A^T$, since their corresponding entries are equal.
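Here is a numerical spot check of this Proposition in Python, using hypothetical helpers `mat_mul` and `transpose` (matrices stored as lists of rows). It also shows why the order must reverse: with A of size $2 \times 3$ and B of size $3 \times 2$, the product $A^T B^T$ is $3 \times 3$, so it can't equal the $2 \times 2$ matrix $(AB)^T$.

```python
# Hypothetical helpers for illustration; a matrix is a list of rows.

def mat_mul(A, B):
    """(AB)[i][j] = sum over k of A[i][k] * B[k][j] (assumes compatible dimensions)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    """(A^T)[i][j] = A[j][i]."""
    return [[A[j][i] for j in range(len(A))] for i in range(len(A[0]))]


A = [[1, 2, 3], [4, 5, 6]]     # 2 x 3
B = [[1, 0], [0, 1], [2, -1]]  # 3 x 2

lhs = transpose(mat_mul(A, B))             # (AB)^T
rhs = mat_mul(transpose(B), transpose(A))  # B^T A^T
print(lhs, rhs == lhs)  # [[7, 16], [-1, -1]] True

wrong_order = mat_mul(transpose(A), transpose(B))  # A^T B^T
print(len(wrong_order), len(wrong_order[0]))       # 3 3
```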

Definition.

(a) A matrix X is symmetric if $X^T = X$.

(b) A matrix X is skew symmetric if $X^T = -X$.

Both definitions imply that X is a square matrix: if X is $m \times n$, then $X^T$ is $n \times m$, so $X^T$ can equal X or $-X$ only if $m = n$.

Using the definition of transpose, I can express these definitions in terms of elements.

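X is symmetric if

$$X_{ij} = X_{ji} \quad\text{for all } i \text{ and } j.$$

X is skew symmetric if

$$X_{ij} = -X_{ji} \quad\text{for all } i \text{ and } j.$$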

Visually, a symmetric matrix is symmetric across its main diagonal (the diagonal running from northwest to southeast). For example, this real matrix is symmetric:

![$$\left[\matrix{ 0 & 2 & -9 \cr 2 & \sqrt{3} & 4 \cr -9 & 4 & 5 \cr}\right].$$](matrix-properties178.png)

Here's a skew symmetric real matrix:

![$$\left[\matrix{ 0 & -3 & -2 \cr 3 & 0 & 17 \cr 2 & -17 & 0 \cr}\right].$$](matrix-properties179.png)

In a skew symmetric matrix, entries which are symmetrically located across the main diagonal are negatives of one another. The entries on the main diagonal must be 0: each one satisfies $X_{ii} = -X_{ii}$, so $2X_{ii} = 0$.
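In Python, the element-wise conditions translate directly into comparisons with the transpose. This is a sketch with hypothetical helper names (`transpose`, `is_symmetric`, `is_skew_symmetric`), applied to the two example matrices above, with $\sqrt{3}$ approximated by a floating-point number.

```python
import math

# Hypothetical helpers for illustration; a matrix is a list of rows.

def transpose(X):
    """(X^T)[i][j] = X[j][i]."""
    return [[X[j][i] for j in range(len(X))] for i in range(len(X[0]))]


def is_symmetric(X):
    """X^T = X.  A non-square X fails automatically, since X^T has a different shape."""
    return transpose(X) == X


def is_skew_symmetric(X):
    """X^T = -X."""
    return transpose(X) == [[-x for x in row] for row in X]


S = [[0, 2, -9], [2, math.sqrt(3), 4], [-9, 4, 5]]
K = [[0, -3, -2], [3, 0, 17], [2, -17, 0]]
print(is_symmetric(S), is_skew_symmetric(S))  # True False
print(is_symmetric(K), is_skew_symmetric(K))  # False True
```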

The next result is pretty easy, but it illustrates how you can use the definitions of symmetry and skew symmetry in writing proofs. In these proofs, in contrast to earlier proofs, I don't need to write out the entries of the matrices, since I can use properties I've already proved.

Proposition.

(a) The sum of symmetric matrices is symmetric.

(b) The sum of skew symmetric matrices is skew symmetric.

Proof. (a) Let A and B be symmetric. I must show that $A + B$ is symmetric. Now

$$(A + B)^T = A^T + B^T = A + B.$$

The first equality follows from a property I proved for transposes.

The second equality follows from the fact that A is symmetric (so $A^T = A$) and B is symmetric (so $B^T = B$).

Since $(A + B)^T = A + B$, it follows that $A + B$ is symmetric.

(b) Let A and B be skew symmetric, so $A^T = -A$ and $B^T = -B$. I must show that $A + B$ is skew symmetric. Now

$$(A + B)^T = A^T + B^T = (-A) + (-B) = -(A + B).$$

Therefore, $A + B$ is skew symmetric.

Copyright 2020 by Bruce Ikenaga