We will look at three subspaces associated to a matrix: the row space, the column space, and the null space. They provide important information about the matrix and the linear transformation associated to it. In this section, we'll discuss the row space of a matrix. We'll also discuss algorithms for finding a basis for a subspace spanned by a set of vectors, determining whether a set of vectors is independent, and adding vectors to an independent set to get a basis.

A lot of what follows generalizes to matrices with entries in a commutative ring with identity, but we will almost always stick to matrices over a field in this section.

Definition. Let A be an $m \times n$ matrix with entries in a field F. The row vectors of A are the vectors in $F^n$ corresponding to the rows of A. The row space of A is the subspace of $F^n$ spanned by the row vectors of A.

For example, consider the real matrix

![$$A = \left[\matrix{1 & 0 \cr 0 & 1 \cr 0 & 0 \cr}\right].$$](row-space4.png)

The row vectors are $(1, 0)$, $(0, 1)$, and $(0, 0)$. The row space is the subspace of $\mathbb{R}^2$ spanned by these vectors. Since the first two vectors are the standard basis vectors for $\mathbb{R}^2$, the row space is $\mathbb{R}^2$.

Lemma. Let A be a matrix with entries in a field. If E is an elementary row operation, then $E(A)$ has the same row space as A.

Proof. If E is an operation of the form $r_i \leftrightarrow r_j$, then $E(A)$ and A have the same rows (except for order), so it's clear that their row vectors have the same span. Hence, the matrices have the same row space.

If E is an operation of the form $r_i \to a r_i$ where $a \ne 0$, then A and $E(A)$ agree except in the i-th row. We have

$$a_1 r_1 + \cdots + a_i r_i + \cdots + a_m r_m = a_1 r_1 + \cdots + (a_i a^{-1})(a r_i) + \cdots + a_m r_m.$$

Note that the vectors $r_1, \ldots, a r_i, \ldots, r_m$ are the rows of $E(A)$. So this equation says any linear combination of the rows $r_1, \ldots, r_m$ of A is a linear combination of the rows of $E(A)$. This means that the row space of A is contained in the row space of $E(A)$.

Going the other way, a linear combination of the rows $r_1, \ldots, a r_i, \ldots, r_m$ of $E(A)$ looks like this:

$$b_1 r_1 + \cdots + b_i (a r_i) + \cdots + b_m r_m.$$

But this is a linear combination of the rows $r_1, \ldots, r_m$ of A, so the row space of $E(A)$ is contained in the row space of A. Hence, A and $E(A)$ have the same row space.

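Finally, suppose E is a row operation of the form $r_i \to r_i + a r_j$, where $a \in F$. Then

$$a_1 r_1 + \cdots + a_i r_i + \cdots + a_j r_j + \cdots + a_m r_m = a_1 r_1 + \cdots + a_i (r_i + a r_j) + \cdots + (a_j - a_i a) r_j + \cdots + a_m r_m.$$

This shows that the row space of A is contained in the row space of $E(A)$.

Conversely,

$$a_1 r_1 + \cdots + a_i (r_i + a r_j) + \cdots + a_j r_j + \cdots + a_m r_m = a_1 r_1 + \cdots + a_i r_i + \cdots + (a_j + a_i a) r_j + \cdots + a_m r_m.$$

Hence, the row space of $E(A)$ is contained in the row space of A. $\square$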
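To see the last case of the proof with actual numbers, here is a small worked instance. The matrix, the row operation, and the coefficients are made up for illustration. Take the real matrix with rows $r_1 = (1, 2)$ and $r_2 = (3, 4)$, and let E be the operation $r_1 \to r_1 + 2 r_2$:

$$A = \left[\matrix{1 & 2 \cr 3 & 4 \cr}\right], \qquad E(A) = \left[\matrix{7 & 10 \cr 3 & 4 \cr}\right].$$

The vector $5 r_1 + r_2 = (8, 14)$ is in the row space of A. Rewriting it in terms of the rows of $E(A)$, the coefficient of $r_2$ changes from $1$ to $1 - 5 \cdot 2 = -9$:

$$5 \cdot (7, 10) + (-9) \cdot (3, 4) = (35 - 27, 50 - 36) = (8, 14).$$

So the same vector is a linear combination of the rows of $E(A)$.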

Definition. Two matrices over a field are row equivalent if one can be obtained from the other via elementary row operations.

Since row operations preserve row space, row equivalent matrices have the same row space. In particular, a matrix and its row reduced echelon form have the same row space.

The next proposition describes some of the components of a vector in the row space of a row-reduced echelon matrix R. Such a vector is a linear combination of the nonzero rows of R.

Proposition. Let $R = (r_{ij})$ be a row reduced echelon matrix over a field with nonzero rows $r_1, \ldots, r_p$. Suppose the leading entries of R occur at

$$(1, j_1), (2, j_2), \ldots, (p, j_p), \quad\hbox{where}\quad j_1 < j_2 < \cdots < j_p.$$

Suppose $v = (v_1, \ldots, v_n)$ and

$$v = a_1 r_1 + \cdots + a_p r_p.$$

Then $v_{j_k} = a_k$ for all k.

Proof.

$$v_{j_k} = a_1 r_{1 j_k} + \cdots + a_p r_{p j_k}.$$

But the only nonzero element in column $j_k$ is the leading entry $r_{k j_k} = 1$. Therefore, the only nonzero term in the sum is $a_k r_{k j_k} = a_k$. $\square$

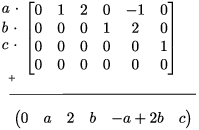

This result looks a bit technical, but it becomes obvious if you consider an example. Here's a row reduced echelon matrix over $\mathbb{R}$:

![$$\left[\matrix{ 0 & 1 & 2 & 0 & -1 & 0 \cr 0 & 0 & 0 & 1 & 2 & 0 \cr 0 & 0 & 0 & 0 & 0 & 1 \cr 0 & 0 & 0 & 0 & 0 & 0 \cr}\right].$$](row-space53.png)

Here's a vector in the row space, a linear combination of the nonzero rows:

$$v = a \cdot (0, 1, 2, 0, -1, 0) + b \cdot (0, 0, 0, 1, 2, 0) + c \cdot (0, 0, 0, 0, 0, 1) = (0, a, 2 a, b, -a + 2 b, c).$$

The leading entries occur in columns $j_1 = 2$, $j_2 = 4$, and $j_3 = 6$. The second, fourth, and sixth components of the vector are

$$v_2 = a, \quad v_4 = b, \quad v_6 = c.$$

You can see from the picture why this happens. The coefficients a, b, c multiply the leading entries. The leading entries are all 1's, and they're the only nonzero elements in their columns. So in the components of the vector corresponding to those columns, you get a, b, and c.
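The same observation can be checked numerically. The sketch below uses the nonzero rows of the matrix above; the coefficient values 3, -2, 5 and the helper names `leading_columns` and `combine` are my own choices for illustration, not part of the notes.

```python
# Nonzero rows of the row reduced echelon matrix from the example.
R = [
    [0, 1, 2, 0, -1, 0],
    [0, 0, 0, 1, 2, 0],
    [0, 0, 0, 0, 0, 1],
]

def leading_columns(rows):
    """0-based column index of the first nonzero entry in each (nonzero) row."""
    return [next(j for j, x in enumerate(row) if x != 0) for row in rows]

def combine(coeffs, rows):
    """Linear combination coeffs[0]*rows[0] + coeffs[1]*rows[1] + ..."""
    return [sum(c * row[j] for c, row in zip(coeffs, rows))
            for j in range(len(rows[0]))]

coeffs = [3, -2, 5]            # hypothetical values for a, b, c
v = combine(coeffs, R)         # a vector in the row space
recovered = [v[j] for j in leading_columns(R)]

print(v)          # [0, 3, 6, -2, -7, 5]
print(recovered)  # [3, -2, 5]
```

Reading off the components in the leading-entry columns recovers the coefficients, which is exactly what the proposition says. Note that the code uses 0-based column indices, so columns 2, 4, 6 appear as 1, 3, 5.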

Corollary. The nonzero rows of a row reduced echelon matrix over a field are independent.

Proof. Suppose R is a row reduced echelon matrix with nonzero rows $r_1, \ldots, r_p$. Suppose the leading entries of R occur at $(1, j_1), (2, j_2), \ldots, (p, j_p)$, where $j_1 < j_2 < \cdots < j_p$. Suppose

$$0 = a_1 r_1 + \cdots + a_p r_p.$$

The proposition implies that $a_k = 0$ for all k, since $a_k$ is the $j_k$-th component of the zero vector. Therefore, $r_1, \ldots, r_p$ are independent. $\square$

Corollary. The nonzero rows of a row reduced echelon matrix over a field form a basis for the row space of the matrix.

Proof. The nonzero rows span the row space, and are independent, by the preceding corollary. $\square$

Algorithm. Let V be a finite-dimensional vector space, and let $v_1, \ldots, v_m$ be vectors in V. Find a basis for $W = \langle v_1, \ldots, v_m \rangle$, the subspace spanned by the $v_i$.

Let M be the matrix whose i-th row is $v_i$. The row space of M is W. Let R be a row-reduced echelon matrix which is row equivalent to M. Then R and M have the same row space W, and the nonzero rows of R form a basis for W. $\square$

Example. Consider the vectors $v_1 = (1, 0, 1, 1)$, $v_2 = (-2, 1, 1, 0)$, and $v_3 = (7, -2, 1, 3)$ in $\mathbb{R}^4$. Find a basis for the subspace $\langle v_1, v_2, v_3 \rangle$ spanned by the vectors.

Construct a matrix with the $v_i$ as its rows and row reduce:

![$$\left[\matrix{ 1 & 0 & 1 & 1 \cr -2 & 1 & 1 & 0 \cr 7 & -2 & 1 & 3 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 1 & 1 \cr 0 & 1 & 3 & 2 \cr 0 & 0 & 0 & 0 \cr}\right]$$](row-space79.png)

The vectors $(1, 0, 1, 1)$ and $(0, 1, 3, 2)$ form a basis for $\langle v_1, v_2, v_3 \rangle$. $\square$
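The basis-finding algorithm can be sketched in Python as follows. This is only one way to do it: it uses exact rational arithmetic from the standard library, and the function names `rref` and `span_basis` are my own, not something from the notes.

```python
from fractions import Fraction

def rref(rows):
    """Return the row reduced echelon form of a matrix given as a list of rows."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivot_row = 0
    for col in range(len(m[0])):
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        src = next((r for r in range(pivot_row, len(m)) if m[r][col] != 0), None)
        if src is None:
            continue
        m[pivot_row], m[src] = m[src], m[pivot_row]        # swap two rows
        lead = m[pivot_row][col]
        m[pivot_row] = [x / lead for x in m[pivot_row]]    # scale to get a leading 1
        for r in range(len(m)):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]                          # clear the rest of the column
                m[r] = [x - factor * y for x, y in zip(m[r], m[pivot_row])]
        pivot_row += 1
    return m

def span_basis(vectors):
    """Nonzero rows of the RREF: a basis for the span of the given vectors."""
    return [row for row in rref(vectors) if any(x != 0 for x in row)]

basis = span_basis([[1, 0, 1, 1], [-2, 1, 1, 0], [7, -2, 1, 3]])
print(len(basis))   # 2
```

For the three vectors in the example, `basis` consists of the rows $(1, 0, 1, 1)$ and $(0, 1, 3, 2)$, stored as `Fraction` values. Only the three elementary row operations are used, so by the lemma the row space never changes along the way.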

Example. Determine the dimension of the subspace of $\mathbb{R}^3$ spanned by $(1, 2, -1)$, $(1, 1, 1)$, and $(2, -2, 1)$.

Form a matrix using the vectors as the rows and row reduce:

![$$\left[\matrix{ 1 & 2 & -1 \cr 1 & 1 & 1 \cr 2 & -2 & 1 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right]$$](row-space87.png)

The subspace has dimension 3, since the row reduced echelon matrix has 3 nonzero rows. $\square$

Definition. The rank of a matrix over a field is the dimension of its row space.

Example. Find the rank of the following matrix over $\mathbb{Z}_5$:

![$$\left[\matrix{ 1 & 4 & 2 & 1 \cr 3 & 3 & 1 & 2 \cr 0 & 1 & 0 & 4 \cr}\right].$$](row-space89.png)

Row reduce the matrix:

![$$\left[\matrix{ 1 & 4 & 2 & 1 \cr 3 & 3 & 1 & 2 \cr 0 & 1 & 0 & 4 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 2 & 0 \cr 0 & 1 & 0 & 4 \cr 0 & 0 & 0 & 0 \cr}\right]$$](row-space90.png)

The row reduced echelon matrix has 2 nonzero rows. Therefore, the original matrix has rank 2. $\square$
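Row reduction over a finite field works the same way, except that arithmetic is done mod p and division is replaced by multiplying by an inverse. Here's a rough sketch, assuming p is prime; the names `rref_mod_p` and `rank_mod_p` are hypothetical, and `pow(x, -1, p)` requires Python 3.8 or later.

```python
def rref_mod_p(rows, p):
    """Row reduce a matrix over Z_p (p prime); entries are integers mod p."""
    m = [[x % p for x in row] for row in rows]
    pivot_row = 0
    for col in range(len(m[0])):
        src = next((r for r in range(pivot_row, len(m)) if m[r][col] != 0), None)
        if src is None:
            continue
        m[pivot_row], m[src] = m[src], m[pivot_row]
        inv = pow(m[pivot_row][col], -1, p)     # multiplicative inverse mod p
        m[pivot_row] = [(x * inv) % p for x in m[pivot_row]]
        for r in range(len(m)):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [(x - factor * y) % p for x, y in zip(m[r], m[pivot_row])]
        pivot_row += 1
    return m

def rank_mod_p(rows, p):
    """Number of nonzero rows in the row reduced echelon form over Z_p."""
    return sum(1 for row in rref_mod_p(rows, p) if any(row))

M = [[1, 4, 2, 1], [3, 3, 1, 2], [0, 1, 0, 4]]
print(rref_mod_p(M, 5))   # [[1, 0, 2, 0], [0, 1, 0, 4], [0, 0, 0, 0]]
print(rank_mod_p(M, 5))   # 2
```

The same integer matrix can have a different rank over a different field, so the modulus has to be supplied explicitly.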

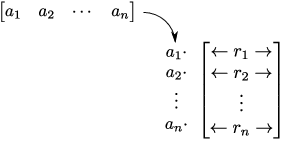

I'll need the following fact about matrix multiplication for the proof of the next lemma. Consider the following multiplication:

![$$\left[\matrix{a_1 & a_2 & \cdots & a_n \cr}\right] \left[\matrix{\leftarrow & r_1 & \rightarrow \cr \leftarrow & r_2 & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right].$$](row-space91.png)

In doing the multiplication, each $a_i$ multiplies the corresponding row $r_i$. The result is a linear combination of the $r_i$'s with the $a_i$'s as coefficients.

Therefore,

![$$\left[\matrix{ a_1 & a_2 & \cdots & a_n \cr}\right] \left[\matrix{\leftarrow & r_1 & \rightarrow \cr \leftarrow & r_2 & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = \left[\matrix{a_1 r_1 + a_2 r_2 + \cdots + a_n r_n \cr}\right].$$](row-space97.png)

If instead of a single row vector on the left I have an entire matrix, here's what I get:

![$$\left[\matrix{ a_{1 1} & a_{1 2} & \cdots & a_{1 n} \cr a_{2 1} & a_{2 2} & \cdots & a_{2 n} \cr \vdots & \vdots & & \vdots \cr a_{m 1} & a_{m 2} & \cdots & a_{mn } \cr}\right] \left[\matrix{ \leftarrow & r_1 & \rightarrow \cr \leftarrow & r_2 & \rightarrow \cr & \vdots & \cr \leftarrow & r_n & \rightarrow \cr}\right] = \left[\matrix{ \leftarrow & a_{1 1} r_1 + a_{1 2} r_2 + \cdots + a_{1 n} r_n & \rightarrow \cr \leftarrow & a_{2 1} r_1 + a_{2 2} r_2 + \cdots + a_{2 n} r_n & \rightarrow \cr & \vdots & \cr \leftarrow & a_{m 1} r_1 + a_{m 2} r_2 + \cdots + a_{m n} r_n & \rightarrow \cr}\right].$$](row-space98.png)

Hence, the rows of the product are linear combinations of the rows $r_1$, $r_2$, ..., $r_n$.
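This fact about matrix multiplication is easy to check by machine. In the sketch below, the matrices `A` and `R` are made-up examples and the helpers `matmul` and `combine` are my own names; the point is only that row i of the product equals the linear combination of the rows of `R` with coefficients taken from row i of `A`.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def combine(coeffs, rows):
    """Linear combination coeffs[0]*rows[0] + coeffs[1]*rows[1] + ..."""
    return [sum(c * row[j] for c, row in zip(coeffs, rows))
            for j in range(len(rows[0]))]

A = [[2, -1, 0],
     [1, 1, 3]]             # hypothetical 2 x 3 matrix of coefficients
R = [[1, 0, 1, 1],
     [0, 1, 3, 2],
     [4, 0, 0, 5]]          # hypothetical rows r_1, r_2, r_3

product = matmul(A, R)
print(product)   # [[2, -1, -1, 0], [13, 1, 4, 18]]

# Row i of the product is the combination of the rows of R using row i of A.
print(all(product[i] == combine(A[i], R) for i in range(len(A))))   # True
```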

Proposition. Let M and N be matrices over a field F which are compatible for multiplication. Then

$$\operatorname{rank}(MN) \le \operatorname{rank} N.$$

Proof. The preceding discussion shows that the rows of $MN$ are linear combinations of the rows of N. Therefore, the rows of $MN$ are all contained in the row space of N.

The row space of N is a subspace, so it's closed under taking linear combinations of vectors. Hence, any linear combination of the rows of $MN$ is in the row space of N. Therefore, the row space of $MN$ is contained in the row space of N.

From this, it follows that the dimension of the row space of $MN$ is less than or equal to the dimension of the row space of N; that is, $\operatorname{rank}(MN) \le \operatorname{rank} N$. $\square$
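The inequality can be strict. Here is a small illustration with real matrices that I've made up for the purpose:

$$M = \left[\matrix{1 & 1 \cr 1 & 1 \cr}\right], \quad N = \left[\matrix{1 & 0 \cr 0 & 1 \cr}\right], \quad MN = \left[\matrix{1 & 1 \cr 1 & 1 \cr}\right].$$

Both rows of $MN$ equal $(1, 1) = 1 \cdot (1, 0) + 1 \cdot (0, 1)$, a linear combination of the rows of N. The row space of $MN$ is spanned by $(1, 1)$, so $\operatorname{rank}(MN) = 1$, while $\operatorname{rank} N = 2$. On the other hand, taking M to be the identity matrix gives $MN = N$, so equality can also occur.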

I already have one algorithm for testing whether a set of vectors in $F^n$ is independent. That algorithm involves constructing a matrix with the vectors as the columns, then row reducing. The algorithm will also produce a linear combination of the vectors which adds up to the zero vector if the set is dependent.

If all you care about is whether or not a set of vectors in $F^n$ is independent (i.e. you don't care about a possible dependence relation), the results on rank can be used to give an alternative algorithm. In this approach, you construct a matrix with the given vectors as the rows.

Algorithm. Let V be a finite-dimensional vector space, and let $v_1, \ldots, v_m$ be vectors in V. Determine whether the set $\{v_1, \ldots, v_m\}$ is independent.

Let M be the matrix whose i-th row is $v_i$. Let R be a row reduced echelon matrix which is row equivalent to M. If R has m nonzero rows, then $\{v_1, \ldots, v_m\}$ is independent. Otherwise, the set is dependent.

If R has p nonzero rows, then R and M have rank p. (They have the same rank, because they have the same row space.) Suppose $p = m$. Since $\{v_1, \ldots, v_m\}$ spans the row space, some subset of $\{v_1, \ldots, v_m\}$ is a basis. However, a basis must contain $p = m$ elements. Therefore, $\{v_1, \ldots, v_m\}$ must be independent.

Any independent subset of the row space must contain at most $p$ elements. Hence, if $m > p$, $\{v_1, \ldots, v_m\}$ must be dependent. $\square$

Example. Determine whether the vectors $v_1 = (1, 0, 1, 1)$, $v_2 = (-2, 1, 1, 0)$, and $v_3 = (7, -2, 1, 3)$ in $\mathbb{R}^4$ are independent.

Form a matrix with the vectors as the rows and row reduce:

![$$\left[\matrix{ 1 & 0 & 1 & 1 \cr -2 & 1 & 1 & 0 \cr 7 & -2 & 1 & 3 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 1 & 1 \cr 0 & 1 & 3 & 2 \cr 0 & 0 & 0 & 0 \cr}\right]$$](row-space127.png)

The row reduced echelon matrix has only two nonzero rows. Hence, the vectors are dependent. $\square$
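If only a yes-or-no answer is needed, the independence test reduces to comparing the rank with the number of vectors. The sketch below departs slightly from the algorithm as stated: it stops at a row echelon form (forward elimination only) rather than the row reduced echelon form, which is enough because both have the same number of nonzero rows. The names `rank` and `is_independent` are my own.

```python
from fractions import Fraction

def rank(rows):
    """Rank via forward Gaussian elimination with exact rational arithmetic."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0                                   # number of pivots found so far
    for col in range(len(m[0])):
        src = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if src is None:
            continue
        m[r], m[src] = m[src], m[r]
        for i in range(r + 1, len(m)):      # clear the entries below the pivot
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r

def is_independent(vectors):
    """The vectors are independent exactly when the rank equals their number."""
    return rank(vectors) == len(vectors)

print(is_independent([[1, 0, 1, 1], [-2, 1, 1, 0], [7, -2, 1, 3]]))   # False
print(is_independent([[1, 2, -1], [1, 1, 1], [2, -2, 1]]))            # True
```

Unlike the column-based algorithm, this does not produce a dependence relation when the answer is False.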

I already know that every matrix can be row reduced to a row reduced echelon matrix. The next result completes the discussion by showing that the row reduced echelon form is unique.

Proposition. Every matrix over a field can be row reduced to a unique row reduced echelon matrix.

Proof. Suppose M row reduces to R, a row reduced echelon matrix with nonzero rows $r_1, \ldots, r_p$. Suppose the leading coefficients of R occur at $(1, j_1), (2, j_2), \ldots, (p, j_p)$, where $j_1 < j_2 < \cdots < j_p$.

Let W be the row space of R and let $v = (v_1, \ldots, v_n) \in W$. Since $r_1, \ldots, r_p$ span the row space W, we have

$$v = a_1 r_1 + \cdots + a_p r_p.$$

Claim: The first nonzero component of v must occur in column $j_k$, for some $k = 1, 2, \ldots, p$.

Suppose $a_k$ is the first $a_i$ which is nonzero. Since the $a_i r_i$ terms before $a_k r_k$ are zero, we have

$$v = a_k r_k + a_{k+1} r_{k+1} + \cdots + a_p r_p.$$

The first nonzero element of $r_k$ is a 1 at $(k, j_k)$. The first nonzero element in each of $r_{k+1}, \ldots, r_p$ lies to the right of column $j_k$. Thus, $v_j = 0$ for $j < j_k$, and $v_{j_k} = a_k$. Evidently, this is the first nonzero component of v. This proves the claim.

This establishes that if a row reduced echelon matrix $R'$ is row equivalent to M, its leading coefficients must lie in the same columns as those of R. For the rows of $R'$ are elements of W, and the claim applies.

Next, I'll show that the nonzero rows of $R'$ are the same as the nonzero rows of R.

Consider, for instance, the first nonzero rows $r_1$ and $r_1'$ of R and $R'$. Their first nonzero components are 1's lying in column $j_1$. Moreover, both $r_1$ and $r_1'$ have zeros in columns $j_2$, $j_3$, ... .

Suppose $r_1 \ne r_1'$. Then $r_1 - r_1'$ is a nonzero vector in W whose first nonzero component is not in column $j_1$, $j_2$, ..., which is a contradiction.

The same argument applies to show that $r_k = r_k'$ for all k. Therefore, $R = R'$. $\square$

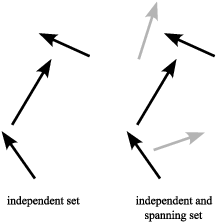

In my discussion of bases, I showed that every independent set is a subset of a basis. To put it another way, you can add vectors to an independent set to get a basis.

Here's how to find specific vectors to add to an independent set to get a basis.

Example. The following set of vectors in $\mathbb{R}^5$ is independent:

$$\{(2, -4, 1, 0, 8), (-1, 2, -1, -1, -4), (2, -4, 1, 1, 7)\}.$$

Add vectors to the set to make a basis for $\mathbb{R}^5$.

If I make a matrix with these vectors as rows and row reduce, the row reduced echelon form will have the same row space (i.e. the same span) as the original set of vectors:

![$$\left[\matrix{ 2 & -4 & 1 & 0 & 8 \cr -1 & 2 & -1 & -1 & -4 \cr 2 & -4 & 1 & 1 & 7 \cr}\right] \quad \to \quad \left[\matrix{ 1 & -2 & 0 & 0 & 3 \cr 0 & 0 & 1 & 0 & 2 \cr 0 & 0 & 0 & 1 & -1 \cr}\right]$$](row-space168.png)

Since there are three nonzero rows and the original set had three vectors, the original set of vectors is indeed independent.

By examining the row reduced echelon form, I see that the vectors $(0, 1, 0, 0, 0)$ and $(0, 0, 0, 0, 1)$ will not be linear combinations of the others. Reason: A nonzero linear combination of the rows of the row reduced echelon form must have a nonzero entry in at least one of the first, third, or fourth columns, since those are the columns containing the leading entries.

In other words, I'm choosing standard basis vectors with 1's in positions not occupied by leading entries in the row reduced echelon form. Therefore, I can add $(0, 1, 0, 0, 0)$ and $(0, 0, 0, 0, 1)$ to the set and get a new independent set:

$$\{(2, -4, 1, 0, 8), (-1, 2, -1, -1, -4), (2, -4, 1, 1, 7), (0, 1, 0, 0, 0), (0, 0, 0, 0, 1)\}.$$

There are 5 vectors in this set, so it is a basis for $\mathbb{R}^5$. $\square$
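The procedure in this example can be sketched in code: find the columns containing leading entries, then add a standard basis vector for every other column. The sketch below assumes the input vectors are independent and raises an error otherwise. It uses forward elimination only, since the leading entries of a row echelon form sit in the same columns as those of the row reduced echelon form. The names `pivot_columns` and `extend_to_basis` are hypothetical.

```python
from fractions import Fraction

def pivot_columns(rows):
    """0-based columns that hold leading entries after Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    for col in range(len(m[0])):
        r = len(pivots)                     # next row that needs a pivot
        src = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if src is None:
            continue
        m[r], m[src] = m[src], m[r]
        for i in range(r + 1, len(m)):      # clear the entries below the pivot
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        pivots.append(col)
    return pivots

def extend_to_basis(vectors):
    """Append the standard basis vector e_j for each non-pivot column j."""
    n = len(vectors[0])
    pivots = pivot_columns(vectors)
    if len(pivots) != len(vectors):
        raise ValueError("input vectors are not independent")
    extra = [[1 if k == j else 0 for k in range(n)]
             for j in range(n) if j not in pivots]
    return [list(v) for v in vectors] + extra

S = [[2, -4, 1, 0, 8], [-1, 2, -1, -1, -4], [2, -4, 1, 1, 7]]
print(pivot_columns(S))          # [0, 2, 3]
print(extend_to_basis(S)[3:])    # [[0, 1, 0, 0, 0], [0, 0, 0, 0, 1]]
```

The pivot columns 0, 2, 3 correspond to the first, third, and fourth columns in the text, so the added vectors are the ones with 1's in the second and fifth positions.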

Copyright 2023 by Bruce Ikenaga