If you take a set of vectors in a vector space, there's no reason why

that set of vectors should be a subspace. For instance, consider the

following vectors in the ![]() -vector space

-vector space ![]() :

:

![]()

Now ![]() , which is in the set. But

, which is in the set. But ![]() is not in the set, so the set is

not closed under vector addition. And

is not in the set, so the set is

not closed under vector addition. And ![]() is

not in the set, so the set is not closed under scalar multiplication.

The set is not a subspace of

is

not in the set, so the set is not closed under scalar multiplication.

The set is not a subspace of ![]() .

.

Suppose we try to fix this by finding a subspace of ![]() which contains the three vectors. Well, that's easy: We could take

which contains the three vectors. Well, that's easy: We could take

![]() ! This would work for any set of vectors in

! This would work for any set of vectors in

![]() , but for that reason, it isn't a very interesting

answer.

, but for that reason, it isn't a very interesting

answer.

It might be better to ask for the "smallest" subspace of

![]() which contains the three vectors. This set will be

called the span of the given set of vectors.

We might approach this by asking: What vectors do we need to add to

the set of three vectors to make it a subspace? Since subspaces are

closed under vector addition, we'll need to throw in all the vectors

which you get by adding the three vectors. You can check that we'd

need to throw in two more vectors, so we now have 5. But subspaces

are also closed under scalar multiplication, so we need to throw in

multiples of these 5 vectors by every element of

which contains the three vectors. This set will be

called the span of the given set of vectors.

We might approach this by asking: What vectors do we need to add to

the set of three vectors to make it a subspace? Since subspaces are

closed under vector addition, we'll need to throw in all the vectors

which you get by adding the three vectors. You can check that we'd

need to throw in two more vectors, so we now have 5. But subspaces

are also closed under scalar multiplication, so we need to throw in

multiples of these 5 vectors by every element of ![]() .

Now we have an infinite number of vectors. Oops! We need to ensure

that the set is closed under addition, so we need to go back and

throw in all sums of the infinitely many vectors we have so far.

Then, since we threw in some additional vectors, we need to take

scalar multiples of those, and ... .

.

Now we have an infinite number of vectors. Oops! We need to ensure

that the set is closed under addition, so we need to go back and

throw in all sums of the infinitely many vectors we have so far.

Then, since we threw in some additional vectors, we need to take

scalar multiples of those, and ... .

Our approach is a reasonable one, but we clearly need a more systematic way of carrying it out. We will see how to do it in this section.

Building subspaces involves forming sums and scalar multiples. Rather than thinking of doing these two operations separately, we'll define a concept which does both at once.

Definition. If ![]() ,

, ![]() ,

...,

,

..., ![]() are vectors in a vector space V over a field F, a linear combination of the v's is a vector

are vectors in a vector space V over a field F, a linear combination of the v's is a vector

![]()

Notice that if you take two vectors ![]() and

and ![]() and take the scalars

to be

and take the scalars

to be ![]() and

and ![]() , you get

, you get ![]() , which is vector addition.

And if you just take one vector

, which is vector addition.

And if you just take one vector ![]() , then

, then ![]() represents a

scalar multiple of that vector. Thus, the idea of a linear

combination contains the operations of vector addition and scalar

multiplication, but allows us to do many such operations all at once.

represents a

scalar multiple of that vector. Thus, the idea of a linear

combination contains the operations of vector addition and scalar

multiplication, but allows us to do many such operations all at once.

Let's see a numerical example. Take ![]() and

and ![]() in

in ![]() .

Here is a linear combination of u and v:

.

Here is a linear combination of u and v:

![]()

![]() is also a linear combination of u

and v. And u and v are themselves linear combinations of u and v, as

is the zero vector:

is also a linear combination of u

and v. And u and v are themselves linear combinations of u and v, as

is the zero vector:

![]()

On the other hand, there are vectors in ![]() which are

not linear combinations of

which are

not linear combinations of ![]() and

and ![]() . Do you see how this pair is different from the

first?

. Do you see how this pair is different from the

first?

Definition. If S is a set of vectors in a

vector space V, the span ![]() of S is

the set of all linear combinations of vectors in S.

of S is

the set of all linear combinations of vectors in S.

Remark. ![]() is also referred to as

the subspace generated by S.

is also referred to as

the subspace generated by S.

If S is a subset of a subspace W, then S spans

W (or S is a spanning set for W, or S generates W) if ![]() .

.

If S is a finite set of vectors, we could just describe ![]() as the set of all linear combinations of the

elements of S. If S is infinite, a particular linear combination of

vectors from S involves finitely many vectors from S --- we

"grab" finitely many vectors from S at a time --- but we're

doing this in all possible ways.

as the set of all linear combinations of the

elements of S. If S is infinite, a particular linear combination of

vectors from S involves finitely many vectors from S --- we

"grab" finitely many vectors from S at a time --- but we're

doing this in all possible ways.

Note that ![]() . For if s is a vector in S, then

. For if s is a vector in S, then

![]() is a linear combination of a vector from S, so it is

in

is a linear combination of a vector from S, so it is

in ![]() .

.

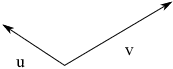

Here's a pictorial example in ![]() . Take two vectors u and v:

. Take two vectors u and v:

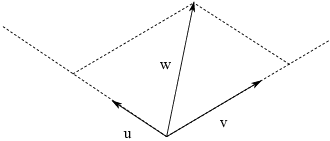

It turns out that the span of u and v is all of ![]() ; that is, if

; that is, if ![]() , then w can be written as a linear combination of u

and v. To see why this is reasonable, take a vector w and

"project" w onto the lines containing the vectors u and v:

, then w can be written as a linear combination of u

and v. To see why this is reasonable, take a vector w and

"project" w onto the lines containing the vectors u and v:

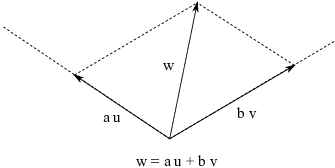

I can scale u up by multiplying by an appropriate number to get a

vector ![]() which is the projection of w on the line of u.

Likewise, I can scale v up by multiplying by an appropriate number to

get a vector

which is the projection of w on the line of u.

Likewise, I can scale v up by multiplying by an appropriate number to

get a vector ![]() which is the projection of w on the line of v.

which is the projection of w on the line of v.

As you can see in the picture, w is the diagonal of the parallelogram

whose sides are ![]() and

and ![]() , so

, so ![]() .

.

Try this with some other vectors in place of w. Of course, a picture isn't a proof, but this example should help you get an idea of what the span of a set of vectors means geometrically.

Theorem. If S is a subset of a vector space V,

the span ![]() of S is a subspace of V which contains S.

of S is a subspace of V which contains S.

Proof. We already observed that ![]() .

.

Here are typical elements of the span of S:

![]()

Here the j's and k's are scalars and the u's and v's are elements of S.

Take two elements of the span and add them:

![]()

This sum is an element of the span, because it's a sum of vectors in S, each multiplied by a scalar --- that is, a linear combination of elements of S. Thus, the span is closed under taking sums.

Take an element of the span and multiply it by a scalar:

![]()

This is an element of the span, because it's a linear combination of elements of S. Thus, the span is closed under scalar multiplication.

Therefore, the span is a subspace.![]()

Example. Prove that the span of ![]() and

and ![]() in

in ![]() is

is

![]()

To show that two sets are equal, you need to show that each is contained in the other. To do this, take a typical element of the first set and show that it's in the second set. Then take a typical element of the second set and show that it's in the first set.

Let W be the span of ![]() and

and ![]() in

in ![]() . A typical

element of W is a linear combination of the two vectors:

. A typical

element of W is a linear combination of the two vectors:

![]()

Since the sum is a vector of the form ![]() for

for ![]() , it is in

V. This proves that

, it is in

V. This proves that ![]() .

.

Now let ![]() . I have to show that this vector is a

linear combination of

. I have to show that this vector is a

linear combination of ![]() and

and ![]() . This

means that I have to find real numbers x and y such that

. This

means that I have to find real numbers x and y such that

![]()

If I write the vectors as column vectors, I get

![$$x \cdot \left[\matrix{3 \cr 1 \cr 0 \cr}\right] + y \cdot \left[\matrix{2 \cr 1 \cr 0 \cr}\right] = \left[\matrix{a \cr b \cr 0 \cr}\right].$$](span71.png)

I can write this as the matrix equation

![$$\left[\matrix{ 3 & 2 \cr 1 & 1 \cr 0 & 0 \cr}\right] \left[\matrix{x \cr y \cr}\right] = \left[\matrix{a \cr b \cr 0 \cr}\right].$$](span72.png)

If I solve this system by row reduction, I get

![$$\left[\matrix{ 3 & 2 & a \cr 1 & 1 & b \cr 0 & 0 & 0 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & a - 2 b \cr 0 & 1 & -a + 3 b \cr 0 & 0 & 0 \cr}\right]$$](span73.png)

The solution is

![]()

In other words,

![]()

Since ![]() is a linear combination of

is a linear combination of ![]() and

and ![]() , it follows that

, it follows that ![]() . This proves that

. This proves that ![]() .

.

Since ![]() and

and ![]() , I have

, I have ![]() .

.![]()

Example. Let

![]()

(a) Prove or disprove: ![]() is in the span of S.

is in the span of S.

(b) Prove or disprove: ![]() is in the span of S.

is in the span of S.

(a) The vector ![]() is in the span of

is in the span of ![]() and

and ![]() if it can be written as a linear combination of

if it can be written as a linear combination of ![]() and

and ![]() . So I try to find numbers a and b such that

. So I try to find numbers a and b such that

![]()

I'll convert this to a matrix equation by writing the vectors as column vectors:

![$$a \cdot \left[\matrix{1 \cr 1 \cr 0 \cr}\right] + b \cdot \left[\matrix{0 \cr 1 \cr 1 \cr}\right] = \left[\matrix{3 \cr -1 \cr -4 \cr}\right].$$](span93.png)

By using column vectors (rather than row vectors), we get a familiar kind of matrix equation:

![$$\left[\matrix{1 & 0 \cr 1 & 1 \cr 0 & 1 \cr}\right] \left[\matrix{a \cr b \cr}\right] = \left[\matrix{3 \cr -1 \cr -4 \cr}\right].$$](span94.png)

We can solve for a and b by row reducing the augmented matrix:

![$$\left[\matrix{ 1 & 0 & 3 \cr 1 & 1 & -1 \cr 0 & 1 & -4 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 3 \cr 0 & 1 & -4 \cr 0 & 0 & 0 \cr}\right]$$](span95.png)

The solution is ![]() ,

, ![]() . That is,

. That is,

![]()

The vector ![]() is in the span of S.

is in the span of S.

(b) Following the approach we took in part (a), we'll convert the vectors to column vectors to get the equation

![$$a \cdot \left[\matrix{1 \cr 1 \cr 0 \cr}\right] + b \cdot \left[\matrix{0 \cr 1 \cr 1 \cr}\right] = \left[\matrix{5 \cr -2 \cr 6 \cr}\right].$$](span100.png)

This is equivalent to the matrix equation

![$$\left[\matrix{1 & 0 \cr 1 & 1 \cr 0 & 1 \cr}\right] \left[\matrix{a \cr b \cr}\right] = \left[\matrix{5 \cr -2 \cr 6 \cr}\right].$$](span101.png)

Row reduce to solve the system:

![$$\left[\matrix{ 1 & 0 & 5 \cr 1 & 1 & -2 \cr 0 & 1 & 6 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right]$$](span102.png)

The last matrix says "![]() ", a contradiction. The

system is inconsistent, so there are no such numbers a and b.

Therefore,

", a contradiction. The

system is inconsistent, so there are no such numbers a and b.

Therefore, ![]() is not in the span of S.

is not in the span of S.![]()

Thus, to determine whether the vector ![]() is in the span of

is in the span of ![]() ,

,

![]() , ...,

, ..., ![]() in

in ![]() , form the augmented matrix

, form the augmented matrix

![$$\left[\matrix{\uparrow & \uparrow & & \uparrow & \uparrow \cr v_1 & v_2 & \cdots & v_m & b \cr \downarrow & \downarrow & & \downarrow & \downarrow \cr}\right]$$](span110.png)

If the system has a solution, b is in the span, and coefficients of a linear combination of the v's which add up to b are given by a solution to the system. If the system has no solutions, then b is not in the span of the v's.

(In a general vector space where vectors may not be "numbers in slots", you have to got back to the definition of spanning set.)

Example. Consider the following set of vectors

in ![]() :

:

![$$\left\{\left[\matrix{1 \cr 0 \cr -1 \cr}\right], \left[\matrix{2 \cr 1 \cr 3 \cr}\right], \left[\matrix{1 \cr 1 \cr -4 \cr}\right]\right\}$$](span112.png)

Prove that the span of the set is all of ![]() .

.

I have to show that any vector ![]() can be written as a

linear combination of the vectors in the set. That is, I must find

real numbers a, b, c such that

can be written as a

linear combination of the vectors in the set. That is, I must find

real numbers a, b, c such that

![$$a \cdot\left[\matrix{1 \cr 0 \cr -1 \cr}\right] + b \cdot\left[\matrix{2 \cr 1 \cr 3 \cr}\right] + c \cdot\left[\matrix{1 \cr 1 \cr -4 \cr}\right] = \left[\matrix{x \cr y \cr z \cr}\right].$$](span115.png)

The matrix equation is

![$$\left[\matrix{ 1 & 2 & 1 \cr 0 & 1 & 1 \cr -1 & 3 & -4 \cr}\right] \left[\matrix{a \cr b \cr c \cr}\right] = \left[\matrix{x \cr y \cr z \cr}\right].$$](span116.png)

Row reduce to solve:

![$$\left[\matrix{ 1 & 2 & 1 & x \cr 0 & 1 & 1 & y \cr -1 & 3 & -4 & z \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 0 & \dfrac{1}{8} (7 x - 11 y - z) \cr \noalign{\vskip2pt} 0 & 1 & 0 & \dfrac{1}{8} (x + 3 y + z) \cr \noalign{\vskip2pt} 0 & 0 & 1 & \dfrac{1}{8} (-x + 5 y - z) \cr}\right]$$](span117.png)

I get the ugly solution

![]()

This shows that, given any vector ![]() , I can find a linear

combination of the original three vectors which equals

, I can find a linear

combination of the original three vectors which equals ![]() .

.

Thus, the span of the original set of three vectors is all of ![]() .

.![]()

Example. Let

![]()

(a) Determine whether ![]() .

.

(b) Determine whether ![]() .

.

(a) I want to determine whether there are numbers ![]() such that

such that

![$$a \cdot \left[\matrix{1 \cr 2 \cr 1 \cr}\right] + b \cdot \left[\matrix{1 \cr 4 \cr 2 \cr}\right] = \left[\matrix{0 \cr 4 \cr 2 \cr}\right].$$](span126.png)

This gives the system

![$$\left[\matrix{1 & 1 \cr 2 & 4 \cr 1 & 2 \cr}\right] \left[\matrix{a \cr b \cr}\right] = \left[\matrix{0 \cr 4 \cr 2 \cr}\right].$$](span127.png)

Form the augmented matrix and row reduce:

![$$\left[\matrix{ 1 & 1 & 0 \cr 2 & 4 & 4 \cr 1 & 2 & 2 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 3 \cr 0 & 1 & 2 \cr 0 & 0 & 0 \cr}\right]$$](span128.png)

The last matrix says ![]() and

and ![]() . Therefore,

. Therefore, ![]() is in the span of S:

is in the span of S:

![$$3 \cdot \left[\matrix{1 \cr 2 \cr 1 \cr}\right] + 2 \cdot \left[\matrix{1 \cr 4 \cr 2 \cr}\right] = \left[\matrix{0 \cr 4 \cr 2 \cr}\right].\quad\halmos$$](span132.png)

(b) I want to determine whether there are numbers ![]() such that

such that

![$$a \cdot \left[\matrix{1 \cr 2 \cr 1 \cr}\right] + b \cdot \left[\matrix{1 \cr 4 \cr 2 \cr}\right] = \left[\matrix{1 \cr 1 \cr 1 \cr}\right].$$](span134.png)

This gives the system

![$$\left[\matrix{1 & 1 \cr 2 & 4 \cr 1 & 2 \cr}\right] \left[\matrix{a \cr b \cr}\right] = \left[\matrix{1 \cr 1 \cr 1 \cr}\right].$$](span135.png)

Form the augmented matrix and row reduce:

![$$\left[\matrix{ 1 & 1 & 1 \cr 2 & 4 & 1 \cr 1 & 2 & 1 \cr}\right] \quad \to \quad \left[\matrix{ 1 & 0 & 0 \cr 0 & 1 & 0 \cr 0 & 0 & 1 \cr}\right]$$](span136.png)

The last row of the row reduced echelon matrix says "![]() ". This contradiction implies that the system is has no

solutions. Therefore,

". This contradiction implies that the system is has no

solutions. Therefore, ![]() is not in the span of S.

is not in the span of S.![]()

In the next example, we go back to the definition of the span of a set of vectors, rather than writing down a matrix equation.

Example. ![]() is the vector

space over

is the vector

space over ![]() of continuous functions

of continuous functions ![]() . Let

. Let

![]()

Show that h is not in ![]() , the span of f and g.

, the span of f and g.

We'll give a proof by contradiction.

Suppose there are numbers ![]() such that

such that

![]()

Set ![]() . We get

. We get

![]()

The equation becomes

![]()

Set ![]() . We get

. We get

![]()

The equation is now

![]()

Finally, set ![]() . We get

. We get

![]()

This contradiction shows there are no numbers ![]() which make

the initial equation true. Hence, h is not in the span of f and

g.

which make

the initial equation true. Hence, h is not in the span of f and

g.![]()

Another definition of the span of a set

There is another way to define the span of a set of vectors. The construction is a little more subtle than the one we gave earlier. It uses the intersection of a (possibly) infinite collection of subspaces, which we know by an earlier result is a subspace.

Let V be a vector space over a field F, and let S be a set of vectors in V. Let

![]()

That is, to form ![]() we intersect all subspaces of V which

contain S. Note that there is at least one subspace that contains S,

namely V itself. We will show that

we intersect all subspaces of V which

contain S. Note that there is at least one subspace that contains S,

namely V itself. We will show that ![]() is just the span

of S, as constructed earlier: The set of all linear combinations of

elements of S.

is just the span

of S, as constructed earlier: The set of all linear combinations of

elements of S.

(By the way, "![]() " is just temporary notation I'm using

for this discussion. After this, I'll just use

" is just temporary notation I'm using

for this discussion. After this, I'll just use ![]() to

denote the span of S.)

to

denote the span of S.)

Theorem. ![]() .

.

Proof. First, the span ![]() is a

subspace of V which contains S. So

is a

subspace of V which contains S. So ![]() is one

of the subspaces being intersected to construct

is one

of the subspaces being intersected to construct ![]() , and hence

, and hence

![]()

To complete the proof, I'll show that ![]() . Take an element of

. Take an element of ![]() , namely

a linear combination of elements of S:

, namely

a linear combination of elements of S:

![]()

Let W be a subspace of V which contains S. Then ![]() , and since W is a subspace,

, and since W is a subspace,

![]()

Thus, ![]() is contained in every

subspace which contains S --- that is, it is contained in every

subspace being intersected to construct

is contained in every

subspace which contains S --- that is, it is contained in every

subspace being intersected to construct ![]() . Hence, it is

contained in

. Hence, it is

contained in ![]() . This proves that

. This proves that ![]() .

.

Therefore, ![]() .

.![]()

If you want to construct an object in math using some "building

blocks", there are often two general ways to do so. The first

way is to start with the building blocks, and combine them to build

the object "from the inside out". This direct approach is

what we did in the definition of ![]() as all linear

combinations of elements of S. It requires that you know how to put

the blocks together to do the "building".

as all linear

combinations of elements of S. It requires that you know how to put

the blocks together to do the "building".

Another approach is to take all objects of the "right kind" which contain the building blocks and find the "smallest". You find the "smallest" such object by intersecting all the objects of the "right kind" containing the building blocks. In this approach, you will usually have to intersect an infinite number of sets. And you will need to show that the intersection of sets of the "right kind" is still a set of the "right kind". This is the approach we used in intersecting all subspaces containing S.

Of course, if you've done things correctly, the two approaches give the same object at the end, as they did here. Both approaches are useful in mathematics.

Copyright 2022 by Bruce Ikenaga