Definition. Let V be a vector space over a field F, and let $W \subset V$, $W \ne \emptyset$. W is a subspace of V if:

(a) If $u, v \in W$, then $u + v \in W$.

(b) If $k \in F$ and $u \in W$, then $k u \in W$.

In other words, W is closed under addition of vectors and under scalar multiplication.

If we draw the vectors as arrows, we can picture the axioms in this way:

Remember that not all vectors can be drawn as arrows, so in general these pictures are just aids to your intuition.

A subspace W of a vector space V is itself a vector space, using the vector addition and scalar multiplication operations from V. If you go through the axioms for a vector space, you'll see that they all hold in W because they hold in V, and W is contained in V. Thus, the subspace axioms simply ensure that the vector addition and scalar multiplication operations from V "don't take you outside of W" when applied to vectors in W.

Remark. If W is a subspace, then axiom (a) says that the sum of two vectors in W is in W. You can show using induction that if $x_1, x_2, \ldots, x_n \in W$, then $x_1 + x_2 + \cdots + x_n \in W$ for any $n \ge 1$.

What do subspaces "look like"?

The subspaces of the plane $\mathbb{R}^2$ are $\{0\}$, $\mathbb{R}^2$, and lines passing through the origin. In the picture below, the lines A and B are subspaces of $\mathbb{R}^2$.

In $\mathbb{R}^3$, the subspaces are $\{0\}$, $\mathbb{R}^3$, and lines or planes passing through the origin.

Similar statements hold for $\mathbb{R}^n$.

We'll see below that a subspace must contain the zero vector, which explains why the examples I just gave are sets which pass through the origin.

As we've seen earlier, you get very different pictures of vectors

when F is a field other than ![]() . In these cases, pictures for

subspaces are also different. For example, consider the following

subspace of

. In these cases, pictures for

subspaces are also different. For example, consider the following

subspace of ![]() :

:

![]()

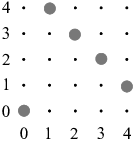

Here's a picture, with the gray dots denoting the 5 points of S:

While 4 of the points lie on a "line", the "line" is not a line through the origin. The origin is in S, but it doesn't lie on the same "line" as the other points.
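Since the field is finite, this example can be checked by direct computation. The Python sketch below is an added illustration, not part of the original notes; it assumes S is the set of scalar multiples of $(1, 4)$ in $\mathbb{Z}_5^2$ (which matches the description of four points on a "line" missing the origin), and the helper name `multiples` is hypothetical. It confirms closure under addition and that the four nonzero points satisfy $x + y = 5$ as ordinary integers.

```python
P = 5  # the field Z_5: integers mod 5

def multiples(v, p=P):
    """Return the set {k*v : k in Z_p} of scalar multiples of v, computed mod p."""
    return {tuple((k * c) % p for c in v) for k in range(p)}

S = multiples((1, 4))
print(sorted(S))  # [(0, 0), (1, 4), (2, 3), (3, 2), (4, 1)]

# Closure under addition: sums of elements of S (mod 5) stay in S.
closed = all(tuple((a + b) % P for a, b in zip(u, v)) in S for u in S for v in S)
print(closed)  # True

# Plotted with ordinary integer coordinates, the nonzero points lie on x + y = 5,
# a "line" that does not pass through (0, 0).
print(all(x + y == 5 for (x, y) in S if (x, y) != (0, 0)))  # True
```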

Or consider the vector space $C(\mathbb{R})$ over $\mathbb{R}$, consisting of continuous functions $\mathbb{R} \to \mathbb{R}$. There is a subspace consisting of all multiples of a single fixed nonzero function f --- so things like $2 f$, $-f$, $0 \cdot f$, and so on. Here's a picture which shows the graphs of some of the elements of this subspace:

Of course, there are actually an infinite number of "graphs" (functions) in this subspace --- I've only drawn a few. You can see our subspace is pretty far from "a line through the origin", even though it consists of all multiples of a single vector.

In what follows, we'll look at properties of subspaces, and discuss how to check whether a set is or is not a subspace.

First, every vector space contains at least two "obvious" subspaces, as described in the next result.

Proposition. If V is a vector space over a field F, then $\{0\}$ and V are subspaces of V.

Proof. I'll do the proof for $\{0\}$ by way of example. First, I have to take two vectors in $\{0\}$ and show that their sum is in $\{0\}$. But $\{0\}$ contains only the zero vector 0, so my "two" vectors are 0 and 0 --- and $0 + 0 = 0$, which is in $\{0\}$.

Next, I have to take $k \in F$ and a vector in $\{0\}$ --- which, as I just saw, must be 0 --- and show that their product is in $\{0\}$. But $k \cdot 0 = 0 \in \{0\}$. This verifies the second axiom, and so $\{0\}$ is a subspace. $\square$

Obviously, the very uninteresting vector space consisting of just a zero vector (i.e. $V = \{0\}$) has only the one subspace $\{0\}$.

If a vector space V is nonzero and one-dimensional --- roughly speaking, if V "looks like" a line --- then $\{0\}$ and V are the only subspaces, and they are distinct.

In this case, V consists of all multiples $k v$ of any nonzero vector $v \in V$ by all scalars $k \in F$.

Beyond those cases, a vector space V always has subspaces other than $\{0\}$ and V. For example, if $\dim V > 1$, take a nonzero vector $x \in V$ and consider the set of all multiples $k x$ of x by scalars $k \in F$. You can check that this is a subspace --- the "line" passing through x.

If you want to show that a subset of a vector space is a subspace, you can combine the verifications for the two subspace axioms into a single verification.

Proposition. Let V be a vector space over a field F, and let W be a nonempty subset of V.

W is a subspace of V if and only if $x, y \in W$ and $k \in F$ implies $k x + y \in W$.

Proof. Suppose W is a subspace of V, and let $x, y \in W$ and $k \in F$. Since W is closed under scalar multiplication, $k x \in W$. Since W is closed under vector addition, $k x + y \in W$.

Conversely, suppose $x, y \in W$ and $k \in F$ implies $k x + y \in W$. Take $k = 1$: Our assumption says that if $x, y \in W$, then $x + y = 1 \cdot x + y \in W$. This proves that W is closed under vector addition.

Next, W contains the zero vector: W is nonempty, so I can pick some $w \in W$, and taking $x = y = w$ and $k = -1$ in our assumption gives $(-1) w + w = 0 \in W$. Now, again in our assumption, take $y = 0$. The assumption then says that if $x \in W$ and $k \in F$, then $k x = k x + 0 \in W$. This proves that W is closed under scalar multiplication. Hence, W is a subspace. $\square$
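Over a finite field, the one-step test involves only finitely many checks, so it can be run exhaustively. Here is a minimal Python sketch, assuming the field is $\mathbb{Z}_p$ for a prime p and that vectors are tuples of residues mod p; the function name `one_step_test` is a hypothetical helper, not anything from these notes.

```python
from itertools import product

def one_step_test(W, p):
    """One-step subspace test for a subset W of Z_p^n (vectors are tuples mod p):
    W must be nonempty, and k*x + y must lie in W for all x, y in W and all k in Z_p."""
    if not W:
        return False
    return all(
        tuple((k * a + b) % p for a, b in zip(x, y)) in W
        for x in W
        for y in W
        for k in range(p)
    )

p = 5
line = {(k % p, (4 * k) % p) for k in range(p)}                 # multiples of (1, 4)
axes = {(x, 0) for x in range(p)} | {(0, y) for y in range(p)}  # union of the two axes
plane = set(product(range(p), repeat=2))                        # all of Z_5^2

print(one_step_test(line, p))      # True
print(one_step_test(axes, p))      # False: 1*(1, 0) + (0, 1) = (1, 1) is missing
print(one_step_test(plane, p))     # True
print(one_step_test({(0, 0)}, p))  # True
```

Brute force like this is only practical for tiny fields; over an infinite field such as $\mathbb{R}$, an argument like the proof above is required.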

Note that the two axioms for a subspace are independent: Both can be true, both can be false, or one can be true and the other false. Hence, some of our examples will ask that you check each axiom separately, proving that it holds if it's true and disproving it by a counterexample if it's false.

Lemma. Let W be a subspace of a vector space V.

(a) The zero vector is in W.

(b) If $x \in W$, then $-x \in W$.

Note: These are not part of the axioms for a subspace: They are properties a subspace must have. So if you are checking the axioms for a subspace, you don't need to check these properties. But on the other hand, if a subset does not have one of these properties (e.g. the subset doesn't contain the zero vector), then it can't be a subspace.

Proof. (a) Take any vector $x \in W$ (which you can do because W is nonempty), and take $0 \in F$. Since W is closed under scalar multiplication, $0 \cdot x \in W$. But $0 \cdot x = 0$, so $0 \in W$.

(b) Since $x \in W$ and $-1 \in F$, $(-1) \cdot x = -x$ is in W. $\square$

Example. Consider the real vector space $\mathbb{R}^2$, the usual x-y plane.

(a) Show that the following sets are subspaces of $\mathbb{R}^2$:

$$W_1 = \{(x, 0) \mid x \in \mathbb{R}\} \quad\text{and}\quad W_2 = \{(0, y) \mid y \in \mathbb{R}\}.$$

(These are just the x- and y-axes.)

(b) Show that the union $W_1 \cup W_2$ is not a subspace.

(a) I'll check that $W_1$ is a subspace. (The proof for $W_2$ is similar.) First, I have to show that two elements of $W_1$ add to an element of $W_1$. An element of $W_1$ is a pair with the second component 0. So $(x_1, 0)$, $(x_2, 0)$ are two arbitrary elements of $W_1$. Add them:

$$(x_1, 0) + (x_2, 0) = (x_1 + x_2, 0).$$

$(x_1 + x_2, 0)$ is in $W_1$, because its second component is 0. Thus, $W_1$ is closed under sums.

Next, I have to show that $W_1$ is closed under scalar multiplication. Take a scalar $k \in \mathbb{R}$ and a vector $(x, 0) \in W_1$. Take their product:

$$k \cdot (x, 0) = (k x, 0).$$

The product $(k x, 0)$ is in $W_1$ because its second component is 0. Therefore, $W_1$ is closed under scalar multiplication.

Thus, $W_1$ is a subspace.

Notice that in doing the proof, I did not use specific vectors in $W_1$, such as pairs with a particular number in the first component. I'm trying to prove statements about arbitrary elements of $W_1$, so I use "variable" elements. $\square$

(b) I'll show that $W_1 \cup W_2$ is not closed under vector addition. Remember that the union of two sets consists of everything in the first set or in the second set (or in both). Thus, $(1, 0) \in W_1 \cup W_2$, because $(1, 0) \in W_1$. And $(0, 1) \in W_1 \cup W_2$, because $(0, 1) \in W_2$. But

$$(1, 0) + (0, 1) = (1, 1).$$

$(1, 1) \notin W_1$ because its second component isn't 0. And $(1, 1) \notin W_2$ because its first component isn't 0. Since $(1, 1)$ isn't in either $W_1$ or $W_2$, it's not in their union.

Pictorially, it's easy to see: $(1, 1)$ doesn't lie in either the x-axis ($W_1$) or the y-axis ($W_2$):

Thus, $W_1 \cup W_2$ is not a subspace.

You can check, however, that $W_1 \cup W_2$ is closed under scalar multiplication: Multiplying a vector on the x-axis by a number gives another vector on the x-axis, and multiplying a vector on the y-axis by a number gives another vector on the y-axis. $\square$

The last example shows that the union of subspaces is not in general a subspace. However, the intersection of subspaces is a subspace, as we'll see later.
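The independence of the two axioms shows up clearly in a finite analogue of the last example. This Python sketch replaces $\mathbb{R}$ with $\mathbb{Z}_5$ (an assumption made only so that every case can be enumerated) and checks each axiom separately for the two axes and for their union.

```python
p = 5  # a finite stand-in for R, assumed for illustration

x_axis = {(x, 0) for x in range(p)}
y_axis = {(0, y) for y in range(p)}
union = x_axis | y_axis

def closed_under_addition(W):
    """True if u + v (mod p) lies in W for all u, v in W."""
    return all(((u[0] + v[0]) % p, (u[1] + v[1]) % p) in W for u in W for v in W)

def closed_under_scaling(W):
    """True if k * u (mod p) lies in W for all scalars k and all u in W."""
    return all(((k * u[0]) % p, (k * u[1]) % p) in W for k in range(p) for u in W)

for name, W in [("x-axis", x_axis), ("y-axis", y_axis), ("union", union)]:
    print(name, closed_under_addition(W), closed_under_scaling(W))
# x-axis True True
# y-axis True True
# union False True
```

The union passes the scalar multiplication check but fails the addition check, matching the argument given for $\mathbb{R}^2$.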

Example. Prove or disprove: The following subset of $\mathbb{R}^3$ is a subspace of $\mathbb{R}^3$:

$$W = \{(x, y, 1) \mid x, y \in \mathbb{R}\}.$$

If you're trying to decide whether a set is a subspace, it's always good to check whether it contains the zero vector before you start checking the axioms. In this case, the set consists of 3-dimensional vectors whose third components are equal to 1. Obviously, the zero vector $(0, 0, 0)$ doesn't satisfy this condition.

Since W doesn't contain the zero vector, it's not a subspace of $\mathbb{R}^3$. $\square$

Example. Consider the following subset of the vector space $\mathbb{R}^2$:

$$W = \{(x, \sin x) \mid x \in \mathbb{R}\}.$$

Check each axiom for a subspace (i.e. closure under addition and closure under scalar multiplication). If the axiom holds, prove it; if the axiom doesn't hold, give a specific counterexample.

Notice that this problem is open-ended, in that you aren't told at the start whether a given axiom holds or not. So you have to decide whether you're going to try to prove that the axiom holds, or whether you're going to try to find a counterexample. In these kinds of situations, look at the statement of the problem --- in this case, the definition of W. See if your mathematical experience causes you to lean one way or another --- if so, try that approach first.

If you can't make up your mind, pick either "prove" or "disprove" and get started! Usually, if you pick the wrong approach you'll know it pretty quickly --- in fact, getting stuck taking the wrong approach may give you an idea of how to make the right approach work.

Suppose I start by trying to prove that the set is closed under sums.

I take two vectors in W --- say $(x_1, \sin x_1)$ and $(x_2, \sin x_2)$. I add them:

$$(x_1, \sin x_1) + (x_2, \sin x_2) = (x_1 + x_2, \sin x_1 + \sin x_2).$$

The last vector isn't in the right form --- it would be if $\sin x_1 + \sin x_2$ were equal to $\sin(x_1 + x_2)$. Based on your knowledge of trigonometry, you should know that doesn't sound right. You might reason that if a simple identity like "$\sin(x_1 + x_2) = \sin x_1 + \sin x_2$" were true, you probably would have learned about it!

I now suspect that the sum axiom doesn't hold. I need a specific counterexample --- that is, two vectors in W whose sum is not in W.

To choose things for a counterexample, you should try to choose things which are not too "special" or your "counterexample" might accidentally satisfy the axiom, which is not what you want. At the same time, you should avoid things which are too "ugly", because it makes the counterexample less convincing if a computer is needed (for instance) to compute the numbers. You may need a few tries to find a good counterexample. Remember that the things in your counterexample should involve specific numbers, not "variables".

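Returning to our problem, I need two vectors in W whose sum isn't in W. I'll use $\left(\frac{\pi}{2}, 1\right)$ and $(\pi, 0)$. Note that

$$\sin \frac{\pi}{2} = 1 \quad\text{and}\quad \sin \pi = 0,$$

so both vectors are in W. On the other hand,

$$\left(\frac{\pi}{2}, 1\right) + (\pi, 0) = \left(\frac{3\pi}{2}, 1\right).$$

But $\left(\frac{3\pi}{2}, 1\right) \notin W$ because $\sin \frac{3\pi}{2} = -1 \ne 1$.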

How did I choose the two vectors? I decided to use a multiple of $\pi$ in the first component, because the sine of a multiple of $\pi$ (in the second component) comes out to "nice numbers". If I had used (say) $x = 1$, I'd have needed a computer to tell me that $\sin 1 \approx 0.84147$, and a counterexample would have looked kind of ugly. In addition, an approximation of this kind really isn't a proof.

How did I know to use $\frac{\pi}{2}$ and $\pi$? Actually, I didn't know till I did the work that these numbers would produce a counterexample --- you often can't know without trying whether the numbers you've chosen will work.

Thus, W is not closed under vector addition, and so it is not a subspace. If that had been the question, I'd be done, but I was asked to check each axiom. It is possible for one of the axioms to hold even if the other one does not. So I'll consider scalar multiplication.

I'll give a counterexample to show that the scalar multiplication axiom doesn't hold. I need a vector in W; I'll use $\left(\frac{\pi}{2}, 1\right)$ again. I also need a real number; I'll use 2. Now

$$2 \cdot \left(\frac{\pi}{2}, 1\right) = (\pi, 2).$$

But $(\pi, 2) \notin W$, because $\sin \pi = 0 \ne 2$.

Thus, neither the addition axiom nor the scalar multiplication axiom holds. Obviously, W is not a subspace. $\square$
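A floating-point check can confirm the arithmetic in the counterexamples, although, as noted above, an approximation of this kind is not a proof. This Python sketch uses the vectors $\left(\frac{\pi}{2}, 1\right)$ and $(\pi, 0)$ and the scalar 2; the tolerance `tol` is an arbitrary choice, needed because `math.sin(math.pi)` is only approximately 0 in floating point.

```python
import math

def in_W(v, tol=1e-12):
    """Membership test for W = {(x, sin x)}: the second component equals sin of the first.
    Floating point needs a tolerance, so this is a sanity check, not a proof."""
    x, y = v
    return abs(math.sin(x) - y) < tol

u = (math.pi / 2, 1.0)
v = (math.pi, 0.0)
total = (u[0] + v[0], u[1] + v[1])  # (3*pi/2, 1)
scaled = (2 * u[0], 2 * u[1])       # (pi, 2)

print(in_W(u), in_W(v))  # True True
print(in_W(total))       # False: sin(3*pi/2) = -1, not 1
print(in_W(scaled))      # False: sin(pi) = 0, not 2
```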

Example. Let F be a field, and let $A, B \in F$. Consider the following subset of $F^2$:

$$W = \{(x, y) \in F^2 \mid A x = B y\}.$$

Show that W is a subspace of $F^2$.

This set is defined by a property rather than by appearance, and axiom checks for this kind of set often give people trouble. The problem is that elements of W don't "look like" anything --- if you need to refer to a couple of arbitrary elements of W, you might call them u and v (for instance). There's nothing about the symbols u and v which tells you that they belong to W. But u and v are like people who belong to a club: You can't tell from their appearance that they're club members, but you could tell from the property that they both have membership cards.

When you write a proof, you start from assumptions and reason to the conclusion. You should not start with the conclusion and "work backwards". Sometimes reasoning that works in one direction might not work in the opposite direction.

For example, suppose x is a real number. If $x = 2$, then $x^2 = 4$. But if $x^2 = 4$, it doesn't follow that $x = 2$ --- maybe $x = -2$.

Reasoning in mathematics is deductive, not confirmational.

In this problem, to check closure under addition, you assume that u and v are in W, and show that $u + v$ is in W. You do not start by assuming that $u + v$ is in W.

Nevertheless, in deciding how to do a proof, it's okay to work backwards "on scratch paper" to figure out what to do. Here's a way of sketching out a proof that allows you to work backward while ensuring that the steps work forward as well.

Start by putting down the assumptions $u \in W$ and $v \in W$ at the top and the conclusion $u + v \in W$ at the bottom. Leave space in between to work.

Next, write $u = (u_1, u_2)$ and $v = (v_1, v_2)$, so that $u + v = (u_1 + v_1, u_2 + v_2)$, and use the definition of W to translate each of the statements: $u \in W$ means $A u_1 = B u_2$, so put "$A u_1 = B u_2$" below "$u \in W$". Likewise, $v \in W$ means $A v_1 = B v_2$, so put "$A v_1 = B v_2$" below "$v \in W$". On the other hand, $u + v \in W$ means $A(u_1 + v_1) = B(u_2 + v_2)$, but since "$u + v \in W$" is what we want to conclude, put "$A(u_1 + v_1) = B(u_2 + v_2)$" above "$u + v \in W$".

At this point, you can either work downwards from $A u_1 = B u_2$ and $A v_1 = B v_2$, or upwards from $A(u_1 + v_1) = B(u_2 + v_2)$. But if you work upwards from $A(u_1 + v_1) = B(u_2 + v_2)$, you must ensure that the algebra you do is reversible --- that it works downwards as well.

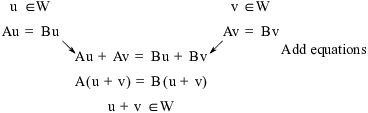

I'll work downwards from $A u_1 = B u_2$ and $A v_1 = B v_2$. What algebra could I do which would get me closer to $A(u_1 + v_1) = B(u_2 + v_2)$? Since the target involves addition, it's natural to add the equations:

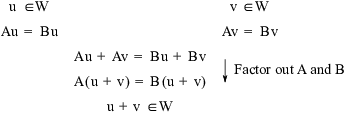

At this point, I'm almost done. To finish, I have to explain how to go from $A u_1 + A v_1 = B u_2 + B v_2$ to $A(u_1 + v_1) = B(u_2 + v_2)$. You can see that I just need to factor A out of the left side and factor B out of the right side:

The proof is complete! If you were just writing the proof for yourself, the sketch above might be good enough. If you were writing this proof more formally --- say for an assignment or a paper --- you might add some explanatory words.

For instance, you might say: "I need to show that W is closed under addition. Let $u = (u_1, u_2) \in W$ and let $v = (v_1, v_2) \in W$. By definition of W, this means that $A u_1 = B u_2$ and $A v_1 = B v_2$. Adding the equations, I get $A u_1 + A v_1 = B u_2 + B v_2$. Factoring A out of the left side and B out of the right side, I get $A(u_1 + v_1) = B(u_2 + v_2)$. By definition of W, this means that $u + v = (u_1 + v_1, u_2 + v_2) \in W$. Hence, W is closed under addition."

By the way, be careful not to write things like "$A(u_1 + v_1) = B(u_2 + v_2) \in W$" --- do you see why this doesn't make sense? "$A(u_1 + v_1) = B(u_2 + v_2)$" is an equation that $u + v$ satisfies. You can't write "$A(u_1 + v_1) = B(u_2 + v_2) \in W$", since an equation can't be an element of W. Elements of W are vectors. You say "$u + v \in W$", as in the last line.

Here's a sketch of a similar "fill-in" proof for closure under scalar multiplication:

Try working through the proof yourself. $\square$
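The argument above works over any field. As a brute-force sanity check (not a substitute for the proof), this Python sketch assumes $F = \mathbb{Z}_5$, builds the set of pairs $(x, y)$ with $A x = B y$ for each of the 25 choices of A and B, and checks both axioms directly. The helper names `W` and `is_subspace` are hypothetical.

```python
from itertools import product

p = 5  # assume F = Z_5 so that every case can be enumerated

def W(A, B):
    """The set {(x, y) in Z_p^2 : A x = B y} for fixed scalars A, B in Z_p."""
    return {(x, y) for x, y in product(range(p), repeat=2) if (A * x - B * y) % p == 0}

def is_subspace(S):
    """Check nonemptiness and both subspace axioms by brute force."""
    add_ok = all(((u[0] + v[0]) % p, (u[1] + v[1]) % p) in S for u in S for v in S)
    scale_ok = all(((k * u[0]) % p, (k * u[1]) % p) in S for k in range(p) for u in S)
    return bool(S) and add_ok and scale_ok

results = {(A, B): is_subspace(W(A, B)) for A, B in product(range(p), repeat=2)}
print(all(results.values()))  # True: every choice of A and B gives a subspace
```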

Example. Consider the following subset of the polynomial ring $\mathbb{R}[x]$:

$$V = \{f(x) \in \mathbb{R}[x] \mid f(2) = 1\}.$$

Show that V is not a subspace of $\mathbb{R}[x]$.

The zero polynomial (i.e. the zero vector) is not in V, because the zero polynomial does not give 1 when you plug in $x = 2$. Hence, V is not a subspace.

Alternatively, the constant polynomial $f(x) = 1$ is an element of V --- it gives 1 when you plug in 2 --- but $2 \cdot f(x) = 2$ is not. So V is not closed under scalar multiplication.

See if you can give an example which shows that V is not closed under vector addition. $\square$
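Membership in V is just an evaluation at 2, which is easy to compute. A small Python sketch, assuming polynomials are stored as coefficient lists $[a_0, a_1, \ldots, a_n]$ (integer coefficients are used only for simplicity), confirms the two observations above; it deliberately leaves the addition exercise to you.

```python
def evaluate(coeffs, x):
    """Evaluate the polynomial a0 + a1*x + ... + an*x^n at x using Horner's rule."""
    result = 0
    for a in reversed(coeffs):
        result = result * x + a
    return result

def in_V(coeffs):
    """Membership test for V = {f : f(2) = 1}."""
    return evaluate(coeffs, 2) == 1

zero = [0]                   # the zero polynomial
one = [1]                    # the constant polynomial 1
two = [2 * a for a in one]   # the scalar multiple 2 * 1

print(in_V(zero))  # False: the zero vector is missing
print(in_V(one))   # True
print(in_V(two))   # False: not closed under scalar multiplication
```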

Proposition. If A is an $m \times n$ matrix over the field F, consider the set V of n-dimensional vectors x which satisfy

$$A x = 0.$$

Then V is a subspace of $F^n$.

Proof. Suppose $x, y \in V$. Then $A x = 0$ and $A y = 0$, so

$$A(x + y) = A x + A y = 0 + 0 = 0.$$

Hence, $x + y \in V$.

Suppose $x \in V$ and $k \in F$. Then $A x = 0$, so

$$A(k x) = k (A x) = k \cdot 0 = 0.$$

Therefore, $k x \in V$.

Thus, V is a subspace. $\square$

The subspace defined in the last proposition is called the null space of A.

Definition. Let A be an $m \times n$ matrix over the field F. The null space of A is

$$\operatorname{null}(A) = \{x \in F^n \mid A x = 0\}.$$

As a specific example of the last proposition, consider the following system of linear equations over $\mathbb{R}$:

$$\begin{bmatrix} 1 & 1 & 0 & 1 \\ 0 & 0 & 1 & 3 \end{bmatrix} \begin{bmatrix} w \\ x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}.$$

You can show by row reduction that the general solution can be written as

$$w = -s - t, \quad x = s, \quad y = -3t, \quad z = t.$$

Thus,

$$\begin{bmatrix} w \\ x \\ y \\ z \end{bmatrix} = \begin{bmatrix} -s - t \\ s \\ -3t \\ t \end{bmatrix}.$$

The Proposition says that the set of all vectors of this form constitutes a subspace of $\mathbb{R}^4$.

For example, if you add two vectors of this form, you get another vector of this form:

$$\begin{bmatrix} -s - t \\ s \\ -3t \\ t \end{bmatrix} + \begin{bmatrix} -s' - t' \\ s' \\ -3t' \\ t' \end{bmatrix} = \begin{bmatrix} -(s + s') - (t + t') \\ s + s' \\ -3(t + t') \\ t + t' \end{bmatrix}.$$

You can check directly that the set is also closed under scalar multiplication.
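The specific example can also be checked by machine. This plain-Python sketch multiplies the coefficient matrix by the general solution for a grid of integer parameters (only a sanity check, since s and t actually range over all of $\mathbb{R}$) and confirms that sums and scalar multiples correspond to adding and scaling the parameters. The helper names `mat_vec` and `general_solution` are hypothetical.

```python
A = [[1, 1, 0, 1],
     [0, 0, 1, 3]]

def mat_vec(M, v):
    """Multiply a matrix (a list of rows) by a vector (a list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def general_solution(s, t):
    """The vector (w, x, y, z) = (-s - t, s, -3t, t)."""
    return [-s - t, s, -3 * t, t]

# Every vector of this form solves A v = 0 (checked on a grid of integer parameters).
grid = range(-3, 4)
print(all(mat_vec(A, general_solution(s, t)) == [0, 0] for s in grid for t in grid))  # True

# A sum of two such vectors comes from the parameters (s + s', t + t'),
# and a scalar multiple k * v comes from the parameters (k s, k t).
u, v = general_solution(1, 2), general_solution(-4, 5)
print([a + b for a, b in zip(u, v)] == general_solution(1 - 4, 2 + 5))  # True
print([3 * a for a in u] == general_solution(3 * 1, 3 * 2))             # True
```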

In terms of systems of linear equations, a vector $x = (x_1, x_2, \ldots, x_n)$ is in the null space of the matrix $A = [a_{ij}]$ if it's a solution to the system

$$\begin{aligned}
a_{11} x_1 + a_{12} x_2 + \cdots + a_{1n} x_n &= 0 \\
a_{21} x_1 + a_{22} x_2 + \cdots + a_{2n} x_n &= 0 \\
&\;\;\vdots \\
a_{m1} x_1 + a_{m2} x_2 + \cdots + a_{mn} x_n &= 0
\end{aligned}$$

In this situation, we say that the vectors $(x_1, x_2, \ldots, x_n)$ make up the solution space of the system. Since the solution space of the system is another name for the null space of A, the solution space is a subspace of $F^n$.

We'll study the null space of a matrix in more detail later.

Example. $C[a, b]$ denotes the real vector space of continuous functions $[a, b] \to \mathbb{R}$. Consider the following subset of $C[a, b]$:

$$S = \left\{f \in C[a, b] \;\middle|\; \int_a^b f(x)\,dx = 0\right\}.$$

Prove that S is a subspace of $C[a, b]$.

Let $f, g \in S$. Then

$$\int_a^b f(x)\,dx = 0 \quad\text{and}\quad \int_a^b g(x)\,dx = 0.$$

Adding the two equations and using the fact that "the integral of a sum is the sum of the integrals", we have

$$\int_a^b \bigl(f(x) + g(x)\bigr)\,dx = \int_a^b f(x)\,dx + \int_a^b g(x)\,dx = 0 + 0 = 0.$$

This proves that $f + g \in S$, so S is closed under addition.

Let $f \in S$, so

$$\int_a^b f(x)\,dx = 0.$$

Let $k \in \mathbb{R}$. Using the fact that constants can be moved into integrals, we have

$$\int_a^b k f(x)\,dx = k \int_a^b f(x)\,dx = k \cdot 0 = 0.$$

This proves that $k f \in S$, so S is closed under scalar multiplication. Thus, S is a subspace of $C[a, b]$. $\square$
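For a numerical illustration, assume S is the set of continuous functions whose integral over the interval is 0, and take the interval to be $[0, 1]$ (an assumed choice). The Python sketch below approximates integrals with composite Simpson's rule, a standard quadrature method standing in for exact integration. The functions $x - \frac{1}{2}$ and $\sin 2\pi x$ both integrate to 0 on $[0, 1]$, and the sketch checks that their sum and a scalar multiple do too, up to rounding.

```python
import math

def simpson(f, a, b, n=1000):
    """Approximate the integral of f over [a, b] with composite Simpson's rule.

    n is the number of subintervals and must be even."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        weight = 4 if i % 2 else 2  # odd interior nodes get weight 4, even ones weight 2
        total += weight * f(a + i * h)
    return total * h / 3

def f(x):
    return x - 0.5  # integral over [0, 1] is 0

def g(x):
    return math.sin(2 * math.pi * x)  # integral over [0, 1] is 0

a, b, k = 0.0, 1.0, 7.0  # interval and scalar chosen for illustration
tol = 1e-9

def in_S(func):
    """Approximate membership test: the integral over [a, b] is numerically 0."""
    return abs(simpson(func, a, b)) < tol

print(in_S(f), in_S(g))             # True True
print(in_S(lambda x: f(x) + g(x)))  # True: f + g is in S
print(in_S(lambda x: k * f(x)))     # True: k f is in S
print(in_S(lambda x: x))            # False: the integral of x over [0, 1] is 1/2
```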

Intersections of subspaces.

We've seen that the union of subspaces is not necessarily a subspace. For intersections, the situation is different: The intersection of any number of subspaces is a subspace. The only significant issue with the proof is that we will deal with an arbitrary collection of sets --- possibly infinite, and possibly uncountable. Except for taking care with the notation, the proof is fairly easy.

Theorem. Let V be a vector space over a field F, and let $\{U_i\}_{i \in I}$ be a collection of subspaces of V.

The intersection $\bigcap_{i \in I} U_i$ is a subspace of V.

Proof. We have to show that $\bigcap_{i \in I} U_i$ is nonempty and is closed under vector addition and under scalar multiplication. It is nonempty because each $U_i$ is a subspace and so contains the zero vector; hence $0 \in \bigcap_{i \in I} U_i$.

Suppose $x, y \in \bigcap_{i \in I} U_i$. For x and y to be in the intersection of the $U_i$, they must be in each $U_i$ for all $i \in I$. So pick a particular $i \in I$; we have $x, y \in U_i$. Now $U_i$ is a subspace, so it's closed under vector addition. Hence, $x + y \in U_i$. Since this is true for all $i \in I$, I have $x + y \in \bigcap_{i \in I} U_i$.

Thus, $\bigcap_{i \in I} U_i$ is closed under vector addition.

Next, suppose $k \in F$ and $x \in \bigcap_{i \in I} U_i$. For x to be in the intersection of the $U_i$, it must be in each $U_i$ for all $i \in I$. So pick a particular $i \in I$; we have $x \in U_i$. Now $U_i$ is a subspace, so it's closed under scalar multiplication. Hence, $k x \in U_i$. Since this is true for all $i \in I$, I have $k x \in \bigcap_{i \in I} U_i$.

Thus, $\bigcap_{i \in I} U_i$ is closed under scalar multiplication.

Hence, $\bigcap_{i \in I} U_i$ is a subspace of V. $\square$

You can see that the proof was pretty easy, the two parts being pretty similar. The key idea is that something is in the intersection of a bunch of sets if and only if it's in each of the sets. How many sets there are in the bunch doesn't matter. If you're still feeling a little uncomfortable, try writing out the proof for the case of two subspaces: If U and V are subspaces of a vector space W over a field F, then $U \cap V$ is a subspace of W. The notation is easier for two subspaces, but the idea of the proof is the same as the idea for the proof above.
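Here is a finite illustration of the theorem in the two-subspace case. This Python sketch assumes the field $\mathbb{Z}_3$ and two planes in $\mathbb{Z}_3^3$ chosen only for illustration, $x + y + z = 0$ and $x = y$; it checks by brute force that their intersection is a subspace and that their union is not.

```python
from itertools import product

p = 3  # work over the field Z_3 (assumed for illustration)
space = set(product(range(p), repeat=3))  # all 27 vectors of Z_3^3

def add(u, v):
    """Componentwise sum mod p."""
    return tuple((a + b) % p for a, b in zip(u, v))

def scale(k, u):
    """Scalar multiple k*u mod p."""
    return tuple((k * a) % p for a in u)

def is_subspace(S):
    """Brute-force check: nonempty, closed under addition and scalar multiplication."""
    return (bool(S)
            and all(add(u, v) in S for u in S for v in S)
            and all(scale(k, u) in S for k in range(p) for u in S))

U = {v for v in space if sum(v) % p == 0}  # the plane x + y + z = 0
V = {v for v in space if v[0] == v[1]}     # the plane x = y

print(is_subspace(U), is_subspace(V))  # True True
print(is_subspace(U & V))              # True: the intersection is a subspace
print(is_subspace(U | V))              # False: e.g. (1, 2, 0) + (1, 1, 0) = (2, 0, 0)
print(sorted(U & V))                   # [(0, 0, 0), (1, 1, 1), (2, 2, 2)]
```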

Copyright 2022 by Bruce Ikenaga